By Youxi

In a database system, the query optimizer and the transaction system are two major bedrocks. These components are so important that a large number of data structures, mechanisms, and features in the overall database architecture are built around them. The query optimizer is responsible for speeding up queries and organizing the underlying data system more efficiently. The transaction system is responsible for storing data safely, stably, and persistently, while providing the logic that supports concurrent reads and writes by users.

In this blog, we explore the database transaction system and analyze atomicity in the PolarDB transaction system. Because the system is intricate, we present the topic in two parts. Part one focuses on the concept of atomicity itself, while part two focuses on the implementation of atomicity in PolarDB.

Before we start, consider a few important questions. You may have asked them when you first started learning about databases, and they may have been answered simply with "write-ahead log" or "crash recovery mechanism". We want to dive deeper into the implementation and internal principles of these mechanisms.

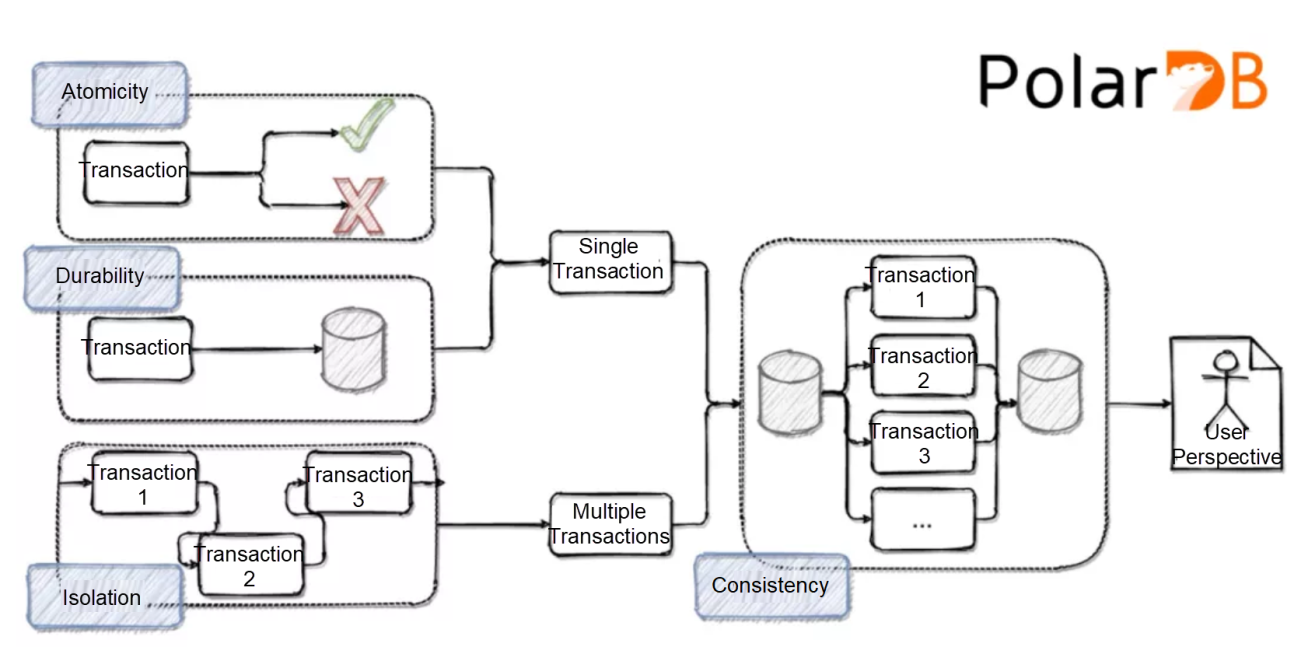

Ever since the famous ACID (atomicity, consistency, isolation, durability) features were put forward (they were originally written into the SQL92 standard), the concept of ACID has been frequently discussed. These four features roughly summarize people's core demands on databases. Atomicity is the first feature discussed in this article. We first look at where atomicity sits within transaction ACID.

Here is my understanding of the relationship between atomicity, consistency, isolation, durability of a database. I think ACID features of a database can be defined from two perspectives. AID (atomicity, durability, isolation) features are defined from the perspective of the transaction itself, while C (consistency) is defined from the perspective of the user.

The following is my understanding of each feature:

This article mainly focuses on atomicity, and the discussion of crash recovery may touch on durability. Isolation and consistency are not discussed in this article. In the visibility section, we assume that the database provides complete isolation, that is, the serializable isolation level.

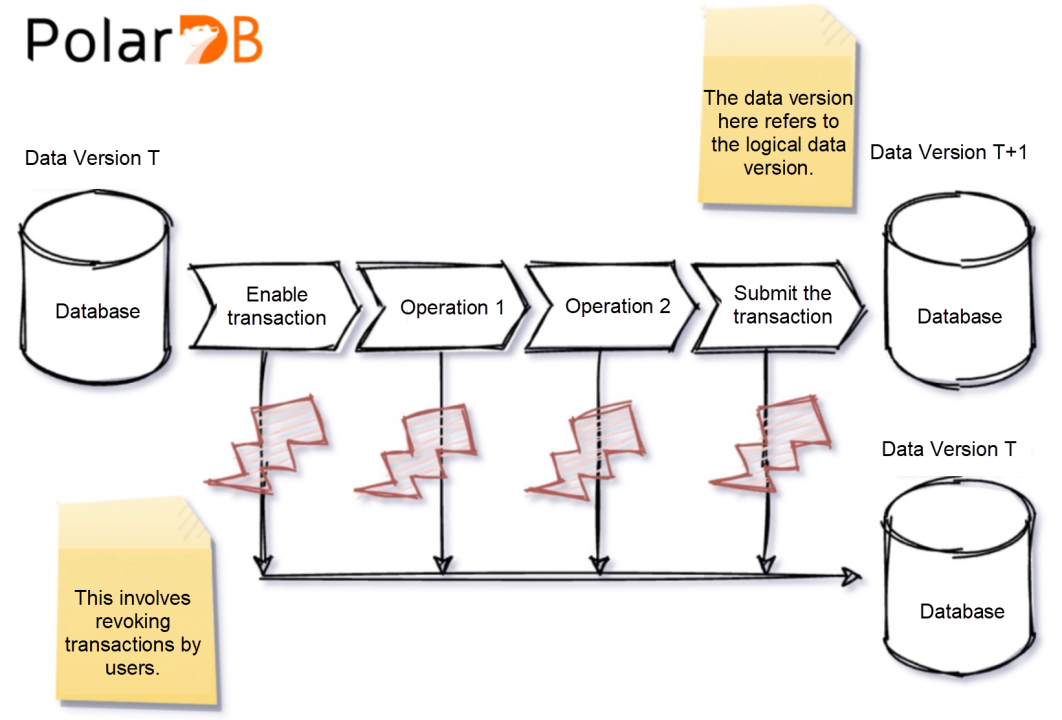

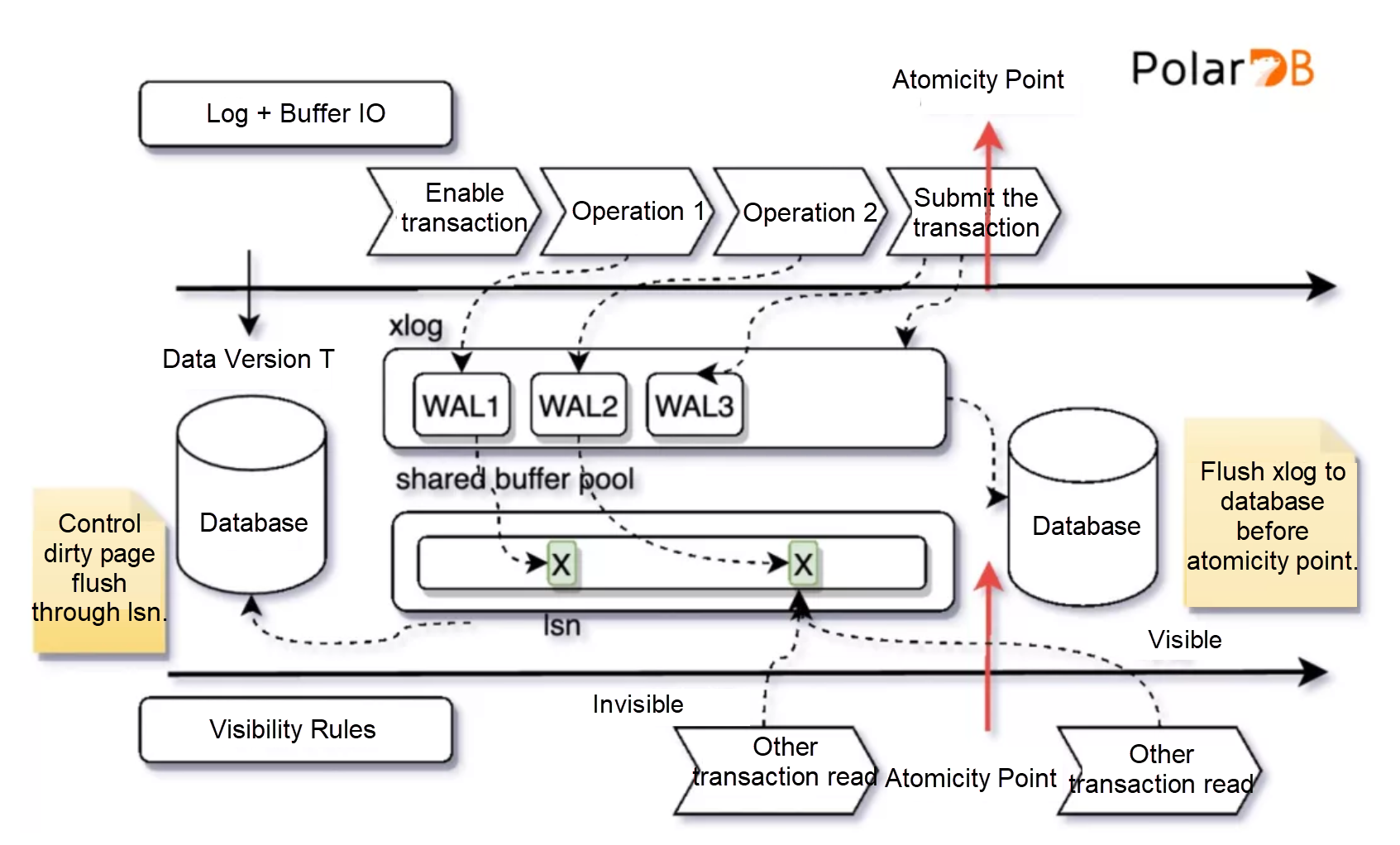

We have talked a lot about the database's transaction features, and let's move on to our topic: atomicity. We still take the preceding example to explain atomicity. Suppose the current state of the database is T, and now we want to upgrade the data state to T + 1 through transaction A. Let's take a look at the atomicity in this process.

If we want to ensure that this transaction is atomic, we can define three requirements. Only when the following three requirements are met can we say that the transaction is atomic:

Note that we have not defined this time point, and we are not even sure whether the time points in requirements 2 and 3 are the same. What we can be sure of is that this time point exists. Otherwise, we cannot say the transaction is atomic. Atomicity requires that there be a definite time point for submission and rollback. In addition, from the description above, we can infer that the time point in requirement 2 can be defined as an atomicity point. The transaction's changes are not visible to us before the atomicity point and are visible only after it. Thus, for other transactions in the database, this atomicity point is the time point at which the transaction is submitted. The point in requirement 3 can be regarded as a durability point because it matches what durability demands of crash recovery. That is, as far as durability is concerned, the transaction has been submitted once point 3 has passed.

First of all, let's talk about atomicity from two simple schemes. This is to explain why the data structures we will introduce in each step are essential to realizing atomicity.

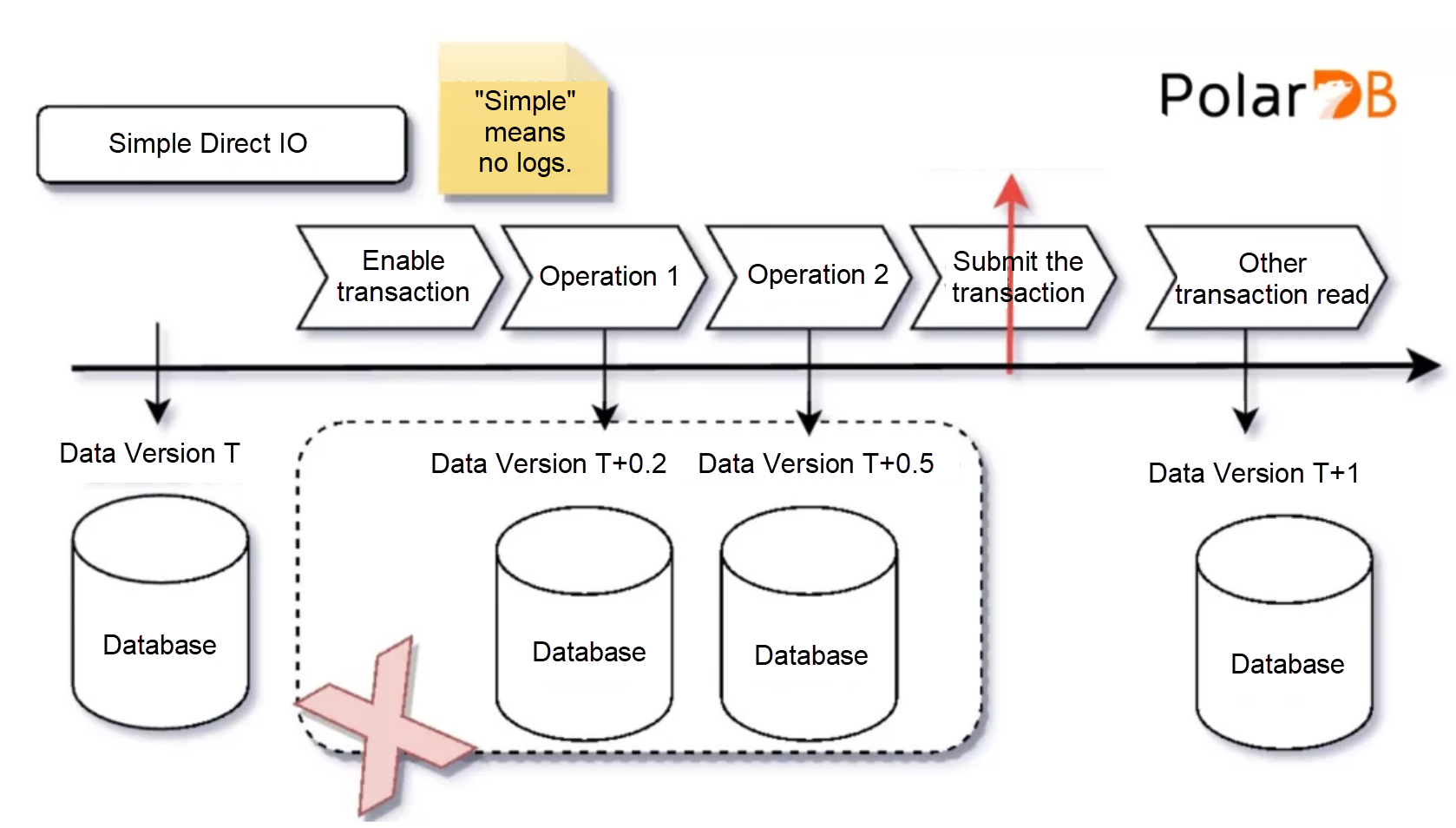

Suppose there is a database in which each user operation writes data directly to disk. We call this method Simple Direct IO, where "simple" means that we do not record any data logs, only the data itself. Suppose that the initial data version is T. If the database crashes after we have inserted only some of the data, a T + 0.5 version of the data page is left on the disk, and we have no way to roll back or to continue the remaining operations. Such a failure undoubtedly violates atomicity because the current state is neither submitted nor rolled back but an intermediate state. So, this is a failed attempt.
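A toy model can make this failure concrete. The sketch below is only an illustration, not PolarDB code: `CrashError` and `run_direct_io` are hypothetical names, and a Python dict stands in for the pages on disk.

```python
class CrashError(Exception):
    """Simulates the machine going down in the middle of a transaction."""


def run_direct_io(disk, updates, crash_after=None):
    """Write each update straight to `disk` (a dict standing in for disk pages).

    If `crash_after` is given, the simulated crash happens once that many
    writes have already reached the disk.
    """
    for written, (page, value) in enumerate(updates):
        if crash_after is not None and written == crash_after:
            raise CrashError("crashed mid-transaction")
        disk[page] = value  # no log, no buffer: the write is durable at once


# Version T: both pages hold the old value.
disk = {"page_a": "T", "page_b": "T"}
try:
    run_direct_io(disk, [("page_a", "T+1"), ("page_b", "T+1")], crash_after=1)
except CrashError:
    pass

print(disk)  # page_a already holds T+1 while page_b still holds T
```

With nothing but the data itself on disk, there is no record of which writes belonged to the unfinished transaction, so the mixed state can be neither undone nor completed.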

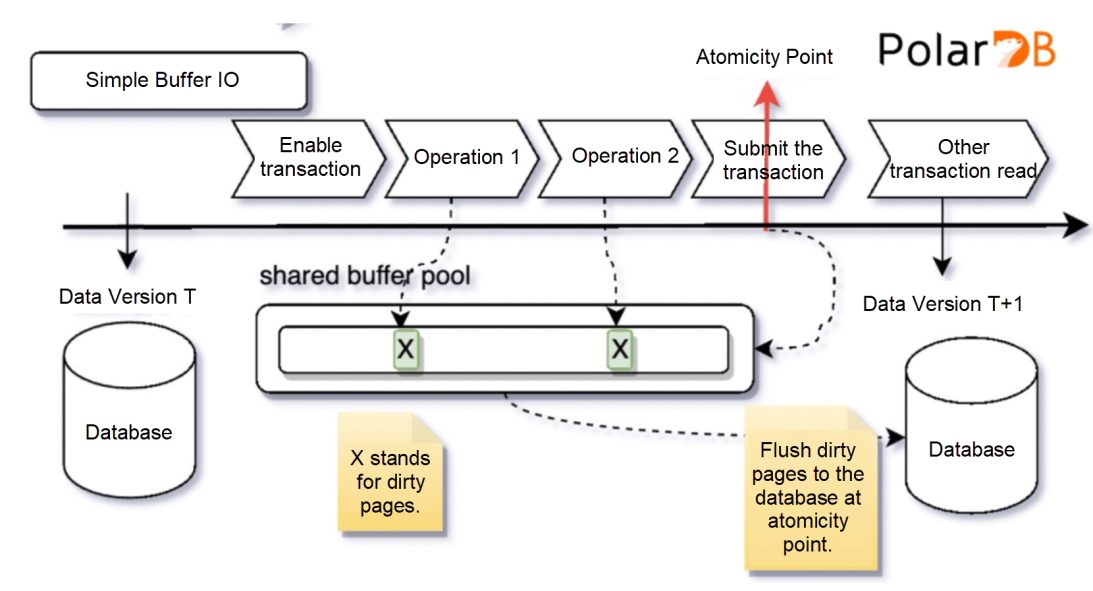

We have another scheme called Simple Buffer IO. Again, we have no logs, but we add a new data structure called the "shared buffer pool". Each time we write a data page, we do not write the data directly to disk but to the shared buffer pool. This has obvious advantages. First, read and write efficiency is greatly improved. We do not have to wait for the data page to be actually written to disk before doing other things; the write can proceed asynchronously. Second, if the transaction rolls back or the database crashes before the transaction is submitted, we only need to discard the data in the shared buffer pool. Only when the transaction is successfully submitted is the data actually written to disk. It seems, then, that we have met the requirements in terms of visibility and crash recovery.

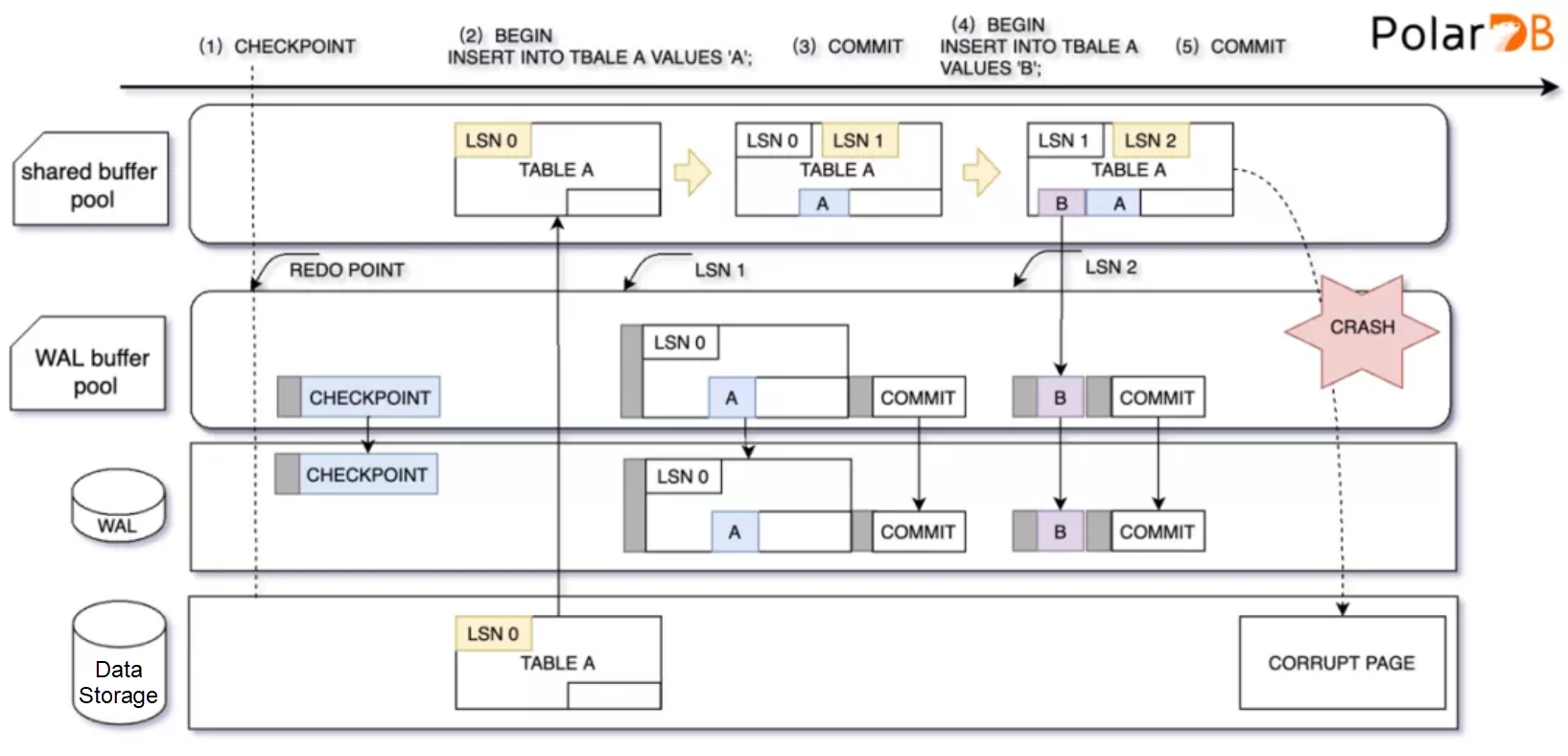

However, there is still a tricky problem in the above scheme: data persistence is not as simple as we think. For example, if there are 10 dirty pages in the shared buffer pool, we can use storage technology to ensure that the disk flush of a single page is atomic. But the database may crash at any time while these 10 pages are being flushed. No matter when we decide to write the data, once the machine crashes during this process, a T + 0.5 version of the data may be left on the disk. And even after restarting, we still cannot redo or roll back.
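The 10-page example can be sketched in a few lines. This is a simplified model under stated assumptions: `flush_dirty_pages` is a hypothetical helper, each dict assignment stands for one atomic single-page write, and the crash is simulated by stopping the loop early.

```python
def flush_dirty_pages(buffer_pool, disk, crash_after=None):
    """Copy dirty pages to disk one at a time; each single-page write is atomic.

    Returns the number of pages that reached the disk before the simulated crash.
    """
    flushed = 0
    for page_no, content in sorted(buffer_pool.items()):
        if crash_after is not None and flushed == crash_after:
            break  # simulated crash: the remaining pages never reach the disk
        disk[page_no] = content
        flushed += 1
    return flushed


disk = {n: "T" for n in range(10)}           # version T on disk
buffer_pool = {n: "T+1" for n in range(10)}  # 10 dirty pages waiting to be flushed

flushed = flush_dirty_pages(buffer_pool, disk, crash_after=4)
versions_on_disk = sorted(set(disk.values()))
print(flushed, versions_on_disk)  # 4 ['T', 'T+1']
```

Every individual page is intact, yet the disk as a whole holds a mixture of T and T + 1, which is exactly the T + 0.5 state described above.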

The above two examples seem to show that the database cannot ensure data consistency without relying on other structures (another popular scheme is Shadow Paging of SQLite database, which is not discussed here). Therefore, if we want to solve these problems, we need to introduce another important data structure: data log.

Based on Buffer IO, we introduced data logs to tackle data inconsistency.

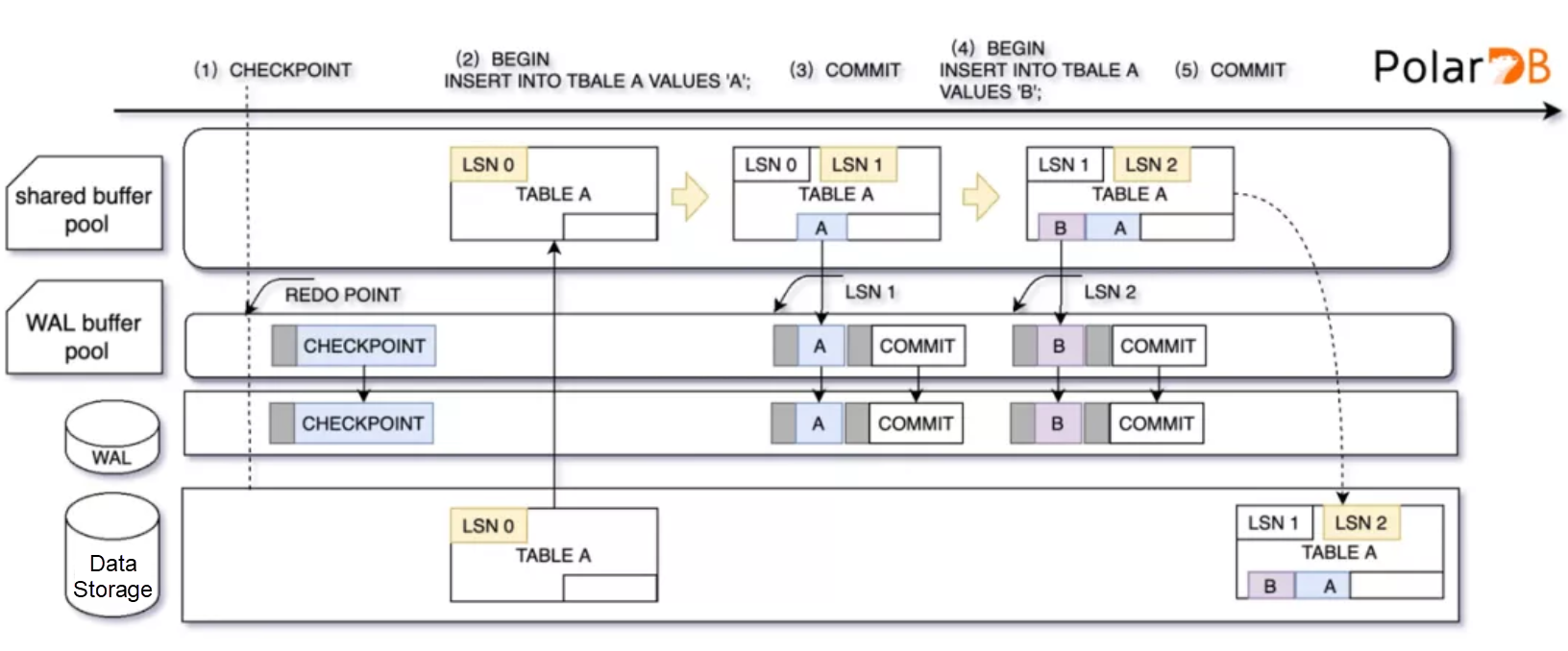

The data cache works the same way as in the previous scheme, except that we additionally write a record to the xlog buffer before writing the data. The records in the xlog buffer are ordered, and their serial numbers are called LSNs (lsn). We also record a log LSN on each data page: every data page stores the serial number of the latest log record that updated it. This is what keeps logs and data consistent.
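The relationship between log records and page LSNs can be sketched as follows. This is a minimal illustration, not PolarDB's actual structures: `Page`, `XlogBuffer`, and `update` are hypothetical names, and LSNs are simple counters here, whereas a real engine typically uses positions in the log.

```python
from dataclasses import dataclass, field


@dataclass
class Page:
    data: dict = field(default_factory=dict)
    lsn: int = 0  # LSN of the latest log record that modified this page


@dataclass
class XlogBuffer:
    records: list = field(default_factory=list)
    next_lsn: int = 1

    def append(self, page_no, key, value):
        """Append a log record and return the LSN assigned to it."""
        lsn = self.next_lsn
        self.records.append((lsn, page_no, key, value))
        self.next_lsn += 1
        return lsn


def update(xlog, pages, page_no, key, value):
    """Log first, then change the cached page and stamp it with the record's LSN."""
    lsn = xlog.append(page_no, key, value)
    page = pages[page_no]
    page.data[key] = value
    page.lsn = lsn


xlog = XlogBuffer()
pages = {0: Page(), 1: Page()}
update(xlog, pages, 0, "a", 1)
update(xlog, pages, 1, "b", 2)
update(xlog, pages, 0, "a", 3)
print(pages[0].lsn, pages[1].lsn)  # 3 2
```

Because each page carries the LSN of its last change, the system can always tell which log records a given page version already reflects.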

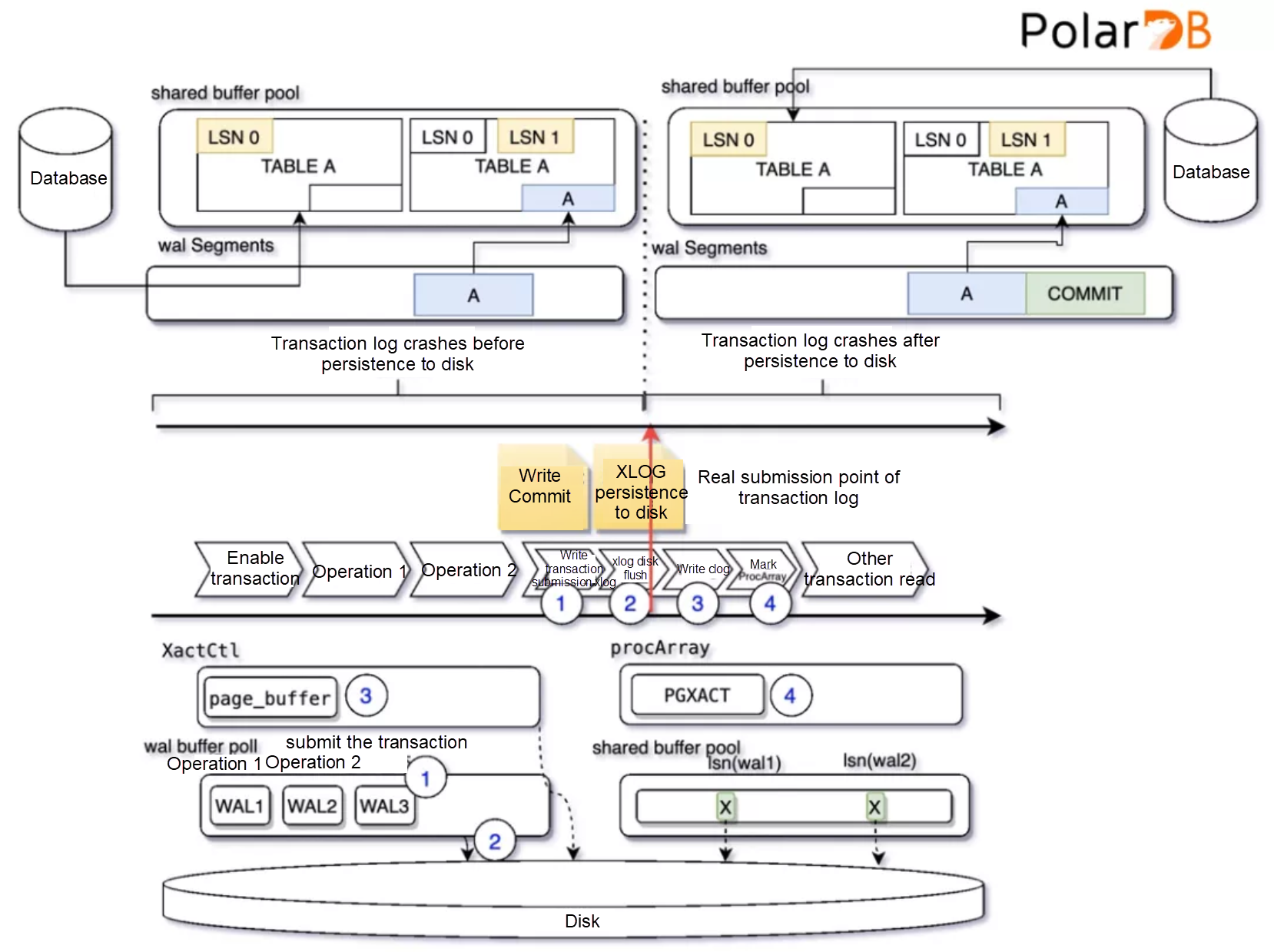

Assume the log we introduce matches the data version exactly, and the log is persisted before the data. Then we can recover the data from this consistent log whenever the database crashes. This solves the crash problem described earlier: regardless of whether the crash happens before or after transaction submission, we can recover the correct version of the data through log playback. Thus, the atomicity of crash recovery is realized, and we can implement visibility through multi-version snapshots. However, it is not easy to keep the data log consistent with the data. Let's take a look at how to ensure this and how to recover the data after a crash.

The purpose of the Write Ahead Log (WAL) is to ensure data recoverability. To keep the WAL and the data consistent, before a page in the data cache is persisted to disk, the WAL records corresponding to that page must be persisted to disk first. This is the essence of controlling dirty page flushing.

After that, if the database runs normally, the bgwriter/checkpoint processes asynchronously flush the data pages to disk. If the database crashes, the data can be recovered into the shared buffer pool through log playback and then written to disk asynchronously. This works because the data logs and transaction submission logs of A and B have already been flushed to disk.
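The write-ahead rule can be expressed as a check made just before a dirty page is written. The sketch below is an assumption-laden toy: `WalStore` and `flush_page` are hypothetical names, and "disk" is modeled with in-memory lists and dicts.

```python
class WalStore:
    """Toy WAL: an in-memory buffer plus a 'durable' portion on disk."""

    def __init__(self):
        self.buffer = []       # (lsn, payload) records not yet on disk
        self.on_disk = []      # records already persisted
        self.flushed_lsn = 0   # highest LSN known to be durable

    def append(self, payload):
        lsn = self.flushed_lsn + len(self.buffer) + 1
        self.buffer.append((lsn, payload))
        return lsn

    def flush_up_to(self, lsn):
        """Persist every buffered record whose LSN is <= lsn."""
        keep = []
        for rec in self.buffer:
            if rec[0] <= lsn:
                self.on_disk.append(rec)
                self.flushed_lsn = rec[0]
            else:
                keep.append(rec)
        self.buffer = keep


def flush_page(wal, disk, page_no, page_lsn, content):
    """Write a dirty page only after its WAL is durable (the write-ahead rule)."""
    if page_lsn > wal.flushed_lsn:
        wal.flush_up_to(page_lsn)
    disk[page_no] = (page_lsn, content)


wal = WalStore()
disk = {}
lsn1 = wal.append("set page 7 -> x")
lsn2 = wal.append("set page 8 -> y")
flush_page(wal, disk, 7, lsn1, "x")
print(wal.flushed_lsn, len(wal.buffer))  # 1 1
```

Flushing page 7 forces only the log up to that page's LSN to disk; the record for page 8 can stay in the buffer until page 8 itself is flushed or its transaction is submitted.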

The recovery of WAL seems to be perfect, but unfortunately, the scheme just described still has a flaw. Suppose the database crashes while a bgwriter process is writing data asynchronously. Some dirty pages may have been only partly written, so there may be bad pages on the disk. (A PolarDB data page is 8 KB; in extreme cases, a 4 KB write to disk can leave a bad page.) WAL cannot replay changes onto a bad page, so another mechanism is needed to ensure that the database can find the original data in such extreme cases. This important mechanism is fullpage.

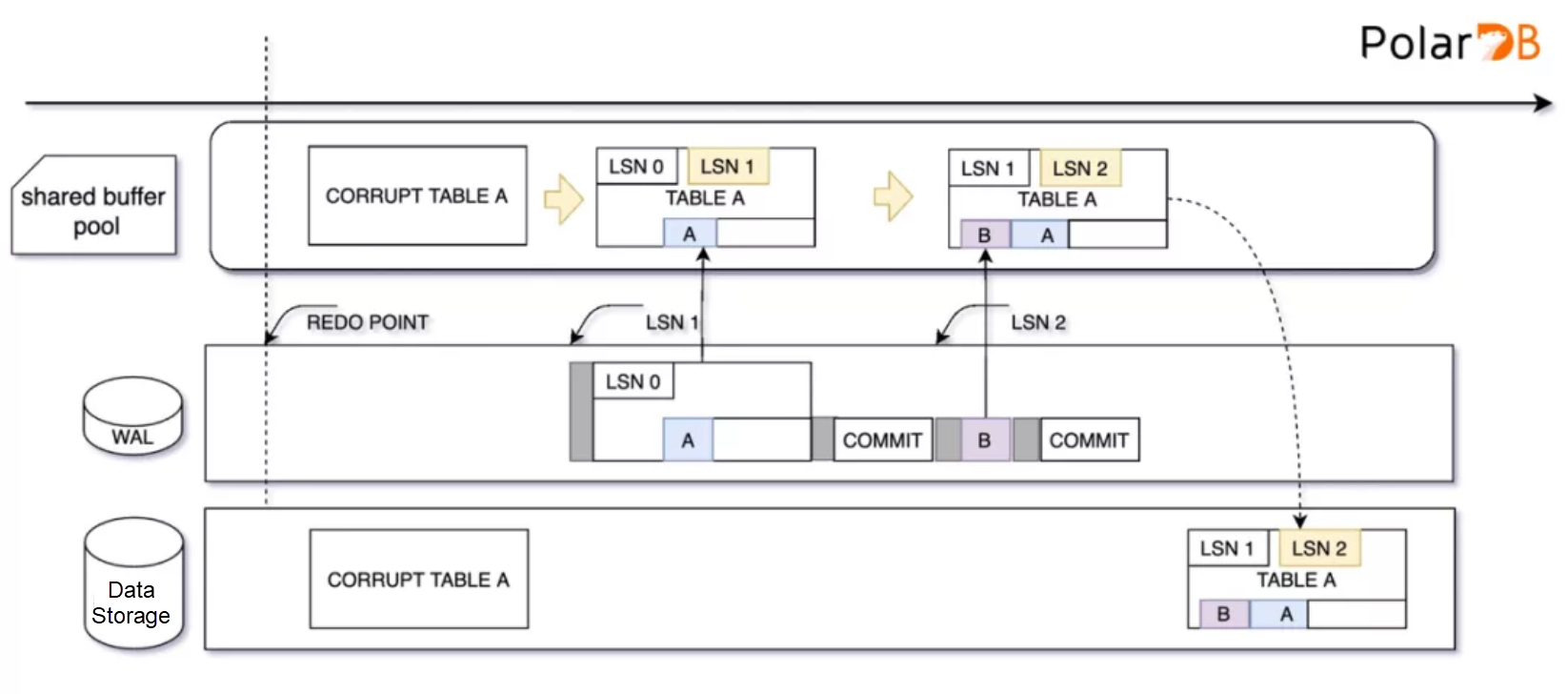

When a page is modified for the first time after a checkpoint, PolarDB writes the modification together with the entire data page to the WAL buffer and then flushes it to disk. A WAL record that contains an entire data page is called a backup block. The backup block enables WAL to replay complete data pages under any circumstances. The following content describes a complete process.

If the database crashes at this point, then when it is restarted for recovery and encounters a bad page, the correct data can be played back step by step, starting from the original version of the page recorded in the WAL.
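The "first modification after a checkpoint" rule can be sketched like this. It is an illustration under assumptions: `log_update` and `checkpoint` are hypothetical helpers, pages are plain dicts, and the page image is captured before the change is applied, following the article's "original version of the page" wording; a real engine may capture the image at a different moment.

```python
def log_update(wal, pages, touched, page_no, key, value):
    """Append a WAL record; attach a full page image on the first touch after a checkpoint."""
    record = {"lsn": len(wal) + 1, "page": page_no, "key": key, "value": value}
    if page_no not in touched:
        # Backup block: a copy of the whole page as it was before this change.
        record["backup_block"] = dict(pages[page_no])
        touched.add(page_no)
    wal.append(record)
    pages[page_no][key] = value


def checkpoint(touched):
    """After a checkpoint, the next change to any page logs a full image again."""
    touched.clear()


wal, touched = [], set()
pages = {0: {"a": 1}}
log_update(wal, pages, touched, 0, "a", 2)  # first change: carries a backup block
log_update(wal, pages, touched, 0, "b", 5)  # second change: plain record
checkpoint(touched)
log_update(wal, pages, touched, 0, "a", 9)  # first change after the checkpoint
print(["backup_block" in r for r in wal])  # [True, False, True]
```

Only one record per page per checkpoint cycle pays the cost of carrying a full page, which keeps the extra log volume bounded.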

After learning the first two sections, we can continue to talk about how the data are played back if the database crashes. Here we demonstrate a playback of a data page that is written badly.

After the data playback is successful, the data in the shared buffer pool can be asynchronously flushed to the disk to replace the previously damaged data.
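The playback of a badly written page can be sketched as follows. This is a simplified model, not the actual recovery code: `recover_page` is a hypothetical function, WAL records are dicts, and the record that carries a `backup_block` is assumed to hold the page image from before its own change.

```python
def recover_page(wal, page_no):
    """Rebuild one page purely from WAL, starting at its latest backup block."""
    records = [r for r in wal if r["page"] == page_no]
    # Find the most recent record that carries a full page image.
    start = max(i for i, r in enumerate(records) if "backup_block" in r)
    page = dict(records[start]["backup_block"])
    for r in records[start:]:
        page[r["key"]] = r["value"]  # redo each change in LSN order
    return page


wal = [
    {"lsn": 1, "page": 0, "key": "a", "value": 2, "backup_block": {"a": 1}},
    {"lsn": 2, "page": 0, "key": "b", "value": 5},
]
disk = {0: "<torn page: half old, half new>"}  # unusable after the crash
shared_buffer = {0: recover_page(wal, 0)}
print(shared_buffer[0])  # {'a': 2, 'b': 5}
```

The damaged copy on disk is never read during this rebuild; the page is reconstructed entirely from the log and can then overwrite the bad page.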

We have devoted a large part of this article to how the database achieves crash recovery through write-ahead logging, which goes a long way toward explaining the meaning of the durability point. Next, we will talk about the visibility issue.

Our description of atomicity involves the concept of visibility. Visibility is implemented by a complex set of MVCC mechanisms in PolarDB, most of which belong to the category of isolation. A brief description of visibility will be given here, while a more detailed description will be continued in an article on isolation.

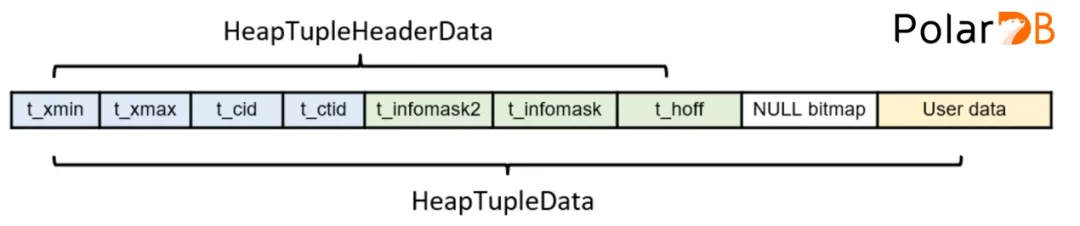

The first thing to talk about is the transaction tuple. It is the smallest unit of data, where the data actually reside. Here we only need to focus on a few fields.

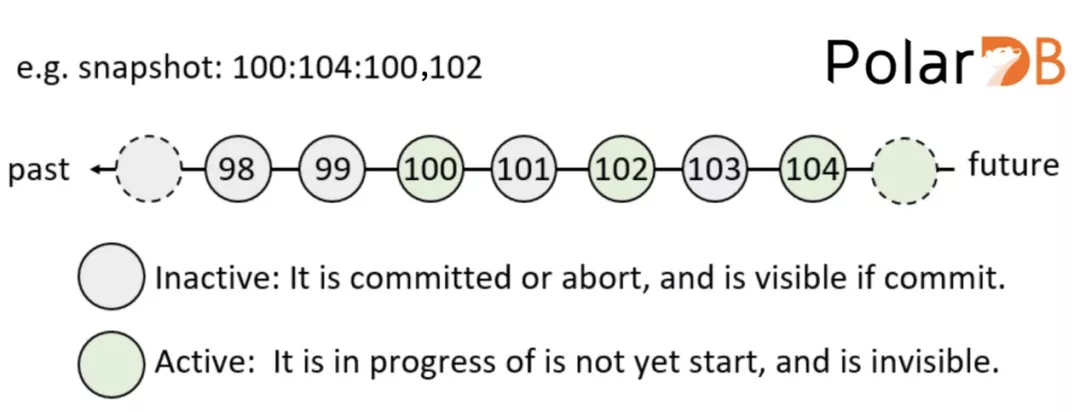

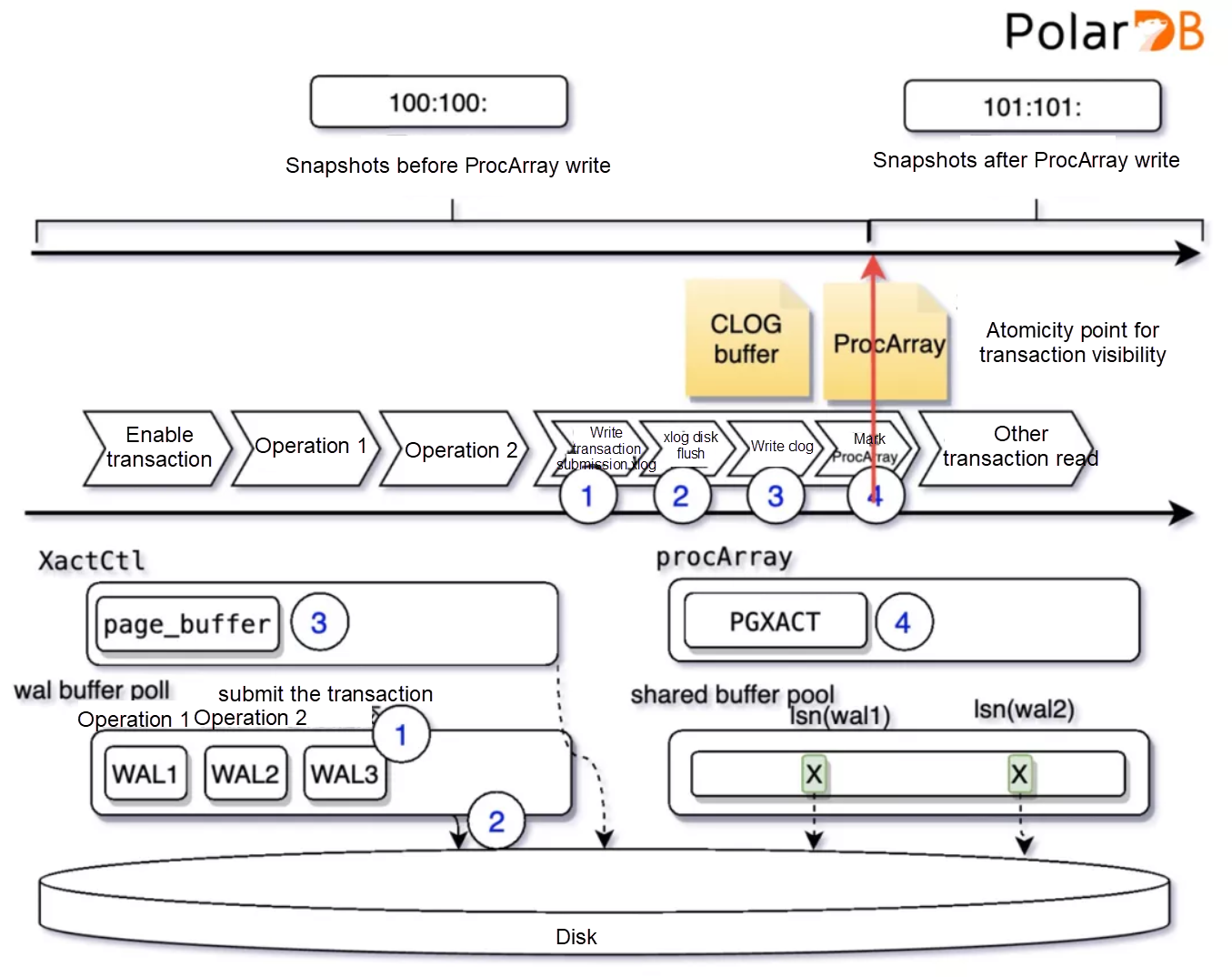

The second thing to talk about is snapshot. A snapshot records the state of a transaction in a database at a certain time point.

All we need to know about snapshot is that it can be used to obtain the state of all possible transactions in the database at a certain time point from procArray.

The third point is the current transaction state. It refers to the mechanism in the database that determines the running state of the transaction. In a concurrent environment, it is very important to determine the transaction state we see.

When determining the transaction state of a tuple, three data structures may be involved: t_infomask, procArray, and clog.

In a visibility judgment process, the access order is infomask -> snapshot -> clog, and the decisive order is snapshot-> clog -> infomask.

infomask is the most easily obtained information because it is recorded in the header of the tuple. Under some conditions, the visibility for the current transaction can be settled through infomask alone, without consulting the other data structures. Snapshots have the highest level of decision-making power and finally determine whether the xmin and xmax transactions are running or not running. clog assists in the visibility judgment and in setting the value of infomask. For example, if the visibility judgment of the xmin transaction shows that it has been submitted according to both the snapshot and clog, t_infomask is set to submitted. However, if the snapshot shows it as finished but clog does not show it as submitted, the system concludes that a crash or rollback has occurred and marks the transaction as invalid in infomask.
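The interplay of the three structures can be sketched for the xmin side only. This is a heavily simplified illustration, not the real visibility routine: the hint-bit constants, `xmin_is_visible`, and the dict-based tuple, snapshot, and clog are all hypothetical, and xmax handling and xid range checks are left out.

```python
XMIN_COMMITTED = 0x1  # hypothetical hint bits for this sketch
XMIN_INVALID = 0x2


def xmin_is_visible(tup, running_xids, clog):
    """Decide whether the inserting transaction of `tup` counts as submitted.

    tup: dict with "xmin" and "infomask"; running_xids: xids still running
    according to the snapshot; clog: dict mapping xid -> "committed"/"aborted".
    """
    # 1. infomask: an "invalid" hint settles the question immediately.
    if tup["infomask"] & XMIN_INVALID:
        return False
    # 2. snapshot: a transaction still running in our snapshot is never visible.
    if tup["xmin"] in running_xids:
        return False
    # 3. infomask again: a cached "committed" hint avoids the clog lookup.
    if tup["infomask"] & XMIN_COMMITTED:
        return True
    # 4. clog: tell submitted from aborted or crashed, and cache the answer.
    if clog.get(tup["xmin"]) == "committed":
        tup["infomask"] |= XMIN_COMMITTED
        return True
    tup["infomask"] |= XMIN_INVALID
    return False


clog = {100: "committed", 101: "aborted"}
t1 = {"xmin": 100, "infomask": 0}
t2 = {"xmin": 101, "infomask": 0}
t3 = {"xmin": 100, "infomask": 0}
print(xmin_is_visible(t1, set(), clog))  # True, and the hint bit is now cached
print(xmin_is_visible(t2, set(), clog))  # False
print(xmin_is_visible(t3, {100}, clog))  # False: still running in this snapshot
```

Note that the third tuple stays invisible even though clog already says "committed": the snapshot has the final word, and no hint bit is cached while the transaction still counts as running.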

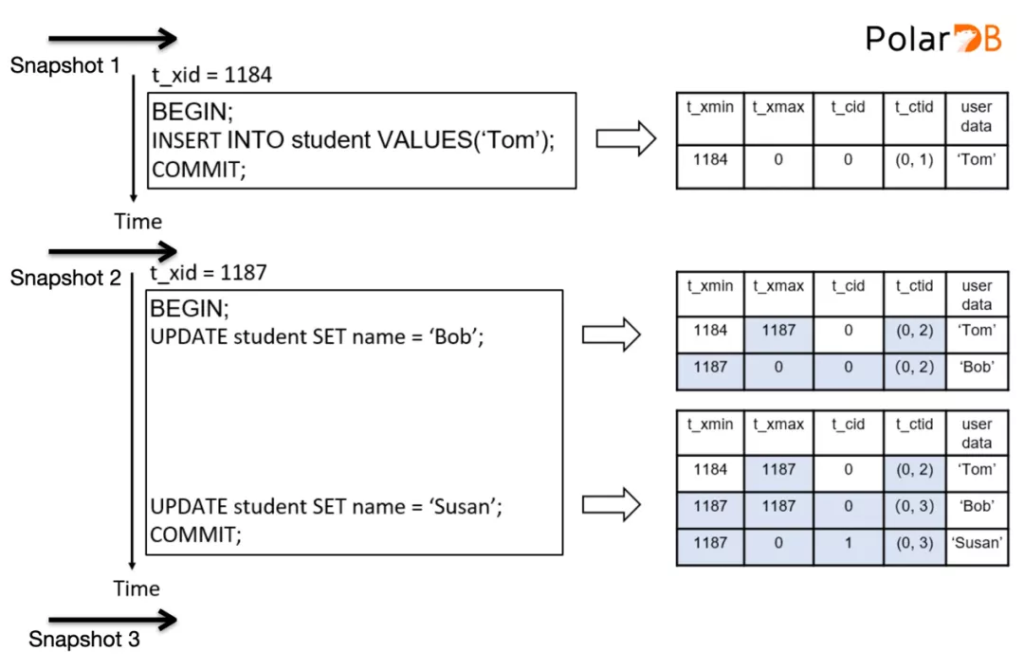

After introducing tuples and snapshots, we can move on to the topic of snapshot visibility. The visibility in PolarDB has a complex definition system. It is defined by many combinations of information. But the most direct ones are snapshots and tuple headers. The following illustrates the visibility of tuple headers and snapshots through an example of data insertion and update.

Isolation is not discussed in this article, and we assume that the isolation level is serializable.

From the above analysis, we can draw a simple conclusion: visibility in the database depends on when the snapshot is taken. The different visible versions mentioned in the discussion of atomicity simply correspond to snapshots taken at different times. Snapshots determine whether an executing transaction counts as submitted. This notion of submission is independent of the submission state marked on the tuple and even of the submission recorded in clog. We can use this to make the snapshots we get consistent with transaction submission.

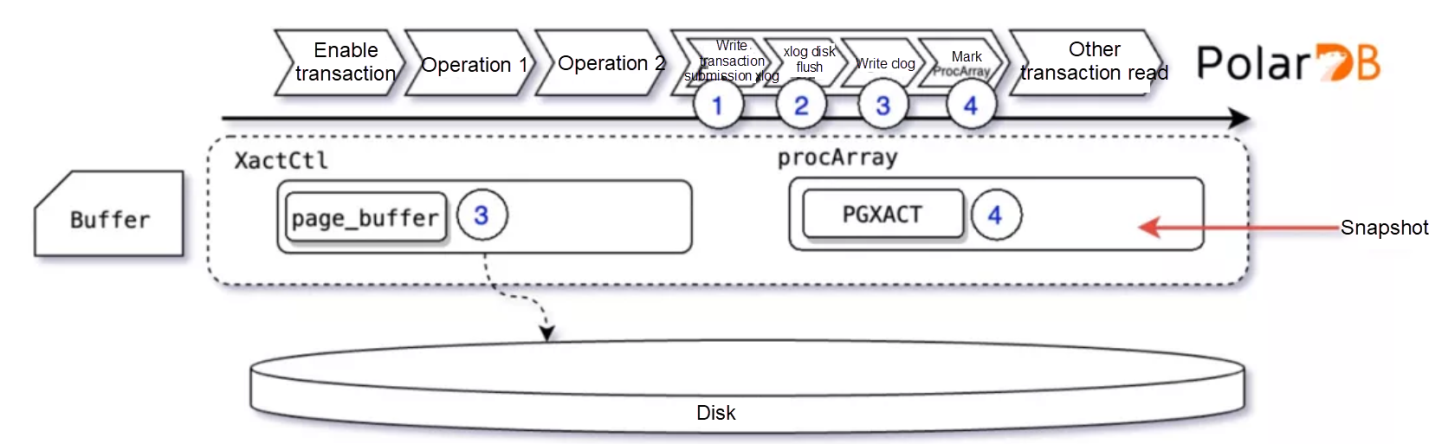

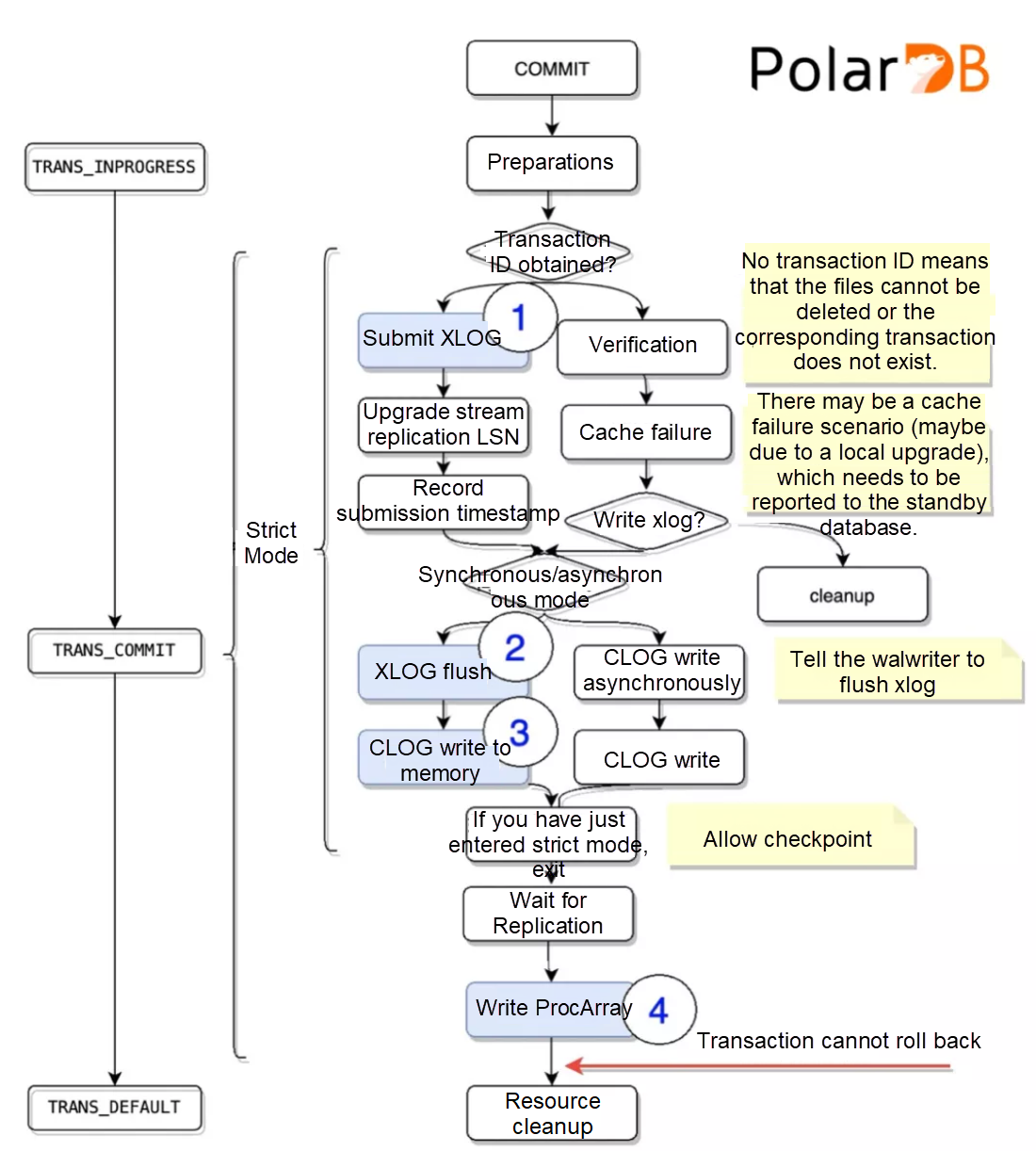

We have briefly talked about the visibility of PolarDB snapshots already, and here we will briefly introduce the specific implementation issues when transactions are submitted.

The core idea of our visibility mechanism design is: transactions should only see the version of the data they should see. How should we define "should see"? Here is a simple example: if a tuple's xmin transaction is not submitted, it is very likely to be invisible to other transactions. If the xmin transaction of a tuple has been submitted, it is likely to be visible to other transactions. How to know whether this xmin has been submitted or not? As mentioned above, we decide through snapshots, so the key mechanism of transaction submission is the update mechanism of the new snapshot.

When a transaction is submitted, visibility involves two important data structures: clog buffer and procArray. The relationship between the two has been explained above. They play a role in judging the visibility of transactions. Of course, procArray plays a decisive role. This is because the acquisition of snapshots is a process of traversing ProcArray.

Actually, in the third step, the transaction submission information is written to the clog buffer. At this time the transaction is marked as submitted in clog, but it is not actually submitted yet. Then the transaction is marked as "has been submitted" in ProcArray. With this step, the transaction completes the actual submission. Snapshots obtained after this time point see the updated data version.

After learning the PolarDB crash recovery and visibility theory, we know that PolarDB uses a write-ahead log together with a Buffer IO scheme to ensure transaction crash recovery and visibility consistency, thus achieving atomicity. Now let's move on to the most important aspects of transaction submission to find out what exactly the atomicity point we mentioned earlier refers to.
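The gap between "marked in clog" and "actually submitted" can be sketched with a toy ProcArray. This is an illustration only: the `Database` class and `sees_as_submitted` are hypothetical, and ProcArray is reduced to a set of running transaction ids.

```python
class Database:
    def __init__(self):
        self.proc_array = set()  # xids of transactions still in progress
        self.clog_buffer = {}    # xid -> "committed"

    def begin(self, xid):
        self.proc_array.add(xid)

    def take_snapshot(self):
        """A snapshot is built by traversing ProcArray."""
        return frozenset(self.proc_array)

    def write_clog(self, xid):
        self.clog_buffer[xid] = "committed"

    def leave_proc_array(self, xid):
        self.proc_array.discard(xid)


def sees_as_submitted(db, snapshot, xid):
    """Other transactions treat xid as submitted only if it is not running in their snapshot."""
    return xid not in snapshot and db.clog_buffer.get(xid) == "committed"


db = Database()
db.begin(7)
db.write_clog(7)        # clog already says committed...
before = sees_as_submitted(db, db.take_snapshot(), 7)
db.leave_proc_array(7)  # ...but only this step makes it visible to new snapshots
after = sees_as_submitted(db, db.take_snapshot(), 7)
print(before, after)  # False True
```

A snapshot taken between the two steps still lists transaction 7 as running, so its changes stay invisible even though clog has been updated.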

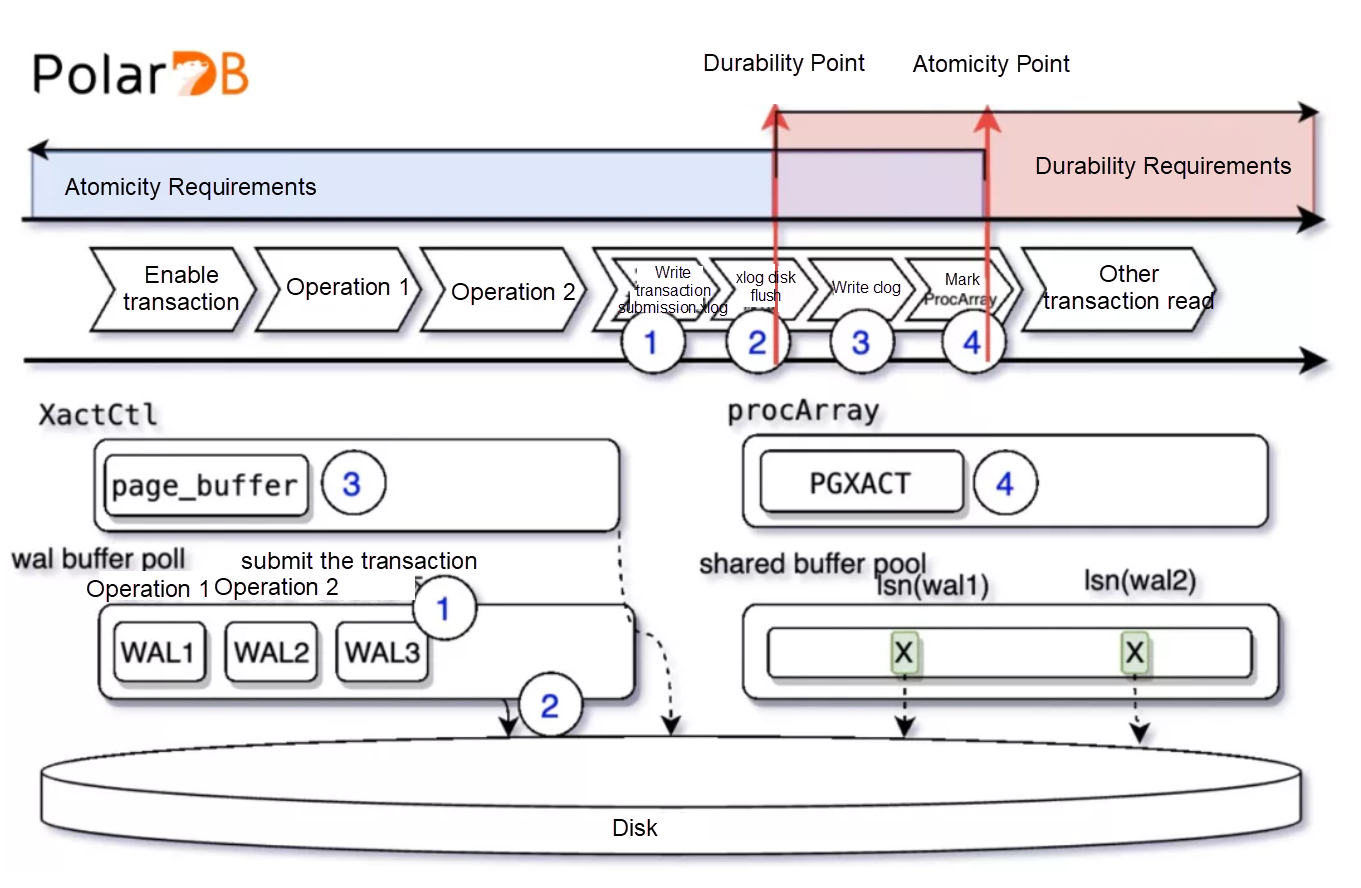

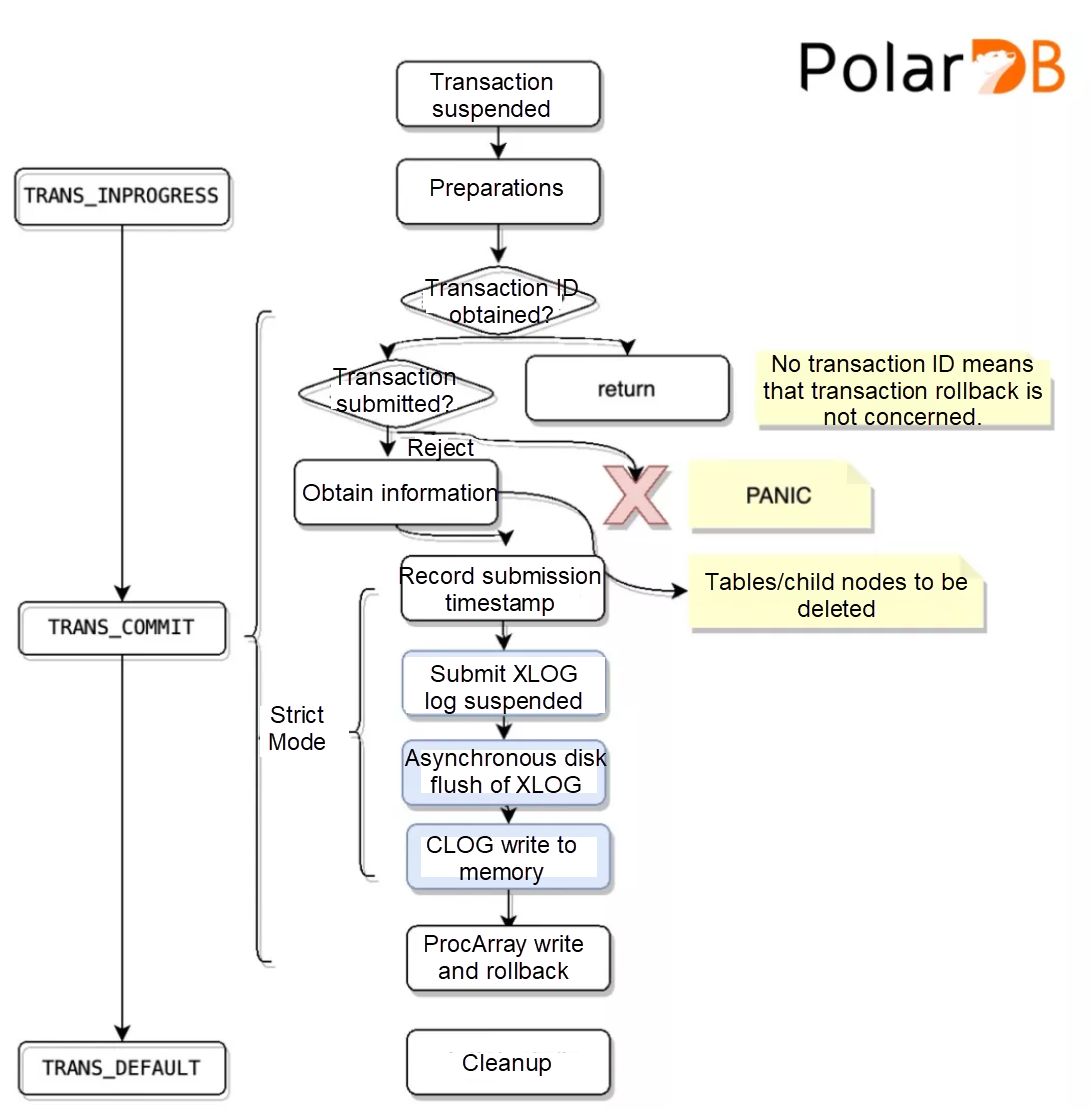

Simply put, there are four operations in the transaction submission that are most important to transaction atomicity. In this section, we talk about the first two operations.

When we mark the point of this xlog (WAL log) write, let's consider two scenarios:

This seems to show that point 2 is the critical point of crash recovery: it determines whether crash recovery returns the database to state T or state T + 1. What should we call this point? Recall the concept of durability: once a transaction is submitted, its modifications are permanently retained in the database. The two are consistent in essence, so we call point 2 the durability point.

Another point about xlog disk flushing is that xlog flushing and playback are atomic at the level of a single WAL record. The CRC in the WAL record header validates that single record. If a WAL record is damaged during the disk write, its contents are treated as invalid. This ensures that data is never partially played back.
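The idea of rejecting a damaged unit of WAL as a whole can be sketched with a checksum stored in a small header. The layout below is an assumption made for illustration (a length and a CRC-32 from Python's `zlib`, followed by the payload); it is not PolarDB's on-disk format, and `encode_record` and `decode_record` are hypothetical names.

```python
import struct
import zlib


def encode_record(payload: bytes) -> bytes:
    """Prefix the payload with its length and CRC-32 (a stand-in for a WAL header)."""
    return struct.pack("<II", len(payload), zlib.crc32(payload)) + payload


def decode_record(raw: bytes):
    """Return the payload, or None if the record is truncated or corrupted."""
    if len(raw) < 8:
        return None
    length, crc = struct.unpack("<II", raw[:8])
    payload = raw[8:8 + length]
    if len(payload) != length or zlib.crc32(payload) != crc:
        return None
    return payload


good = encode_record(b"update page 7")
torn = good[:-3]  # the crash cut the write short
flipped = good[:-1] + bytes([good[-1] ^ 0xFF])  # last byte damaged
print(decode_record(good), decode_record(torn), decode_record(flipped))
```

Playback either applies a record whose checksum matches or stops at it; there is no state in which half of a record's change is applied.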

Let's continue to look at operations 3 and 4:

Operation 3 records the current state of the transaction in the clog buffer, which can be regarded as a layer of log cache. Operation 4 writes the submission to ProcArray, which is a very important step. As we know, a snapshot determines transaction state through ProcArray, so this step determines the transaction state that other transactions see.

If the transaction crashes or rolls back before operation 4, the data version seen by all other transactions in the database is T. This means the transaction has not actually been submitted. This judgment follows the order visibility -> snapshot -> ProcArray.

After operation 4, however, the transaction is submitted for all observers, because every snapshot obtained after this time point sees data version T + 1.

From this point of view, operation 4 fully matches the meaning of an atomic operation, because whether operation 4 has executed decides whether the transaction is successfully submitted. The transaction can always be rolled back before operation 4 because no other transaction has seen its T + 1 state. After operation 4, however, the transaction is not allowed to be rolled back. Otherwise, other transactions that have already read version T + 1 would be left with inconsistent data. The concept of atomicity is that a transaction is either successfully submitted or rolled back when submission fails. Since rollback is not allowed after operation 4, operation 4 can be seen as the sign of successful transaction submission.

In summary, we can define operation 4 as the atomicity point of the transaction.

Again, let's look at the concept of atomicity and durability:

We mark operation 4 as the atomicity point because, at the moment of operation 4, all observers objectively consider the transaction submitted. The version of the snapshot is upgraded from T to T + 1, and the transaction can no longer be rolled back. Does atomicity stop mattering once the transaction is submitted? I think so. Atomicity only guarantees data consistency up to the moment the transaction is successfully submitted. It makes no sense to talk about atomicity when the transaction has ended. Therefore, atomicity ensures the visibility and recoverability of transactions before the atomicity point.

We mark point 2 as the durability point because, according to durability, a transaction must be retained permanently once it succeeds. Based on the reasoning above, point 2 is undoubtedly that point. Therefore, durability must be guaranteed at all times from point 2 onward.

After explaining points 2 and 4, we can finally define the two most important concepts involved in transaction submission. We can now answer the first question: at what moment is the transaction actually submitted? The answer is that the transaction can be completely restored after the durability point, while other transactions regard it as actually submitted only after the atomicity point. But the two are not separate. How can we understand this?

I think this is a compromise of atomicity. As long as the order of the two points can make the data in different states consistent, then it meets our definition of atomicity.

Finally, let's consider why the two points are not merged. The durability point is the disk flush of the WAL, which involves disk I/O. The atomicity point is the write to ProcArray, which requires a big, heavily contended lock on ProcArray; we can think of it as a high-frequency shared memory write. Both affect the efficiency of database transactions. If the two were bound together into one atomic operation, the combined wait would be painfully long and could greatly reduce transaction throughput. From this perspective, the two are kept separate for efficiency.

Obviously not. In the above diagram, we can see that there may be segments that do not meet the requirements of atomicity or durability in the middle of this time period.

Specifically, suppose the atomicity point came before the durability point, and consider what happens when a crash occurs between the two. Other transactions would see the T + 1 version of the data before the crash and the T version after the crash. This behavior of "seeing future data" is obviously not allowed.
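The effect of the ordering can be checked with a tiny simulation. It is a sketch under stated assumptions: `commit_and_crash` is a hypothetical function, the two commit steps are reduced to boolean flags, and recovery is assumed to restore T + 1 exactly when the WAL flush completed before the crash.

```python
def commit_and_crash(order, crash_after_first_step=True):
    """Run the two commit steps in `order`, optionally crashing between them.

    Returns (version seen by readers before the crash, version after recovery).
    """
    wal_flushed = False  # durability point reached?
    visible = False      # atomicity point reached?
    for i, step in enumerate(order):
        if step == "flush_wal":
            wal_flushed = True
        elif step == "update_proc_array":
            visible = True
        if crash_after_first_step and i == 0:
            break  # simulated crash between the two points
    seen_before_crash = "T+1" if visible else "T"
    after_recovery = "T+1" if wal_flushed else "T"
    return seen_before_crash, after_recovery


print(commit_and_crash(["flush_wal", "update_proc_array"]))  # ('T', 'T+1')
print(commit_and_crash(["update_proc_array", "flush_wal"]))  # ('T+1', 'T')
```

With the durability point first, readers only ever move forward from T to T + 1. With the order reversed, readers observe T + 1 and then fall back to T after recovery, which is the forbidden case described above.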

The real submission is atomicity point submission.

The basic truth is that the real sign of submission is when the data version is upgraded from T to T + 1. This point is the atomicity point. Before this point, the data versions seen by other transactions are T, so transactions are not really submitted. After this point, the transactions cannot be rolled back. This is enough to show that this is the real submission point of the transaction.

Finally, let's focus on operations 1 and 3:

In this section, let's go back to the transaction submission functions and take a look at the positions of these operations in the function call stack.

Finally, let's summarize the article series, focusing on the topic of "how to achieve transaction atomicity". We talked about the database crash recovery feature and transaction visibility to explain the underlying principles by which PolarDB achieves atomicity. In introducing the principle of write-ahead log + Buffer IO, we also talked about the shared buffer, WAL, clog, and ProcArray. These data structures are important to atomicity. Under the transaction, the various modules of the database are cleverly integrated, making full use of computer resources such as disk, cache, and I/O to form a complete database system.

We might think of other models in computer science, such as the OSI network model. In it, the TCP protocol in the transport layer provides reliable communication services over an unreliable channel. Database transactions implement a similar idea: storing data reliably on top of an unreliable operating system (which may crash at any time) and unreliable disk storage (which cannot write a large amount of data atomically). This simple but important idea can be said to be the cornerstone of the database system. It is so important that most of the core data structures in the entire database are related to it. Perhaps, as databases develop, future technology will produce more advanced database architectures. But we must not forget that atomicity and durability should still be at the core of database design.

Alright, the talk of atomicity is over. Finally, for our fellow database enthusiasts, we leave you with several questions related to the points we mentioned in this article for you to consider.
