In November 2018, Dragonfly, a cloud-native image distribution system that originated at Alibaba, was showcased at KubeCon Shanghai and has since become a CNCF sandbox project. Dragonfly mainly addresses image distribution problems in Kubernetes-based distributed application orchestration systems. In 2017, open source became one of Alibaba's core infrastructure technologies. This article discusses the production practices of the Dragonfly-based unified file distribution platform on the DCOS platform of China Mobile's Zhejiang branch.

In the year since Alibaba made open source a core technology, Dragonfly has been adopted across a variety of industries. DCOS is the container cloud platform of the China Mobile Zhejiang branch. It currently runs 185 application systems, including core systems such as the China Mobile service mobile app and the CRM application. This article describes how Dragonfly was implemented on DCOS to resolve problems that arise in large-scale clusters, such as low distribution efficiency, low success rates, and difficult network bandwidth control. It also covers the feature upgrades and the high-availability deployment that grew out of the feedback the DCOS platform provided to the community.

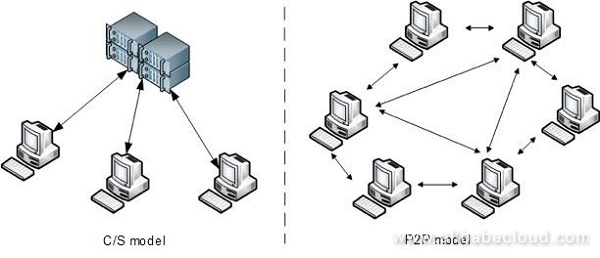

As the DCOS container cloud platform keeps improving and hosts more and more applications (nearly 10,000 running containers), distribution systems built on the traditional C/S (client-server) architecture increasingly struggle to meet the requirements of scenarios such as publishing code packages and transferring files for large-scale distributed applications.

Before describing Dragonfly, let's quickly recap a basic networking concept. P2P (peer-to-peer) is a network model in which individual nodes connect directly to one another and the network's resources and services are spread across those nodes. Data transfer and services are handled directly between nodes, which avoids the single-node performance bottleneck of the traditional C/S architecture.
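To make the bottleneck argument concrete, the standard textbook fluid model of file distribution gives lower bounds on the time needed to deliver one file of F bits to N nodes. With C/S, the server alone uploads N copies; with P2P, every node that already holds data also uploads. This is a minimal sketch under idealized assumptions (no protocol overhead, latency, or churn), and the bandwidth figures are illustrative, not DCOS measurements.

```python
"""Idealized lower bounds on file distribution time: client-server vs. P2P.

Textbook fluid model: it ignores protocol overhead, latency, and churn, so the
numbers are illustrative only and are not measurements from DCOS.
"""


def client_server_time(file_bits: float, server_up: float, peer_downs: list[float]) -> float:
    """The server alone must upload N copies; the slowest node must download one."""
    n = len(peer_downs)
    return max(n * file_bits / server_up, file_bits / min(peer_downs))


def p2p_time(file_bits: float, server_up: float,
             peer_ups: list[float], peer_downs: list[float]) -> float:
    """The server uploads at least one copy; after that every node also uploads."""
    n = len(peer_downs)
    total_up = server_up + sum(peer_ups)
    return max(file_bits / server_up,
               file_bits / min(peer_downs),
               n * file_bits / total_up)


if __name__ == "__main__":
    F = 8e9      # a 1 GB image layer, in bits (illustrative)
    u_s = 1e9    # 1 Gbit/s server uplink (illustrative)
    for n in (10, 100, 1000):
        ups = [1e8] * n    # 100 Mbit/s uplink per node (illustrative)
        downs = [1e9] * n  # 1 Gbit/s downlink per node (illustrative)
        print(f"N={n:5d}  C/S={client_server_time(F, u_s, downs):8.0f}s  "
              f"P2P={p2p_time(F, u_s, ups, downs):6.0f}s")
```

In this model the C/S bound grows linearly with N (80 s, 800 s, 8,000 s), while the P2P bound stays below F divided by a single node's uplink (80 s) however many nodes join.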

Dragonfly is a CNCF open-source file distribution solution based on P2P and CDN technologies and suited to distributing container images and files. It addresses the low distribution efficiency, low success rates, and poor network bandwidth control that enterprises face when distributing files and images in large-scale clusters.
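The combination of P2P and a CDN-like seed can be illustrated with a toy round-based simulation: each node fetches a missing piece from another node that already holds it and falls back to the seed node only when no node has that piece yet. In Dragonfly 0.x the seed role is played by the supernode; the scheduling policy here (random piece choice, one piece per node per round) is a simplifying assumption, not Dragonfly's actual scheduler.

```python
import random
from collections import Counter


def simulate(num_peers: int, num_pieces: int, seed: int = 0) -> Counter:
    """Count how many piece downloads each source type served.

    Each round, every node that is still missing pieces picks one at random.
    It downloads that piece from another node if any node already holds it;
    otherwise the request goes to the seed node (the supernode).
    """
    rng = random.Random(seed)
    have: list[set[int]] = [set() for _ in range(num_peers)]
    served: Counter = Counter()
    while any(len(h) < num_pieces for h in have):
        for me in range(num_peers):
            missing = [p for p in range(num_pieces) if p not in have[me]]
            if not missing:
                continue
            piece = rng.choice(missing)
            from_peer = any(piece in have[o] for o in range(num_peers) if o != me)
            served["peer" if from_peer else "supernode"] += 1
            have[me].add(piece)
    return served


if __name__ == "__main__":
    served = simulate(num_peers=50, num_pieces=20)
    print(served["supernode"], served["peer"])  # 20 980
```

In this model the supernode uploads each piece exactly once no matter how many nodes join; every other transfer is carried by the nodes themselves, which is why the central server stops being the bandwidth bottleneck.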

Core components of Dragonfly:

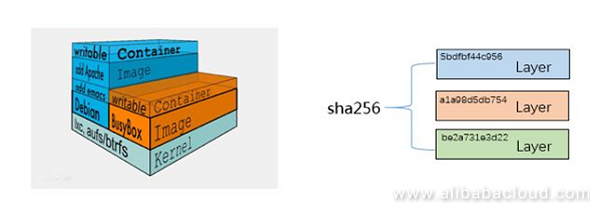

Dragonfly distribution principle (using image distribution as an example): Unlike ordinary files, container images consist of multiple storage layers, and images are downloaded layer by layer rather than as a single file. Each layer can be divided into data blocks that serve as seeds. After the blocks are downloaded, each layer's unique ID and its sha256 digest are used to assemble them into complete images, which ensures consistency throughout the download.

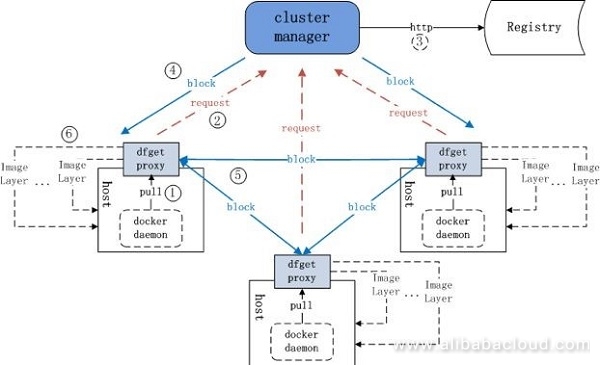
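The layer-and-block scheme can be sketched with Python's `hashlib`: a layer blob is cut into indexed pieces that may arrive out of order from different peers, then reassembled and checked against the layer's `sha256:<hex>` content digest (the digest format used by OCI/Docker registries). The 4 MiB piece size is an assumption for illustration; Dragonfly's actual piece size may differ.

```python
import hashlib
import os
import random

PIECE_SIZE = 4 * 1024 * 1024  # assumed piece size; Dragonfly's real value may differ


def split_into_pieces(layer: bytes, piece_size: int = PIECE_SIZE) -> list[tuple[int, bytes]]:
    """Cut a layer blob into (index, data) pieces that peers can exchange."""
    return [(i, layer[off:off + piece_size])
            for i, off in enumerate(range(0, len(layer), piece_size))]


def layer_digest(blob: bytes) -> str:
    """Content digest in the OCI/Docker 'sha256:<hex>' form."""
    return "sha256:" + hashlib.sha256(blob).hexdigest()


def assemble_layer(pieces: list[tuple[int, bytes]], expected_digest: str) -> bytes:
    """Reorder pieces received from different peers and verify the result."""
    blob = b"".join(data for _, data in sorted(pieces, key=lambda p: p[0]))
    actual = layer_digest(blob)
    if actual != expected_digest:
        raise ValueError(f"digest mismatch: expected {expected_digest}, got {actual}")
    return blob


if __name__ == "__main__":
    layer = os.urandom(10_000)
    expected = layer_digest(layer)
    pieces = split_into_pieces(layer, piece_size=1024)
    random.shuffle(pieces)  # simulate out-of-order arrival from several peers
    assert assemble_layer(pieces, expected) == layer
    print(f"{len(pieces)} pieces reassembled and verified")
```

A missing or corrupted piece changes the digest, so `assemble_layer` rejects the layer instead of producing an inconsistent image.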

The following diagram shows how images are downloaded in Dragonfly.

Given these Dragonfly characteristics and its actual production conditions, the China Mobile Zhejiang branch decided to introduce Dragonfly into its container cloud platform to reform the existing code package publishing model, relieve the bandwidth bottleneck of a single file server by spreading transmission across a P2P network, and ensure the consistency of image files throughout the publishing process.

Based on the Dragonfly technology and the production practices of China Mobile Zhejiang branch, the unified distribution platform has the following overall design objectives:

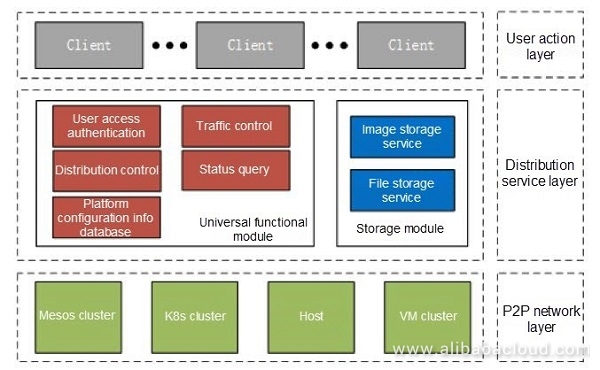

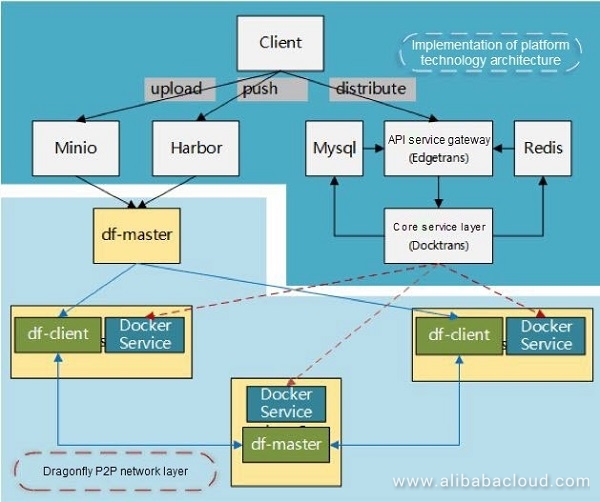

Based on these objectives, the overall architecture design is as follows:

The P2P network layer is a distribution network made up of multiple computing nodes, and it connects heterogeneous clusters (host clusters, K8s clusters, and Mesos clusters).

The distribution service layer is the core of the unified distribution system and consists of functional modules and storage modules. The user access authentication module handles system login verification. The distribution control module, built on Dragonfly, distributes tasks in a P2P manner. The traffic control module lets tenants configure bandwidth for individual tasks. The configuration info database records basic information such as the target clusters in the network layer and task status. The status query module lets users closely monitor the progress of distribution tasks. Finally, the user action layer consists of any number of interface-based clients.
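Per-task bandwidth limits like those set through the traffic control module are commonly enforced with a token bucket. The source does not say how the module is implemented, so the following is a minimal, hypothetical sketch: `TokenBucket`, `TASK_LIMITS`, and the task names are illustrative, and the clock is injectable so the behavior can be tested without sleeping.

```python
import time
from typing import Callable


class TokenBucket:
    """Caps throughput at `rate` bytes/s while allowing bursts up to `capacity` bytes."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity
        self.clock = clock
        self.last = clock()

    def _refill(self) -> None:
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now

    def try_consume(self, nbytes: int) -> bool:
        """Spend tokens and return True if `nbytes` may be sent now."""
        self._refill()
        if nbytes <= self.tokens:
            self.tokens -= nbytes
            return True
        return False

    def wait_time(self, nbytes: int) -> float:
        """Seconds until `nbytes` tokens are available (assumes nbytes <= capacity)."""
        self._refill()
        return max(0.0, (nbytes - self.tokens) / self.rate)


# Hypothetical per-task limits configured by tenants, in bytes per second.
TASK_LIMITS = {"task-a": 50 * 1024 * 1024, "task-b": 10 * 1024 * 1024}
buckets = {task: TokenBucket(rate, capacity=rate) for task, rate in TASK_LIMITS.items()}
```

A sender for `task-b` would call `try_consume(len(chunk))` before writing each chunk and sleep for `wait_time(len(chunk))` when it returns False. Setting the capacity equal to one second of traffic keeps bursts short.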

Based on these platform design objectives and the architecture analysis, the DCOS container cloud team extended the open-source components with additional platform features, including the following:

df-client is containerized. Deploying it as a lightweight container speeds up building the network: a host node newly added to the network layer can start a P2P agent within seconds simply by pulling and starting the image.

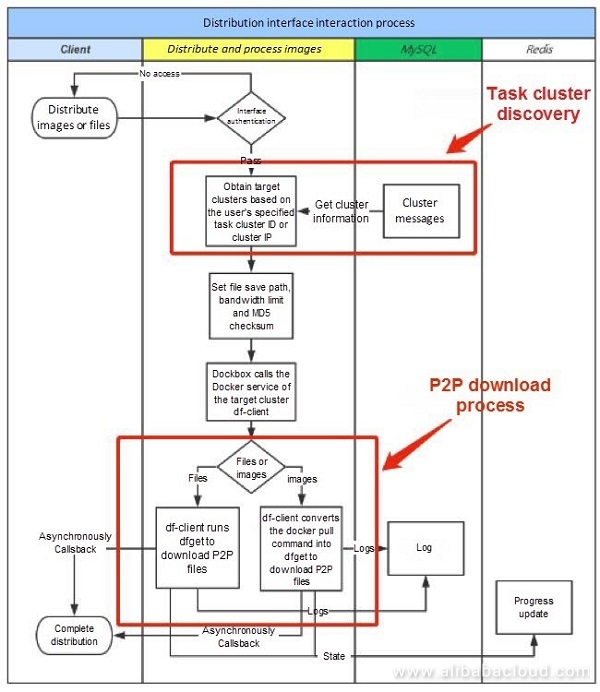
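On the Kubernetes clusters in the network layer, one way to get "a P2P agent on every new node within seconds" is a DaemonSet, which schedules one pod per node automatically as nodes join. The sketch below builds such a manifest as a Python dict and prints it as JSON, which `kubectl apply -f` accepts. The image name, namespace, and host path are hypothetical placeholders, and host or Mesos clusters would need their own mechanism (for example, running the container directly on each host).

```python
import json


def df_client_daemonset(image: str, namespace: str = "dragonfly") -> dict:
    """Build a DaemonSet manifest that runs one df-client pod on every node."""
    labels = {"app": "df-client"}
    return {
        "apiVersion": "apps/v1",
        "kind": "DaemonSet",
        "metadata": {"name": "df-client", "namespace": namespace},
        "spec": {
            # apps/v1 requires the selector to match the pod template labels.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    # Use the node's network so other peers can reach this agent directly.
                    "hostNetwork": True,
                    "containers": [{
                        "name": "df-client",
                        "image": image,
                        "volumeMounts": [{"name": "data", "mountPath": "/data"}],
                    }],
                    # Keep downloaded data on the host (path is a hypothetical choice).
                    "volumes": [{"name": "data",
                                 "hostPath": {"path": "/var/lib/df-client"}}],
                },
            },
        },
    }


if __name__ == "__main__":
    manifest = df_client_daemonset("registry.example.com/dragonfly/df-client:latest")
    print(json.dumps(manifest, indent=2))
```

Saving the output as `df-client.json` and running `kubectl apply -f df-client.json` would start an agent on every current and future node of that cluster.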

The core interface layer (Docktrans) hides the low-level command-line details of dfget and exposes its features through interfaces to simplify user operations. Because it dispatches tasks to multiple P2P nodes through unified remote calls, users no longer need to run download commands such as dfget node by node, which simplifies the "one-to-many" task launch model.
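The "one-to-many" model amounts to fanning a single dfget invocation out to many nodes in parallel and collecting a result per node. This is a minimal sketch, not Docktrans's actual interface: `run_on_node` is a hypothetical transport (SSH, an agent RPC, or similar) passed in by the caller, and the `--url`/`--output` flags follow Dragonfly 0.x `dfget`, so check them against the version you deploy.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

# Hypothetical transport: runs argv on `node` and returns the process exit code.
RemoteRunner = Callable[[str, list[str]], int]


def dfget_argv(url: str, output: str) -> list[str]:
    """Command each node runs; flags follow Dragonfly 0.x dfget (verify for your version)."""
    return ["dfget", "--url", url, "--output", output]


def distribute(nodes: list[str], url: str, output: str,
               run_on_node: RemoteRunner, max_workers: int = 32) -> dict[str, bool]:
    """Launch the same download on every node in parallel and report success per node."""
    argv = dfget_argv(url, output)

    def run(node: str) -> tuple[str, bool]:
        try:
            return node, run_on_node(node, argv) == 0
        except Exception:  # an unreachable node counts as a failure, not a crash
            return node, False

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return dict(pool.map(run, nodes))


if __name__ == "__main__":
    def fake_runner(node: str, argv: list[str]) -> int:
        return 1 if node == "node-3" else 0  # pretend node-3 fails

    result = distribute([f"node-{i}" for i in range(5)],
                        "http://files.example.com/app.tar", "/tmp/app.tar", fake_runner)
    print([n for n, ok in result.items() if not ok])  # ['node-3']
```

Returning a per-node map rather than a single flag lets the caller retry only the nodes that failed.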

The following figure shows how the core modules of the unified distribution platform distribute tasks.

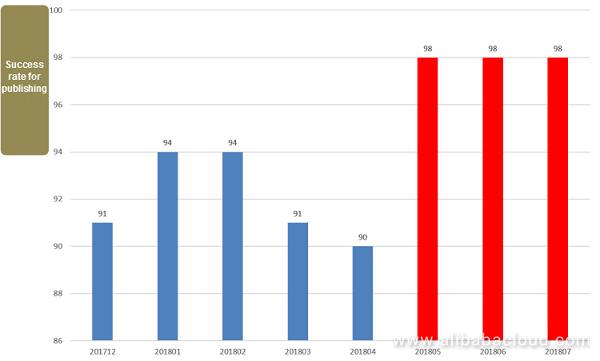

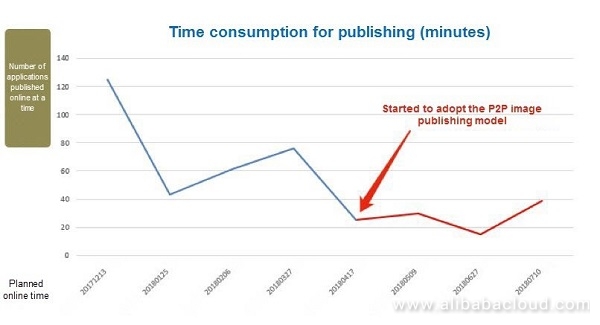

More than 200 business systems and over 1,700 application modules running in the production environment have now been migrated to the image publishing model, and both publishing time and publishing success rate have improved significantly:

Since the P2P image publishing method was adopted, the monthly success rate of publishing multiple applications in one batch has held steady at 98%.

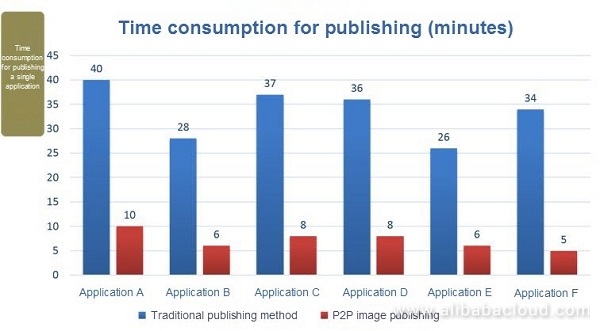

Starting in April, the container cloud platform replaced the code package publishing model of the traditional distribution system with P2P image publishing. Since the change, the time required to publish multiple applications in one batch has dropped significantly (by 67% on average).

The platform also selected several application clusters to measure how quickly a single application's P2P images could be published after the transformation. Compared with the platform before the transformation, the time required to publish a single application dropped significantly (by 81.5% on average).
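Figures such as 67% and 81.5% are relative reductions, (t_before − t_after) / t_before. When they are averaged over several clusters, the mean of per-cluster reductions can differ noticeably from the reduction of the mean times, and the article does not say which one is reported. The sketch below shows the difference; the sample times are made up for illustration and are not data from this study.

```python
from statistics import mean


def reduction(before: float, after: float) -> float:
    """Relative time saved: 0.67 means the new method takes 67% less time."""
    return (before - after) / before


def mean_of_reductions(pairs: list[tuple[float, float]]) -> float:
    """Average the per-cluster reductions (every cluster weighs the same)."""
    return mean(reduction(b, a) for b, a in pairs)


def reduction_of_means(pairs: list[tuple[float, float]]) -> float:
    """Reduction of the average times (slow clusters weigh more)."""
    return reduction(mean(b for b, _ in pairs), mean(a for _, a in pairs))


if __name__ == "__main__":
    # Illustrative (before, after) publishing times in seconds; NOT data from the study.
    samples = [(600.0, 120.0), (300.0, 60.0), (100.0, 40.0)]
    print(f"mean of reductions: {mean_of_reductions(samples):.1%}")  # 73.3%
    print(f"reduction of means: {reduction_of_means(samples):.1%}")  # 78.0%
```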

The unified file distribution platform has resolved the efficiency and consistency problems the China Mobile Zhejiang branch faced when publishing code on its DCOS platform, and it has become a key component of that platform. It also supports efficient file distribution in even larger clusters and can be further applied to batch-distributing cluster installation media and batch-updating cluster configuration files.

Development of the interface-based client is nearly complete, and the client is now in production testing and deployment. The distribution platform has four planned core features: Task Management, Target Management, Permission Management, and System Analysis. The first three are already available.

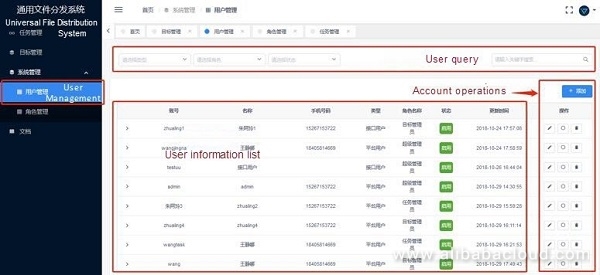

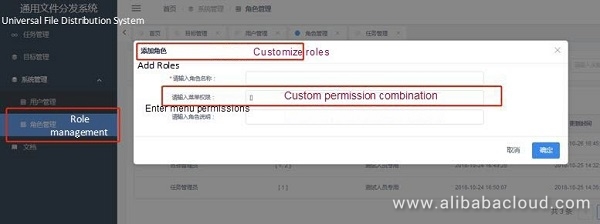

Permission Management (that is, user management) provides customized permission management features for different users, as listed below:

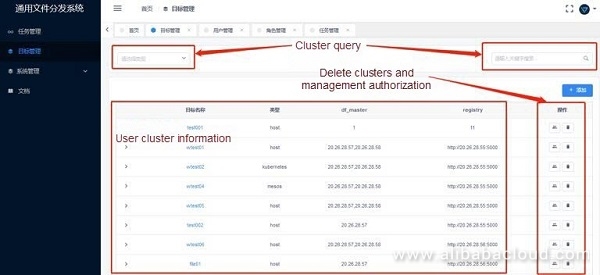

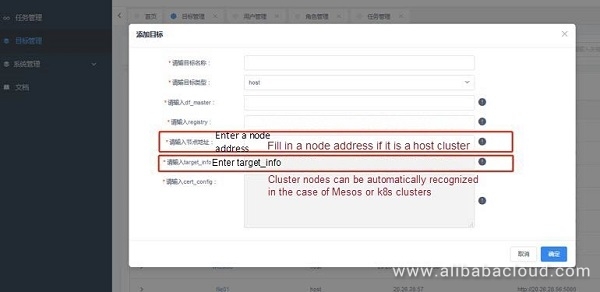
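Customized per-user permissions are often modeled as role-based access control scoped by tenant. The source does not list the platform's actual roles or rules, so the roles (`viewer`, `operator`, `admin`), the permission names, and the rule that only admins may act across tenants are all hypothetical choices in this sketch.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Perm(Enum):
    VIEW_TASK = auto()
    CREATE_TASK = auto()
    STOP_TASK = auto()
    DELETE_TASK = auto()
    MANAGE_TARGETS = auto()
    MANAGE_USERS = auto()


# Hypothetical roles; the platform's real role set is not described in the source.
ROLE_PERMS: dict[str, frozenset[Perm]] = {
    "viewer": frozenset({Perm.VIEW_TASK}),
    "operator": frozenset({Perm.VIEW_TASK, Perm.CREATE_TASK, Perm.STOP_TASK}),
    "admin": frozenset(Perm),  # every permission
}


@dataclass
class User:
    name: str
    roles: set[str]
    tenant: str

    def can(self, perm: Perm) -> bool:
        """True if any of the user's roles grants `perm`; unknown roles grant nothing."""
        return any(perm in ROLE_PERMS.get(r, frozenset()) for r in self.roles)


def authorize(user: User, perm: Perm, task_tenant: str) -> bool:
    """Allow an action only if a role grants it and the task is in the user's tenant (admins excepted)."""
    return user.can(perm) and (user.tenant == task_tenant or "admin" in user.roles)
```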

Target Management lets users manage the target cluster nodes used when distributing tasks, along with P2P cluster networking and the status and health of cluster nodes, as described below:

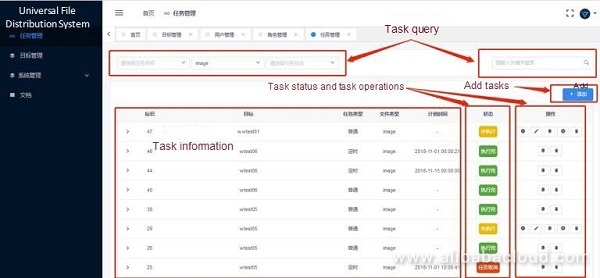

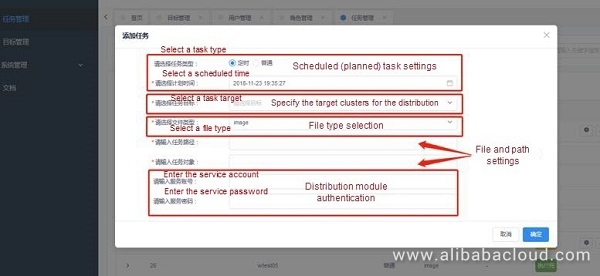
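Tracking node status and health usually comes down to a registry of target nodes with heartbeats: a node that has not reported within a timeout is treated as unhealthy and excluded from new tasks. This is a minimal, hypothetical sketch; the 30-second timeout, the field names, and the heartbeat mechanism are assumptions, not details from the platform.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Node:
    host: str
    cluster: str          # e.g. a host, K8s, or Mesos cluster in the network layer
    last_heartbeat: float


@dataclass
class TargetRegistry:
    timeout: float = 30.0  # assumed: seconds without a heartbeat before a node is unhealthy
    clock: Callable[[], float] = time.monotonic
    nodes: dict[str, Node] = field(default_factory=dict)

    def register(self, host: str, cluster: str) -> None:
        self.nodes[host] = Node(host, cluster, self.clock())

    def heartbeat(self, host: str) -> None:
        self.nodes[host].last_heartbeat = self.clock()

    def remove(self, host: str) -> None:
        self.nodes.pop(host, None)

    def healthy_targets(self, cluster: Optional[str] = None) -> list[str]:
        """Hosts eligible to receive tasks: recently seen and, optionally, in one cluster."""
        now = self.clock()
        return sorted(n.host for n in self.nodes.values()
                      if now - n.last_heartbeat <= self.timeout
                      and (cluster is None or n.cluster == cluster))
```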

Task Management lets users create, delete, and stop file or image distribution tasks and perform other task operations.
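Create, stop, and delete operations are easiest to keep consistent when a task follows an explicit state machine that rejects invalid transitions, such as deleting a task that is still running. The lifecycle below is an assumed one for illustration; the platform's actual task states are not documented in the source.

```python
from enum import Enum


class TaskState(Enum):
    CREATED = "created"
    RUNNING = "running"
    STOPPED = "stopped"
    SUCCEEDED = "succeeded"
    FAILED = "failed"
    DELETED = "deleted"


# Allowed transitions (an assumed lifecycle, not the platform's documented one).
TRANSITIONS: dict[TaskState, set[TaskState]] = {
    TaskState.CREATED: {TaskState.RUNNING, TaskState.DELETED},
    TaskState.RUNNING: {TaskState.STOPPED, TaskState.SUCCEEDED, TaskState.FAILED},
    TaskState.STOPPED: {TaskState.RUNNING, TaskState.DELETED},
    TaskState.SUCCEEDED: {TaskState.DELETED},
    TaskState.FAILED: {TaskState.RUNNING, TaskState.DELETED},
    TaskState.DELETED: set(),
}


class DistributionTask:
    def __init__(self, task_id: str, source: str, targets: list[str]) -> None:
        self.task_id, self.source, self.targets = task_id, source, targets
        self.state = TaskState.CREATED

    def _move(self, new: TaskState) -> None:
        if new not in TRANSITIONS[self.state]:
            raise ValueError(f"{self.task_id}: cannot go from {self.state.value} to {new.value}")
        self.state = new

    def start(self) -> None:
        self._move(TaskState.RUNNING)

    def stop(self) -> None:
        self._move(TaskState.STOPPED)

    def finish(self, ok: bool) -> None:
        self._move(TaskState.SUCCEEDED if ok else TaskState.FAILED)

    def delete(self) -> None:
        # A running task must be stopped (or finish) before it can be deleted.
        self._move(TaskState.DELETED)
```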

The System Analysis feature is expected to be released later. It will provide platform administrators and users with statistical charts of information such as task distribution time, success rate, and task execution efficiency, and it will use data statistics and prediction to make the platform more intelligent.
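The statistics such charts would show can be computed from per-task records. This hypothetical sketch reports the success rate plus the mean and nearest-rank 90th-percentile duration of successful tasks. Excluding failed tasks from the timing figures is a design choice, since a failed task's duration often reflects a timeout rather than distribution speed.

```python
import math
from dataclasses import dataclass
from statistics import mean


@dataclass
class TaskRecord:
    task_id: str
    seconds: float   # wall-clock distribution time
    succeeded: bool


def nearest_rank(sorted_values: list[float], q: float) -> float:
    """Nearest-rank percentile of already-sorted values, e.g. q=0.9 for p90."""
    idx = max(0, math.ceil(q * len(sorted_values)) - 1)
    return sorted_values[idx]


def summarize(records: list[TaskRecord]) -> dict[str, float]:
    """Success rate over all tasks; timing statistics over successful tasks only."""
    total = len(records)
    ok = sorted(r.seconds for r in records if r.succeeded)
    return {
        "tasks": total,
        "success_rate": len(ok) / total if total else 0.0,
        "mean_seconds": mean(ok) if ok else 0.0,
        "p90_seconds": nearest_rank(ok, 0.9) if ok else 0.0,
    }
```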

Active-standby image registry disaster recovery keeps the active and standby registries consistent by synchronizing images between them.
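One way to keep a standby registry consistent is a periodic reconciliation pass: compare each `repository:tag` and its manifest digest on both sides, copy anything missing or different, and optionally remove tags that no longer exist on the active side. The inventories and the `copy_image`/`delete_image` callbacks are hypothetical stand-ins for whatever registry API or replication tool is actually used.

```python
from typing import Callable

# repository:tag -> manifest digest, as each registry reports it (hypothetical inventory).
Inventory = dict[str, str]


def plan_sync(active: Inventory, standby: Inventory) -> tuple[list[str], list[str]]:
    """Return (to_copy, to_delete) so that the standby mirrors the active registry."""
    to_copy = sorted(ref for ref, digest in active.items() if standby.get(ref) != digest)
    to_delete = sorted(ref for ref in standby if ref not in active)
    return to_copy, to_delete


def sync(active: Inventory, standby: Inventory,
         copy_image: Callable[[str], None], delete_image: Callable[[str], None]) -> None:
    """One reconciliation pass; run it periodically or after each push to the active side."""
    to_copy, to_delete = plan_sync(active, standby)
    for ref in to_copy:
        copy_image(ref)            # e.g. pull from active, push to standby
        standby[ref] = active[ref]
    for ref in to_delete:
        delete_image(ref)
        del standby[ref]
```

Comparing digests rather than tags alone catches a tag that was re-pushed with new content on the active side.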

Tai Yun, a contributor in the Dragonfly community, said at a Dragonfly Meetup:

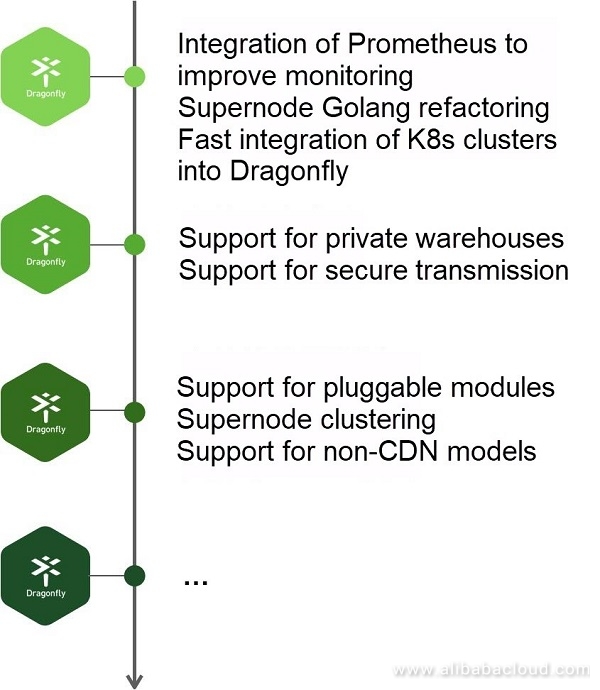

"Dragonfly is now a CNCF sandbox project with 2700+ stars. Many enterprises are using Dragonfly to resolve various problems they have encountered when distributing images and files. We will continuously improve Dragonfly to provide a more powerful and simpler distribution tool for cloud-native applications. I look forward to working with you to make Dragonfly a CNCF 'graduated' project as soon as possible."

We plan to contribute the platform's interface features to the CNCF Dragonfly community to further enrich its content, and we hope more people will join and help improve the community.

Authors:

Chen Yuanzheng, Cloud Computing Architect at China Mobile (Zhejiang)

Wang Miaoxin, Cloud Computing Architect at China Mobile (Zhejiang)

To learn more about Dragonfly, visit https://developer.alibabacloud.com/opensource/project/dragonfly

Official GitHub page: https://github.com/dragonflyoss/Dragonfly
