There is growing attention on reasoning models, AI systems designed to simulate human reasoning in problem solving and decision making. The trend coincides with a gradual shift in AI demand from model training to inference, and with broader discussion of test-time compute (also known as inference compute). Test-time compute refers to giving a model additional processing time while it runs so that its output is more reliable and sensible.

Against this backdrop, Alibaba Cloud has recently released its reasoning AI model QwQ (Qwen with Questions). The released version, QwQ-32B-Preview, is an open-source experimental research model with 32 billion parameters that showcases impressive analytical capabilities.

Note: QwQ is pronounced /kwju:/, similar to the word "quill".

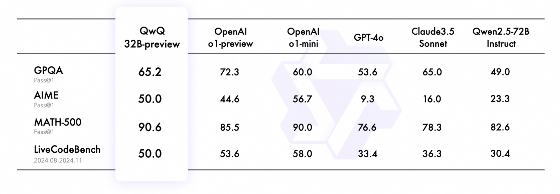

Currently in its preview phase, the model can process prompts of up to 32,000 tokens. It excels at complex problems in mathematics and programming, surpassing state-of-the-art (SOTA) models on benchmarks such as MATH-500 (a comprehensive set of 500 mathematics test cases) and the American Invitational Mathematics Examination (AIME).

QwQ showcases impressive analytical capabilities

The model’s responses to prompts reveal its ability to engage in multi-step reasoning and construct intricate chains of thought. This includes deep introspection: it questions its own assumptions, engages in self-dialogue, and analyzes each step of its reasoning.

Despite these advancements, significant challenges remain. The research team notes in the paper that while the model performs exceptionally well in mathematics and coding, it needs further development in areas such as common-sense reasoning and nuanced language comprehension. QwQ-32B-Preview is available on Hugging Face and ModelScope.

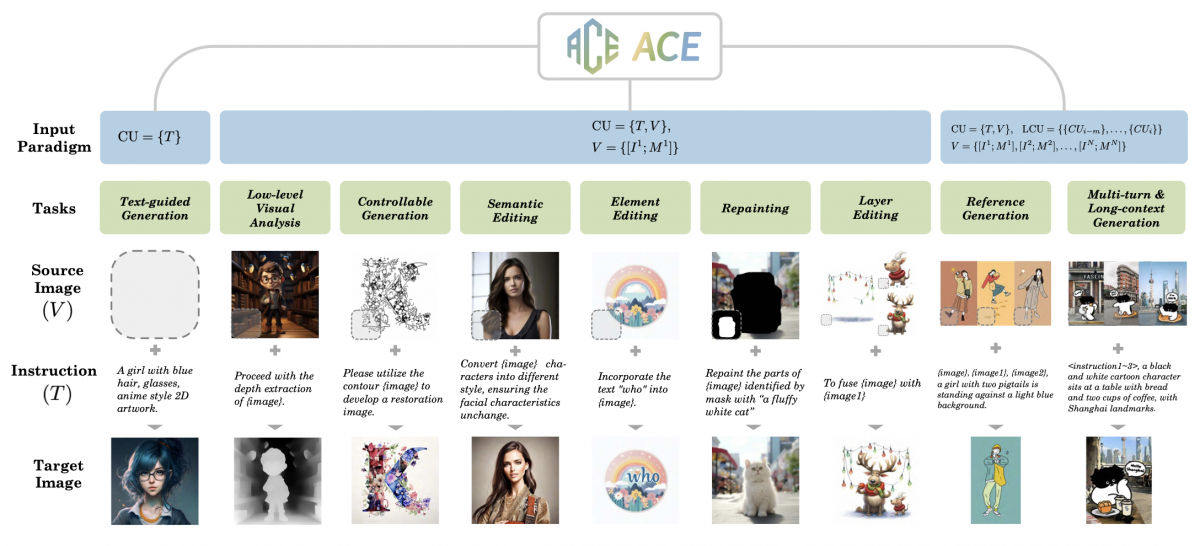

On the multimodal front, Alibaba Cloud also unveiled ACE (All-round Creator and Editor), a unified foundational model framework that supports a wide range of visual generation tasks, including image generation and editing. ACE lets users accomplish complex, precise editing requests through multi-turn interactions. To support this, the research team developed a unified condition format called Long-context Condition Unit (LCU), which accepts multimodal inputs and incorporates long-context conditions to improve comprehension. The team also proposed a novel Transformer-based diffusion model that further improves training across the various generation and editing tasks.

Various image generation and editing tasks supported by ACE

This article was written by Selina Zhang and originally published on Alizila.
