By Xining Wang

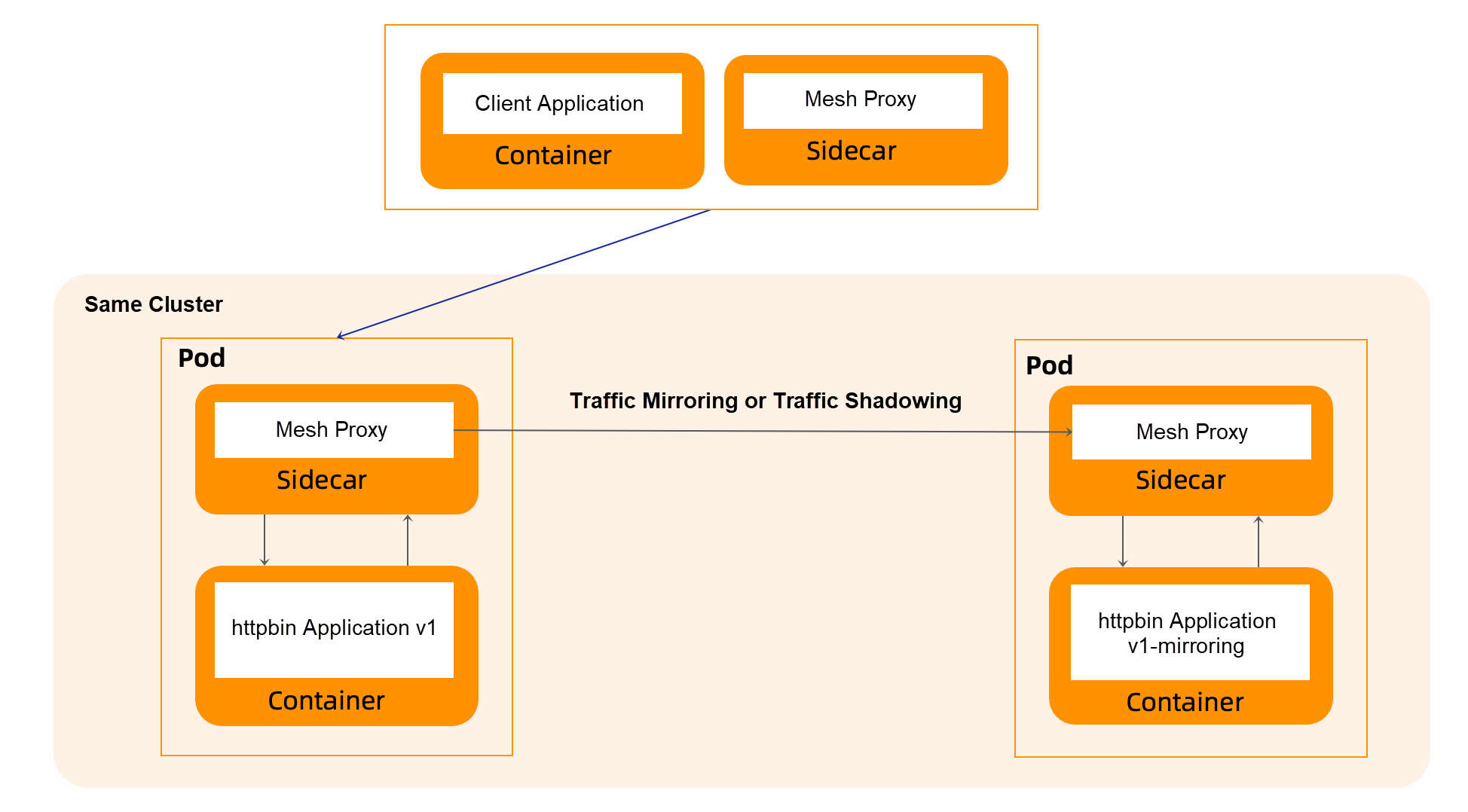

Microservices make rapid development and deployment possible, but they also raise the question of how to reduce the risk that frequent changes introduce. Service mesh technology provides traffic mirroring (also known as traffic shadowing), which sends a copy of live traffic to a mirror service. The mirrored traffic is handled outside the critical request path of the primary service. As a result, production traffic can be copied to a test cluster or a new version and tested there before live traffic is switched over. This reduces the risk of version changes and lets new features reach production with as little risk as possible.
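The key property described above, that mirrored traffic stays off the critical request path, can be illustrated with a small Python asyncio model. It is a conceptual sketch, not how Envoy works internally: `primary_service`, `mirror_service`, and `handle` are hypothetical names, and the 50 ms sleep only stands in for a slow mirror. Istio's documentation describes mirroring as best effort, with the proxy returning the primary destination's response without waiting for the mirror; the model reflects that by answering from the primary service while the copy is processed in the background and its result is ignored.

```python
import asyncio

# Hypothetical model of "fire and forget" request mirroring.

async def primary_service(request: dict) -> str:
    # The primary (v1) service produces the only response the client sees.
    return f"v1 response for {request['path']}"

async def mirror_service(request: dict, received: list) -> str:
    # A deliberately slow mirror; the client must not wait for it.
    await asyncio.sleep(0.05)
    received.append(request)
    return "mirror response (ignored)"

async def handle(request: dict, received: list, pending: set) -> str:
    # Schedule an independent copy of the request for the mirror...
    task = asyncio.create_task(mirror_service(dict(request), received))
    pending.add(task)                        # keep a reference so the task is not garbage-collected
    task.add_done_callback(pending.discard)  # forget the task once it finishes
    # ...and answer the client from the primary service only.
    return await primary_service(request)

async def demo() -> tuple:
    received: list = []
    pending: set = set()
    response = await handle({"path": "/headers"}, received, pending)
    before_reply = len(received)             # 0: the mirror copy has not been processed yet
    await asyncio.gather(*pending)           # let the background mirror work finish
    return response, before_reply, len(received)

if __name__ == "__main__":
    print(asyncio.run(demo()))
```

The client-facing result never depends on the mirror task, which is the property that makes mirroring safe to run against production traffic.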

Traffic mirroring has the following advantages:

We will describe several typical scenarios that can take advantage of traffic mirroring.

The following is a simple YAML snippet that shows how to use Istio to enable traffic mirroring:

```yaml
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: myapp-traffic-mirroring
spec:
  hosts:
  - myapp
  http:
  - route:
    - destination:
        host: myapp.default.svc.cluster.local
        port:
          number: 8000
        subset: v1
      weight: 100
    mirror:
      host: myapp.default.svc.cluster.local
      port:
        number: 8000
      subset: v1-mirroring
```

This VirtualService routes 100% of traffic to the v1 subset and mirrors the same traffic to the v1-mirroring subset: each request sent to v1 is duplicated, and the copy is sent to v1-mirroring.

The quickest way to see this effect is to send some requests to the v1 version of the application and check the application logs.

The response you get when you call the application comes from the v1 subset. However, the same requests also appear in the logs of the v1-mirroring subset.

The following demo shows this in practice. First, all traffic is routed to version v1. Then, rules are added to mirror the traffic to version v1-mirroring.

First, deploy two versions of the httpbin service:

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: httpbin
---
apiVersion: v1
kind: Service
metadata:
  name: httpbin
  labels:
    app: httpbin
    service: httpbin
spec:
  ports:
  - name: http
    port: 8000
    targetPort: 80
  selector:
    app: httpbin
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: httpbin-v1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: httpbin
      version: v1
  template:
    metadata:
      labels:
        app: httpbin
        version: v1
    spec:
      serviceAccountName: httpbin
      containers:
      - image: docker.io/kennethreitz/httpbin
        imagePullPolicy: IfNotPresent
        name: httpbin
        ports:
        - containerPort: 80
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: httpbin-v1-mirroring
spec:
  replicas: 1
  selector:
    matchLabels:
      app: httpbin
      version: v1-mirroring
  template:
    metadata:
      labels:
        app: httpbin
        version: v1-mirroring
    spec:
      serviceAccountName: httpbin
      containers:
      - image: docker.io/kennethreitz/httpbin
        imagePullPolicy: IfNotPresent
        name: httpbin
        ports:
        - containerPort: 80
```

Then, deploy the sleep client application, which is used to send requests with curl:

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: sleep
---
apiVersion: v1
kind: Service
metadata:
  name: sleep
  labels:
    app: sleep
    service: sleep
spec:
  ports:
  - port: 80
    name: http
  selector:
    app: sleep
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: sleep
spec:
  replicas: 1
  selector:
    matchLabels:
      app: sleep
  template:
    metadata:
      labels:
        app: sleep
    spec:
      terminationGracePeriodSeconds: 0
      serviceAccountName: sleep
      containers:
      - name: sleep
        image: curlimages/curl
        command: ["/bin/sleep", "infinity"]
        imagePullPolicy: IfNotPresent
        volumeMounts:
        - mountPath: /etc/sleep/tls
          name: secret-volume
      volumes:
      - name: secret-volume
        secret:
          secretName: sleep-secret
          optional: true
```

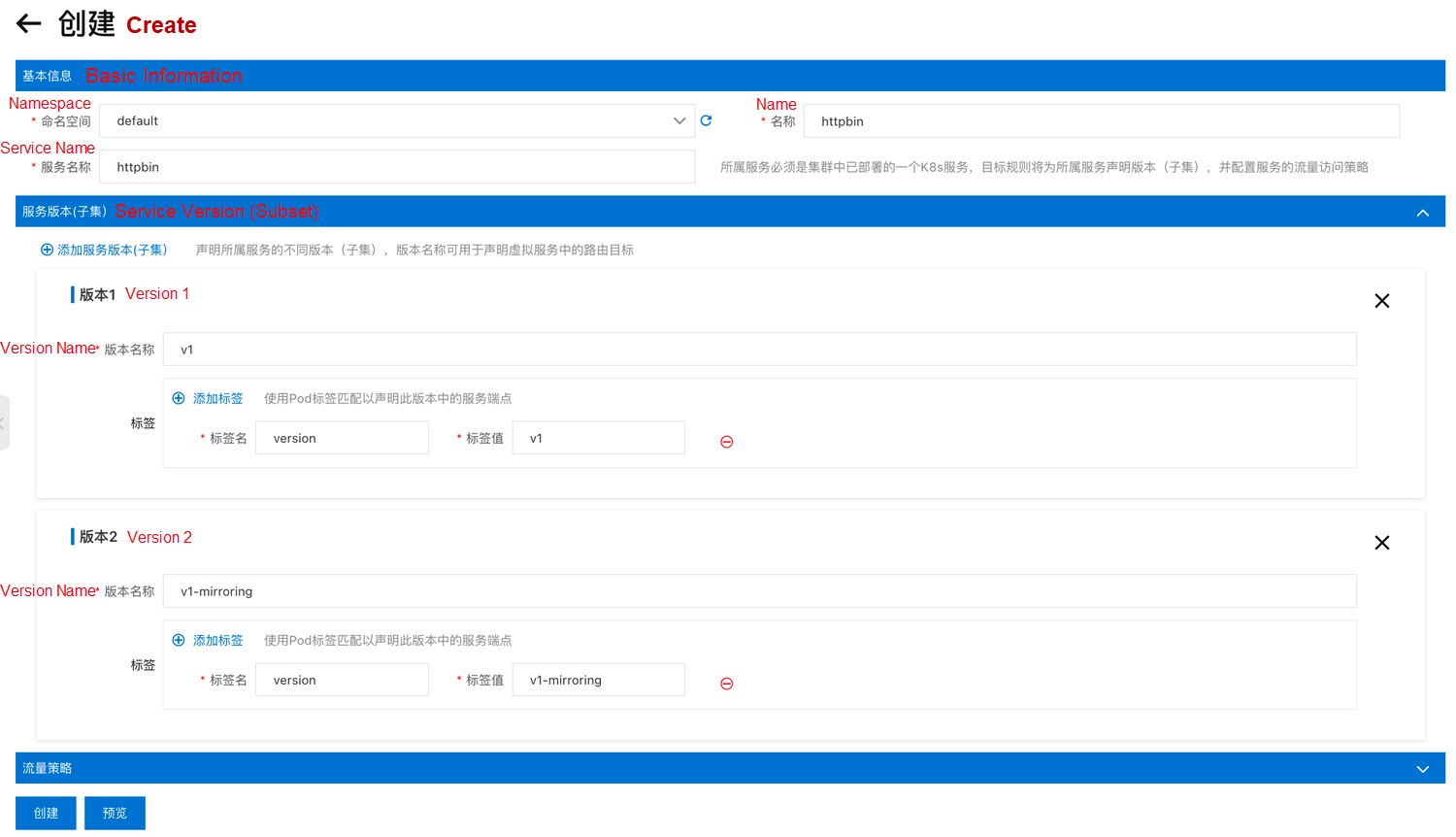

Create a destination rule in the console, as shown in the following figures, defining two subsets: v1 and v1-mirroring.

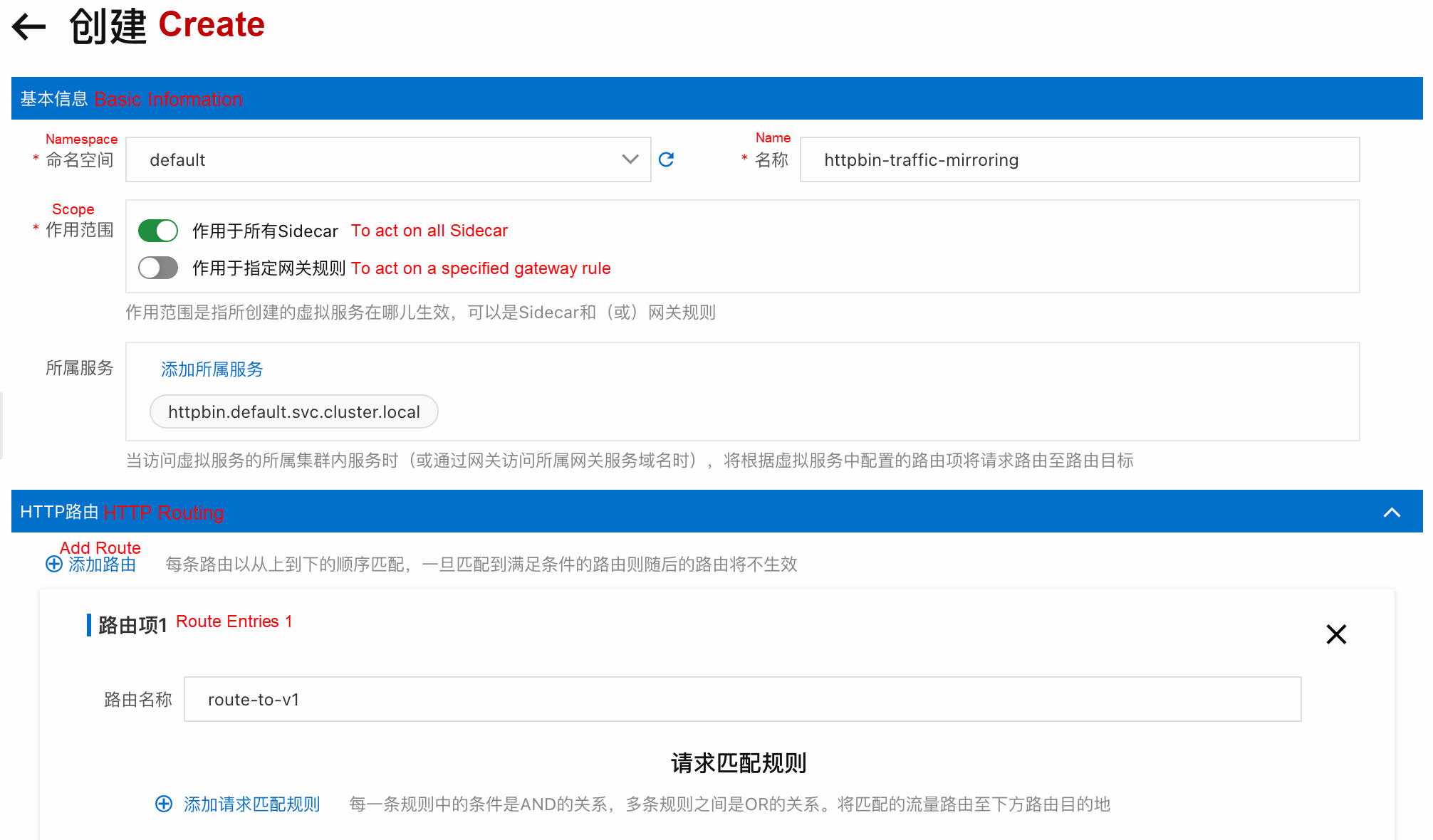

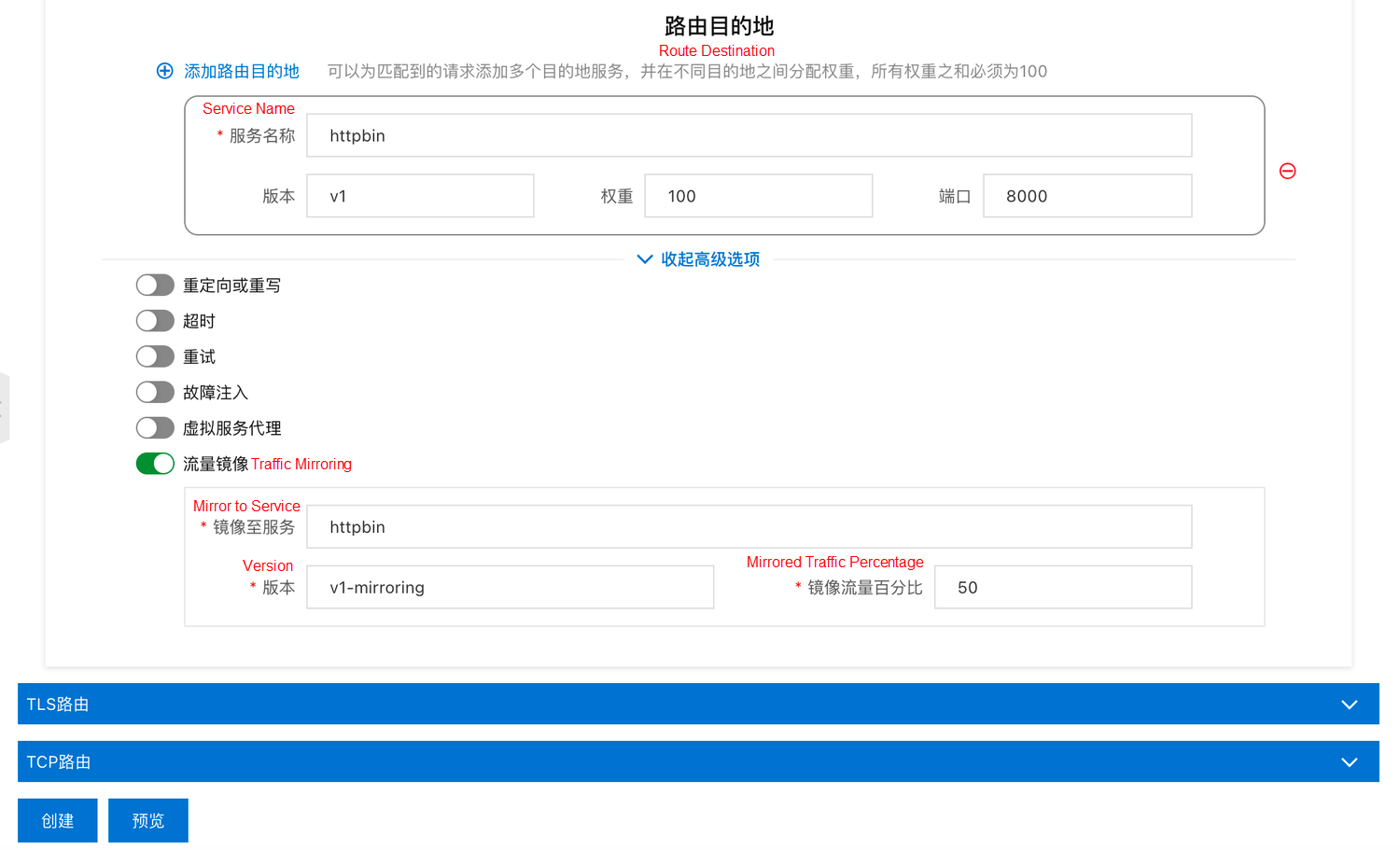

Then, create a virtual service routing policy in the console that routes 100% of traffic to v1 and, at the same time, mirrors the traffic to the v1-mirroring subset.

Alternatively, create the destination rule and the routing policy with the following YAML, which routes 100% of traffic to v1 and mirrors 50% of it to the v1-mirroring subset:

```yaml
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: httpbin
spec:
  host: httpbin
  subsets:
  - name: v1
    labels:
      version: v1
  - name: v1-mirroring
    labels:
      version: v1-mirroring
---
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: httpbin-traffic-mirroring
spec:
  hosts:
  - httpbin
  http:
  - route:
    - destination:
        host: httpbin
        port:
          number: 8000
        subset: v1
      weight: 100
    mirror:
      host: httpbin
      port:
        number: 8000
      subset: v1-mirroring
    mirrorPercentage:
      value: 50
```

After the application pods are running normally, send some traffic to the httpbin service from the sleep application. Because only 50% of requests are mirrored, send several requests rather than a single one:

```shell
export SLEEP_POD=$(kubectl get pod -l app=sleep -o jsonpath='{.items..metadata.name}')
for i in $(seq 1 10); do
  kubectl exec "$SLEEP_POD" -c sleep -- curl -s http://httpbin:8000/headers
done
```

The access logs of both the v1 and v1-mirroring pods now contain records of these requests: every request reaches the v1 pod, and about half of them are mirrored to the v1-mirroring pod, which matches the mirroring policy defined above.

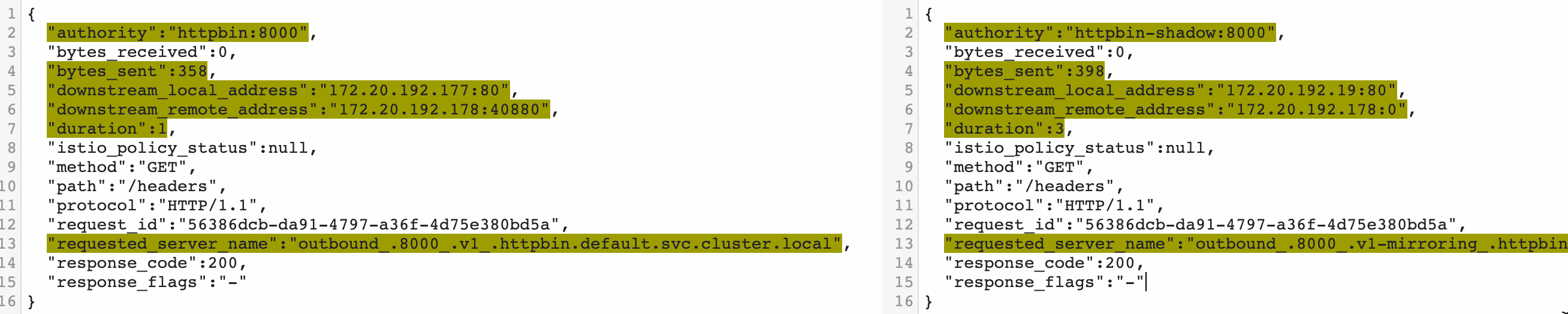
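Because mirrorPercentage is applied to each request independently, the share of mirrored requests seen in the logs only approaches 50% over many requests; with a handful of curl calls it can be noticeably higher or lower. The hypothetical simulation below illustrates this with Python's `random` module. It models the sampling idea only and is not Envoy's implementation; `simulate` and the fixed seed are illustrative choices.

```python
import random

def simulate(num_requests: int, mirror_percentage: float, seed: int = 7) -> tuple:
    """Hypothetical model: count primary hits and mirrored copies."""
    rng = random.Random(seed)             # fixed seed so runs are reproducible
    primary_hits = 0
    mirrored_hits = 0
    for _ in range(num_requests):
        primary_hits += 1                 # route weight 100: every request reaches v1
        if rng.random() * 100 < mirror_percentage:
            mirrored_hits += 1            # a copy is also sent to v1-mirroring
    return primary_hits, mirrored_hits

if __name__ == "__main__":
    for n in (10, 1000):
        print(n, simulate(n, 50.0))
```

With 10 requests the mirrored count can easily differ from 5, while with 1,000 requests it settles close to half, so a small sample in the logs is not by itself a sign of misconfiguration.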

Comparing the two logs shows that the requests received by v1 were mirrored to v1-mirroring. The v1-mirroring log entries are slightly larger than those in v1 because mirrored requests are marked as shadow traffic: Envoy automatically appends -shadow to the authority (Host) header of each mirrored request.

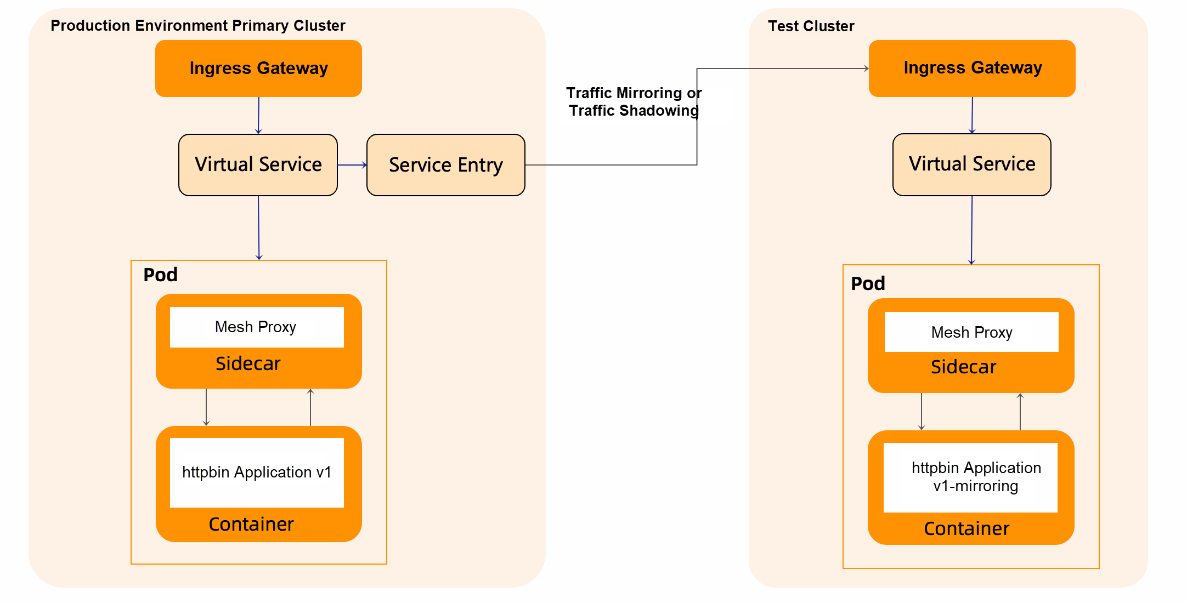
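The -shadow suffix described above can be modeled with a short helper. To our understanding, Envoy inserts the suffix after the host name and before any port, so an authority of `httpbin:8000` becomes `httpbin-shadow:8000`. The function name `shadow_host` is hypothetical, IPv6 literals are deliberately not handled, and the exact format should be checked against your own proxy logs.

```python
def shadow_host(authority: str) -> str:
    """Return the authority a mirrored request would carry (hypothetical helper).

    Models the "-shadow" suffix: it goes after the host name and before a
    numeric port, if there is one. IPv6 literals are not handled.
    """
    host, sep, port = authority.rpartition(":")
    if sep and port.isdigit():
        # "httpbin:8000" -> "httpbin-shadow:8000"
        return f"{host}-shadow:{port}"
    # No port: "httpbin" -> "httpbin-shadow"
    return f"{authority}-shadow"


if __name__ == "__main__":
    for value in ("httpbin:8000", "httpbin", "47.99.56.58"):
        print(value, "->", shadow_host(value))
```

Because a mirrored request keeps the client's original host with this suffix, a receiving VirtualService that matches specific host names rather than "*" may need to account for the -shadow variant.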

Traffic mirroring at the gateway layer is typically used to feed real production traffic into a pre-release environment, so it is mostly used across clusters. This example uses two clusters: cluster A (production) and cluster B (test). Requests arrive at cluster A, and the ingress gateway of cluster A copies the traffic to cluster B, as shown in the following figure:

Deploy one version of the httpbin service in test cluster B, together with a Gateway and a VirtualService that expose it:

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: httpbin
---
apiVersion: v1
kind: Service
metadata:
  name: httpbin
  labels:
    app: httpbin
    service: httpbin
spec:
  ports:
  - name: http
    port: 8000
    targetPort: 80
  selector:
    app: httpbin
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: httpbin-v1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: httpbin
      version: v1
  template:
    metadata:
      labels:
        app: httpbin
        version: v1
    spec:
      serviceAccountName: httpbin
      containers:
      - image: docker.io/kennethreitz/httpbin
        imagePullPolicy: IfNotPresent
        name: httpbin
        ports:
        - containerPort: 80
---
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: httpbin-gateway
spec:
  selector:
    istio: ingressgateway # use istio default controller
  servers:
  - port:
      number: 80
      name: http
      protocol: HTTP
    hosts:
    - "*"
---
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: httpbin
spec:
  hosts:
  - "*"
  gateways:
  - httpbin-gateway
  http:
  - match:
    - uri:
        prefix: /headers
    route:
    - destination:
        host: httpbin
        port:
          number: 8000
```

Then, send a request to the ingress gateway of cluster B to confirm that the test service works properly and returns the expected result:

```shell
curl http://{ingress gateway address of cluster B}/headers
```

The output is similar to the following:

```json
{
  "headers": {
    "Accept": "*/*",
    "Host": "47.99.56.58",
    "User-Agent": "curl/7.79.1",
    "X-Envoy-Attempt-Count": "1",
    "X-Envoy-External-Address": "120.244.218.105",
    "X-Forwarded-Client-Cert": "By=spiffe://cluster.local/ns/default/sa/httpbin;Hash=158e4ef69876550c34d10e3bfbd8d43f5ab481b16ba0e90b4e38a2d53acf134f;Subject=\"\";URI=spiffe://cluster.local/ns/istio-system/sa/istio-ingressgateway-service-account"
  }
}
```

In primary cluster A, the mirror destination is a host name outside the mesh, so add a ServiceEntry that tells the mesh how to reach it. Here, STATIC resolution maps the host to the IP address of cluster B's ingress gateway.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: ServiceEntry
metadata:
  name: httpbin-cluster-b
spec:
  hosts:
  - httpbin.mirror.cluster-b
  location: MESH_EXTERNAL
  ports:
  - number: 80 # Port 80 of the ingress gateway of cluster B.
    name: http
    protocol: HTTP
  resolution: STATIC
  endpoints:
  - address: 47.95.xx.xx # IP address of the ingress gateway of cluster B.
```

Then, create a routing policy with the following YAML. It routes 100% of the traffic to the v1 subset of the httpbin service in the primary cluster and mirrors 50% of it to the service in test cluster B through the host name httpbin.mirror.cluster-b, which the ServiceEntry above maps to the address of cluster B's ingress gateway.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: httpbin-gateway
spec:
  selector:
    istio: ingressgateway # use istio default controller
  servers:
  - port:
      number: 80
      name: http
      protocol: HTTP
    hosts:
    - "*"
---
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: httpbin
spec:
  gateways:
  - httpbin-gateway
  hosts:
  - '*'
  http:
  - match:
    - uri:
        prefix: /headers
    mirror:
      host: httpbin.mirror.cluster-b
      port:
        number: 80
    mirrorPercentage:
      value: 50
    route:
    - destination:
        host: httpbin
        port:
          number: 8000
        subset: v1
```
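Istio expresses mirrorPercentage as a percentage, whereas Envoy routes carry a fraction with a fixed denominator. The following Python sketch assumes the mapping visible in the Envoy configuration dump of this example (a value of 50 becomes 500000 over MILLION, that is, the percentage multiplied by 10,000) and models the per-request decision as `random_value % denominator < numerator`, which is our understanding of how Envoy evaluates such fractions. The names `to_fractional_percent` and `should_mirror` are hypothetical; this is not Istio or Envoy source code.

```python
MILLION = 1_000_000

def to_fractional_percent(mirror_percentage: float) -> dict:
    """Hypothetical: express a percentage as a fraction over one million."""
    if not 0.0 <= mirror_percentage <= 100.0:
        raise ValueError("mirrorPercentage must be between 0 and 100")
    # 1% of a million is 10,000, so scale the percentage by 10,000.
    return {"numerator": int(mirror_percentage * 10_000), "denominator": "MILLION"}

def should_mirror(random_value: int, numerator: int, denominator: int = MILLION) -> bool:
    """Hypothetical per-request check: mirror when the sample falls below the numerator."""
    return random_value % denominator < numerator

if __name__ == "__main__":
    fraction = to_fractional_percent(50)
    print(fraction)
    samples = range(0, MILLION, 1000)  # 1,000 evenly spaced sample values
    print(sum(should_mirror(v, fraction["numerator"]) for v in samples))
```

Using a large fixed denominator lets fractional percentages such as 0.5% be represented as integers (5000 out of a million) without floating-point comparisons at request time.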

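View the Envoy configuration dump of the ingress gateway pod in the primary cluster (for example, with `istioctl proxy-config routes <ingress-gateway-pod> -n istio-system -o json`). The route for /headers contains a request_mirror_policies entry that points to the cluster generated for httpbin.mirror.cluster-b, with a runtime_fraction that expresses the 50% mirrorPercentage. The output is similar to the following: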

```json
"routes": [
  {
    "match": {
      "prefix": "/headers",
      "case_sensitive": true
    },
    "route": {
      "cluster": "outbound|8000|v1|httpbin.default.svc.cluster.local",
      "timeout": "0s",
      "retry_policy": {
        "retry_on": "connect-failure,refused-stream,unavailable,cancelled,retriable-status-codes",
        "num_retries": 2,
        "retry_host_predicate": [
          {
            "name": "envoy.retry_host_predicates.previous_hosts",
            "typed_config": {
              "@type": "type.googleapis.com/envoy.extensions.retry.host.previous_hosts.v3.PreviousHostsPredicate"
            }
          }
        ],
        "host_selection_retry_max_attempts": "5",
        "retriable_status_codes": [
          503
        ]
      },
      "request_mirror_policies": [
        {
          "cluster": "outbound|80||httpbin.mirror.cluster-b",
          "runtime_fraction": {
            "default_value": {
              "numerator": 500000,
              "denominator": "MILLION"
            }
          },
          "trace_sampled": false
        }
      ],
```

Traffic mirroring is primarily used to test services with real production traffic without affecting end clients in any way. It is useful when we rewrite an existing service and want to verify that the new version handles the full variety of incoming requests in the same way, or when we want to compare benchmarks between two versions of the same service. It can also be used to perform additional out-of-band processing on requests asynchronously, such as collecting extra statistics or writing extended log entries.

In practice, mirroring production traffic to a test cluster is an effective way to reduce the risk of new deployments, whether in production or non-production environments, and large Internet companies have been doing this for many years. Service mesh technology provides Layer 7 traffic shadowing, which makes it easy to mirror traffic within a cluster or replicate it across clusters. Traffic mirroring creates a more realistic experimental environment in which we can debug, test, collect data, and replay real traffic, which keeps online changes under control. Moreover, because the service mesh manages these policies uniformly, teams can rely on a single technology stack instead of a complex one, which reduces their cognitive burden.
