By Liu Qiuyang and Cai Jing

In a highly integrated global economy, the digital entertainment industry is growing into a potent driver of cultural and commercial exchange. Against this backdrop, a number of game vendors have launched their games overseas and achieved remarkable success, owing largely to a global server architecture that connects players from around the world. Global deployment expands not only the market share of a game but also the global influence of the game vendor. However, it also poses many technical challenges.

As frequent interaction at low latency is essential to gaming services, a global server architecture is only possible through multi-region deployments. In real-world operations, game vendors usually plan server deployment based on the geographic locations of their target users and their tolerance for delay. The following regions are typically preferred by game vendors:

• US (East) - This region is densely populated and reaches a wide range of players across North America.

• Germany (Frankfurt) - This region is Europe's Internet hub, which facilitates a good online gaming experience for players across Europe.

• Singapore - From this region, game vendors can serve a wide range of players in Southeast Asia.

• Japan (Tokyo) - From this region, game vendors can support players in Japan and South Korea.

Given potential variations in configurations, version updates, and numbers of servers across different regions, how to effectively achieve consistent delivery of game servers on a global scale has become a key challenge in designing a global server architecture. In this article, we will use an example to explain the best practices for achieving consistent delivery of global game servers across multiple regions.

In this example, we plan to deploy servers in the China (Shanghai), Japan (Tokyo), and Germany (Frankfurt) regions. Therefore, we need infrastructure resources in these three regions. If infrastructure resources are heterogeneous and complex, the declarative APIs and consistent delivery provided by cloud-native architectures can fully shield the differences in underlying resources, allowing game O&M engineers to focus more on the game applications and significantly improving the efficiency of game server delivery. Considering the stability of independent management in each region and the complexity of user scheduling, we believe that the best way to achieve consistent game server delivery is to deploy Kubernetes clusters independently in each region and achieve centralized O&M management based on multi-cluster management capabilities.

Therefore, we choose the Distributed Cloud Container Platform for Kubernetes (ACK One) provided by Alibaba Cloud to manage Kubernetes clusters in multiple regions. ACK One is an enterprise-level cloud-native platform designed for scenarios such as hybrid clouds, multiple clusters, and disaster recovery. ACK One can connect to and manage Kubernetes clusters in any region and on any infrastructure, and supports consistent management and control over various aspects, such as applications, traffic, security, storage, and observability.

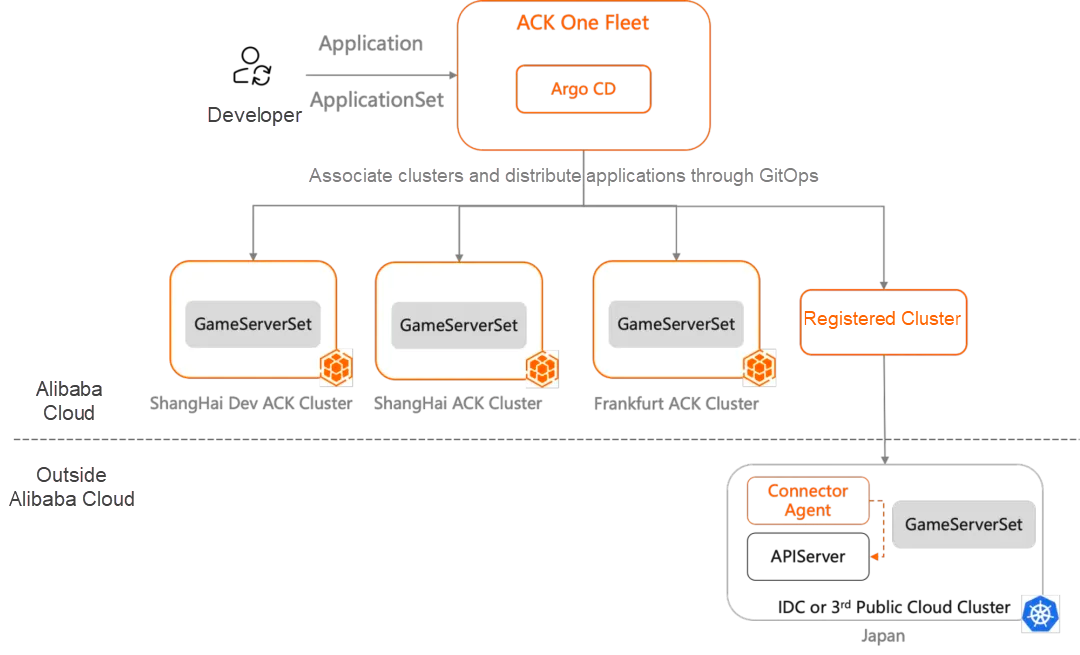

As shown in the following figure, the architecture used in this example includes three production environments in different regions and one development and test environment. In most cases, a version can be deployed to the production environment only after it has been verified and its stability has been confirmed in the development and test environment. This ensures the overall stability of the project and effectively prevents risks.

The architecture used in this example is a multi-cluster hybrid cloud architecture. Specifically, the ShangHai Cluster, Frankfurt Cluster, and ShangHai Dev Cluster are ACK clusters. The cluster in Japan is a Kubernetes cluster outside Alibaba Cloud and is integrated and managed by registration with ACK One. Within the clusters, we use GameServerSets to deploy game servers. A GameServerSet is a game-specific workload provided by OpenKruise, an open-source project incubated by the Cloud Native Computing Foundation (CNCF). Compared with native Deployment and StatefulSet workloads, GameServerSet workloads provide game semantics and are more relevant to game scenarios, making O&M management of game servers more convenient and efficient.
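To make the workload concrete, a GameServerSet manifest might look roughly like the following. This is a minimal sketch assuming the OpenKruiseGame v1alpha1 API; the resource name, namespace, replica count, and image are illustrative placeholders, not values taken from the chart used in this example.

```yaml
apiVersion: game.kruise.io/v1alpha1
kind: GameServerSet
metadata:
  name: minecraft              # illustrative name
  namespace: default           # illustrative namespace
spec:
  replicas: 3                  # number of game servers to run
  updateStrategy:
    rollingUpdate:
      podUpdatePolicy: InPlaceIfPossible   # prefer in-place updates over Pod re-creation
  gameServerTemplate:          # Pod template used for each game server
    spec:
      containers:
        - name: minecraft
          image: registry.example.com/minecraft-demo:1.12.2   # hypothetical image
```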

After preparing Kubernetes clusters, we use an ACK One Fleet instance to manage all the clusters, either hosted on the cloud or deployed in data centers:

First, register clusters deployed in data centers or on third-party public clouds with ACK One using the Registered Clusters feature[1]. Specifically:

Create a registered cluster in the ACK console[2], and then run the kubectl apply -f agent.yaml command in the cluster to register it. The cluster deployed in Japan is now registered with Alibaba Cloud.

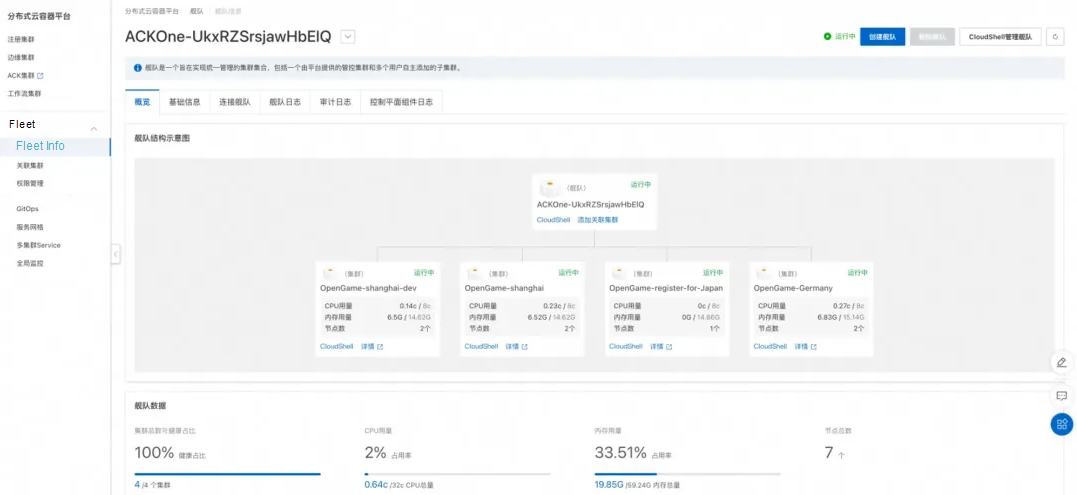

Then, enable Fleet management[3] and associate the registered cluster and the cloud clusters in the ACK One console[4]. Because the clusters are deployed in different regions, the ACK One Fleet instance associates them over the Internet, so an Internet NAT gateway must be configured for the virtual private cloud (VPC) where the Fleet instance resides.

At this point, a multi-cluster Fleet instance is ready, as shown in the following figure.

Before introducing the specific deployment operations, let's get acquainted with the principle of cloud-native delivery. Cloud-native is declarative rather than process-oriented, which means that the delivery of a cloud-native application focuses on the definition of the application rather than the deployment process. The definition of an application is in its YAML file, which describes what the application should be like by configuring parameters. All changes and deployments of an application are in fact changes to the description in its YAML file.

Therefore, we need a repository to store the YAML file, record the current description of the application, and trace and audit history changes to the YAML file. Git repositories perfectly meet such requirements. O&M engineers can upload YAML files by using commits in merge requests. Permission management and auditing of uploaded YAML files follow the guidelines of Git repositories. Ideally, we only need to maintain one set of YAML description files of game servers and use the files to simultaneously deploy game servers in multiple regions around the globe, without having to manage and deploy the clusters one by one. This is the idea of GitOps.

The biggest challenge in implementing GitOps is the abstract description of game server applications. As mentioned at the beginning of this article, game servers deployed in different regions differ to some degree, so it is difficult to describe all of them in one YAML file. Assume that we need to deploy 10 game servers in Shanghai and three in Frankfurt. In this case, we cannot describe all the game servers in one YAML file because the value of the replicas field differs between the two regions. So, do we have to maintain a separate YAML file for each region? This is not a reasonable approach, either. If we need to change the value of a non-differential field, for example, to add a tag to all game servers in every region, we have to modify all YAML files one by one. If the number of clusters is large, this process is very prone to omissions or errors, which contradicts the idea of cloud-native delivery.

We can use Helm charts to further abstract game server applications and extract their differential fields as values. In our example (see Example of Game Server Helm Charts on GitHub[5]), we can extract the following differential fields:

• Primary image - The primary image of game servers in each region or cluster may vary.

• Sidecar image - The sidecar image of game servers in each region or cluster may vary.

• Replicas - The number of game servers to be deployed in each cluster or region may vary.

• Auto scaling - The auto scaling requirements of game servers in each region or cluster may vary.

All the other fields are kept consistent for game servers across different regions.
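As an illustration, the differential fields could be collected in a values.yaml file along the following lines. Only replicas and scaled.enabled are names that appear later in this article; the two image keys and the image references are hypothetical, so check the chart[5] for the names it actually exposes.

```yaml
# values.yaml (sketch): defaults shared by all clusters.
# Per-cluster overrides are supplied at deployment time.
replicas: 3                  # number of game servers in the cluster
scaled:
  enabled: false             # set to true to let the autoscaler control the number of game servers
# The two keys below are hypothetical names for the image fields.
mainImage: registry.example.com/game/server:v1.0
sidecarImage: registry.example.com/game/sidecar:v1.0
```

Everything that does not appear in this file stays in the chart templates and is therefore identical in every region.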

Now that we understand GitOps and have abstracted the game server application, we can use ACK One GitOps to deploy it. Perform the following steps.

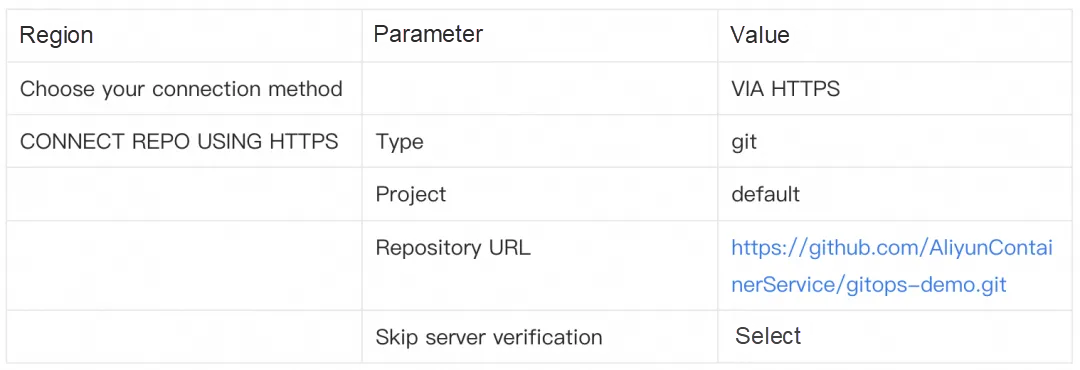

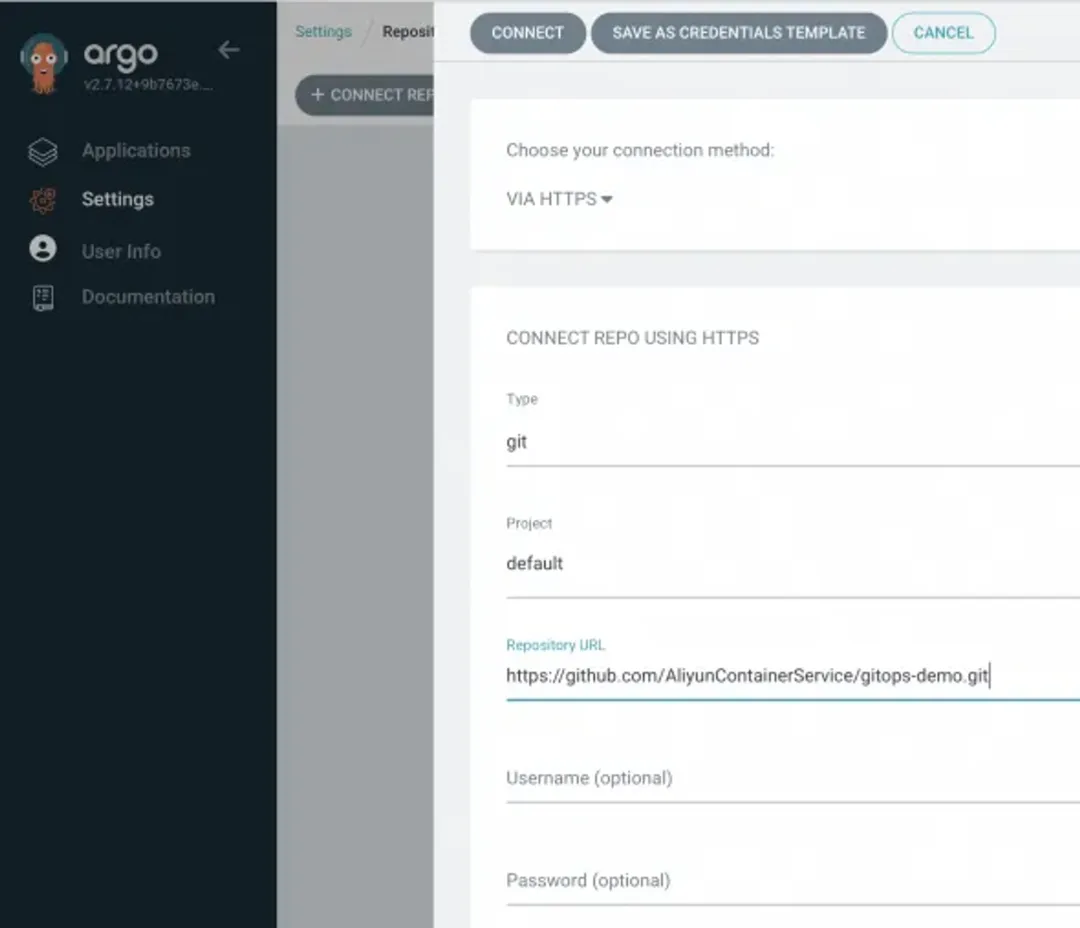

Log on to the Argo CD UI: In the left-side navigation pane of the ACK One console, choose Fleet > GitOps. On the GitOps page, click GitOps Console. Click LOG IN VIA ALIYUN to log on to the Argo CD UI. If public access to Argo CD is needed, configure public access on the GitOps page[6].

PvE games usually have servers deployed in different regions. In most cases, O&M engineers manually control the number of activated game servers in each region. For best practices of cloud-native PvE games, see Kruise-Game: Best Practices for Traditional PvE Games[7].

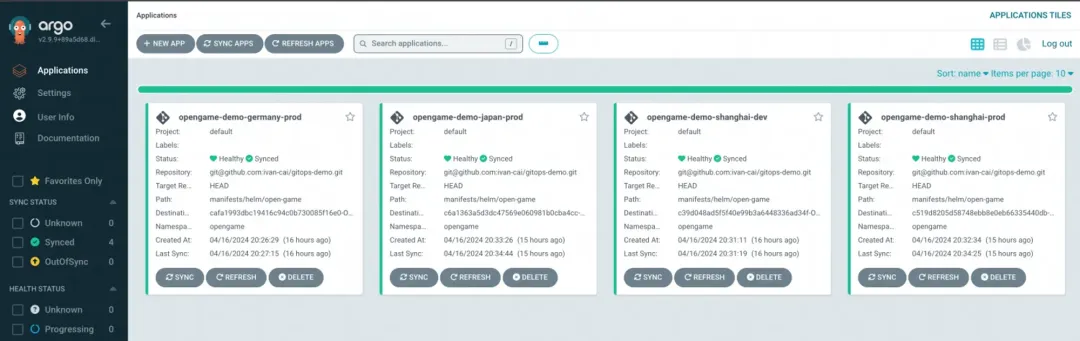

When using Argo CD for the first time, we can use the visualized console to create applications for clusters in each region.

1. In the left-side navigation pane of the Argo CD UI, choose Applications. On the Applications page, click + NEW APP.

2. In the panel that appears, configure the following information and click CREATE. (In this example, an application named opengame-demo-shanghai-dev is created.)

| Section | Parameter | Value |
| --- | --- | --- |
| GENERAL | Application Name | opengame-demo-shanghai-dev |
| | Project Name | default |
| | SYNC POLICY | Select Automatic from the drop-down list. Valid values: Manual (manually synchronize changes in the Git repository) and Automatic (automatically synchronize changes in the Git repository every three minutes). |
| | SYNC OPTIONS | Select AUTO-CREATE NAMESPACE. |
| SOURCE | Repository URL | Select an existing Git repository from the drop-down list. In this example, select https://github.com/AliyunContainerService/gitops-demo.git |
| | Revision | HEAD |
| | Path | manifests/helm/open-game |
| DESTINATION | Cluster URL/Cluster Name | Select a cluster from the drop-down list. |
| | Namespace | opengame |
| HELM | VALUES FILES | values.yaml |
| HELM PARAMETERS | replicas | Set the parameter to 3 to deploy three game servers. |
| | scaled.enabled | Set the parameter to false to disable auto scaling. |

3. You can check the status of the newly created application named opengame-demo-shanghai-dev on the Applications page. If SYNC POLICY is set to Manual, you need to click SYNC to manually deploy the application in the specified cluster. After the status of the application changes to Healthy and Synced, the application is deployed.

4. Click the name of the application to view the application details. The details page displays the topology and status of Kubernetes resources used by the application.
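The same application can also be described declaratively instead of through the console. The following sketch mirrors the values in the table above as an Argo CD Application. The destination URL is the masked shanghai-dev endpoint used elsewhere in this article, and the argocd namespace is assumed from the kubectl command used later for the ApplicationSet.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: opengame-demo-shanghai-dev
  namespace: argocd                      # assumed Argo CD namespace
spec:
  project: default
  source:
    repoURL: https://github.com/AliyunContainerService/gitops-demo.git
    targetRevision: HEAD
    path: manifests/helm/open-game
    helm:
      valueFiles:
        - values.yaml
      parameters:
        - name: replicas
          value: '3'                     # deploy three game servers
        - name: scaled.enabled
          value: 'false'                 # disable auto scaling
  destination:
    server: https://47.100.237.xxx:6443  # masked placeholder; use your cluster endpoint
    namespace: opengame
  syncPolicy:
    automated: {}                        # corresponds to SYNC POLICY = Automatic
    syncOptions:
      - CreateNamespace=true             # corresponds to AUTO-CREATE NAMESPACE
```

Keeping this manifest in Git alongside the chart means the application definition itself is versioned and auditable, in line with the GitOps approach described earlier.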

With a basic understanding of Argo CD, we can use ApplicationSet to quickly deploy game servers. The differences between clusters are extracted as elements. For example, in the following YAML file, three fields are abstracted from the cluster dimension. The cluster field indicates the application name, the url field indicates the cluster endpoint, and the replicas field indicates the number of game servers deployed in the cluster.

After you write the YAML file of an ApplicationSet, deploy the file to the ACK One Fleet instance. Four applications are automatically created.

    kubectl apply -f pve.yaml -n argocd

    # The pve.yaml file contains the following content:
    apiVersion: argoproj.io/v1alpha1
    kind: ApplicationSet
    metadata:
      name: minecraft
    spec:
      generators:
      - list:
          elements:
          - cluster: shanghai-dev
            url: https://47.100.237.xxx:6443
            replicas: '1'
          - cluster: shanghai-prod
            url: https://47.101.214.xxx:6443
            replicas: '3'
          - cluster: frankfurt-prod
            url: https://8.209.103.xxx:6443
            replicas: '2'
          - cluster: japan-prod
            url: https://10.0.0.xxx:6443
            replicas: '2'
      template:
        metadata:
          name: '{{cluster}}-minecraft'
        spec:
          project: default
          source:
            repoURL: 'https://github.com/AliyunContainerService/gitops-demo.git'
            targetRevision: HEAD
            path: manifests/helm/open-game
            helm:
              valueFiles:
              - values.yaml
              parameters: # Corresponding to the parameter values extracted from Helm charts
              - name: replicas
                value: '{{replicas}}'
              - name: scaled.enabled
                value: 'false'
          destination:
            server: '{{url}}'
            namespace: game-server # Set the deployment destination to the game-server namespace in the cluster.
          syncPolicy:
            syncOptions:
            - CreateNamespace=true # Automatically create the namespace if it does not exist in the cluster.

In this YAML file, all image versions are the same. If you want the image versions of different clusters to vary, you can add new parameters in the same way as replicas.
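For instance, a per-cluster image could be passed through the generator as sketched below. Only the parts of the ApplicationSet that change are shown. The element key image, the Helm parameter name mainImage, and the image references are hypothetical and must match whatever the chart actually exposes.

```yaml
# Fragment of pve.yaml (sketch): only the fields that change are shown.
spec:
  generators:
  - list:
      elements:
      - cluster: shanghai-prod
        url: https://47.101.214.xxx:6443
        replicas: '3'
        image: registry.example.com/game/server:v1.0   # hypothetical image reference
      - cluster: frankfurt-prod
        url: https://8.209.103.xxx:6443
        replicas: '2'
        image: registry.example.com/game/server:v1.1   # hypothetical image reference
  template:
    spec:
      source:
        helm:
          parameters:
          - name: replicas
            value: '{{replicas}}'
          - name: mainImage            # hypothetical parameter name
            value: '{{image}}'
```

With this pattern, rolling a new version out to one region first only requires changing that region's image element in Git.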

For a PvP game, the number of room servers is adjusted by the autoscaler of the game, rather than being manually specified by O&M engineers. For best practices of cloud-native PvP games, see Kruise-Game: Best Practice for Session-Based Games (PvP Room)[8].

In OpenKruiseGame (OKG), we implement elastic scaling of room servers by configuring the ScaledObject object for the GameServerSet. Therefore, scaled.enabled must be set to true in this scenario. In addition, the number of room server replicas is controlled by Argo CD and OKG, which conflict with each other. This can be solved by configuring the spec.ignoreDifferences field so that Argo CD ignores changes in the number of GameServerSet replicas. Therefore, the YAML file of the PvP game contains the following content:

    apiVersion: argoproj.io/v1alpha1
    kind: ApplicationSet
    metadata:
      name: pvp
    spec:
      generators:
      - list:
          elements:
          - cluster: shanghai-dev
            url: https://47.100.237.xxx:6443
          - cluster: shanghai-prod
            url: https://47.101.214.xxx:6443
          - cluster: frankfurt-prod
            url: https://8.209.103.xxx:6443
          - cluster: japan-prod
            url: https://10.0.0.xxx:6443
      template:
        metadata:
          name: '{{cluster}}-pvp'
        spec:
          project: default
          ignoreDifferences: # Set this parameter so that the number of replicas of the minecraft GameServerSet is controlled by the cluster itself.
          - group: game.kruise.io
            kind: GameServerSet
            name: minecraft
            namespace: game # Namespace of the GameServerSet; adjust it if the GameServerSet runs in another namespace.
            jsonPointers:
            - /spec/replicas
          source:
            repoURL: 'https://github.com/AliyunContainerService/gitops-demo.git'
            targetRevision: HEAD
            path: manifests/helm/open-game
            helm:
              valueFiles:
              - values.yaml
          destination:
            server: '{{url}}'
            namespace: pvp-server
          syncPolicy:
            syncOptions:
            - CreateNamespace=true

This article uses a practical example to illustrate the best practices for achieving consistent delivery of global game servers across multiple regions using ACK One. The example involves four Kubernetes clusters and a simple YAML file for deploying game servers. In real-world production environments, the number of clusters is often larger, and game server applications tend to have more complex configurations. The crucial step is to abstract the application.

[1] Overview of registered clusters

https://www.alibabacloud.com/help/en/ack/distributed-cloud-container-platform-for-kubernetes/user-guide/overview-9

[2] Create a registered cluster in the ACK console

https://www.alibabacloud.com/help/en/ack/distributed-cloud-container-platform-for-kubernetes/user-guide/create-a-cluster-registration-proxy-and-register-a-kubernetes-cluster-deployed-in-a-data-center

[3] Enable Fleet management

https://www.alibabacloud.com/help/en/ack/distributed-cloud-container-platform-for-kubernetes/user-guide/enable-fleet-management

[4] ACK One console

https://account.aliyun.com/login/login.htm?oauth_callback=https%3A%2F%2Fcs.console.aliyun.com%2Fone

[5] Example of Game Server Helm Charts on GitHub

https://github.com/AliyunContainerService/gitops-demo/tree/main/manifests/helm/open-game

[6] Enable public access to Argo CD

https://www.alibabacloud.com/help/en/ack/distributed-cloud-container-platform-for-kubernetes/user-guide/enable-gitops-public-network-access

[7] Kruise-Game: Best Practices for Traditional PvE Games

https://www.alibabacloud.com/blog/kruise-game-best-practices-for-traditional-pve-games_600822

[8] Kruise-Game: Best Practice for Session-Based Games (PvP Room)

https://www.alibabacloud.com/blog/kruise-game-best-practice-for-session-based-games-pvp-room_600547
