By Shaoyu Huang and Yuanhong Peng

At the Apsara Conference in October 2021, LifseaOS, an operating system built for cloud-native workloads, was officially released. It was integrated into the managed node pools of Alibaba Cloud Container Service for Kubernetes (ACK) Pro as an operating system option.

Not long ago, LifseaOS was officially open-sourced in the OpenAnolis community. Users can build and customize their own container-specific OS based on the LifseaOS source code.

Any discussion of LifseaOS has to begin with its main use case: containers.

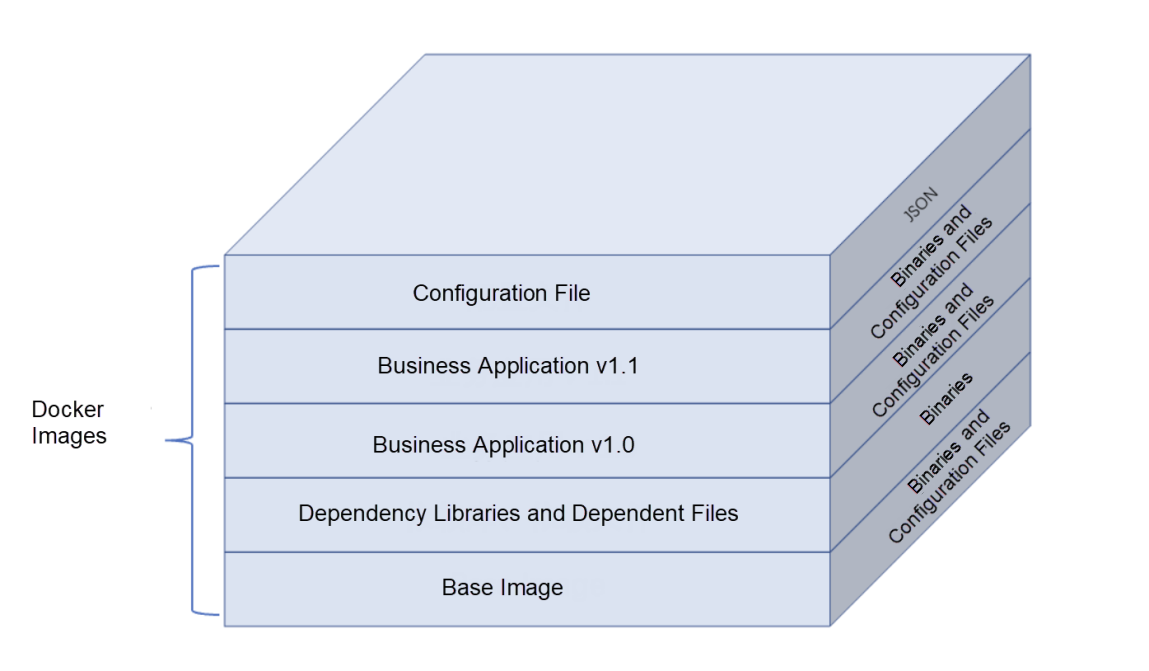

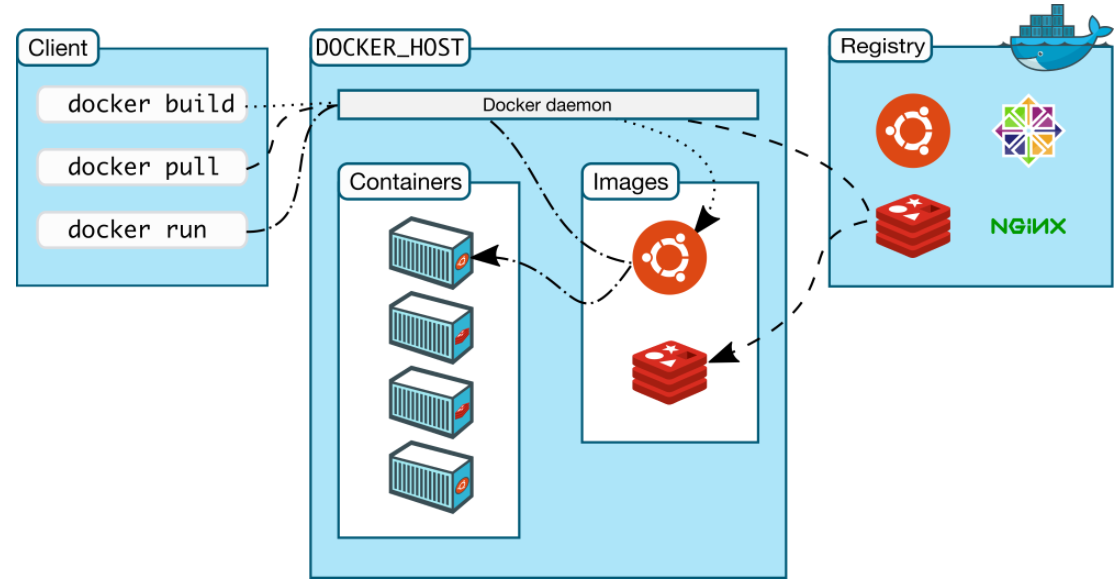

From the earliest UNIX chroot to Linux LXC, early container runtime technology based on cgroups and namespaces kept evolving, but without a breakthrough. That changed in 2013, when Docker appeared and popularized containers. After a few years of development, container technology has become a mainstream IT infrastructure technology and is widely used. The rapid development of container technology owes much to Docker. Looking back at Docker's initial work, we can see that it did not introduce disruptive technological changes. Its core innovations fall into two parts: the container image and the RESTful API for managing containers.

These two innovations revolutionized development, integration, and deployment. First, the container image gives DevOps a convenient workflow. Developers have full control of the application's execution environment and can put their work straight into production without worrying about environmental factors such as operating system compatibility and library dependencies, realizing Docker's slogan: Build, Ship, and Run Any App, Anywhere. Second, the RESTful API makes container lifecycle management more convenient. With orchestration tools managing containers, SREs can deploy, upgrade, and take applications offline quickly and uniformly, a qualitative leap in application management from "pets" to "cattle".

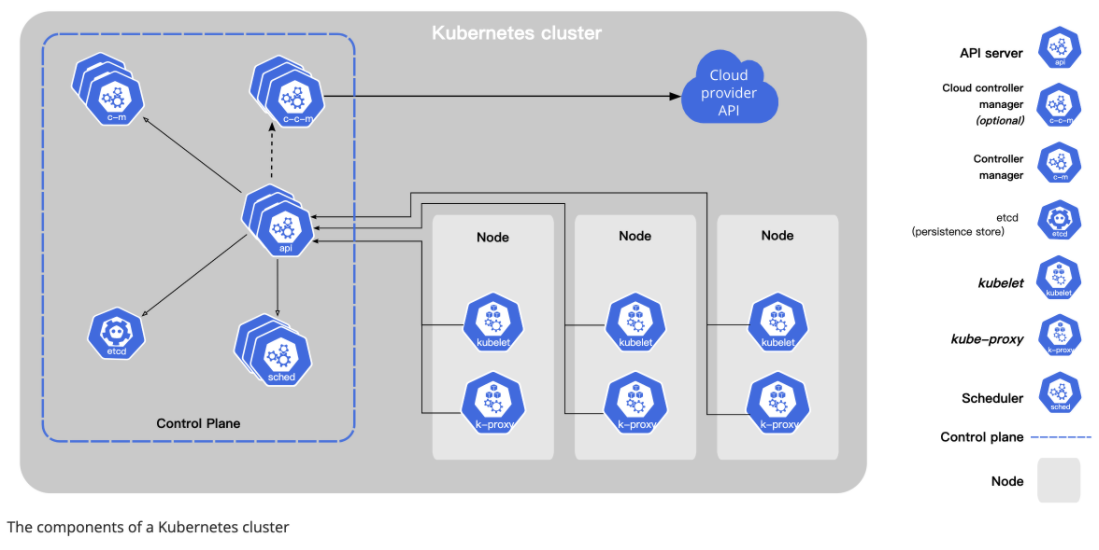

The development of container technology has given rise to many related technologies, such as container orchestration, container storage, and container networking. These fields are closely integrated and together form the cloud-native ecosystem. Since 2015, a complete cloud-native "operating system" has taken shape around Kubernetes. With Kubernetes, you can deploy containers in a cluster quickly and efficiently without having to handle complex cluster resource allocation and container scheduling yourself.

In the move to cloud-native, one component has lagged behind: the operating system (OS). Although the OS keeps a low profile, it plays a pivotal role as the bridge between hardware and software, silently providing applications with single-machine resource management and an execution environment. However, in cloud-native scenarios, traditional operating systems show a number of shortcomings:

Think back to the time before Docker appeared: application O&M personnel faced similar problems, such as how to match an application's execution environment to different OS environments and how to manage applications conveniently and quickly. Docker solved these problems well. Can we borrow Docker's ideas to solve the problems of OS O&M?

The industry already has several container-optimized operating systems (often called ContainerOS), including AWS Bottlerocket, Red Hat Fedora CoreOS, and Rancher RancherOS. Most of them share the following features:

These characteristics confirm our thinking. Immutable OS images can solve the problem of scattered versions, and APIs can solve the problem of cluster O&M. Moreover, if O&M can be driven through APIs, can we also treat the OS as a resource that Kubernetes manages, and let Kubernetes manage the OS the way it manages containers?

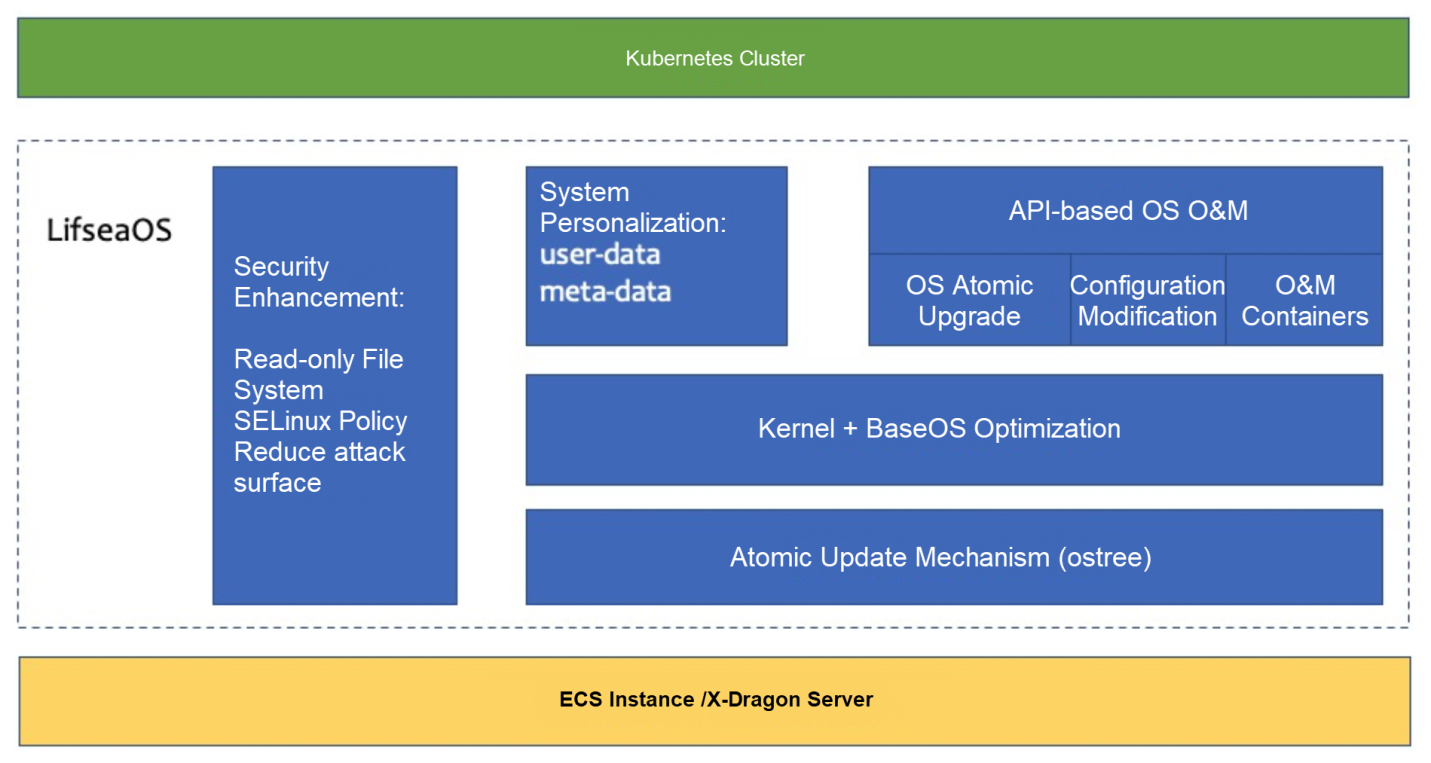

Based on the above thinking, we have launched LifseaOS, a cloud-native OS.

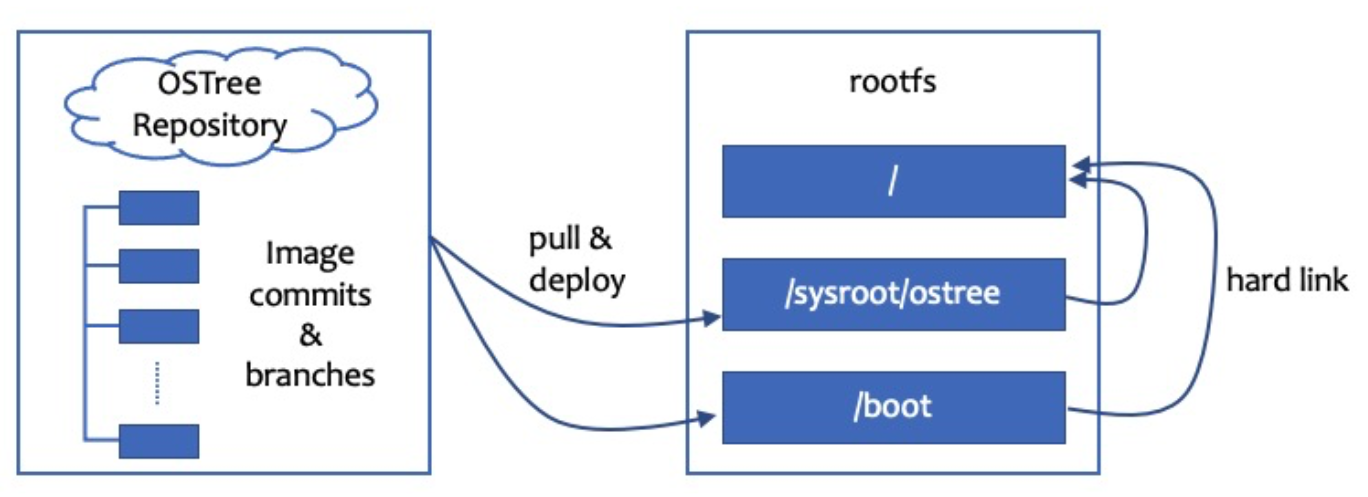

LifseaOS uses ostree to upgrade and roll back images atomically. In addition, extensive optimizations make the OS as a whole lighter, faster, and more secure.
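To make the atomic upgrade-and-rollback idea concrete, here is a toy Python model. It is not ostree's API and not LifseaOS code; `Deployment`, `HostOS`, and their methods are hypothetical names. It only captures the property described above: a whole new image is staged beside the running one, the switch happens as a single step at reboot, and the previous image stays available for rollback.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Deployment:
    """A complete, immutable OS image; a hypothetical stand-in for an ostree commit."""
    version: str
    checksum: str


@dataclass
class HostOS:
    """Toy model of image-based updates: the host runs exactly one deployment,
    and switching images is a single pointer change, not many per-package edits."""
    booted: Deployment
    previous: Optional[Deployment] = None
    staged: Optional[Deployment] = None

    def stage(self, image: Deployment) -> None:
        # Prepare the new image next to the running one; the booted image is not modified.
        self.staged = image

    def reboot(self) -> None:
        # All-or-nothing switch: the staged image becomes current, the old one is kept.
        if self.staged is not None:
            self.previous, self.booted, self.staged = self.booted, self.staged, None

    def rollback(self) -> None:
        # Rolling back swaps back to the previous complete image.
        if self.previous is not None:
            self.booted, self.previous = self.previous, self.booted


host = HostOS(booted=Deployment("v1", "c0ffee"))
host.stage(Deployment("v2", "beef42"))
print(host.booted.version)  # v1: staging alone does not change the running OS
host.reboot()
print(host.booted.version)  # v2
host.rollback()
print(host.booted.version)  # v1
```

Because the unit of change is a whole image, there is never a half-upgraded state where some packages are new and others old.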

We also provide an OS O&M tool whose functionality is still being expanded. Users carry out OS maintenance operations by invoking this tool through Alibaba Cloud Assistant, which limits ad hoc operations on the OS and allows them to be audited.
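The tool's internals are not described here. As a hedged sketch of the general pattern (a narrow, allowlisted set of operations plus an audit trail), the following Python class shows the idea. `AuditedOpsTool`, the operation names, and the log format are all hypothetical, and the Cloud Assistant invocation path is not modeled.

```python
import json
import time
from typing import Callable, Dict, List


class AuditedOpsTool:
    """Hypothetical O&M entry point: only allowlisted operations run, every call is logged."""

    def __init__(self, operations: Dict[str, Callable[..., str]]):
        self.operations = operations
        self.audit_log: List[str] = []

    def invoke(self, caller: str, op: str, **kwargs: str) -> str:
        allowed = op in self.operations
        if not allowed:
            result = "rejected: operation not in allowlist"
        else:
            try:
                result = self.operations[op](**kwargs)
            except Exception as exc:  # a failed operation is still recorded
                result = f"error: {exc}"
        # One JSON line per attempt, including rejected and failed ones.
        self.audit_log.append(json.dumps({
            "time": time.time(), "caller": caller, "op": op,
            "args": kwargs, "allowed": allowed, "result": result,
        }))
        return result


tool = AuditedOpsTool({"restart_kubelet": lambda: "ok"})
print(tool.invoke("ops-user", "restart_kubelet"))  # ok
print(tool.invoke("ops-user", "install_rpm", name="vim"))  # rejected: operation not in allowlist
print(len(tool.audit_log))  # 2
```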

API-based O&M matters even more for the OS in cloud-native environments. By connecting a Kubernetes controller to the O&M API and combining it with OS image versioning, Kubernetes can manage the host OS much as it manages containers.
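A minimal sketch of such a controller's core logic follows, written as plain Python rather than as a real Kubernetes controller. In practice this would be built on a Kubernetes client library watching node objects; here `Node`, `reconcile`, and the `upgrade` callback (standing in for the O&M API) are hypothetical. It shows the level-triggered pattern: compare desired and actual OS versions, then act on a bounded number of nodes per pass.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Node:
    name: str
    current_version: str  # OS image version the node reports (hypothetical field)
    desired_version: str  # OS image version requested for the node (hypothetical field)


def reconcile(nodes: List[Node],
              upgrade: Callable[[str, str], bool],
              max_unavailable: int = 1) -> List[str]:
    """One pass of a level-triggered reconcile loop.

    Compares desired and current OS versions and calls the O&M API stand-in
    `upgrade(node_name, version)` for at most `max_unavailable` nodes, so a
    repeated loop rolls the new image through the cluster a few nodes at a time.
    Returns the names of the nodes upgraded in this pass.
    """
    upgraded: List[str] = []
    for node in nodes:
        if len(upgraded) >= max_unavailable:
            break  # remaining nodes are handled by later passes
        if node.current_version == node.desired_version:
            continue  # already converged
        if upgrade(node.name, node.desired_version):
            node.current_version = node.desired_version
            upgraded.append(node.name)
        # On failure the node is left unchanged and retried on the next pass.
    return upgraded


def fake_upgrade(name: str, version: str) -> bool:
    return True  # pretend every O&M API call succeeds


cluster = [Node("node-a", "v1", "v2"), Node("node-b", "v2", "v2"), Node("node-c", "v1", "v2")]
print(reconcile(cluster, fake_upgrade))  # ['node-a']
print(reconcile(cluster, fake_upgrade))  # ['node-c']
print(reconcile(cluster, fake_upgrade))  # []
```

Bounding the number of nodes changed per pass is what lets the same loop perform a rolling OS upgrade, just as Kubernetes rolls out a new container image.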

Image version management and the O&M API are not the only characteristics of LifseaOS. Its name reflects its nature as an OS born for the cloud and containers:

LifseaOS integrates the necessary cloud-native components by default and retains only the system services and software packages required to run Kubernetes pods. The entire system contains only about 200 software packages, compared with more than 500 in traditional operating systems such as Alibaba Cloud Linux 2/3 and CentOS. This reduces the number of packages by about 60% and makes the system much more lightweight.
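The 60% figure comes from comparing the two package counts:

$$
1 - \frac{200}{500} = 0.6 = 60\%
$$

Because the traditional systems contain more than 500 packages, 60% is a lower bound for a system of about 200 packages.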

The cloud-init suite (a component commonly used by cloud service providers to manage ECS instance metadata) is replaced with Ignition from CoreOS, and many unnecessary functions are trimmed. Unnecessary kernel modules, systemd services (such as systemd-logind and systemd-resolved), and many utility tools bundled with systemd are also removed.
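Unlike cloud-init, Ignition consumes a declarative JSON config and applies it once, during first boot. As an illustration only, assuming the upstream Ignition spec 3.x format (the spec version and config fields that LifseaOS accepts are not stated here), this Python sketch builds a config that writes a hostname and a sysctl file. `build_ignition_config`, `file_entry`, and the path `/etc/sysctl.d/90-example.conf` are hypothetical.

```python
import json
from typing import Dict
from urllib.parse import quote


def file_entry(path: str, text: str, mode: int = 0o644) -> dict:
    """Describe one file for Ignition to write, inlining the content as a data URL."""
    return {
        "path": path,
        "mode": mode,  # serialized as a decimal integer in JSON (0o644 == 420)
        "overwrite": True,
        "contents": {"source": "data:," + quote(text)},
    }


def build_ignition_config(hostname: str, sysctls: Dict[str, object]) -> str:
    """Build a minimal Ignition config (spec 3.x layout assumed) as a JSON string."""
    sysctl_text = "".join(f"{key} = {value}\n" for key, value in sysctls.items())
    config = {
        "ignition": {"version": "3.3.0"},
        "storage": {
            "files": [
                file_entry("/etc/hostname", hostname + "\n"),
                file_entry("/etc/sysctl.d/90-example.conf", sysctl_text),
            ]
        },
    }
    return json.dumps(config, indent=2)


print(build_ignition_config("node-1", {"net.ipv4.ip_forward": 1}))
```

Because the whole first-boot configuration is a single data document rather than a set of scripts, it can be validated and versioned alongside the OS image.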

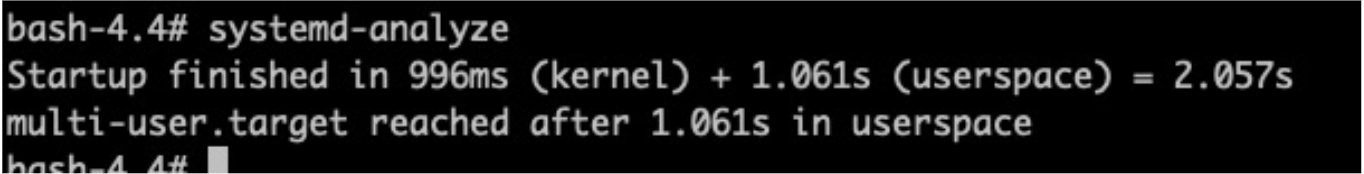

LifseaOS is positioned as an operating system for virtual machines running on the cloud, so it needs few hardware drivers. The necessary kernel driver modules are built into the kernel, the initramfs is removed, and udev rules are simplified, all of which speed up startup. On an ECS instance of type ecs.g7.large, for example, the first startup of LifseaOS takes about 2s:

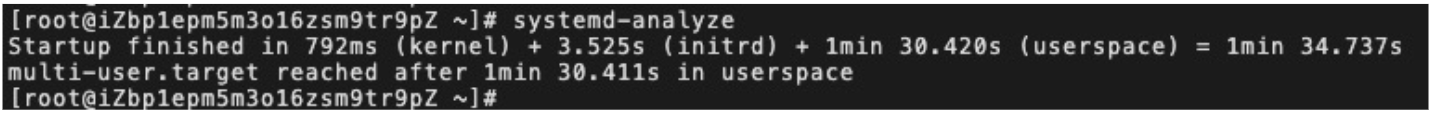

By comparison, the first startup of a traditional operating system, taking Alibaba Cloud Linux 3 as an example, takes more than 1 min.
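Figures like these can be observed with `systemd-analyze`, which prints a summary line such as `Startup finished in 1.503s (kernel) + 8.214s (userspace) = 9.717s`. The article does not say how its numbers were measured, so the following Python helper is only one possible way to compare images: it parses that summary line into per-phase seconds. The function names are hypothetical, and only the time units listed in the code are handled.

```python
import re
from typing import Dict

# Seconds per unit for the systemd time-span suffixes this sketch understands.
_UNIT_SECONDS = {"h": 3600.0, "min": 60.0, "s": 1.0, "ms": 0.001, "us": 1e-6}


def parse_span(span: str) -> float:
    """Convert a systemd time span such as '1min 2.345s' or '856ms' to seconds."""
    total = 0.0
    for value, unit in re.findall(r"([\d.]+)\s*(h|min|ms|us|s)\b", span):
        total += float(value) * _UNIT_SECONDS[unit]
    return total


def parse_startup_line(line: str) -> Dict[str, float]:
    """Split a 'Startup finished in ...' line into {phase: seconds}, plus 'total'."""
    head, _, total = line.partition("=")
    head = head.replace("Startup finished in", "")
    phases: Dict[str, float] = {}
    # Each phase looks like '<time span> (<phase name>)', separated by '+'.
    for span, phase in re.findall(r"([^+()]+?)\s*\((\w+)\)", head):
        phases[phase] = parse_span(span)
    phases["total"] = parse_span(total)
    return phases


print(parse_startup_line(
    "Startup finished in 1.503s (kernel) + 3.210s (initrd) + 8.214s (userspace) = 12.928s"
))  # {'kernel': 1.503, 'initrd': 3.21, 'userspace': 8.214, 'total': 12.928}
```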

The root file system of LifseaOS is read-only; only the /etc and /var directories are writable, to meet basic system configuration requirements. This follows the principle of immutable infrastructure and helps protect the host file system against container escapes and unauthorized operations. LifseaOS does not include Python, but it still retains a shell, because deploying an ACK cluster involves running a series of initialization shell scripts. The shell will be removed in the future.
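One way to check this property on a running node is to read `/proc/mounts`, whose fourth field lists mount options such as `ro` or `rw`. The Python sketch below finds the mount that contains a given path and reports whether it is read-only. The sample mount table is made up for illustration and is not the actual LifseaOS layout, which this article does not describe.

```python
from typing import Dict, Set


def mount_options(mounts_text: str) -> Dict[str, Set[str]]:
    """Map each mount point in /proc/mounts-style text to its set of mount options.

    Later lines win, matching how a later mount stacks on top of an earlier one.
    Escaped characters (such as \\040 for spaces) are not decoded in this sketch.
    """
    result: Dict[str, Set[str]] = {}
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) >= 4:
            # Fields: device, mount point, fs type, options, dump, pass
            result[fields[1]] = set(fields[3].split(","))
    return result


def is_read_only(path: str, mounts_text: str) -> bool:
    """True if the mount containing `path` (longest matching mount point) is mounted ro."""
    mounts = mount_options(mounts_text)
    best = "/"
    for mount_point in mounts:
        prefix = mount_point.rstrip("/") + "/"
        if (path == mount_point or path.startswith(prefix)) and len(mount_point) > len(best):
            best = mount_point
    return "ro" in mounts.get(best, set())


# Made-up sample; on a real node, pass open("/proc/mounts").read() instead.
SAMPLE = """\
/dev/vda3 / ext4 ro,relatime 0 0
/dev/vda4 /var ext4 rw,relatime 0 0
/dev/vda4 /etc ext4 rw,relatime 0 0
"""
for p in ("/usr/bin", "/etc/hostname", "/var/lib/containerd"):
    print(p, "read-only" if is_read_only(p, SAMPLE) else "writable")
```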

In addition, LifseaOS removes the sshd service, which prevents users from logging on to the system and performing operations that may not be recorded. For emergency O&M, LifseaOS still provides a dedicated O&M container. This container is disabled by default and must be started through the API.

LifseaOS does not support installing, upgrading, or uninstalling individual RPM packages and does not provide yum. Accordingly, it drops the rpm-ostree package used in Fedora CoreOS and keeps only ostree. (rpm-ostree can manage the OS at the granularity of individual RPM packages, while ostree only manages files.) Updating and rolling back at image granularity keeps package versions and system configurations consistent across the nodes in a cluster. Each ContainerOS image must pass strict tests before it is released. Compared with traditional RPM-based upgrades, image-based upgrades ensure higher system stability once the upgrade is complete.
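Image-granularity updates also make consistency easy to verify. As a hypothetical sketch (node names and version strings are invented, and how the versions are collected is left out), the check reduces to comparing one identifier per node instead of whole package lists:

```python
from collections import Counter
from typing import Dict


def version_drift(node_versions: Dict[str, str]) -> Dict[str, str]:
    """Return the nodes whose OS image version differs from the most common one.

    With image-based updates, one identifier per node (an image version or an
    ostree commit checksum) pins every package in the read-only system, so this
    comparison is enough to spot package drift. Configuration under the
    writable /etc is not covered by this check.
    """
    if not node_versions:
        return {}
    majority, _ = Counter(node_versions.values()).most_common(1)[0]
    return {node: v for node, v in node_versions.items() if v != majority}


print(version_drift({"node-a": "v2", "node-b": "v2", "node-c": "v1"}))  # {'node-c': 'v1'}
```

With per-RPM upgrades, the equivalent check would have to compare the full installed package list of every node.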

You are welcome to join the Container Optimized Operating System SIG in OpenAnolis and help build container-specific operating systems for cloud-native.

Shaoyu Huang is from Alibaba Cloud and is a core member of the Container Optimized Operating System SIG in the OpenAnolis community.

Yuanhong Peng is from Alibaba Cloud and is a core member of the Container Optimized Operating System SIG in the OpenAnolis community.
