This article summarizes a presentation given by AliExpress CTO Guo Dongbai at the first Alibaba Online Technology Summit. In the presentation, he introduces the theory behind the architecture of the e-commerce platform AliExpress and explains how to fully optimize a cloud stack based on calculating bounce rates between pages. His steps for optimizing the cloud stack of an e-commerce site also touch on dynamic acceleration, static + ESI, merged element requests, and CDN scheduling optimization.

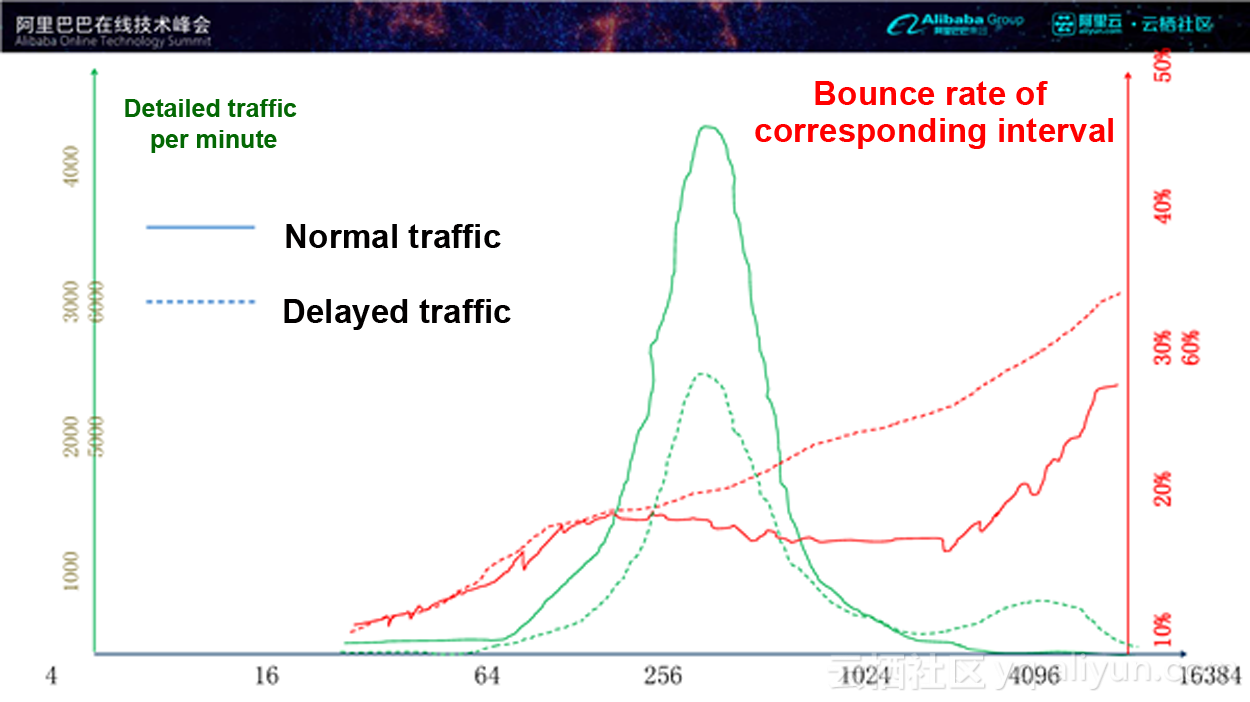

The figure above shows the relationship between traffic distribution and bounce rate. The horizontal axis represents the latency interval, and the vertical axis represents the traffic distribution. The green curve represents the distribution of traffic from customers arriving at the website or application.

As can be seen, most of the traffic falls within the range of several hundred to 1,000 milliseconds. As latency increases, the bounce rate also increases. A basic conversion formula is used to evaluate the entire system: conversion rate = 1 - bounce rate. When a performance fault occurs, such as latency soaring on a few servers, the share of low-latency traffic decreases. As the share of high-latency traffic increases, the bounce rate climbs quickly. In short, the longer the latency, the higher the bounce rate, and the lower the conversion rate. The dotted line shows the level before optimization, and the solid line shows the level after optimization. The difference between them is the conversion rate gained through optimization.
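As a minimal sketch of the formula above, the snippet below weights conversion rate = 1 - bounce rate by the share of traffic in each latency bucket. The bucket boundaries, traffic shares, and bounce rates are made-up illustration values, and `overall_conversion` is a hypothetical helper, not something from the presentation.

```python
# Hypothetical latency buckets: (upper bound in ms, share of traffic, bounce rate).
# All numbers are invented for illustration only.
def overall_conversion(buckets):
    """Traffic-weighted conversion rate, using conversion = 1 - bounce."""
    total_share = sum(share for _, share, _ in buckets)
    weighted = sum(share * (1.0 - bounce) for _, share, bounce in buckets)
    return weighted / total_share

# Before optimization: a large share of traffic sits in the slow bucket.
before = [(500, 0.30, 0.20), (1000, 0.40, 0.30), (3000, 0.30, 0.60)]
# After optimization: the same bounce rates, but traffic has shifted to faster buckets.
after = [(500, 0.50, 0.20), (1000, 0.40, 0.30), (3000, 0.10, 0.60)]

gain = overall_conversion(after) - overall_conversion(before)
print(f"before={overall_conversion(before):.2f} "
      f"after={overall_conversion(after):.2f} gain={gain:.2f}")
```

With these assumed numbers, shifting traffic out of the slowest bucket raises the weighted conversion rate even though the bounce rate of each bucket is unchanged.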

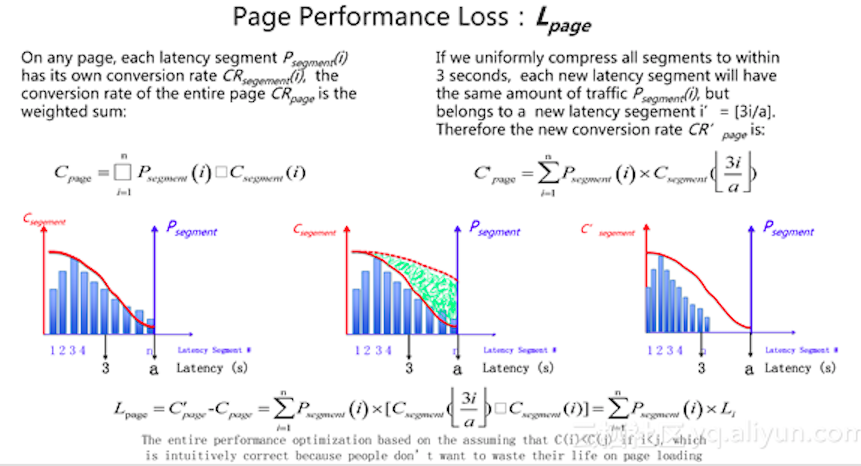

Let's dig into this topic further. In the figure above, the red line represents the conversion rate, and the blue bars represent the distribution of traffic across performance intervals. Suppose we compress the latency from 3 seconds to 1 second; the resulting conversion-rate return is the green part of the figure. Where does the return come from? The higher the latency, the lower the conversion rate: because the conversion rate is a monotonically decreasing function of latency, traffic moved to a lower latency converts at a higher rate. If we know how much time is compressed, we can estimate the return on a single page. This return is called Performance Loss.
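The single-page estimate can be sketched as follows, assuming the conversion curve is available as a few measured points and that linear interpolation between them is acceptable. The curve values and the function names `conversion_at` and `estimated_return` are assumptions made for illustration.

```python
from bisect import bisect_right

# Hypothetical conversion curve: (latency in seconds, conversion rate).
# It is monotonically decreasing; the values are illustrative, not measured.
CURVE = [(0.5, 0.80), (1.0, 0.70), (2.0, 0.50), (3.0, 0.40), (5.0, 0.20)]

def conversion_at(latency_s):
    """Linearly interpolate the conversion rate at a given latency."""
    xs = [x for x, _ in CURVE]
    if latency_s <= xs[0]:
        return CURVE[0][1]
    if latency_s >= xs[-1]:
        return CURVE[-1][1]
    i = bisect_right(xs, latency_s)
    (x0, y0), (x1, y1) = CURVE[i - 1], CURVE[i]
    return y0 + (y1 - y0) * (latency_s - x0) / (x1 - x0)

def estimated_return(current_s, target_s):
    """Conversion gained by compressing latency from current_s to target_s."""
    return conversion_at(target_s) - conversion_at(current_s)

print(f"{estimated_return(3.0, 1.0):.2f}")
```

Because the curve decreases with latency, any compression toward a lower latency yields a non-negative return under this model.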

Next, we calculate the theoretical bounce rate from page A to page B. As shown in the figure above, A is a page, 0, 1, 2 and 3 are its preceding pages, and End represents the bounce (exit). The exit bounce rate = total bounce rate after compensation - natural bounce rate. In this formula, the natural bounce rate covers users who leave because they are dissatisfied with the product content or reviews.

Based on this assumption, we can extend the calculation to all pages. A large website may have hundreds of pages. In the example above, there are two chains: Search - Details - Order and Shop - Shop details - Order.

If we calculate the bounce rate for each chain in such a relationship, we obtain the theoretical maximum traffic for each chain and for the final page. This is in fact how the full-stack performance loss is calculated. It tells us how much every small step contributes to the whole picture, which is vital for preparing an optimization solution. While preparing a solution, we can test it on a single page to measure that page's return accurately, and so understand what one optimization contributes to the whole system, that is, the additional orders it brings to the e-commerce system.
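The chain calculation can be illustrated with a small sketch. It assumes each page has a measured total bounce rate and an estimated natural bounce rate, and that visitors who do not bounce move on to the next page in the chain. The page figures and the names `performance_bounce` and `chain_traffic` are hypothetical.

```python
# Hypothetical per-page measurements: total and natural bounce rates.
PAGES = {
    "Search": {"total": 0.50, "natural": 0.40},
    "Details": {"total": 0.60, "natural": 0.50},
    "Order": {"total": 0.30, "natural": 0.20},
}

def performance_bounce(page):
    """Bounce attributed to performance: total bounce minus natural bounce."""
    return PAGES[page]["total"] - PAGES[page]["natural"]

def chain_traffic(chain, visitors, natural_only):
    """Visitors who make it through every page of the chain.

    With natural_only=True, only the natural bounce is applied, which gives
    the theoretical maximum traffic if performance caused no bounces.
    """
    key = "natural" if natural_only else "total"
    remaining = float(visitors)
    for page in chain:
        remaining *= 1.0 - PAGES[page][key]
    return remaining

chain = ["Search", "Details", "Order"]
actual = chain_traffic(chain, 10_000, natural_only=False)
ceiling = chain_traffic(chain, 10_000, natural_only=True)
print(f"actual={actual:.0f} ceiling={ceiling:.0f} loss={ceiling - actual:.0f}")
```

The gap between the ceiling and the actual figure is one way to express the performance loss of the chain in orders rather than in milliseconds.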

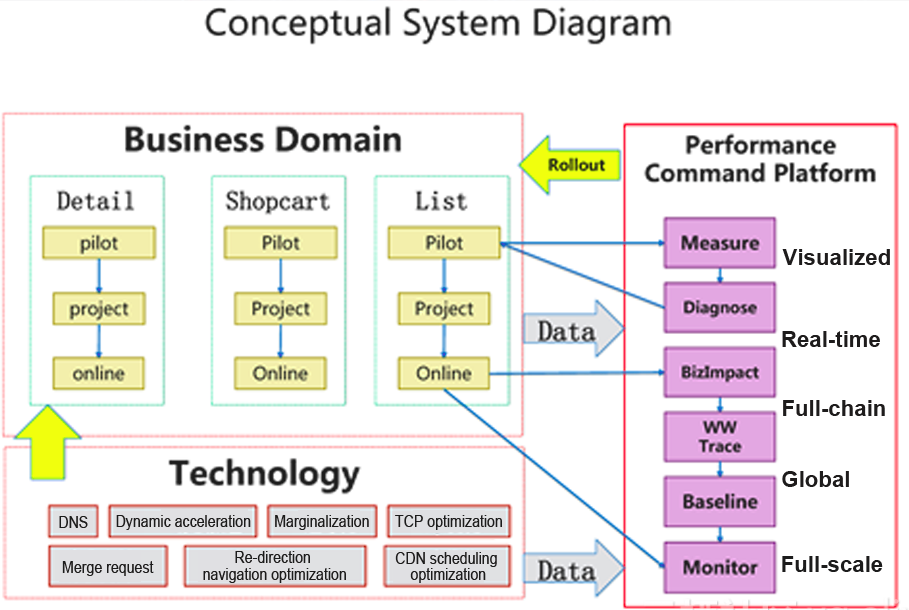

The platform is independent of any business field. Different optimization solutions are tailored to different business fields, as shown in the bottom-left section of the figure. The platform aims to visualize current performance, measure the current performance level in real time, monitor the performance of the whole chain, stay available to all fields, and perform full-scale measurement. In effect, it converts the returns of performance optimization into a measurable, intuitive result. Data keeps accumulating throughout an experiment, and this data yields accurate measurements that determine whether an optimization can be rolled out to the full stack.

Performance optimization process

First, you need many business units from which to collect users' behavioral data. The data, such as request time and connection time, is processed by the collection system. The processed data then goes through one of two kinds of analysis: offline analysis or real-time analysis. The difference between the two is that offline analysis can process more data.

The analysis results are saved in a business database and eventually sent to the cache layer for tracking and year-over-year (YoY) analysis. The back end supports many application scenarios, such as alarms, monitoring, and reports.
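To make the real-time path concrete, here is a minimal sketch of a monitor that keeps a sliding window of request times and decides when to raise an alarm. The window size, the threshold, the use of a simple mean, and the `LatencyMonitor` class are all assumptions; the presentation does not describe how the real collection system is implemented.

```python
from collections import deque

class LatencyMonitor:
    """Keep the most recent request times and flag a high mean latency."""

    def __init__(self, window_size, alarm_threshold_ms):
        # deque(maxlen=...) silently drops the oldest sample when full.
        self.samples = deque(maxlen=window_size)
        self.alarm_threshold_ms = alarm_threshold_ms

    def record(self, request_time_ms):
        self.samples.append(request_time_ms)

    def mean(self):
        return sum(self.samples) / len(self.samples) if self.samples else 0.0

    def should_alarm(self):
        return self.mean() > self.alarm_threshold_ms

monitor = LatencyMonitor(window_size=3, alarm_threshold_ms=1000)
for ms in (500, 600, 700):
    monitor.record(ms)
print(monitor.mean(), monitor.should_alarm())
```

An offline job could run the same aggregation over the full history instead of a short window, which matches the point that offline analysis can process more data.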

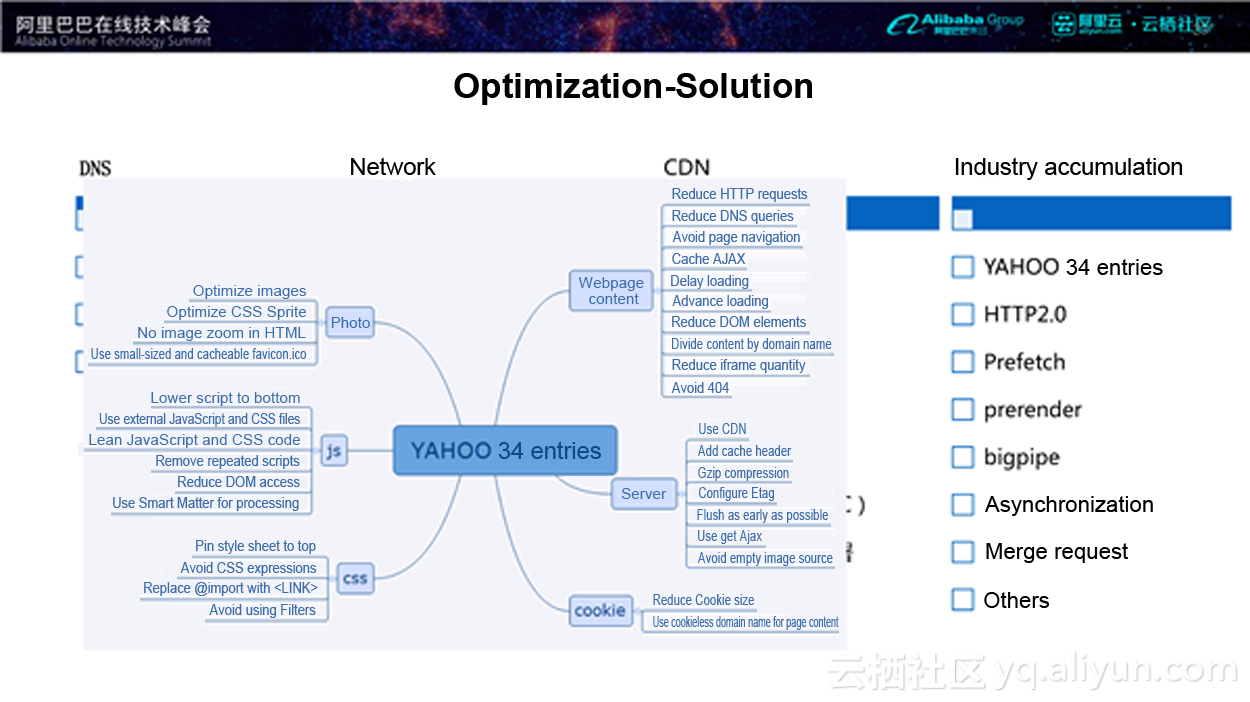

In fact, the industry offers many optimization solutions for the DNS layer, network layer and CDN layer. Which of them are the most effective? Next, I will list several effective optimization solutions that I have used:

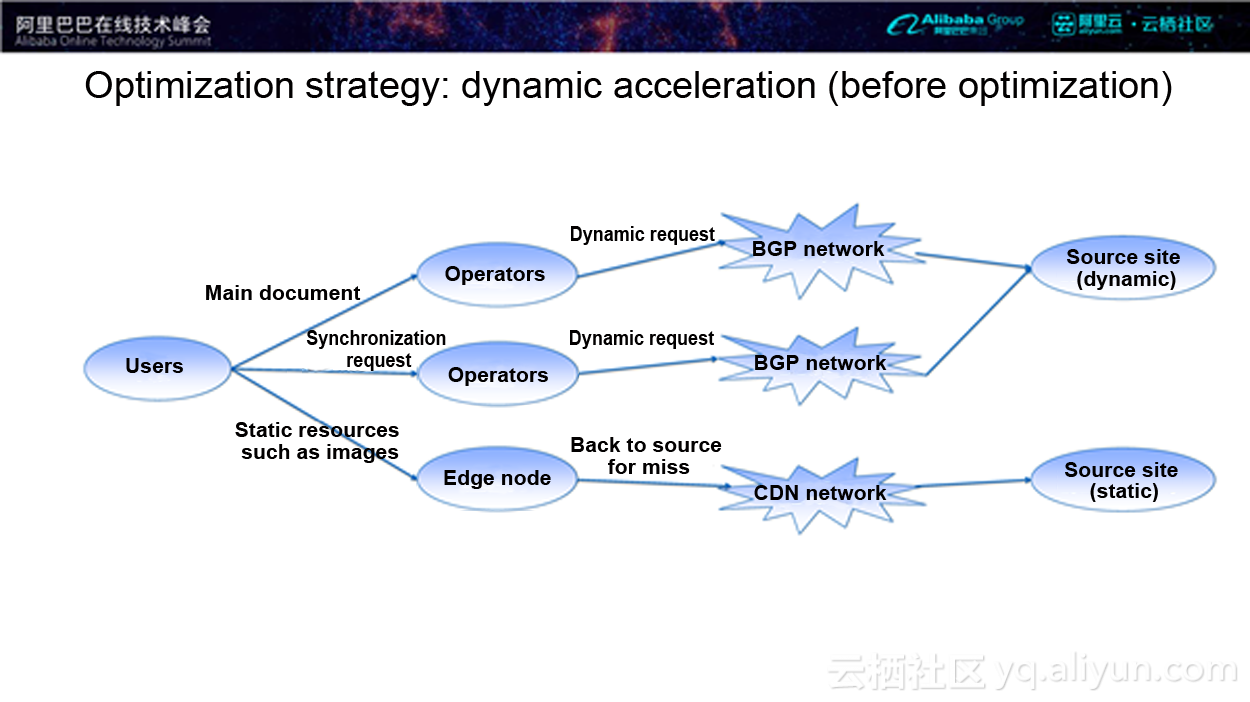

Before optimization, all of the users' dynamic requests go to the source site, and the request chain is: User - Operator - Source site. Because every request travels to the source site for data, the request chain is very long and time-consuming.

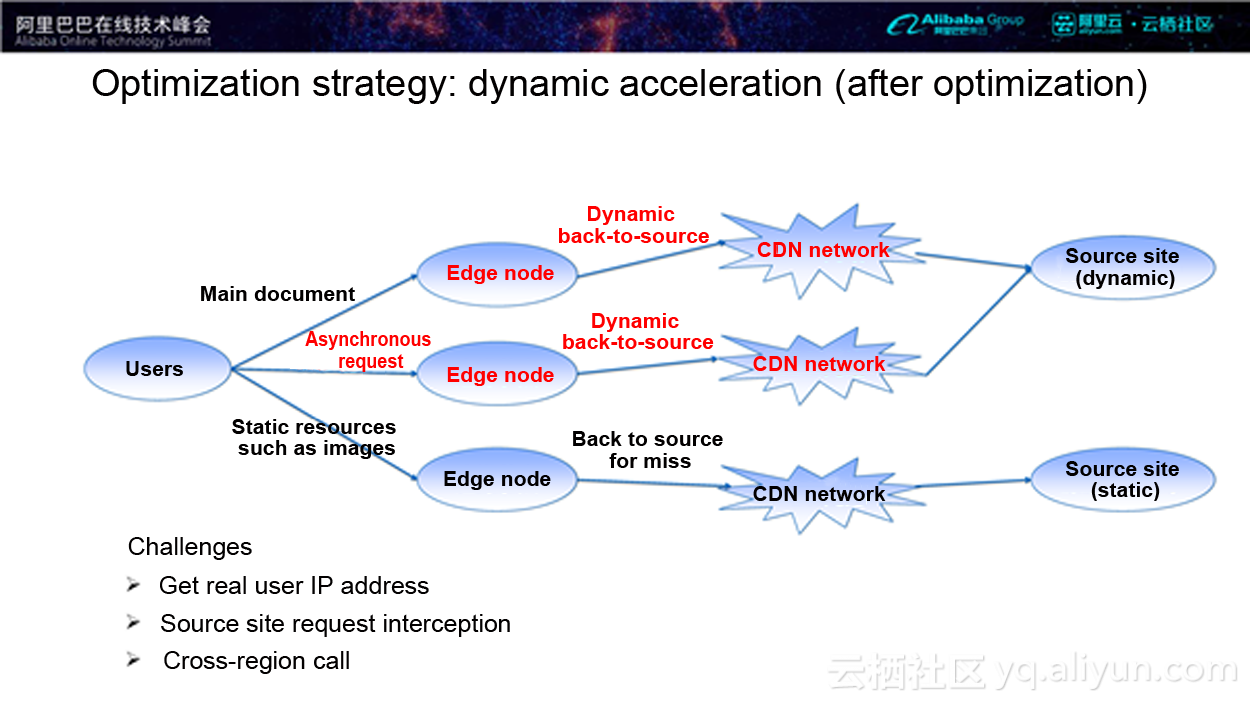

After optimization, dynamic data is pushed to the edge nodes as much as possible, so that requests are served directly at the edge nodes and do not need to go to the source site.

There is another optimization here: requests can be synchronous or asynchronous, and elements within multiple pages can be requested at the same time. Dynamic acceleration of content covers the entire back-to-source process. Another way to achieve dynamic acceleration is to let the CDN decide the optimal back-to-source route whenever back-to-source is required; the CDN finds an optimal back-to-source chain. Dynamic acceleration actually involves a series of optimization methods, including content compression, for example. The process also poses many technical challenges: e-commerce providers need to know users' real IP addresses, the source site needs to intercept requests to guard against attacks, and so on.

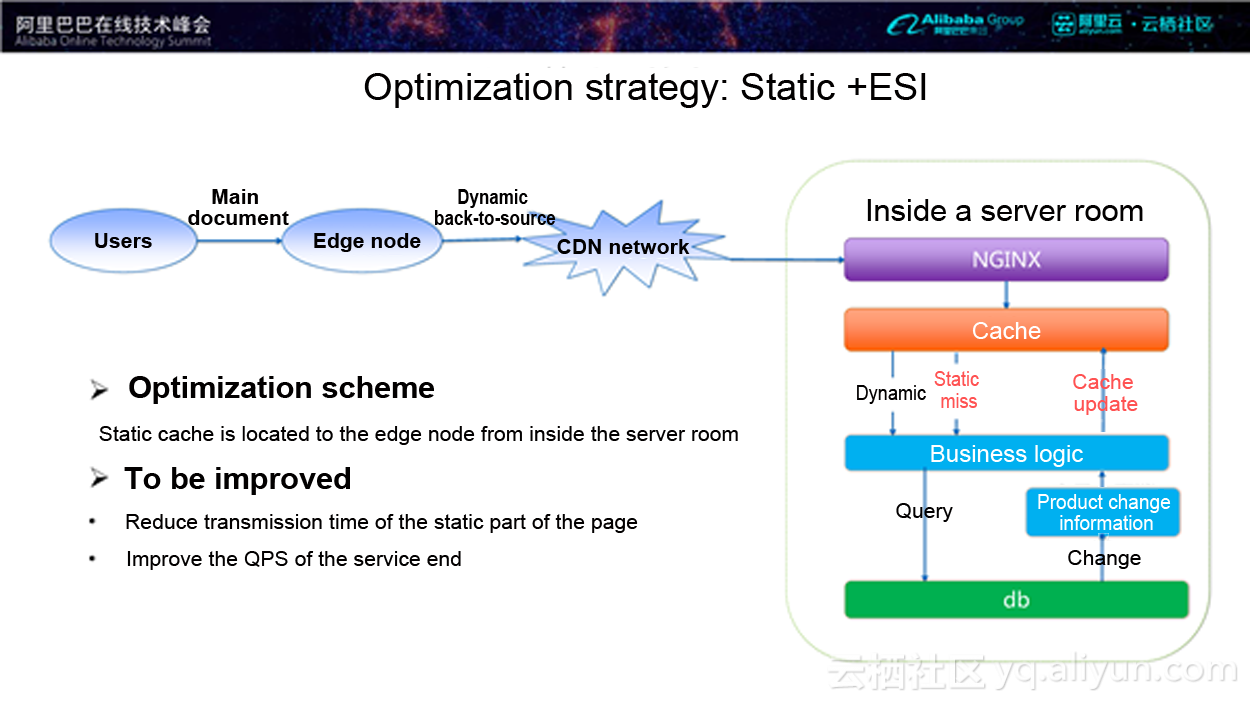

Content is placed on the edge nodes and also cached in the data center. A request for dynamic content goes back to the source database, while a request for static content that misses goes back to the source database through the business logic. Requests usually follow either a read chain or a write chain, and a write changes the database. The database change messages are picked up by consumers and written into the cache through the business logic, so the information in the cache is always the latest. That is to say, a user request that hits the edge node returns from there; otherwise, the request hits the cache layer and returns.
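The read and write chains described above can be sketched with in-memory dictionaries standing in for the edge node, the cache layer, and the source database. The `TieredStore` class, the fallback to the database on a cache miss, and the choice to invalidate the edge copy on a change are assumptions made for this illustration, not details from the presentation.

```python
class TieredStore:
    """Toy model of edge node -> cache layer -> source database."""

    def __init__(self, database):
        self.edge = {}
        self.cache = {}
        self.database = database  # stands in for the source database

    def read(self, key):
        """Read chain: return (value, tier that answered)."""
        if key in self.edge:
            return self.edge[key], "edge"
        if key in self.cache:
            value = self.cache[key]
            self.edge[key] = value  # fill the edge for the next request
            return value, "cache"
        value = self.database[key]  # assumed fallback on a full miss
        self.cache[key] = value
        self.edge[key] = value
        return value, "source"

    def write(self, key, value):
        """Write chain: change the database, then publish the change."""
        self.database[key] = value
        self.on_db_change(key, value)

    def on_db_change(self, key, value):
        """Consumer of a change message: refresh cache, drop stale edge copy."""
        self.cache[key] = value
        self.edge.pop(key, None)

store = TieredStore({"item": "v1"})
print(store.read("item"))   # first read falls through to the source
print(store.read("item"))   # second read is served at the edge
store.write("item", "v2")
print(store.read("item"))   # cache already holds the new value
```

Because the change consumer updates the cache as part of the write chain, a read after a write never has to go back to the source database in this model.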

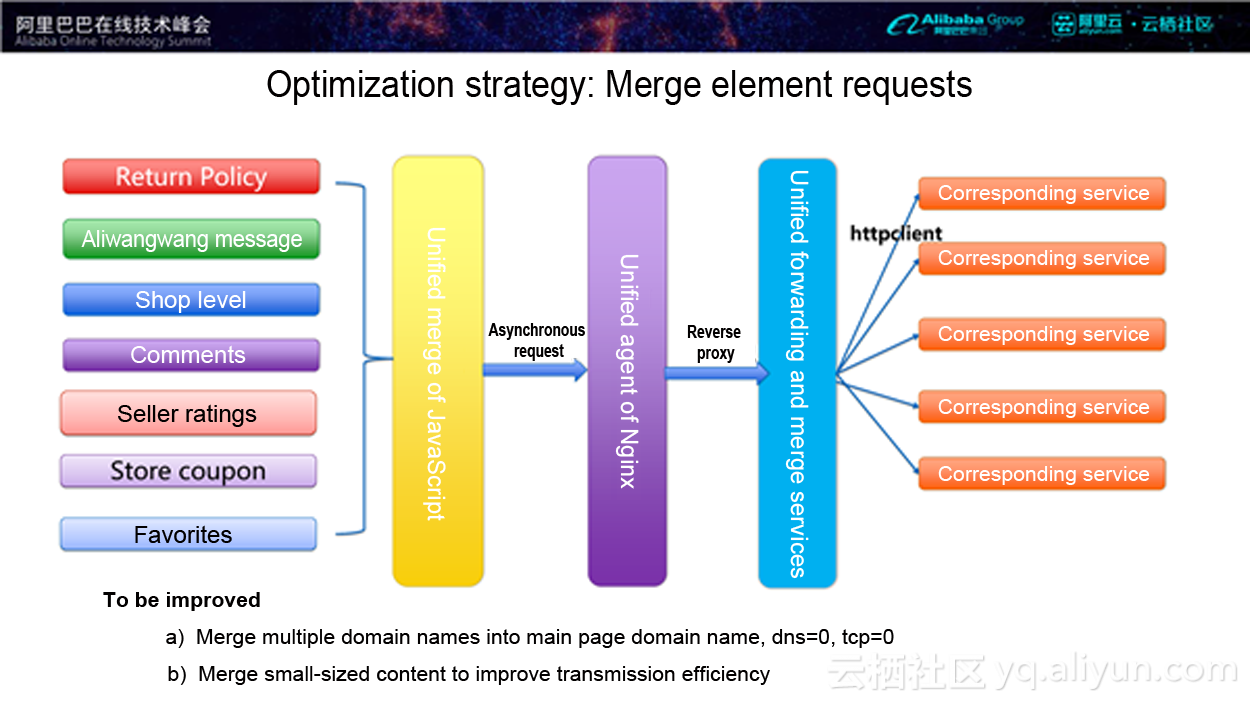

A page contains many child elements. If you request each of them independently, every request is a back-to-source call and takes a long time (including the time needed to establish a TCP connection). Merging element requests means combining all of these requests into one unified request to the server side, which dispatches the individual requests, merges the results, and returns them together.
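A server-side handler for a merged request might look like the sketch below, which dispatches each element to its own handler concurrently and returns one combined result. The element names, the handler functions, and `handle_merged_request` are hypothetical, and a thread pool is only one possible way to dispatch the sub-requests.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical per-element handlers; real ones would call backend services.
def get_price(item_id):
    return {"amount": 9.99, "currency": "USD"}

def get_stock(item_id):
    return {"available": 12}

def get_reviews(item_id):
    return {"count": 3}

HANDLERS = {"price": get_price, "stock": get_stock, "reviews": get_reviews}

def handle_merged_request(item_id, elements):
    """Dispatch all requested elements at once and merge the results."""
    with ThreadPoolExecutor(max_workers=len(elements) or 1) as pool:
        futures = {name: pool.submit(HANDLERS[name], item_id) for name in elements}
        # One response keyed by element name replaces several round trips.
        return {name: future.result() for name, future in futures.items()}

response = handle_merged_request(42, ["price", "stock", "reviews"])
print(sorted(response))
```

The client pays for one connection and one round trip, while the server side still runs the element lookups in parallel.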

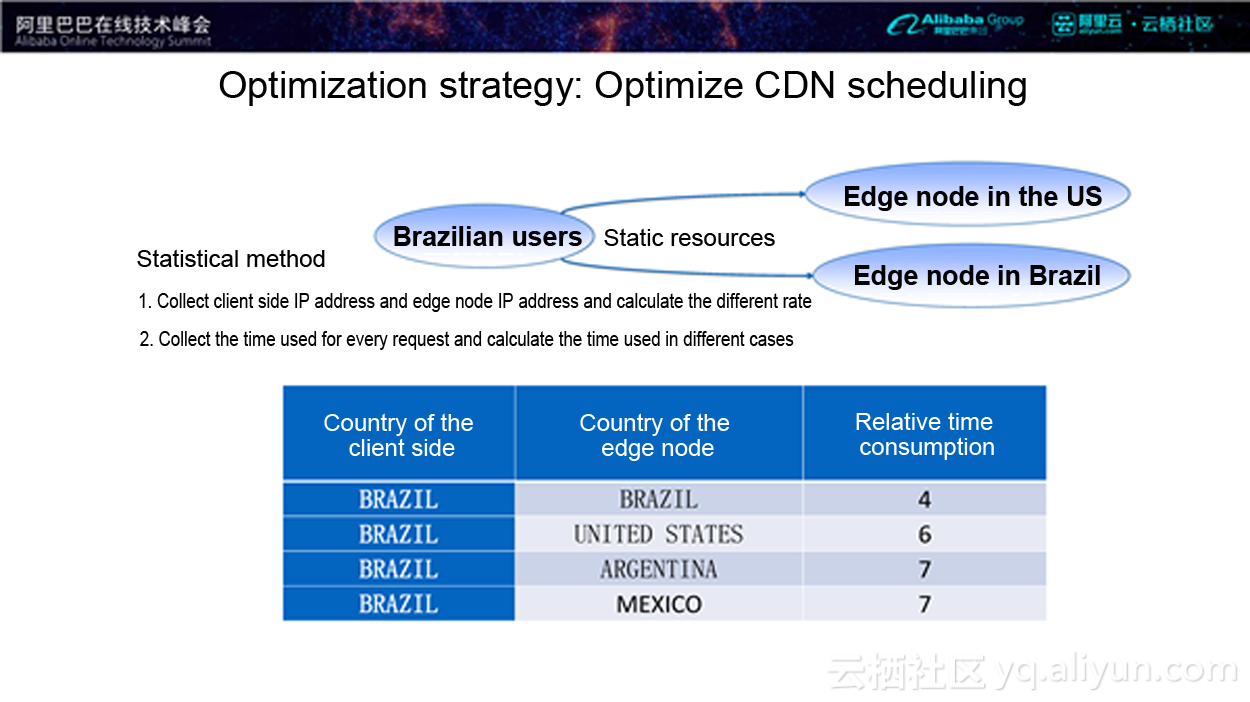

AliExpress has many users in countries around the world. It is the second largest e-commerce website in Brazil. Brazilian users can be served by edge nodes in the US or by edge nodes in Brazil. Tests show that a request from Brazil to edge nodes in Brazil takes the least time, say 4 units, while a request to edge nodes in the US takes 5 units. A request to nodes in Argentina, which is geographically close to Brazil, takes 7 units. We can conclude that the time a request to a node takes cannot be estimated from geographic location alone, and CDN scheduling can be optimized on this basis.
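The scheduling idea can be sketched as choosing nodes by measured time rather than by distance. The 4, 5 and 7 unit figures come from the example above; the node names and the `rank_nodes` and `pick_node` helpers are hypothetical.

```python
# Measured request time (in the article's abstract units) from users in Brazil.
MEASURED = {
    "Brazil": {"edge-br": 4, "edge-us": 5, "edge-ar": 7},
}

def rank_nodes(user_region, measurements):
    """Order candidate edge nodes from fastest to slowest measured time."""
    nodes = measurements[user_region]
    return sorted(nodes, key=nodes.get)

def pick_node(user_region, measurements):
    """Schedule the user to the node with the lowest measured time."""
    return rank_nodes(user_region, measurements)[0]

print(pick_node("Brazil", MEASURED))
print(rank_nodes("Brazil", MEASURED))
```

Ranking by measurement places the US node ahead of the Argentine one as a fallback for Brazilian users, which a purely geographic rule would get wrong.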

Through the theoretical analysis and optimizations above, you can improve the analysis capability of your entire system. These optimizations have benefited the AliExpress website, increasing orders by 5.07% and significantly reducing performance loss.