By Yunjian Song

This article is an overview of the newly released features in SQL Server 2022 and my personal understanding of each function as a user. In fact, SQL Server 2022 has made significant advancements in its engine, and each feature deserves a dedicated article for in-depth discussion. However, as this article is only an overview, I will not delve into the details of each feature.

The focus of this article is on the SQL Server 2022 engine itself. The integration with Azure, S3 Blob, and the enhancements in terms of deployment for On Linux and Kubernetes are beyond the scope of this article.

This article will conduct tests on some new features but will not perform in-depth testing. This means that only preliminary verification will be done, and the environment will be kept simple and released after use.

ApsaraDB RDS for SQL Server 2022 Enterprise Cluster Edition

mssql.x4.medium.e2 (2c8g)

Sample database: https://github.com/microsoft/sql-server-samples/tree/master/samples/databases/wide-world-importers

Some of the new features introduced in SQL Server 2022 can immediately improve performance and provide the greatest enhancement. Most products require changes in usage to improve performance, which often comes with higher costs. On the contrary, enhancing a product's capabilities without changing how it is used is the most beneficial approach.

For instance, when the battery power of a mobile phone remains the same, manually turning off unused functions frequently to save power can be more costly than having the operating system automatically turn off unused functions based on specific rules.

The same principle applies to databases. Many new features may require changes in application code, resulting in higher usage costs. On the other hand, if you enhance the kernel, you only need to enable or disable certain functions, which is more cost-effective.

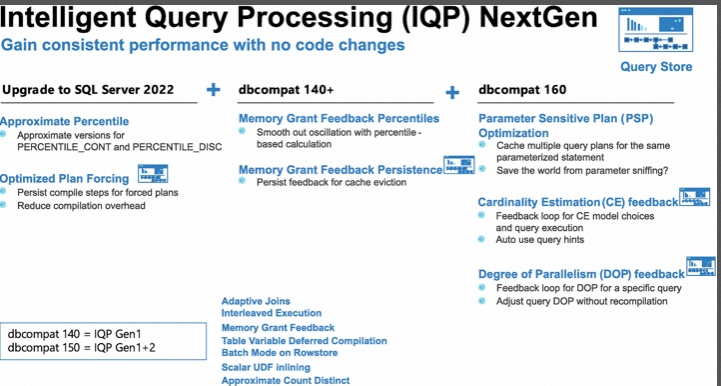

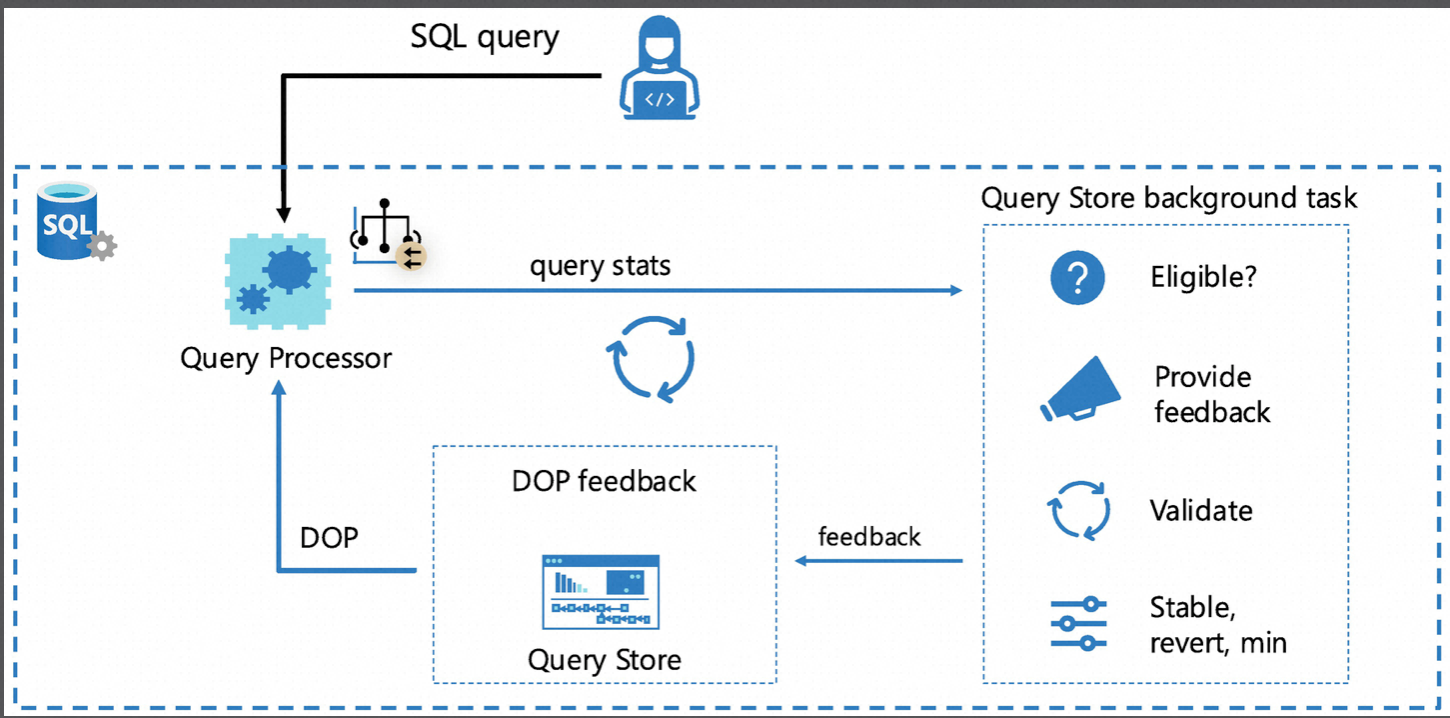

The overview of Build-In Query Intelligence is depicted in the following figure. It can be seen that Query Store is at the core of implementing these foundations. So, what exactly is Query Store?

In the past, one of the important tasks for Database Administrators (DBAs) was performance optimization, and "establishing a baseline" was a crucial part of this process. Establishing a baseline can be likened to regular physical check-ups, where healthy indicators serve as the baseline. When a person feels uncomfortable, comparing the checked data with the indicators can quickly narrow down the possible causes. Similarly, when a system experiences bottlenecks, comparing the current indicators with the baseline can help quickly identify the troubleshooting scope.

Another task in performance optimization is capturing slow SQL queries. DBAs regularly capture statements that consume high resources for manual tuning.

Although SQL Server has many built-in DMV (Dynamic Management Views) for recording statistics, this information is memory-based and disappears after a restart. Additionally, it only provides aggregated data without detailed breakdown.

Both establishing a baseline and capturing slow SQL queries require data collection, which usually requires a certain SQL Server foundation and the deployment of additional components such as monitoring collection, data processing, and display.

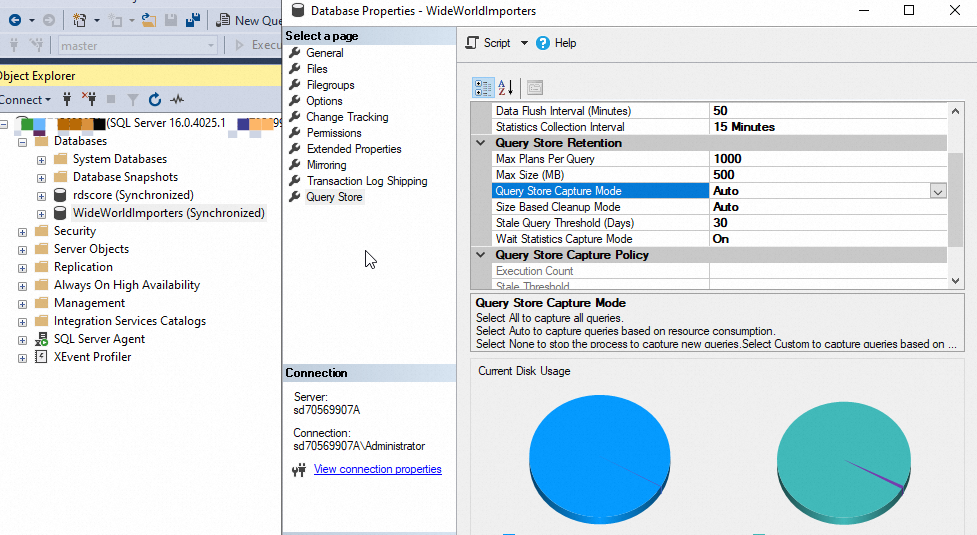

Traditionally, external collectors were used for this purpose. However, since 2016, SQL Server has introduced Query Store, which allows for the persistence of various metadata related to executed SQL performance on disk. Enabling Query Store is just an option. This metadata includes:

• Multi-version information of execution plans

• Execution plan statistics (I/O, CPU usage, etc.)

• Wait type information

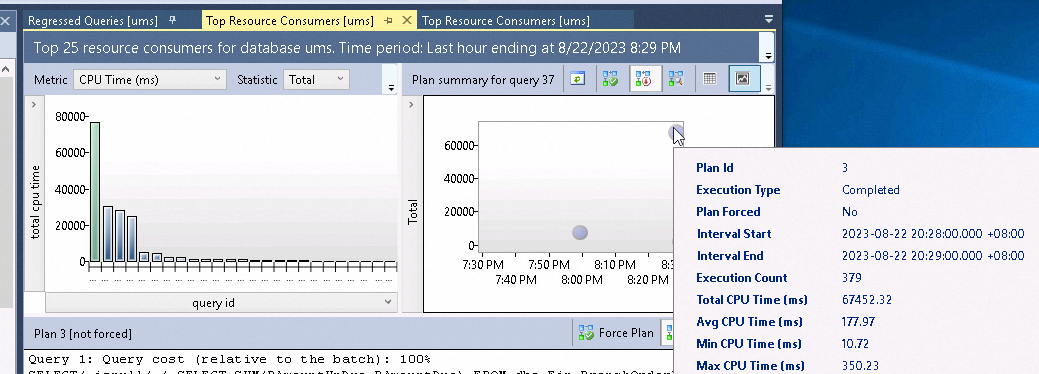

For instance, we can utilize Query Store to sort queries based on CPU usage and summarize them every minute. In the example below, the SQL statement corresponds to only one execution plan, showing the related information such as 379 executions in one minute and CPU times.

With Query Store, the previous DBA optimization method has been simplified as follows:

Query Store has gone through several versions and is enabled by default in SQL Server 2022. Default enabling means that Microsoft takes responsibility for any side effects caused by this feature. In my opinion, this feature has reached a very mature stage.

Therefore, Query Store provides decision data for the optimizer features discussed below.

An SQL statement undergoes a series of processes in the optimizer, from syntax parsing to generating an execution plan. The quality of the execution plan directly affects the performance of the SQL statement. During the parsing process, various types of data are referenced, and the accuracy of this data is crucial for the execution plan. Let's take an analogy: when you want to go to a shopping mall, the quickest route depends on whether there is traffic congestion. If there is congestion, taking the subway may be faster than driving a car. In this analogy, the metadata of traffic conditions on the road is essential for determining the optimal execution path.

When an SQL statement is parsed into an execution plan, it also relies on metadata such as statistics, the presence of indexes, and the estimated number of rows after filtering. Cardinality Estimation refers to the estimation of the number of rows accessed by SQL when it interacts with live table objects through operations like filtering or joining. The accuracy of this estimation directly impacts the quality of the execution plan.

In SQL Server versions prior to 2014 (compatibility level lower than 2014), the evaluation assumes no correlation between the data. For example, the estimated number of rows for the condition where a = 1 and b = 2 equals the selectivity of a multiplied by the selectivity of b, multiplied by the total number of rows.

For SQL Server 2014 and later versions (compatibility level >= 2014), the preset scenario considers that data has many correlations, and the estimated rows for multiple conditions within the same table should be higher. More details about the specific algorithm can be found in the reference.

These two models accommodate different types of workloads. The old model (SQL Server compatibility level lower than 2014) is suitable for simple workloads with low correlations, while the new model (compatibility level >= 2014) is suitable for more complex queries (referred to as "modern workloads" by Microsoft). Previously, these models could be controlled globally through compatibility levels or individually through hints, but neither method was perfect.

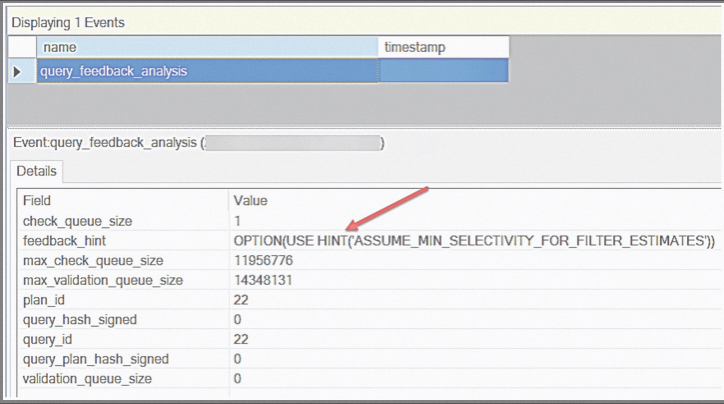

The mechanism provided by SQL Server 2022 is to provide feedback through historical queries. The query optimizer is linked with the Query Store to compile SQL statements and identify statements with significant cost differences between different Cardinality Estimations (CE). It then attempts to attach CE hints and correct this mechanism based on the results from the Query Store (if the additional hints do not significantly improve or even reduce performance). The figure below, extracted from Bob Ward, illustrates this concept.

This means that the CE model can adapt to different queries at the per query level, which is already an advanced DBA's work, that is, making optimization decisions based on multiple historical records. No application adaptation is required to enable this feature. Only the following conditions need to be met:

• Enable Query Store

• The compatibility level is 160

When executing queries in SQL Server, certain operations are clearly dependent on memory, such as sort or hash join. The execution plan will predefine the amount of memory required and request it in advance. However, there are two issues that arise:

The amount of memory granted depends on the execution plan. For instance, sorting 10,000 data versus 100,000 data would require different amounts of memory. However, execution plans often inaccurately estimate the required memory.

SQL Server 2022 introduces a memory historical record feature based on the Query Store. This means that the memory grant for the latest query is determined based on the actual memory usage of previous query executions. However, this adjustment is only made in scenarios where there is a significant difference in memory grant.

Enabling conditions:

• The compatibility level is 140 (2017) and above.

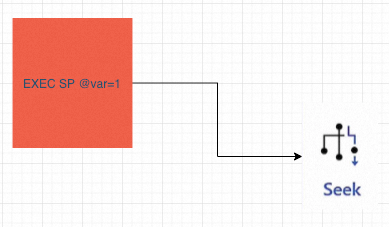

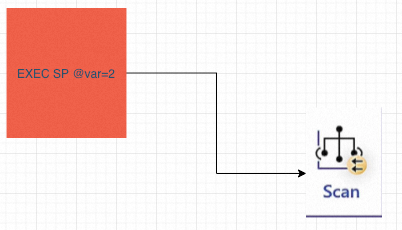

As mentioned earlier, the metadata on which the process of query -> execution plan in SQL Server depends counts on cost estimation, and a prerequisite for cost estimation is parameters. For example, if "where a =1" returns 10,000 rows and "where a=2" returns 1 row, the execution plans are usually different. For example, in the following figure, parameter 1 corresponds to one seeking, and parameter 2 corresponds to one scanning:

In addition, one of the remarkable features of commercial databases like SQL Server and Oracle is their ability to handle complex SQL statements. However, this can be both an advantage and a disadvantage. The powerful SQL engine can lead to abuse. In comparison, commonly used SQL statements in MySQL and PostgreSQL (PG) are much simpler and more suitable for the modern concept of Domain-Driven Design (DDD) and microservices deployment. But that's a separate topic. The largest execution plan I have encountered was for a stored procedure with over 10,000 rows, and it had a size of 80 MB.

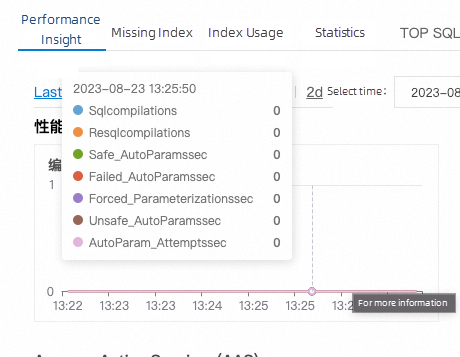

Compiling such execution plans comes at a high cost. It not only consumes CPU resources but also increases the execution time of the statements. When similar statements are executed concurrently, it can potentially cause compilation bottlenecks at the system level. This can be observed through wait types and performance counters, such as the ones captured in the Alibaba Cloud console.

Therefore, caching the compiled execution plan is a better choice. However, this brings another problem: what if the cached execution plan is inaccurate? What if the cost of using the cached plan is higher than recompiling? This has always been a challenge for DBAs. Understanding this problem determines whether one is a novice or a professional.

There are various traditional solutions. For SQL statements, optimization of parameter writing, regular updating of statistical information, adding recompile hints to statements, index adjustments, and SQL splitting are common approaches. A more advanced solution is proactively monitoring high-consumption statements and clearing a single cache at a fixed point. Of course, there is a less advanced solution - restarting (as all execution plan caches are cleared after a restart, requiring recompilation of all plans).

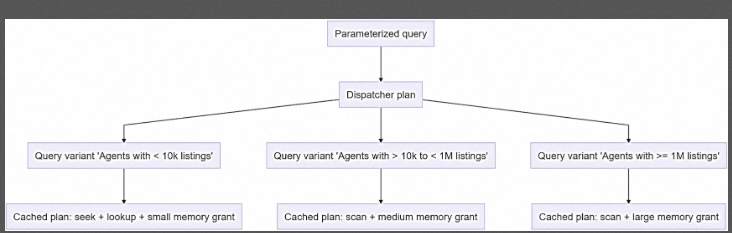

In SQL Server 2022, the introduced PSP (Plan Stability Priority) helps solve this problem. With the ability of the Query Store to cache multiple execution plans for a single SQL statement, it becomes possible to select a more suitable plan based on parameters. The general principle is as follows: adding a dispatcher between the Query Hash and plan cache hash to determine which cached execution plan to use based on the dispatcher. This feature is indeed a great help for slightly larger databases with complex queries. Although I haven't had the opportunity to witness specific cases yet, based on my experience, this feature will significantly reduce the O&M threshold.

Enabling conditions:

The compatibility level is 160 (SQL Server 2022)

One metric in SQL Server is the maximum degree of parallelism, while the other is the "parallel overhead threshold." The former determines the maximum number of cores that a query can use for concurrent execution, while the latter refers to initiating parallel execution when the execution cost reaches a certain value.

These two metrics originated in the 1990s, with default values of 0 and 5, respectively. A value of 0 means that SQL Server will determine the number of cores to use for parallel execution, which is typically equal to the number of physical cores in the current machine. However, on modern machines with multiple sockets and NUMA, this configuration is highly unreasonable. For typical OLTP systems, the value is adjusted to 2 or 4 by default, while separate configurations are made for certain OLAP statements.

The default value of the "parallel overhead threshold" is 5, which is extremely low. Today's hardware capabilities have significantly evolved, and single-core CPUs may even outperform previous multi-core CPUs in completing tasks. Parallelism incurs a high cost for core collaboration, and if the parallel overhead threshold is set too low, the collaboration cost can exceed the query execution cost.

These two values are set at the instance level. Although they have been subsequently introduced at the database level, the scope remains too broad. Perhaps class AP statements require a higher degree of parallelism (DOP), while setting the DOP to 1 may be more reasonable for class TP.

DOP Feedback works similarly to other feedback mechanisms mentioned earlier. It relies on Query Store and utilizes historical feedback experience. The Query Store background task evaluates factors such as query execution count, duration, and parallel efficiency to determine whether a query is suitable for using DOP feedback. Based on the built-in rules, the DOP is dynamically adjusted for the statement.

Enabling conditions:

ALTER DATABASE SCOPED CONFIGURATION SET DOP_FEEDBACK = ONBuild-In Query Intelligence allows SQL Server to make better adjustments based on historical data when the optimizer makes decisions. This self-adaptability has reached a new level. In my opinion, databases should also move towards this direction in the future. As an underlying platform system, databases should prioritize users' attention towards their business rather than the complexity of underlying data usage.

All the provided functions can greatly improve the performance and stability of the system without requiring any changes to the code. Therefore, if conditions permit, we recommend upgrading to SQL Server 2022 and enabling Query Store.

If you are interested in self-adaptive databases, you can read What is a Self-Driving Database Management System? by Andy Pavlo.

The ledger database was introduced in SQL Server 2019 and partially enhanced in SQL Server 2022. It utilizes blockchain technology to create distributed ledger databases that are tamper-proof and related to zero-trust. However, the demand for this functionality is relatively low in China, similar to SSL and TDE. I won't elaborate on this further.

This feature provides a permanent solution to the TempDB metadata problem. Each Data Definition Language (DDL) statement operation, such as creating or deleting tables, requires modifying the metadata used for internal database allocation. In SQL Server, this is referred to as PFS (Page Free Space) pages. Normally, this should not become a bottleneck. However, temporary tables and table variables are special cases for DDL statements. If these objects are created frequently and concurrently, it leads to high-frequency modifications of PFS pages. The modification of PFS pages is done through "pessimistic concurrency control" using a special lock called Latch Lock, which protects the memory structure. This modification itself becomes a database bottleneck. For many years, it has been common practice among SQL Server DBAs to create an instance based on the number of machine cores. TempDB typically has at least 4 files, and having multiple files means multiple PFS pages, which reduces the likelihood of lock contention. SQL Server 2022 updates PFS pages using "optimistic concurrency control," which essentially solves the contention problem. The cost of optimistic concurrency for only a few system pages can be well controlled. This improvement aims to reduce operations and maintenance (O&M) efforts.

This option requires to be activated manually:

ALTER SERVER CONFIGURATION SET MEMORY_OPTIMIZED TEMPDB_METADATA = ONBuffer Pool is based on the memory structure. Random lookup of memory costs less and is more suitable for hash structure. Therefore, random lookup is usually not a problem. However, scanning large-scale instances is usually costly, and the memory of large-scale instances is usually 256 GB and above. When the memory is greater than 64 GB, the previous single-threaded Buffer Pool scan becomes multi-threaded, which improves the scanning performance.

Affected operations include:

• Database startup

• Database shutdown or restart

• AG Failover

• Delete the database

• Delete the file from the database

• Full or differential database backup

• Database restoration

• Transaction log restoration

• Online restoration

From my experience, this function has been improved for instances with memory more than 256 GB. When AlwaysOn fails over, the scan speed will be much faster. However, in other scenarios, there is no obvious speed increase, so the application scope is limited.

This feature allows AlwaysOn clusters to contain contained databases. It means that the previous instance-level objects, such as jobs, logins, and linked server objects, can be migrated along with the databases or switched with HA to maintain consistency.

I think this function is to further reduce the O&M costs. This is because, after HA occurs, if the jobs or logins are lost, it is basically equivalent to a failure. Containing them to the database to maintain consistency through cores is much more stable.

The database recovery task is now run with a higher deadlock priority to avoid being chosen as a deadlock victim with user transactions.

In other words, the deadlock priority of the system thread session for recovery is "high".

Although the probability of this situation is low, I have unfortunately encountered it. Microsoft should have designed it this way a long time ago. I have encountered that after AlwaysOn switchover, the user's new connection killed the recovery thread as a deadlock, causing the database to remain addicted to the recovery state. This problem was resolved after restarting, directly resulting in unavailable time.

We fixed a problem where a replica database would get stuck in a recovery pending state.

Ensured data movement is not paused to replicas due to internal log block errors.

Eliminated schema lock contention problems on secondary replicas

I have encountered all the above three problems unfortunately. The worst outcome was having to rebuild AlwaysOn, which was a daunting task when dealing with several terabytes of data at once. I am unable to confirm the improvement at the moment, but if I have the opportunity to verify it, I will provide additional information on the actual effect.

A crucial feature of relational databases is ACID (atomicity, consistency, isolation, durability), where durability ensures the recovery of crash consistency. In the event of power failure or process crashes, the data consistency in the database should remain unaffected.

To achieve this, recovery actions are performed when the database restarts. This involves rolling back data that was dropped on disk for uncommitted transactions or redoing dirty pages that were not yet dropped on disk for committed transactions. This process is known as undo/redo recovery.

However, the length of the recovery process depends on the earliest active transaction at the time of the crash, restart, or shutdown. I have encountered an 8-hour recovery scenario where a user performed extensive ETL operations overnight, resulting in hundreds of gigabytes of active transaction logs. Upon restarting the instance, the user had to scan and recover from hundreds of gigabytes of logs, leading to 8 hours of unavailability.

Microsoft introduced parallel recovery in previous versions to address slowness issues, but based on my experience, the results were unsatisfactory. Concurrent recovery processes even resulted in deadlocks.

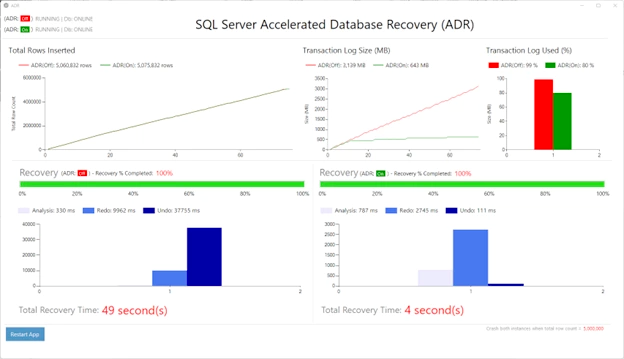

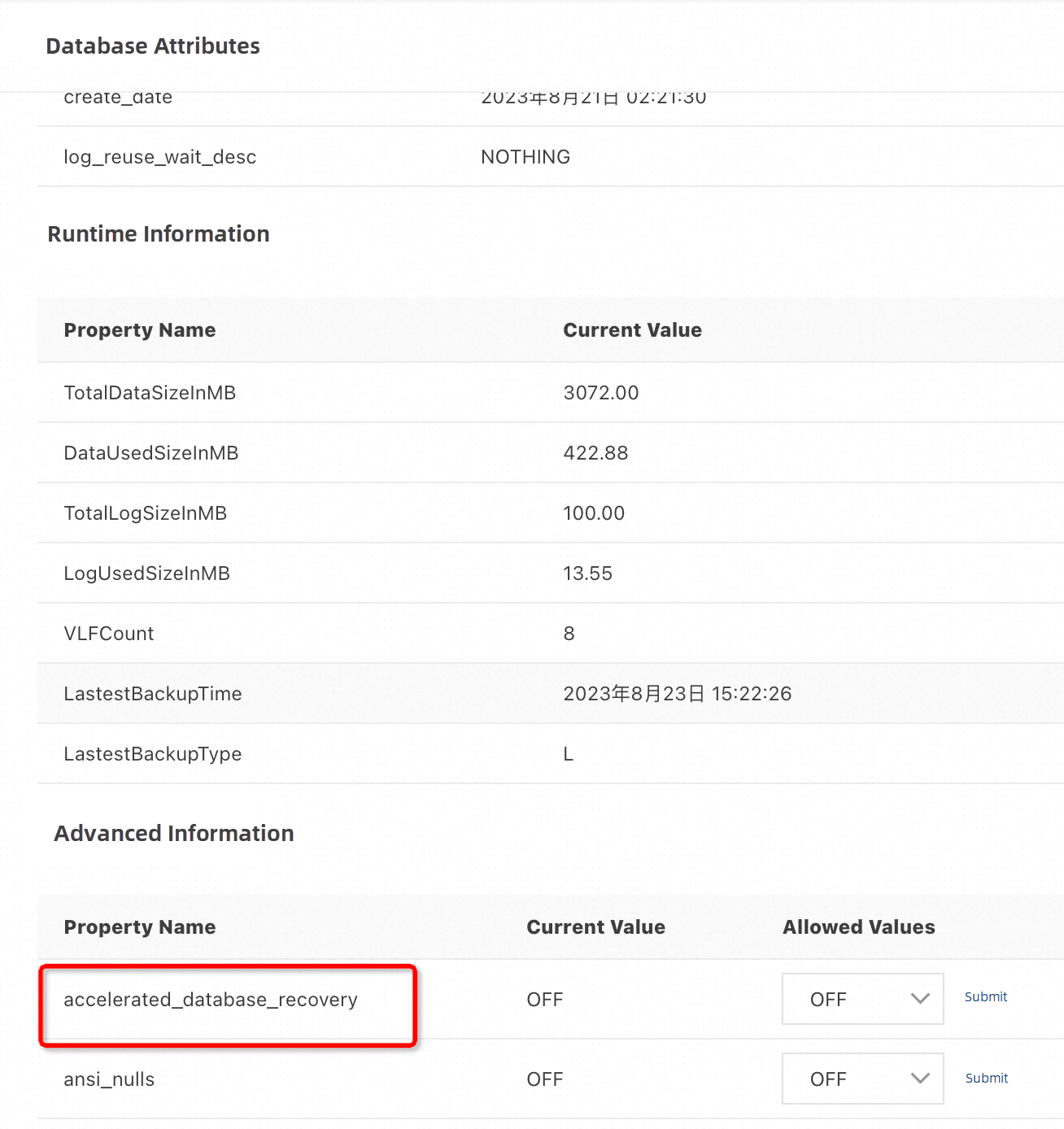

ADR (Accelerated Database Recovery) was introduced in SQL Server 2019, and SQL Server 2022 has made many improvements to this mechanism. The basic principle of ADR is to control the concurrency of multiple versions. Consequently, it has similar drawbacks to transaction snapshot isolation, requiring additional storage space, CPU, and memory usage.

The following benefits can be observed:

• Large transactions do not cause uncontrollable log growth.

• Reduce database unavailability caused by large transaction rollbacks.

• Resolve the long-standing recovery time issue.

The recovery time of verification data on the Microsoft's official blog is 49 seconds and 4 seconds. The huge difference indicates that there is still huge space for improvement.

To sum up, the recovery time can be shortened from the earliest transaction logs in the past to the latest checkpoint (generally 60 seconds), which will greatly reduce the recovery time. At the same time, due to the existence of data snapshots, logs can truncate more aggressively and avoid disk space problems. Therefore, you are suggested to enable this function to improve the availability for databases with hundreds of GB, high load and long transactions.

Modify the attributes of the database directly in the ApsaraDB for RDS console.

Parallel Redo is mainly used by AlwaysOn, but the effect is limited. Sometimes, we even need to disable it. The optimization of SQL Server 2022 is to remove the limit that the number of workers should be no more than 100, which is helpful for instances with a large number of databases. However, we do not know whether some problems of parallel Redo have been fixed.

JSON has been the de facto standard for the vast majority of data exchanges today. SQL Server 2016 begins to support JSON in the T-SQL, and SQL Server 2022 adds JSON_ARRAY and IS_JSON functions. You can basically know what they can do according to their names, and you can refer to Microsoft documents for details.

Generally speaking, today's applications are already very mature in JSON processing, with mature class libraries and schemes. JSON parsing on the database side usually does more harm than good so that I won't go into details here.

• DATE_BUCKET

• GENERATE_SERIES

• FIRST_VALUE and LAST_VALUE

They are all time-related functions. See Microsoft's official website for details.

• DATETRUNC

• STRING_SPLIT

• IS [NOT] DISTINCT FROM

I have always held a conservative attitude towards the additional capability of providing SQL dialect built into the database. Because of its own characteristics, the cost of scaling out is very high, while the application is easier to scale out because it is stateless, and the application language has more powerful logic processing capability. Therefore, individuals are usually more inclined to complete the work outside the database and transfer the computing power that is not used for data access to the application.

This function itself is used for end-to-end encryption. The application side grasps the encryption key, and the entire trace data is encrypted. The encrypted data cannot be viewed in SQL Server. Personally, I think it serves as a solution to the trust problem between customers and cloud vendors. Therefore, even if the cloud PaaS side is responsible for database O&M, it cannot view the contents of the database.

Some improvements have been made in the SQL Server 2022. The application's key is placed in the security area of SQL Server to enable some string operations, such as the Like operation, to take effect. I will not go into details here.

TLS 1.3 is used to support trace encryption. This feature meets compliance requirements. I will not go into details here.

Roles such as ##MS_DefinitionReader##, ##MS_ServerStateReader##, ## MS_ServerPerformanceStateReader## are responsible for viewing the performance data of the system and can not view business data. These roles may be added for some third-party O&M personnel to use, while preventing data from leaking. In my opinion, this kind of function in China is not that practical.

Overall, SQL Server 2022 is worth upgrading, especially in terms of the "adaptive query" feature. While it may not be as powerful as "self-driving," it can be considered as reaching the level of L2.5 assisted driving.

There are also expected improvements in HA/DR, particularly in significantly reducing the recovery time for DR, which is crucial for enterprise-level applications.

If you are currently facing challenges with the availability and performance of your databases, investing in the upgrade to SQL Server 2022 will bring pleasant surprises.

Note: This article does not cover integration with Azure/AWS, nor does it involve Kubernetes or Linux. Additionally, snapshot backups are not included due to their niche market.

Check out Free Trials and Special Offers: https://www.alibabacloud.com/product/apsaradb-for-rds-sql-server

About Database Kernel | PolarDB Optimizer Query Transformation: IN-List Transformation

About Database Kernel | PolarDB Query Optimization: Predicate Pushdown

ApsaraDB - July 19, 2023

Alibaba Cloud Native - March 6, 2024

digoal - August 2, 2023

ApsaraDB - January 17, 2025

Junho Lee - June 22, 2023

Yen Sheng - April 3, 2023

ApsaraDB RDS for SQL Server

ApsaraDB RDS for SQL Server

An on-demand database hosting service for SQL Server with automated monitoring, backup and disaster recovery capabilities

Learn More ApsaraDB RDS for MySQL

ApsaraDB RDS for MySQL

An on-demand database hosting service for MySQL with automated monitoring, backup and disaster recovery capabilities

Learn More ApsaraDB RDS for PostgreSQL

ApsaraDB RDS for PostgreSQL

An on-demand database hosting service for PostgreSQL with automated monitoring, backup and disaster recovery capabilities

Learn More Server Load Balancer

Server Load Balancer

Respond to sudden traffic spikes and minimize response time with Server Load Balancer

Learn MoreMore Posts by ApsaraDB