By Tianchen Ding and Huaixin Chang

In the previous article, we discussed annoying CPU throttling, which affects key metrics of applications running in containers. Avoiding throttling usually means sacrificing container deployment density, which wastes resources. We then introduced CPU Burst, a new technology that ensures the quality of service of containers without reducing deployment density. Does this sound like a silver bullet? What are the side effects of CPU Burst? Are there scenarios where it does not apply?

This article introduces the scheduling guarantee that CPU Burst breaks and evaluates the impact of CPU Burst. The extra CPU usage that CPU Burst allows barely shows up in CPU utilization, but we are still concerned about its impact. The conclusion is clear: the negative effects of CPU Burst are negligible. Only when CPU utilization reaches about 70% does CPU Burst start to affect key indicators, and CPU utilization in typical daily production environments is far below this level.

The CPU Bandwidth Controller can prevent some processes from consuming too much CPU time and ensure that all processes that need CPU get enough CPU time. It has a high stability guarantee because when

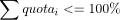

Bandwidth Controller is set to meet  , and it will have the following scheduling stability constraints:

, and it will have the following scheduling stability constraints:

The  is the quota of the i cgroup. It is the CPU demand of the cgroup within a period.

is the quota of the i cgroup. It is the CPU demand of the cgroup within a period.
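As a sketch of the constraint above, the following Python function checks whether a set of bandwidth settings satisfies $\sum_i u_i \le 1$. It assumes cgroup v1 style settings (`cpu.cfs_quota_us` and `cpu.cfs_period_us`), that every cgroup has a finite positive quota, and that $u_i$ is normalized by the period and by the number of CPUs on the host. The `CgroupBandwidth` class and the helper names are hypothetical and only for illustration.

```python
from dataclasses import dataclass
from fractions import Fraction


@dataclass(frozen=True)
class CgroupBandwidth:
    """Hypothetical container for one cgroup's settings, in microseconds
    (mirroring cpu.cfs_quota_us and cpu.cfs_period_us)."""
    quota_us: int
    period_us: int


def normalized_quota(cg: CgroupBandwidth, num_cpus: int) -> Fraction:
    """u_i: share of the host's CPU time per period that the cgroup may use."""
    if cg.quota_us <= 0 or cg.period_us <= 0 or num_cpus <= 0:
        raise ValueError("expected positive quota, period and CPU count")
    # Fraction keeps the sum exact, so a total of exactly 1 is not misjudged.
    return Fraction(cg.quota_us, cg.period_us * num_cpus)


def satisfies_stability_constraint(cgroups, num_cpus: int) -> bool:
    """Return True if sum_i u_i <= 1, i.e. all quotas fit into one period."""
    return sum(normalized_quota(cg, num_cpus) for cg in cgroups) <= 1


if __name__ == "__main__":
    two_cpu_quota = CgroupBandwidth(quota_us=200_000, period_us=100_000)
    # Two cgroups with 2 CPUs of quota each fit on a 4-CPU host (sum = 1) ...
    print(satisfies_stability_constraint([two_cpu_quota] * 2, num_cpus=4))
    # ... but a third one oversubscribes it (sum = 3/2).
    print(satisfies_stability_constraint([two_cpu_quota] * 3, num_cpus=4))
```

The first call prints `True` and the second prints `False`. With CPU Burst enabled, a cgroup may use more than its quota in a period, so actual usage can exceed what this check assumes; that is the situation the rest of this article evaluates.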

The Bandwidth Controller accounts CPU time on a per-period basis. The scheduling stability constraint ensures that all tasks submitted in a period can be processed within that period. For each CPU cgroup, this means a task submitted at any time can finish within one period. This is the real-time constraint of tasks:

Regardless of task priority, the Worst-Case Execution Time (WCET) is no longer than one period.

If the scheduler's stability constraint is broken, unfinished work accumulates from one period to the next, and the execution time of newly submitted jobs keeps increasing.
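The accumulation described above can be illustrated with a short deterministic sketch. It assumes, as in the model defined later in this article, that all work arrives at the start of a period, that CPU capacity is normalized so one period supplies 1.0 unit of CPU time, and that newly submitted work may have to wait for everything queued before it. The function name `worst_case_completion_times` is hypothetical.

```python
def worst_case_completion_times(total_demands):
    """For each period, return the time (in periods) needed to finish all work
    queued at its start: the backlog carried over plus the new demand.

    total_demands[k] is the combined CPU demand of all cgroups submitted at the
    start of period k, where 1.0 equals the CPU time available in one period.
    """
    backlog = 0.0
    completion_times = []
    for demand in total_demands:
        work = backlog + demand          # unfinished work plus new work
        completion_times.append(work)    # the newest task may run last
        backlog = max(0.0, work - 1.0)   # one period of CPU time is consumed
    return completion_times


if __name__ == "__main__":
    # Stable: demand never exceeds capacity, every task finishes within a period.
    print(worst_case_completion_times([0.8, 0.8, 0.8]))
    # Unstable: 20% excess per period piles up, so WCET keeps growing.
    print(worst_case_completion_times([1.2, 1.2, 1.2]))
```

In the stable case every completion time stays at 0.8 periods. In the unstable case the completion times are roughly 1.2, 1.4, and 1.6 periods (up to floating-point rounding), so the WCET of newly submitted work grows without bound as long as demand stays above capacity.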

What impact does CPU Burst, which allows burst CPU usage to improve the quality of service, have on the stability of the scheduler? When multiple cgroups burst at the same time, the scheduler's stability constraint and the real-time guarantee of tasks may be broken. The key question is how likely the two constraints are to hold. If that probability is high, the real-time guarantee of tasks holds in most periods, and you can use CPU Burst with confidence. If the probability is low, CPU Burst cannot be used to improve the quality of service; you should instead reduce the deployment density and allocate more CPU resources.

The next question is how to calculate the probability that the two constraints are broken in a particular scenario.

This question can be formulated as a queuing theory problem and solved quantitatively with Monte Carlo simulation. The results show that the main factors determining whether CPU Burst can be used in a given scenario are the average CPU utilization and the number of cgroups. The lower the CPU utilization or the larger the number of cgroups, the less likely the two constraints are to be broken, and the more safely you can use CPU Burst. Conversely, if CPU utilization is high or the number of cgroups is small, you should first reduce the deployment density and increase the CPU allocation before using CPU Burst to eliminate the impact of CPU throttling on process execution.

The problem is defined as follows. There are m cgroups, and the quota of each cgroup is limited to 1/m. In each period, each cgroup generates a CPU demand drawn from a specific distribution, and these demands are independent and identically distributed (i.i.d.). Assume that tasks arrive at the beginning of each period. If the total CPU demand in a period exceeds 100%, the task WCET in that period exceeds one period, and the excess is carried over and processed together with the new CPU demand generated in the next period. The inputs are the number of cgroups (m) and the distribution of each cgroup's CPU demand. The outputs are the probability that WCET > period and the expected WCET, measured at the end of each period.
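The authors' Monte Carlo solution is deferred to a later article; the following is only an independent Python sketch of the model defined above, not their implementation. Several details are assumptions: each cgroup's per-period demand follows a Pareto distribution with a hypothetical shape parameter `alpha`, scaled so that the average total utilization is `u_avg`; demands are not capped by quota or burst buffer; and a task's WCET is taken as the time to finish all queued work, counted as at least one period (suggested by the table below, where no expected WCET falls under 1.0000). The numbers it prints depend on these assumptions and are not expected to reproduce the article's table.

```python
import random


def simulate(m, u_avg, alpha=3.0, n_periods=50_000, seed=0):
    """Monte Carlo estimate of (E[WCET], P(WCET > period)), WCET in periods.

    Assumptions (hypothetical, for illustration only):
    - each of the m cgroups draws its per-period demand from a Pareto
      distribution with shape alpha, scaled so its mean is u_avg / m;
    - demands are i.i.d. across cgroups and periods and are not capped;
    - capacity is normalized so one period supplies 1.0 unit of CPU time;
    - WCET of the work submitted in a period is max(1, backlog + demand).
    """
    if alpha <= 1:
        raise ValueError("alpha must be > 1 for the Pareto mean to exist")
    rng = random.Random(seed)
    # paretovariate(alpha) is >= 1 with mean alpha / (alpha - 1); rescale it
    # so each cgroup's expected demand is u_avg / m of the CPU.
    scale = (u_avg / m) * (alpha - 1) / alpha
    backlog = 0.0
    exceed = 0
    wcet_total = 0.0
    for _ in range(n_periods):
        demand = sum(scale * rng.paretovariate(alpha) for _ in range(m))
        work = backlog + demand            # carried-over plus new demand
        if work > 1.0:                     # cannot finish within one period
            exceed += 1
        wcet_total += max(1.0, work)
        backlog = max(0.0, work - 1.0)     # excess carried into next period
    return wcet_total / n_periods, exceed / n_periods


if __name__ == "__main__":
    # Print rows in the same "E[WCET]/P(WCET > period)" format as the table.
    for u_avg in (0.1, 0.3, 0.5, 0.7, 0.9):
        cells = []
        for m in (10, 20, 30):
            e_wcet, p_exceed = simulate(m, u_avg)
            cells.append(f"{e_wcet:.4f}/{p_exceed:.2%}")
        print(f"{u_avg:.0%}", *cells, sep=" | ")
```

Carrying `backlog` from one period to the next is what turns the per-period demand into a queue: a single large burst can push WCET above one period for several subsequent periods, not just the one in which it occurs.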

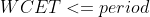

The Monte Carlo solution process is omitted here; see the third article in this series for details. Here is an example in which the input CPU demand follows a Pareto distribution and m = 10, 20, and 30. We chose the Pareto distribution for illustration because its long tail produces more CPU bursts, which have a greater impact. Each table entry has the format $E[\text{WCET}]/P(\text{WCET} > \text{period})$, with WCET measured in periods. The closer $E[\text{WCET}]$ is to 1, the better; the lower the probability $P(\text{WCET} > \text{period})$, the better.

| Average CPU utilization (u_avg) | m=10 | m=20 | m=30 |
| --- | --- | --- | --- |
| 10% | 1.0000/0.00% | 1.0000/0.00% | 1.0000/0.00% |
| 30% | 1.0000/0.00% | 1.0000/0.00% | 1.0000/0.00% |
| 50% | 1.0003/0.03% | 1.0000/0.00% | 1.0000/0.00% |
| 70% | 1.0077/0.66% | 1.0013/0.12% | 1.0004/0.04% |
| 90% | 1.4061/19.35% | 1.1626/10.61% | 1.0867/6.52% |

The results are consistent with our intuition. On one hand, higher CPU demand (CPU utilization) makes it easier for CPU bursts to break the stability constraint, which lengthens the expected task WCET. On the other hand, the more cgroups there are with independently distributed CPU demand, the less likely they are to generate burst demand at the same time. The scheduler stability constraint is then easier to maintain, and the expected WCET stays closer to one period.
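The effect of the number of cgroups can also be seen from a short variance argument. This is a sketch under extra assumptions not stated in the article: the per-cgroup demands $X_i$ are i.i.d. with finite variance (for a Pareto distribution this requires a shape parameter greater than 2), each is written as $X_i = \frac{u_{avg}}{m} Y_i$ with $\mathbb{E}[Y_i] = 1$ and $\operatorname{Var}(Y_i) = \sigma^2$, $u_{avg} < 1$, and backlog from earlier periods is ignored. The last line applies Cantelli's (one-sided Chebyshev) inequality with $t = 1 - u_{avg}$ to the total demand $D$ in one period:

$$
\begin{aligned}
D &= \sum_{i=1}^{m} X_i, \qquad X_i = \frac{u_{avg}}{m} Y_i,\\
\mathbb{E}[D] &= u_{avg}, \qquad \operatorname{Var}(D) = m\left(\frac{u_{avg}}{m}\right)^2 \sigma^2 = \frac{u_{avg}^2\sigma^2}{m},\\
P(D \ge 1) &\le \frac{\operatorname{Var}(D)}{\operatorname{Var}(D) + (1-u_{avg})^2} = \frac{u_{avg}^2\sigma^2}{u_{avg}^2\sigma^2 + m(1-u_{avg})^2}.
\end{aligned}
$$

The bound shrinks as $m$ grows and as $u_{avg}$ falls, which matches the trend in the table. It is only an upper bound, so it explains the direction of the effect rather than the exact probabilities.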

After reading this article, you should have a qualitative understanding of the impact of CPU Burst. Please stay tuned for the next article for more information about the evaluation method.

Tianchen Ding from Alibaba Cloud, core member of Cloud Kernel SIG in the OpenAnolis community.

Huaixin Chang from Alibaba Cloud, core member of Cloud Kernel SIG in the OpenAnolis community.

