By Jeremy Pedersen

Well friends, it's that time of the week again. I came here to chew gum and write blogs...and I'm all outta gum.

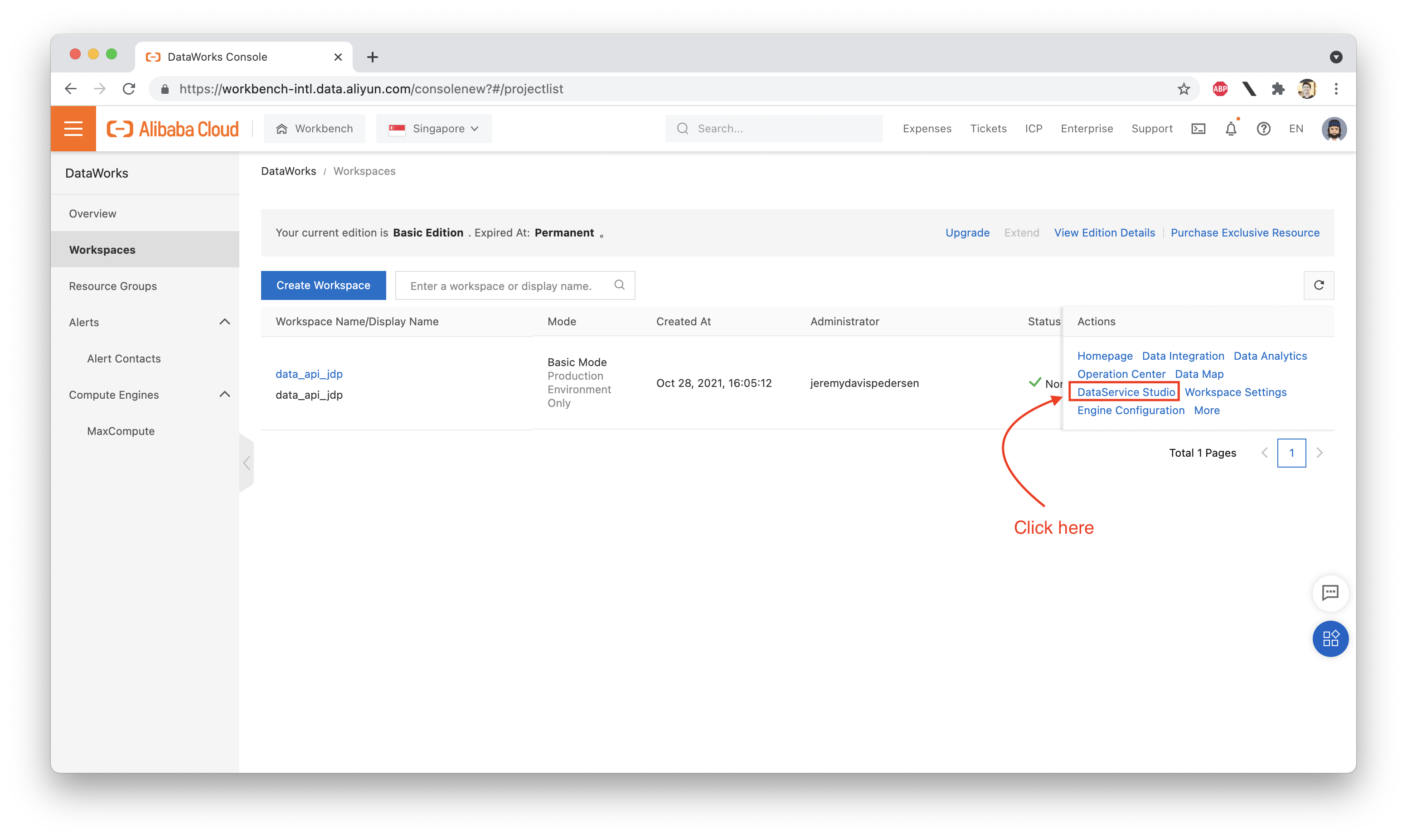

This week, we dive into a little-known but very handy service called DataService Studio. It's part of DataWorks, but you don't need to be a big data wizard (or even a programmer) to use it.

What does it do, you ask? DataService Studio deploys APIs. It takes a data source - such as a MySQL database - and deploys an HTTP API in front of it. It does this using API Gateway, Alibaba Cloud's API service.

This lets you deploy useful data-driven APIs without writing any code, which makes it a lot easier to push data to third party systems or applications.

Ok, let's try setting up our own API!

Before we can set up and use DataService Studio itself, we need to create a data source.

In this blog, I'll assume the data source is an Alibaba Cloud RDS MySQL database, running MySQL 8.0. Please follow along with this blog post to learn how to set that up and load in some example data.

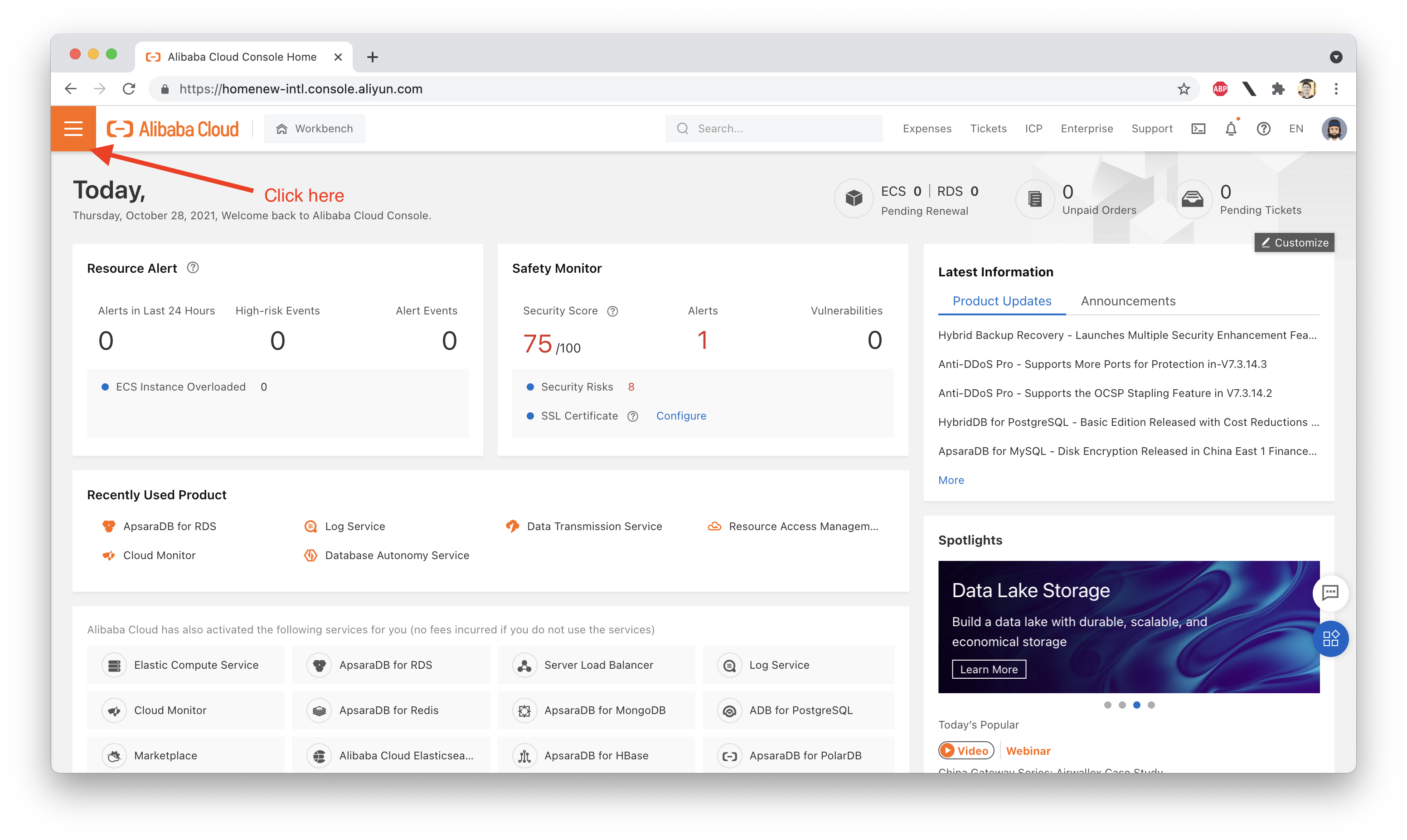

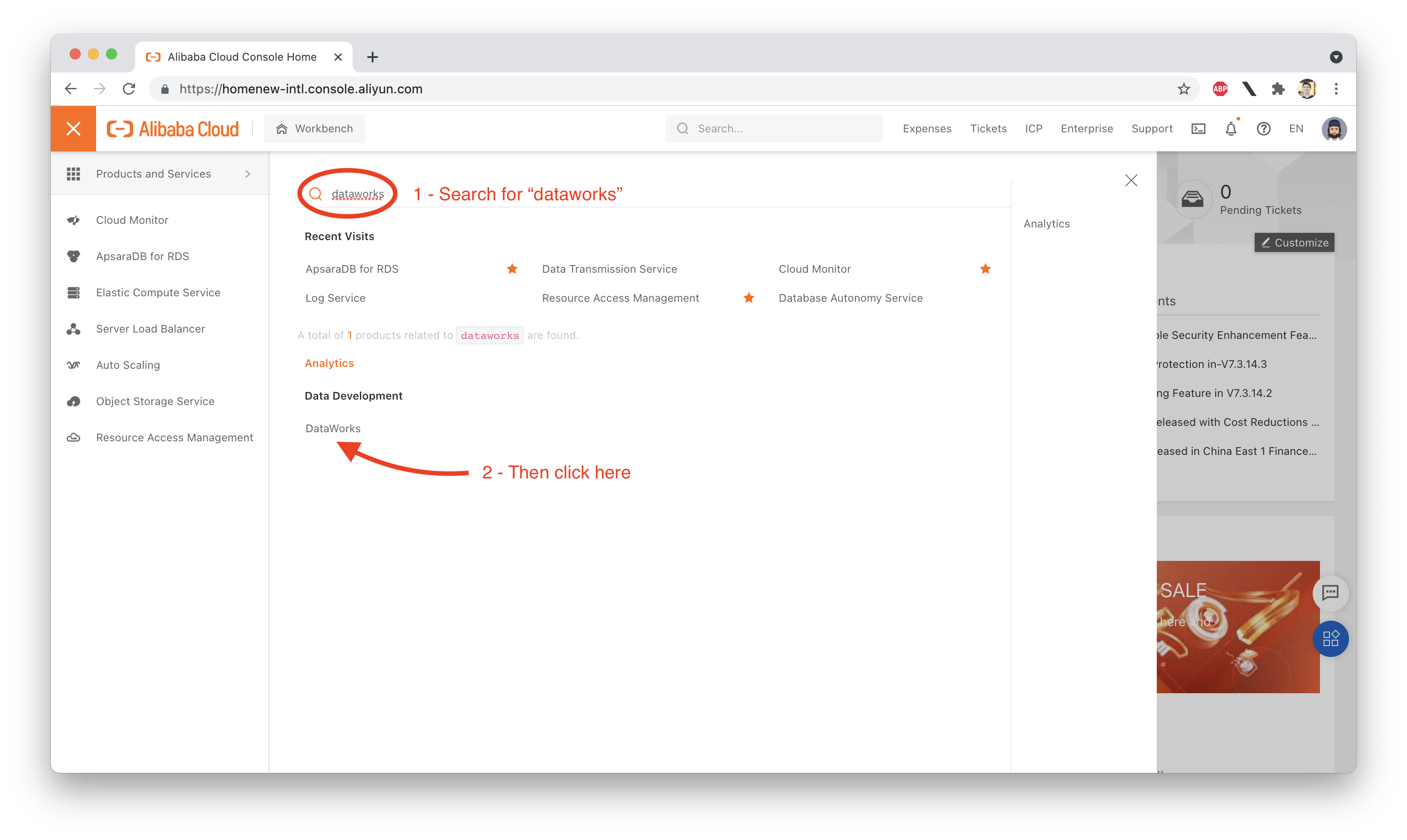

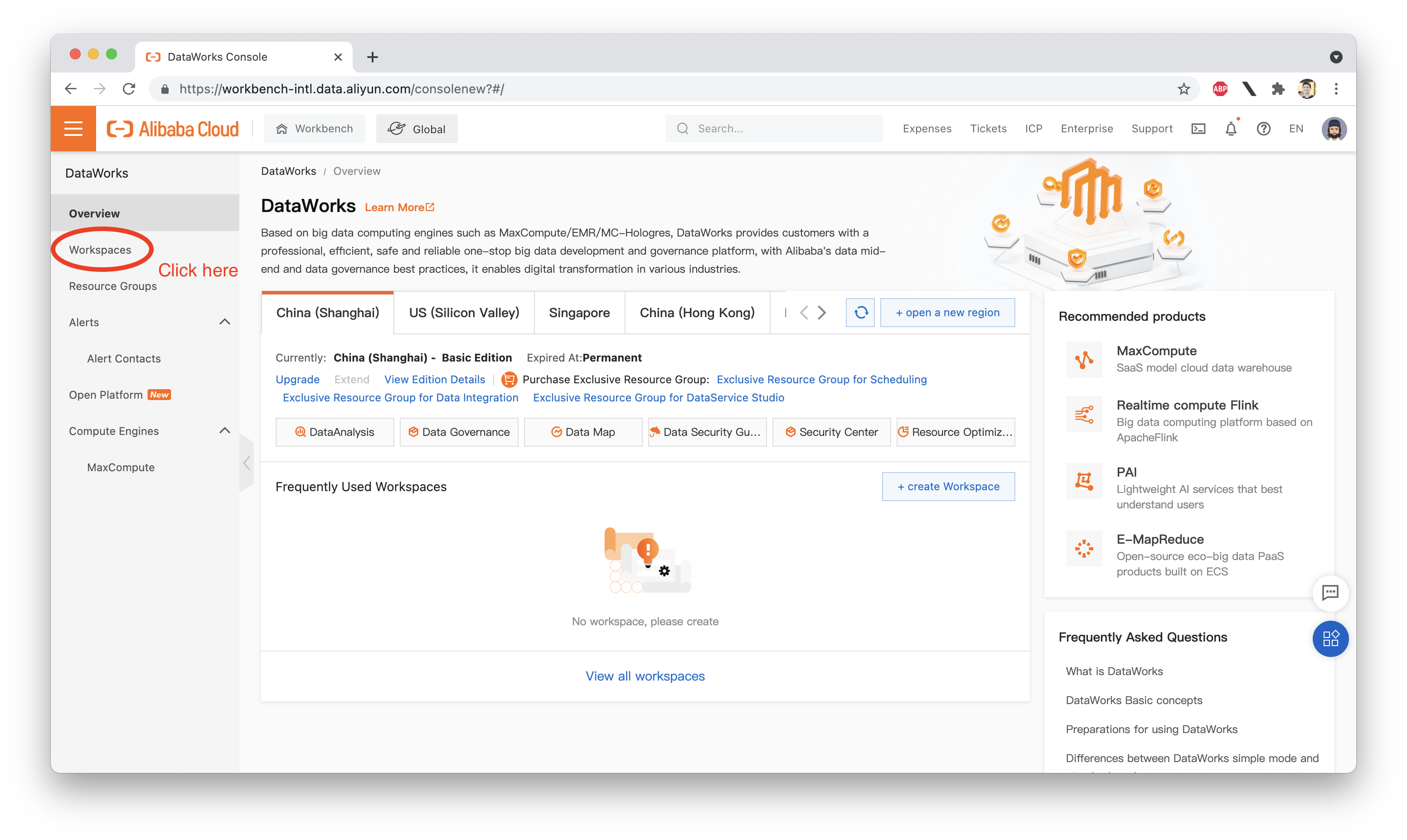

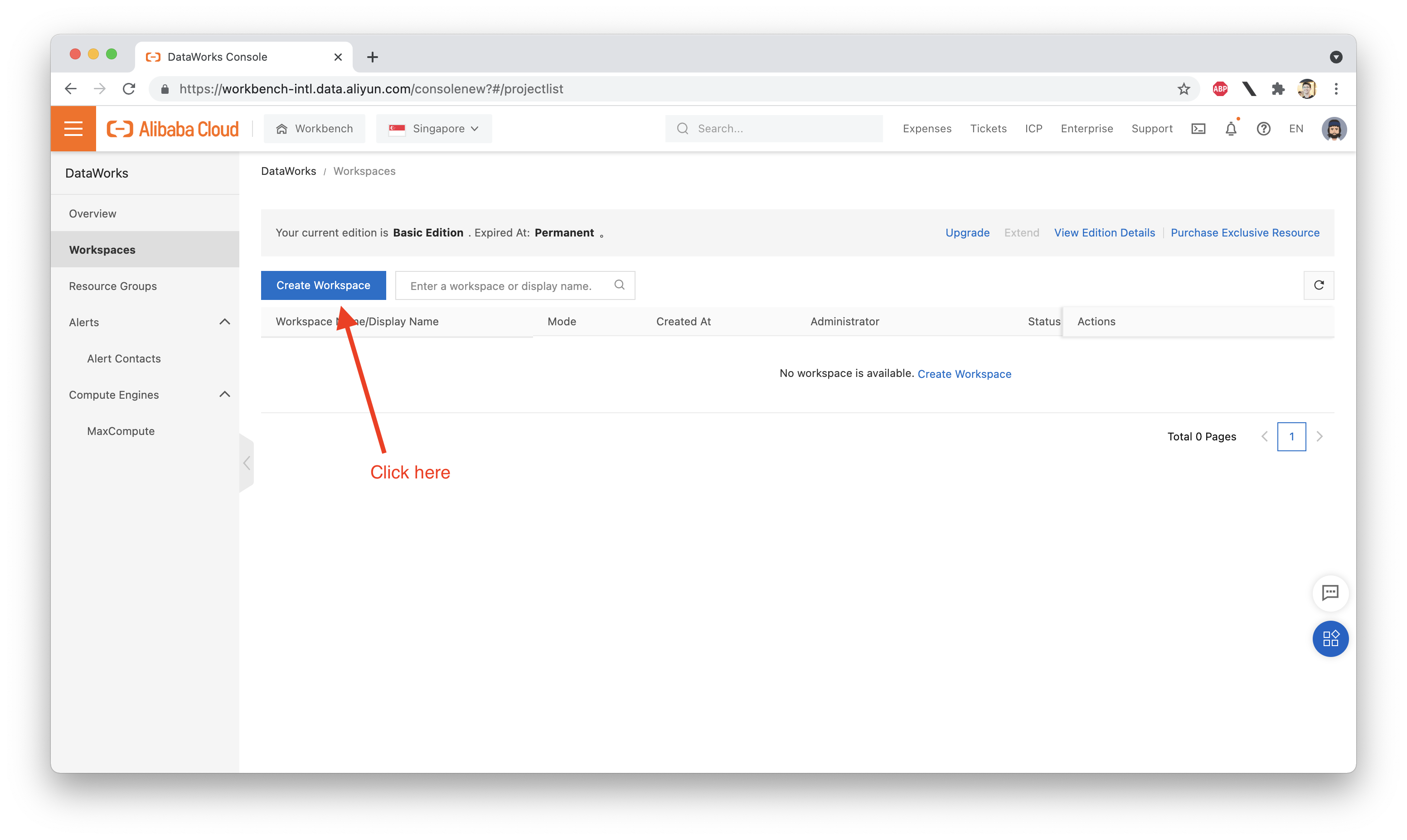

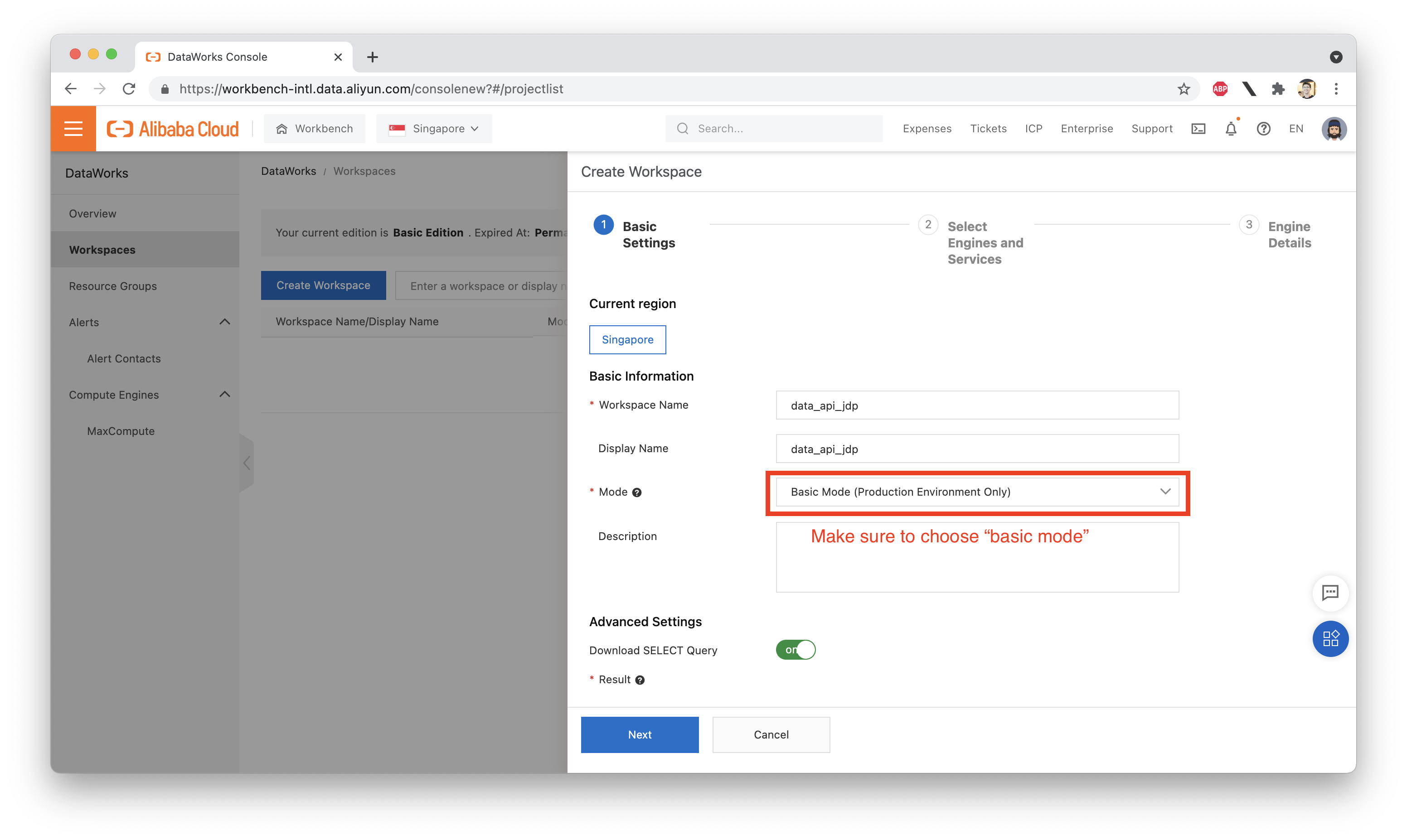

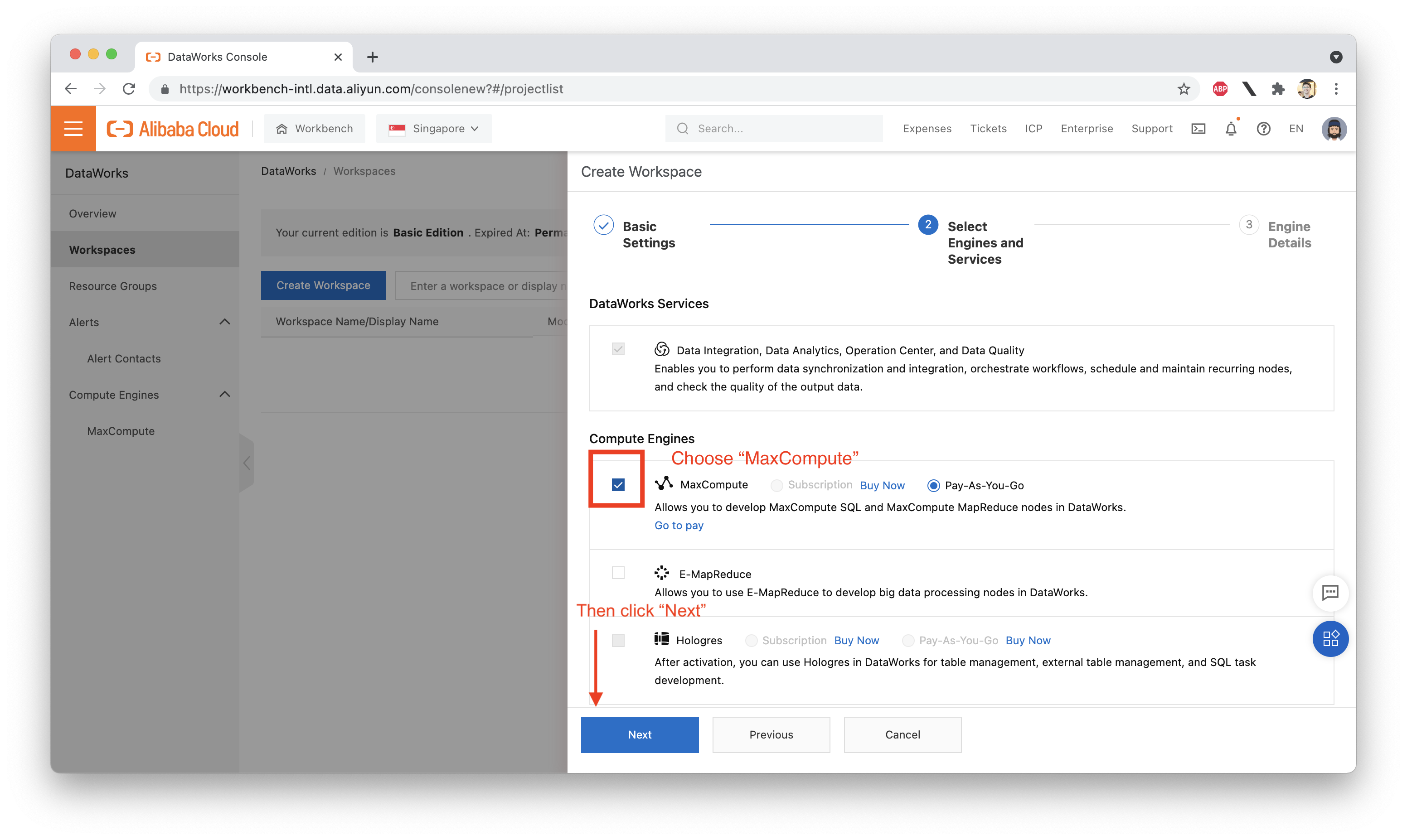

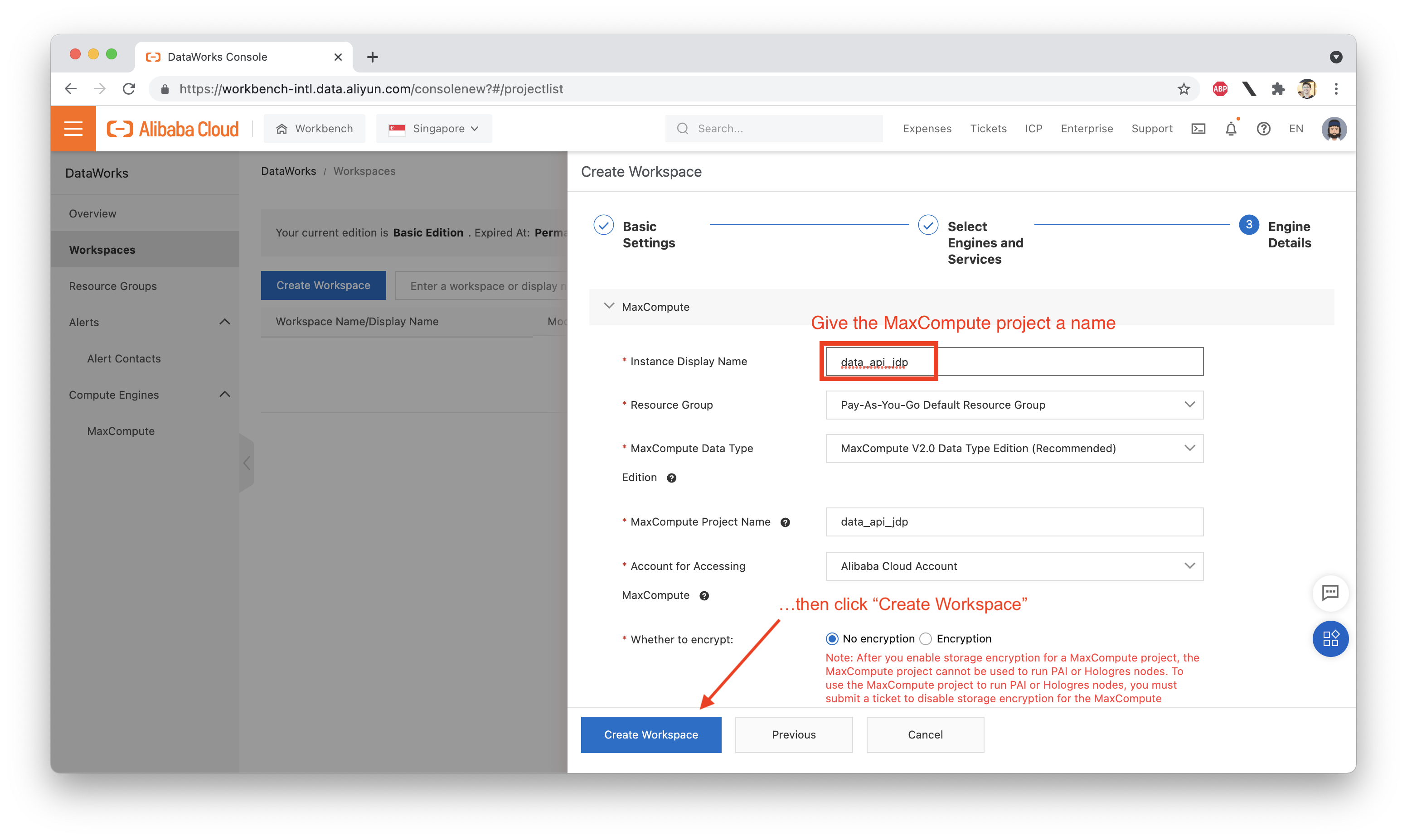

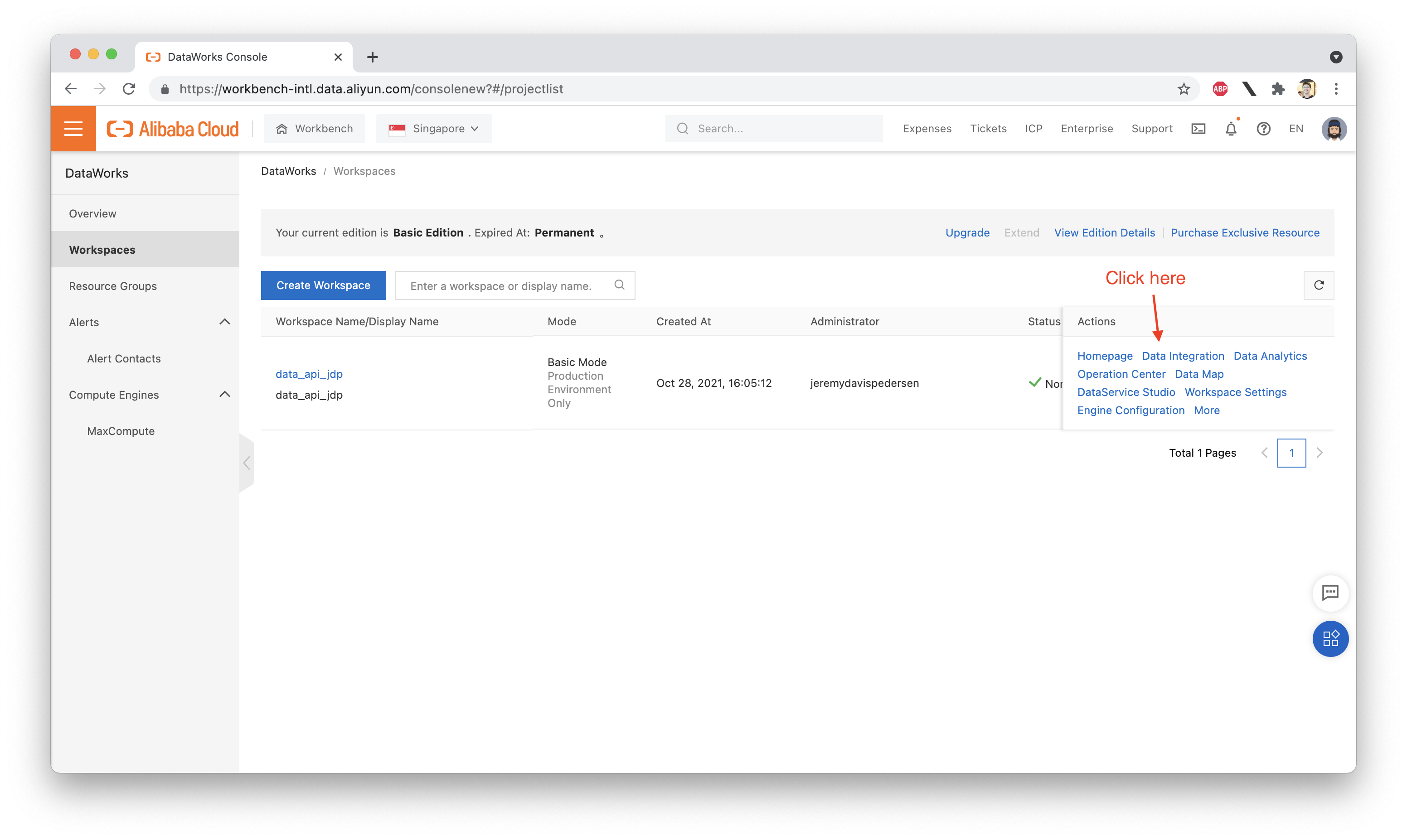

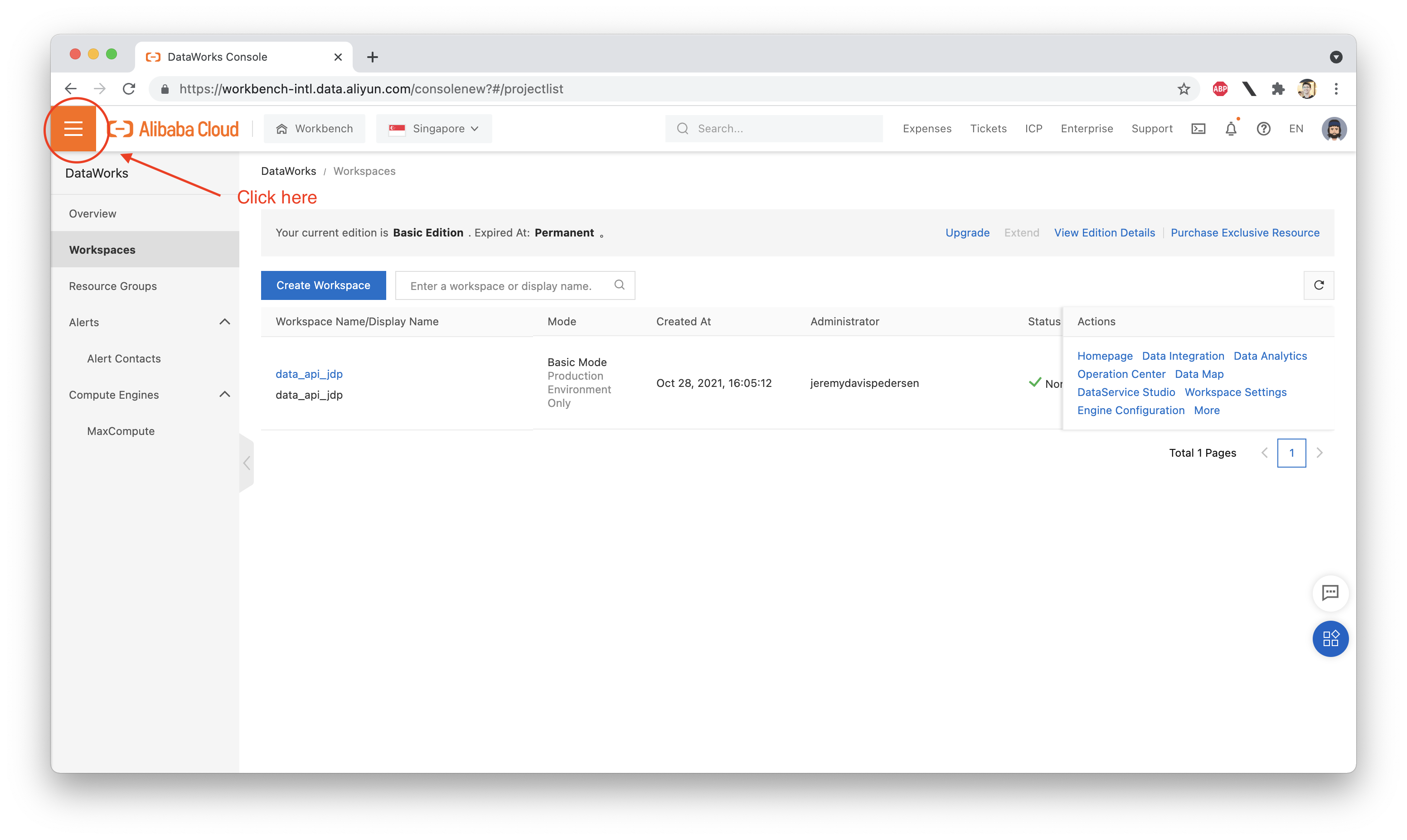

Because DataService Studio is a part of DataWorks, we must first set up a DataWorks Workspace.

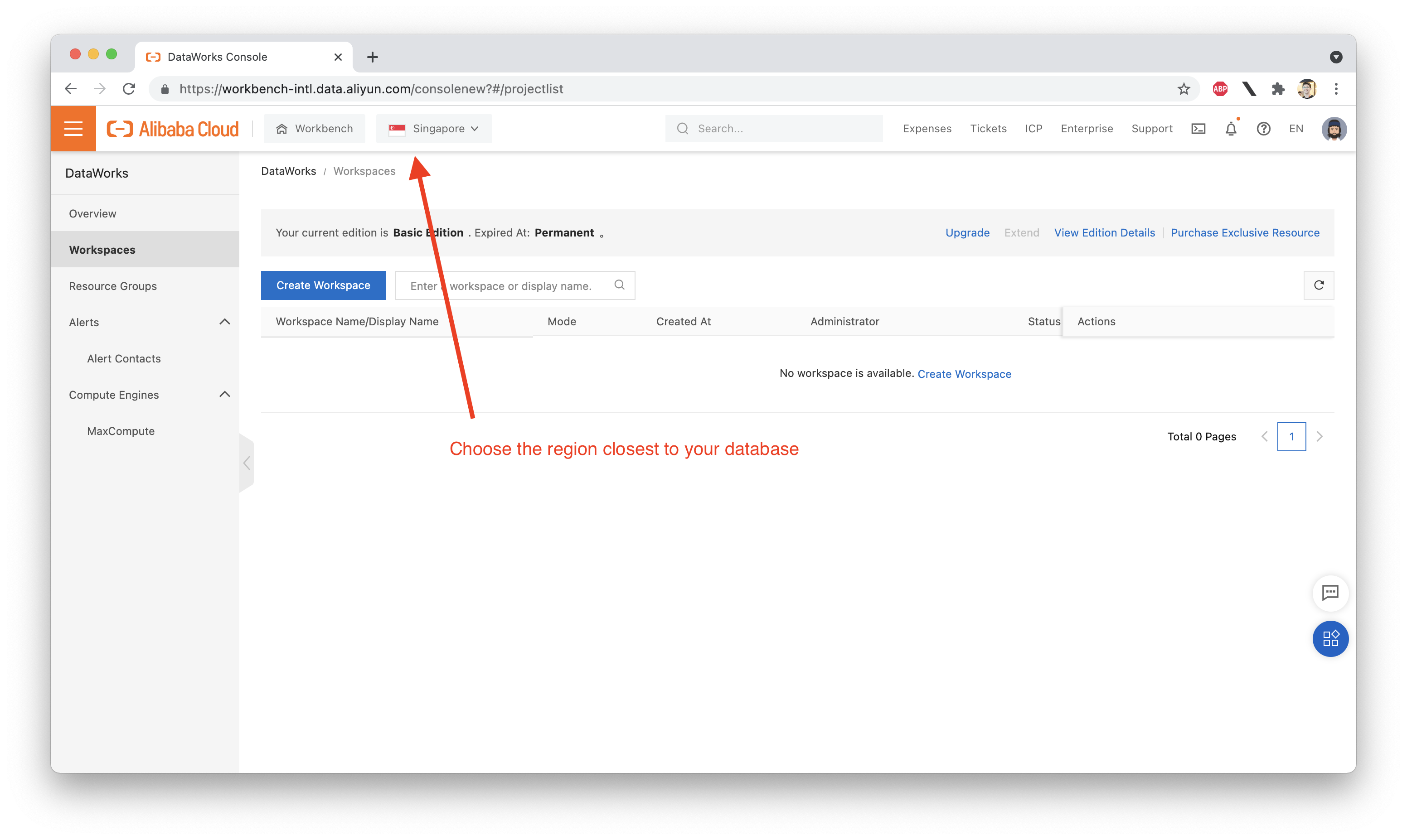

Typically, you will want to do this as close to your data source as possible. In my case, my RDS database (data source) is in Singapore, so I will create my DataWorks Workspace in Singapore. Follow along with the screenshots below to set up your Workspace (note the Workspace and MaxCompute project names can be anything you like):

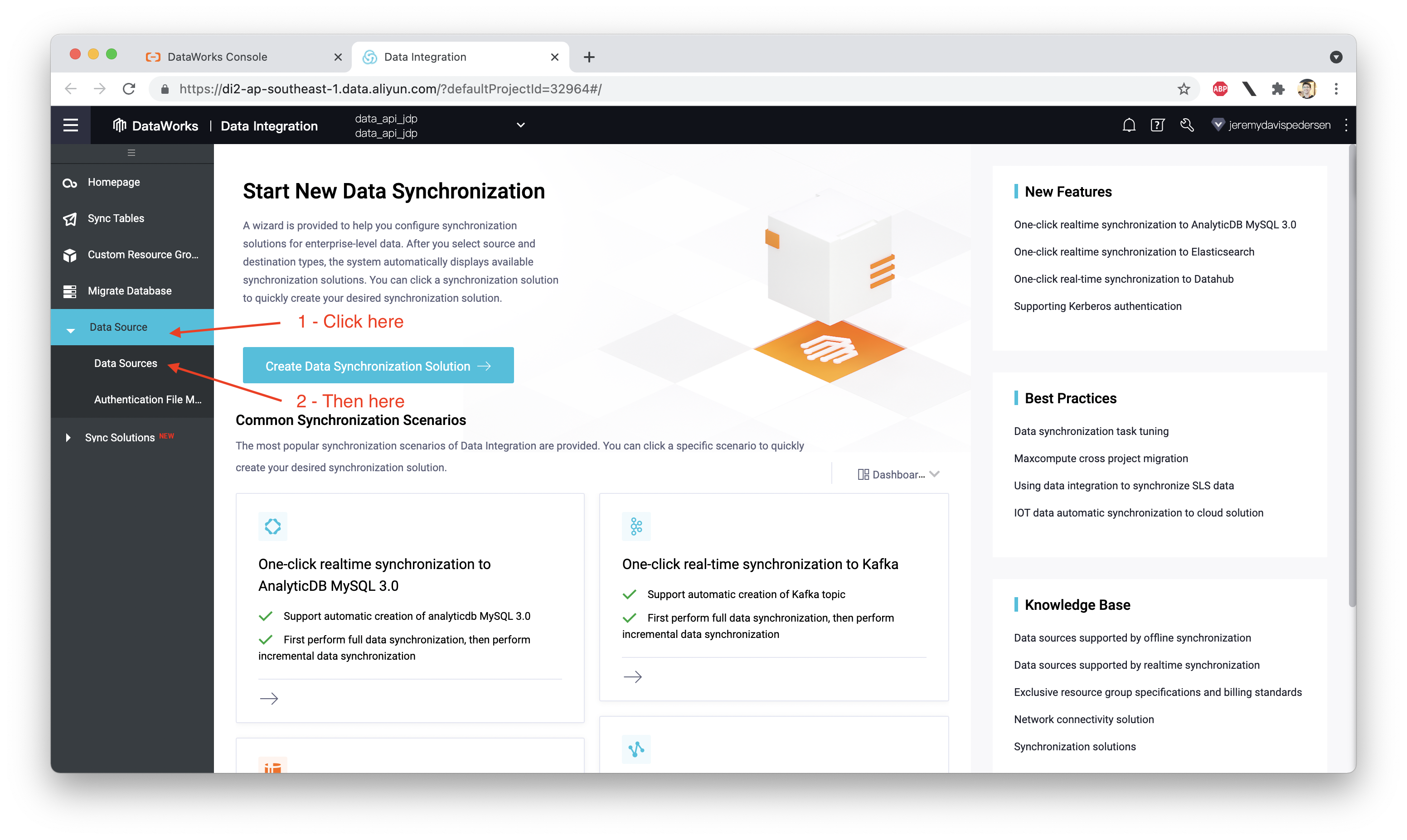

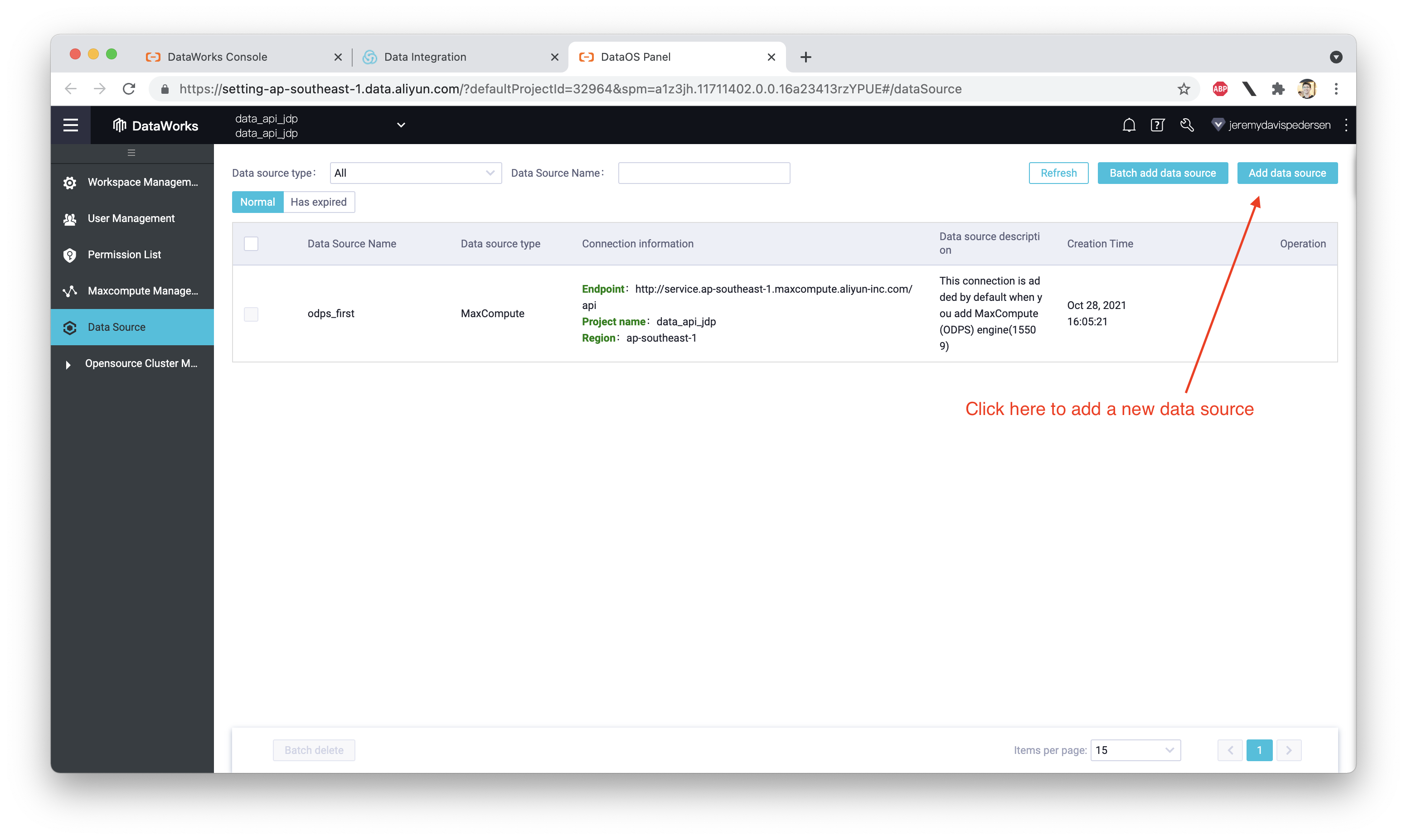

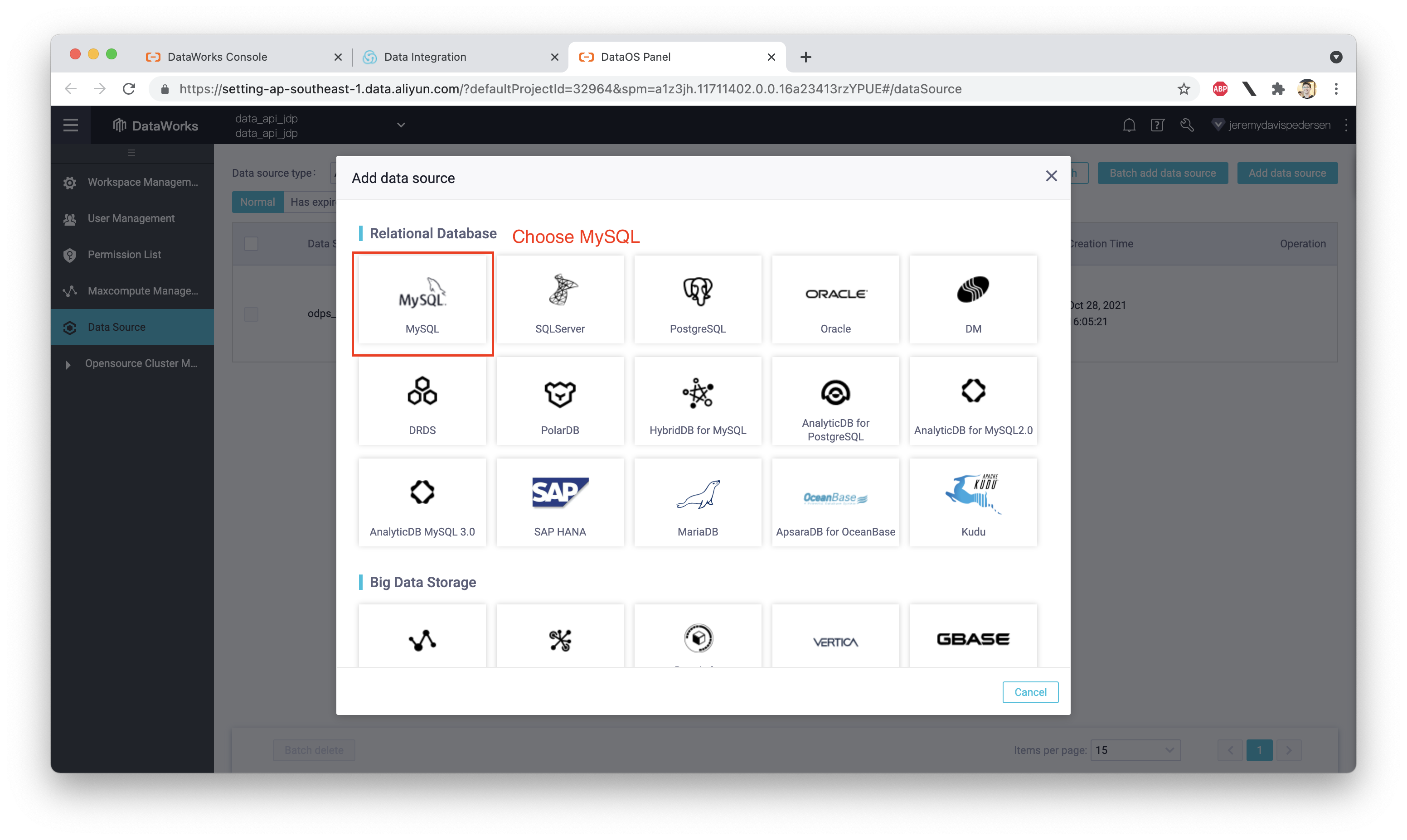

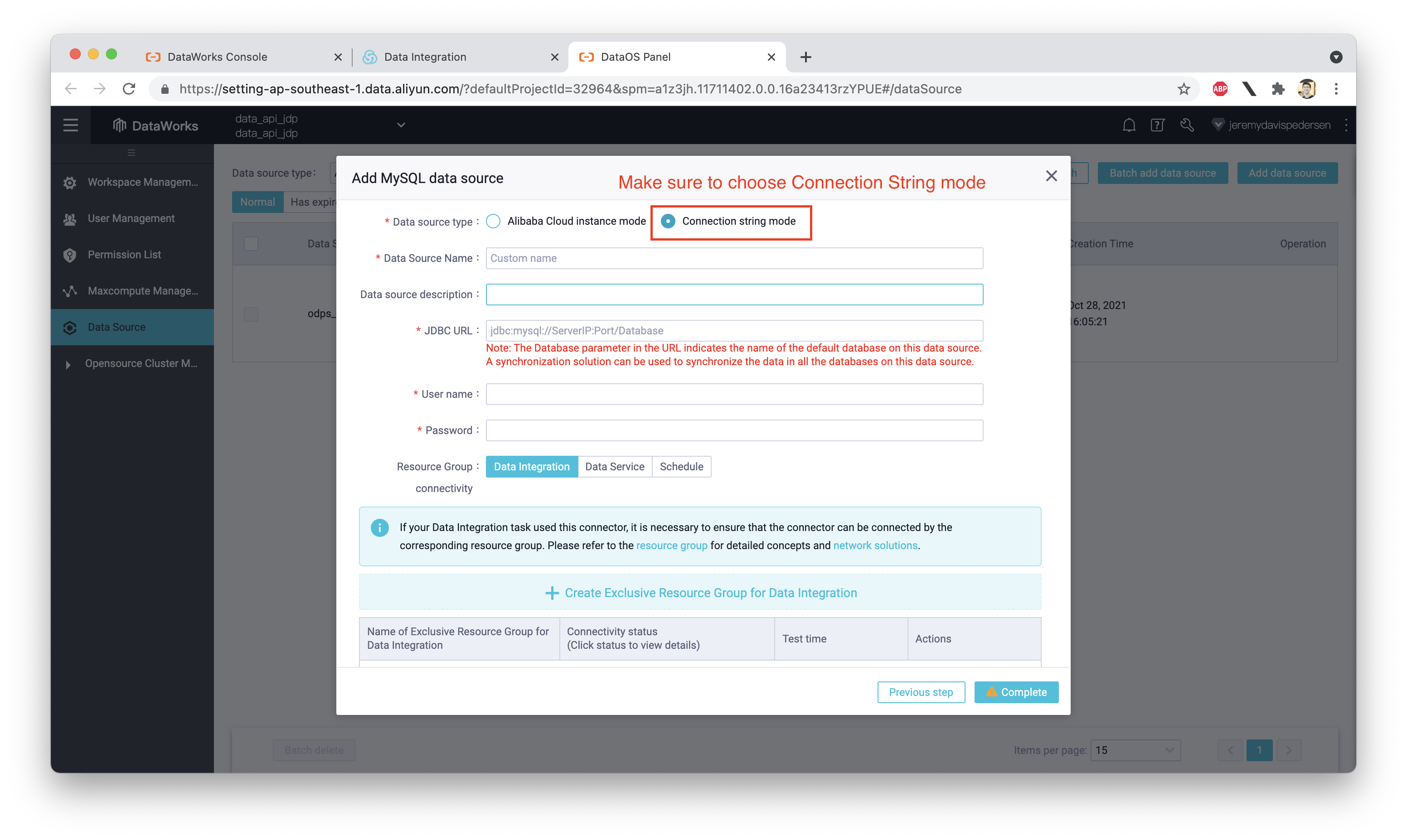

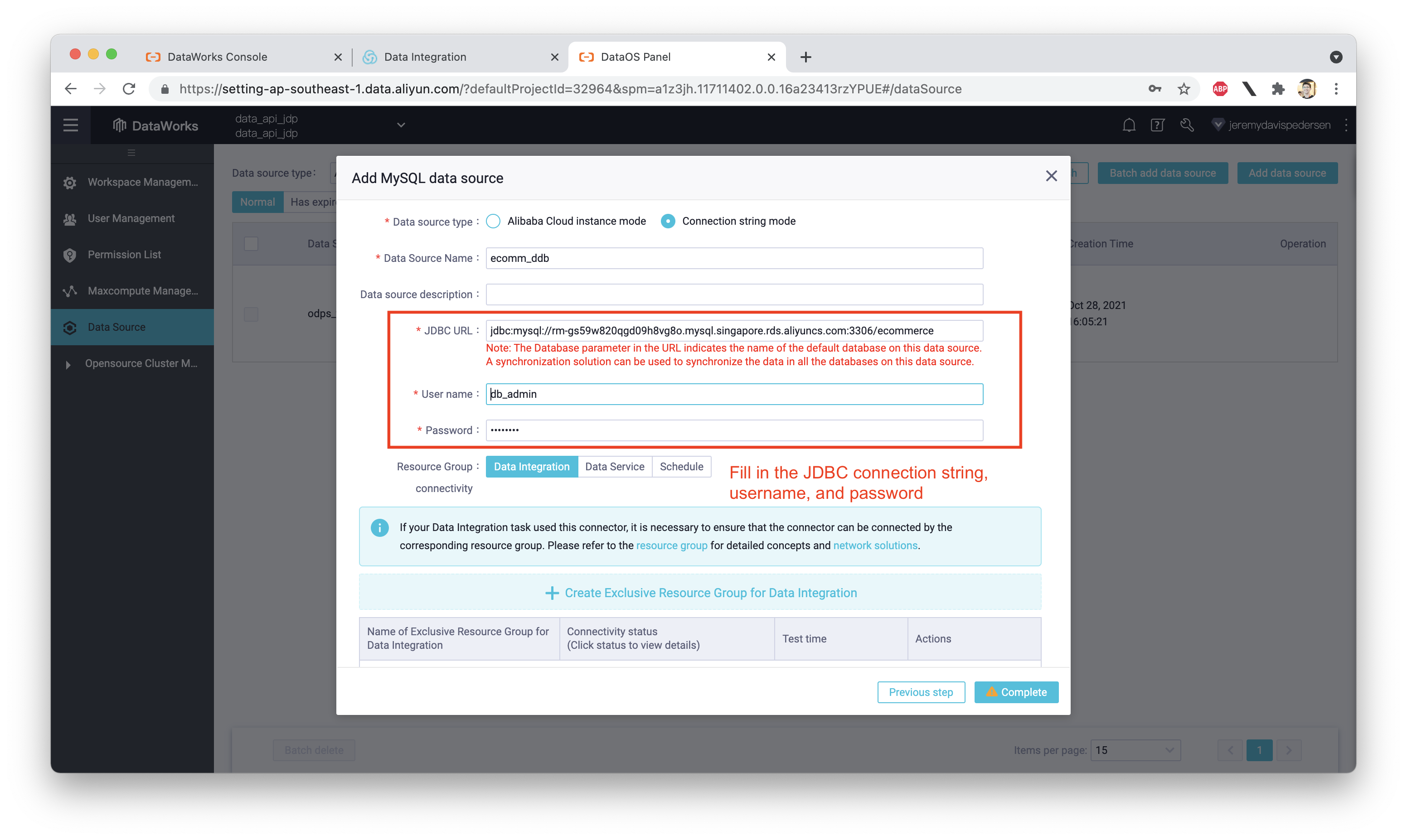

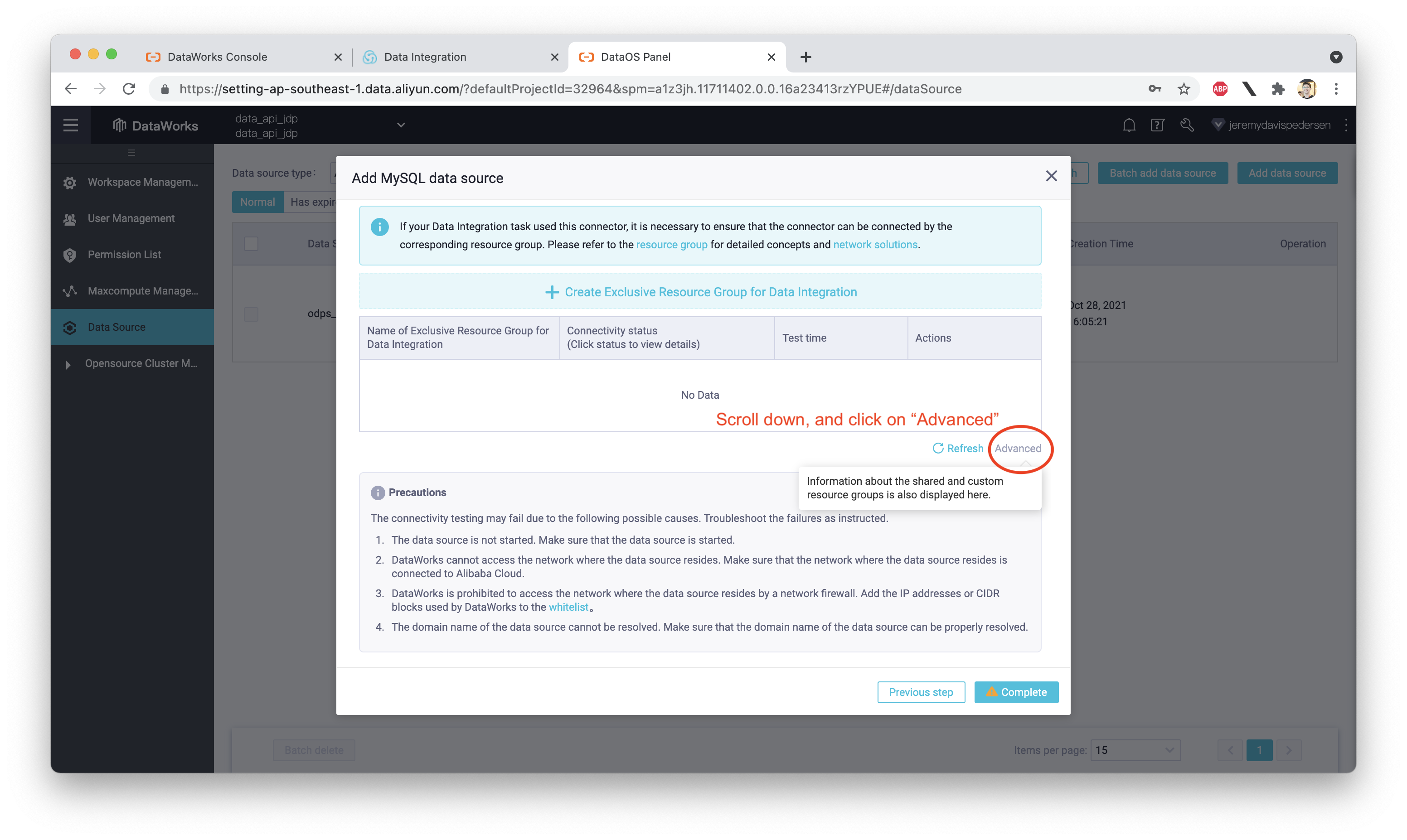

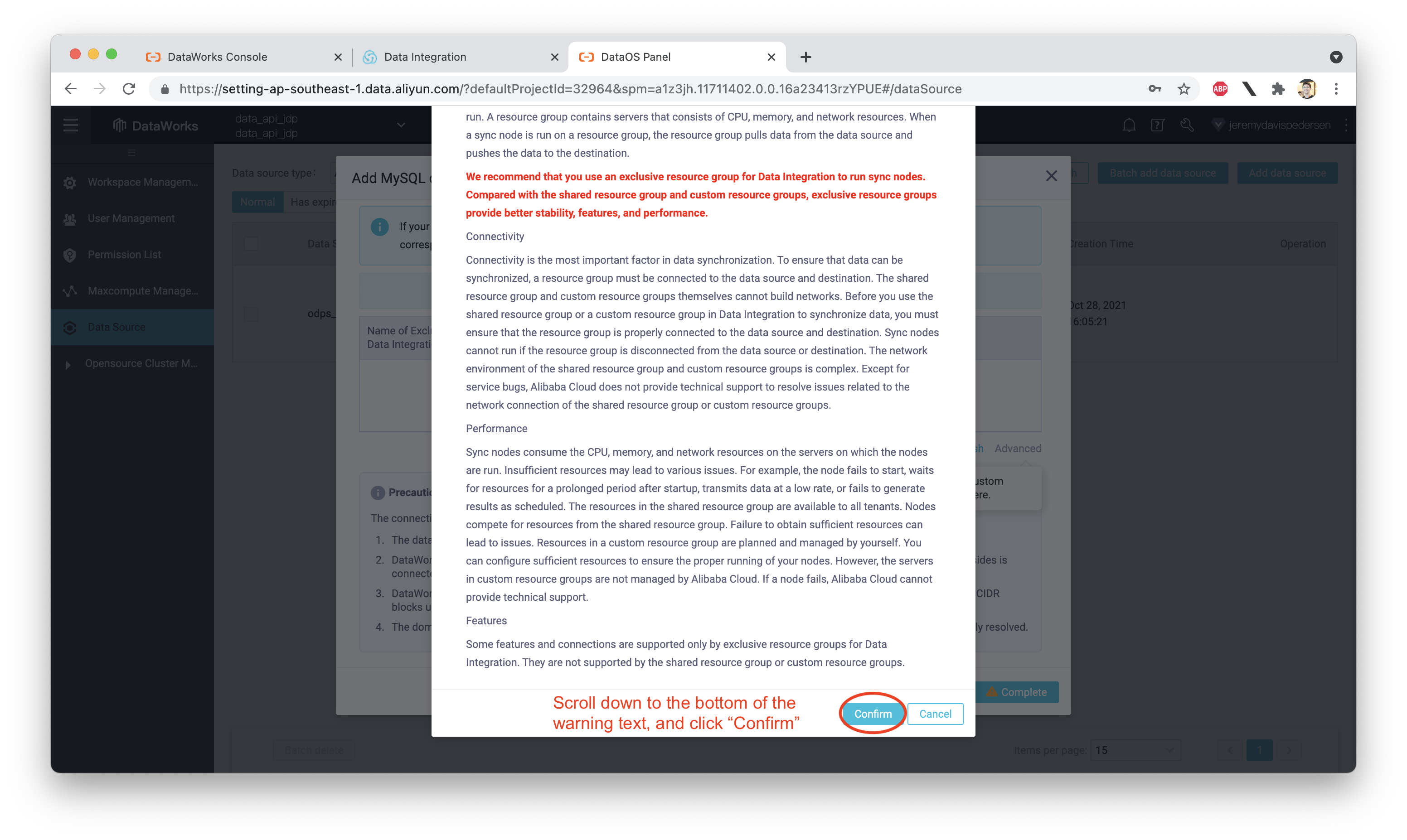

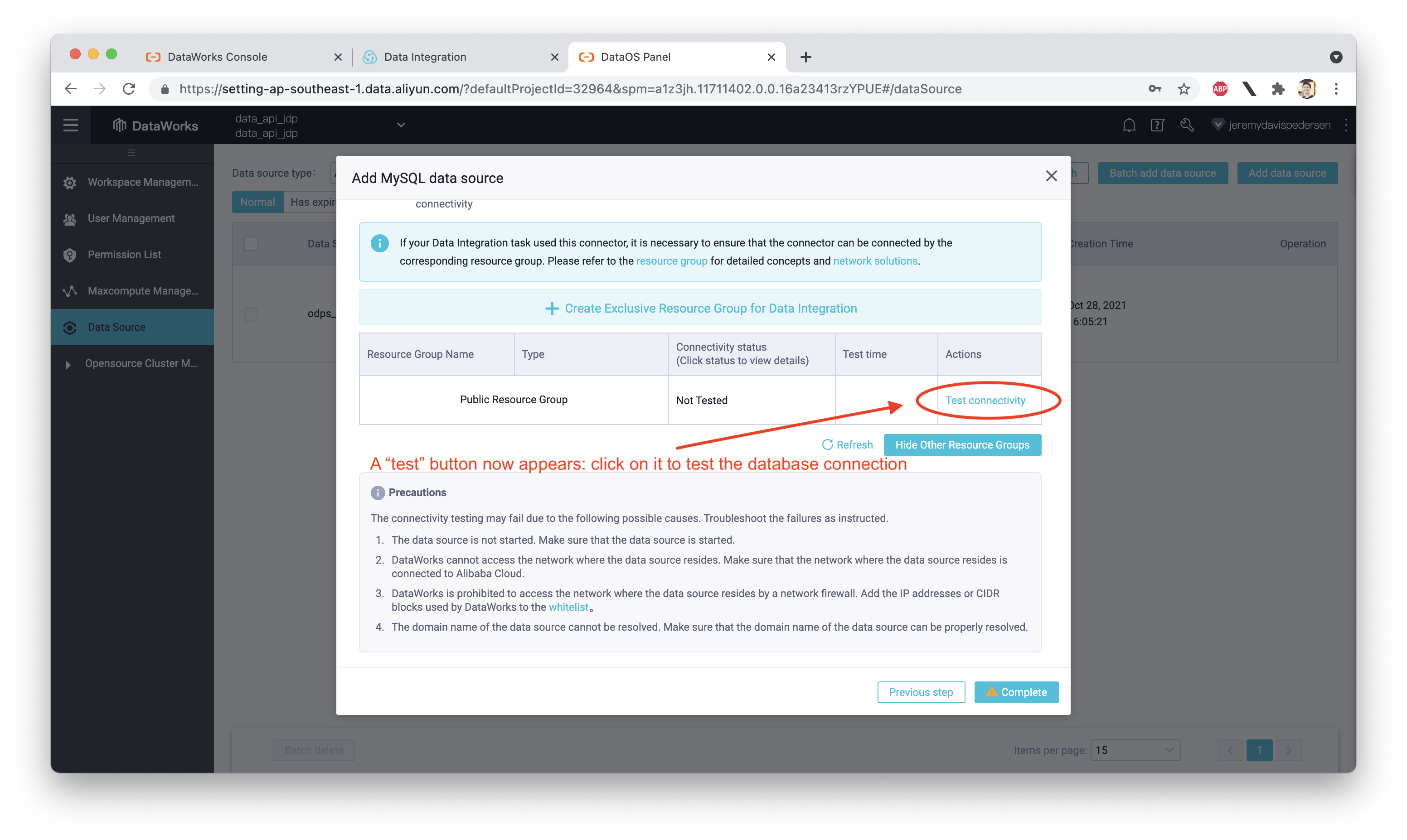

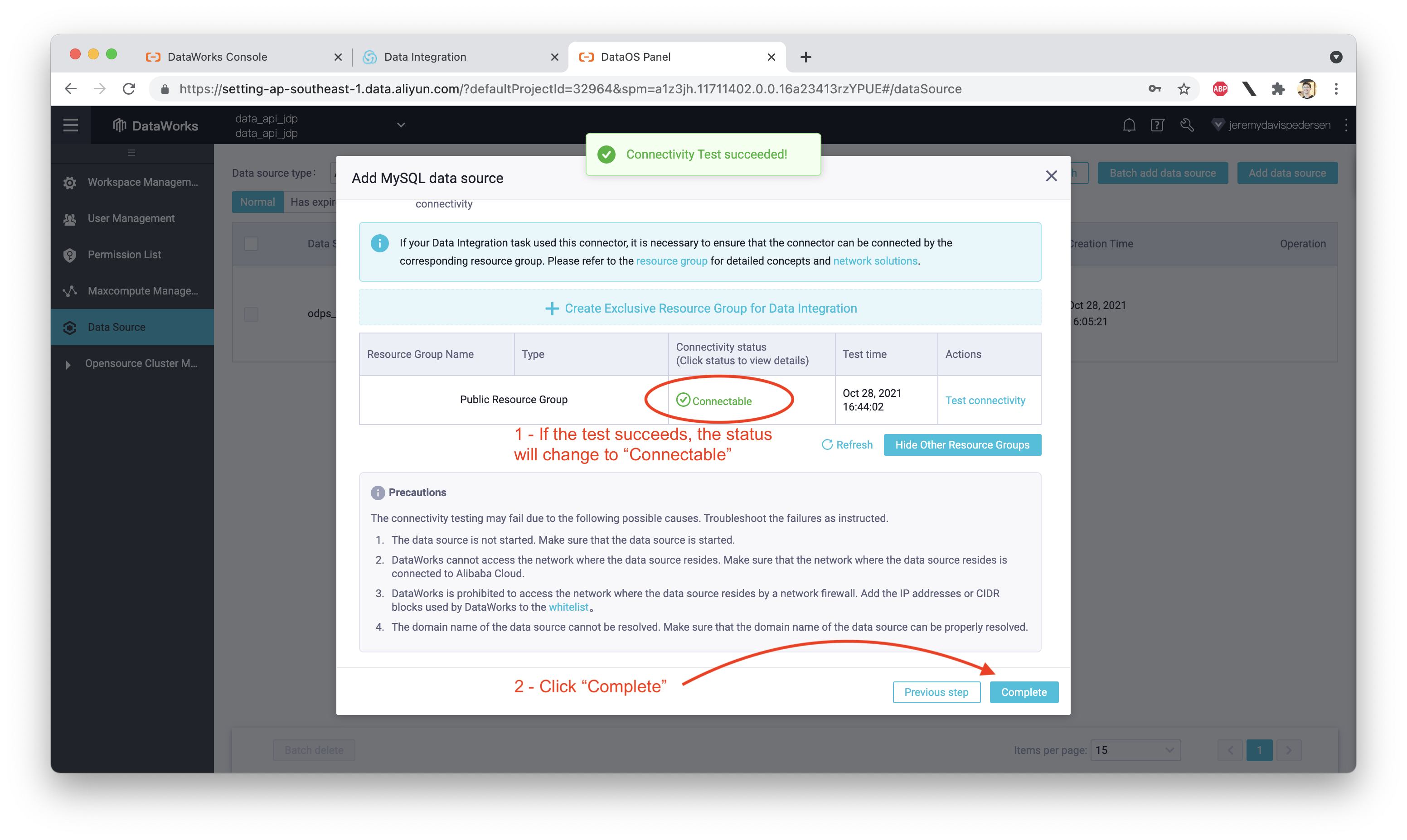

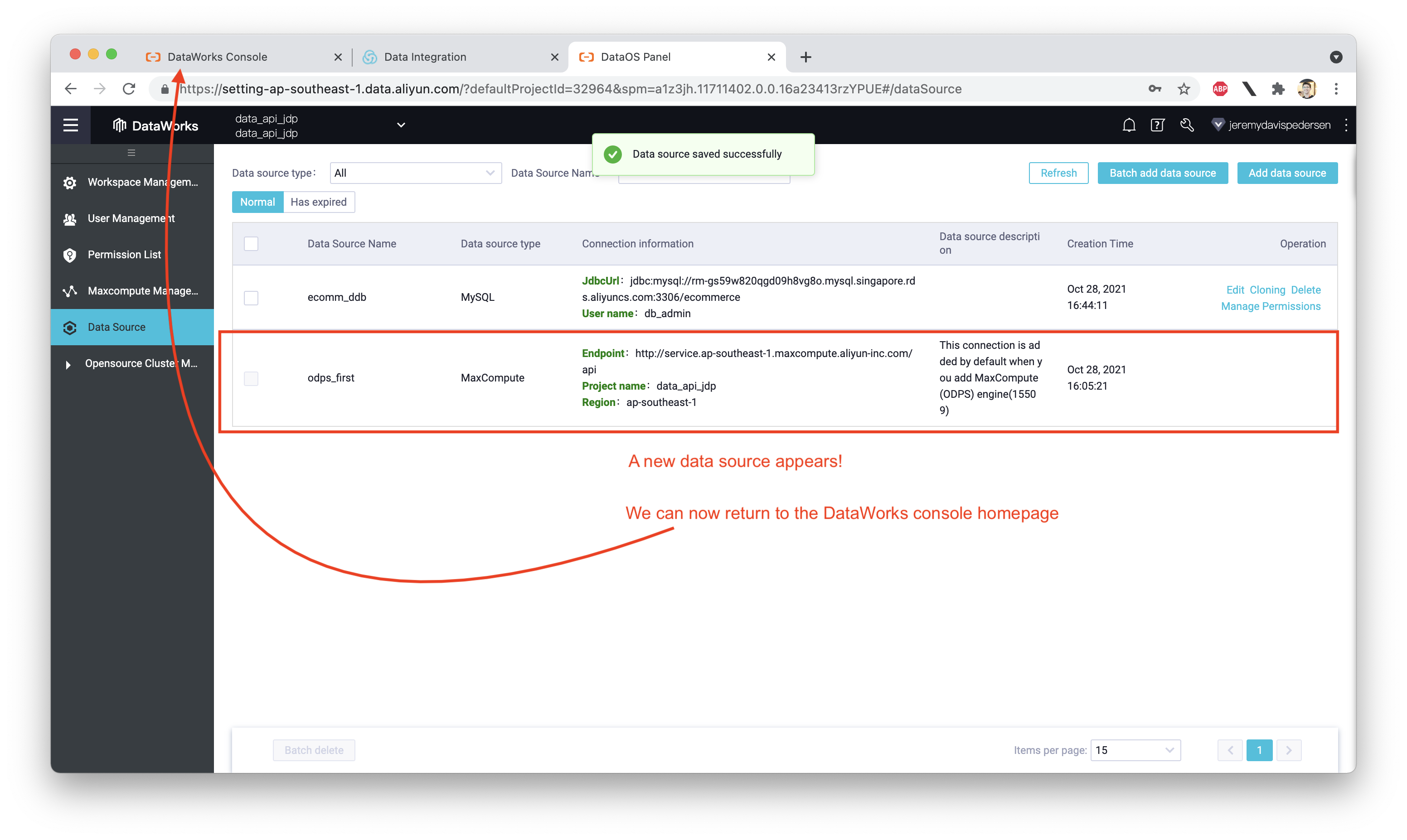

For DataService Studio to work properly, we need to add our MySQL data source to the DataWorks project. If DataService Studio cannot query the source database, it won't be able to respond to API requests as they come in.

We can set up a new data source using the DataWorks Data Integration Console:

Since we're simulating a self-built database (or a database not hosted on Alibaba Cloud), you should make sure that:

Great! With our DataWorks project up and running and our data source configured, we are well on our way to getting a Data API up and running.

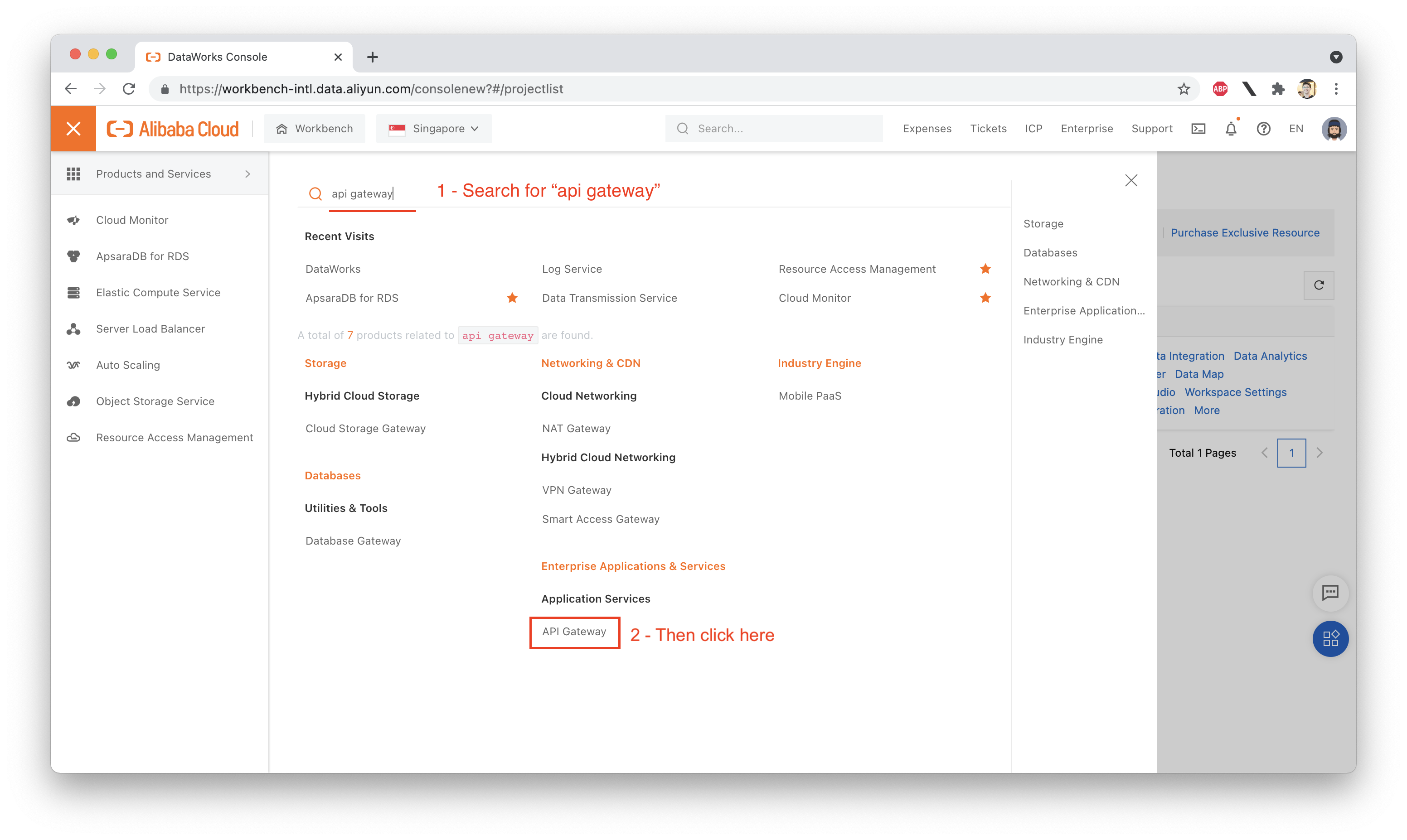

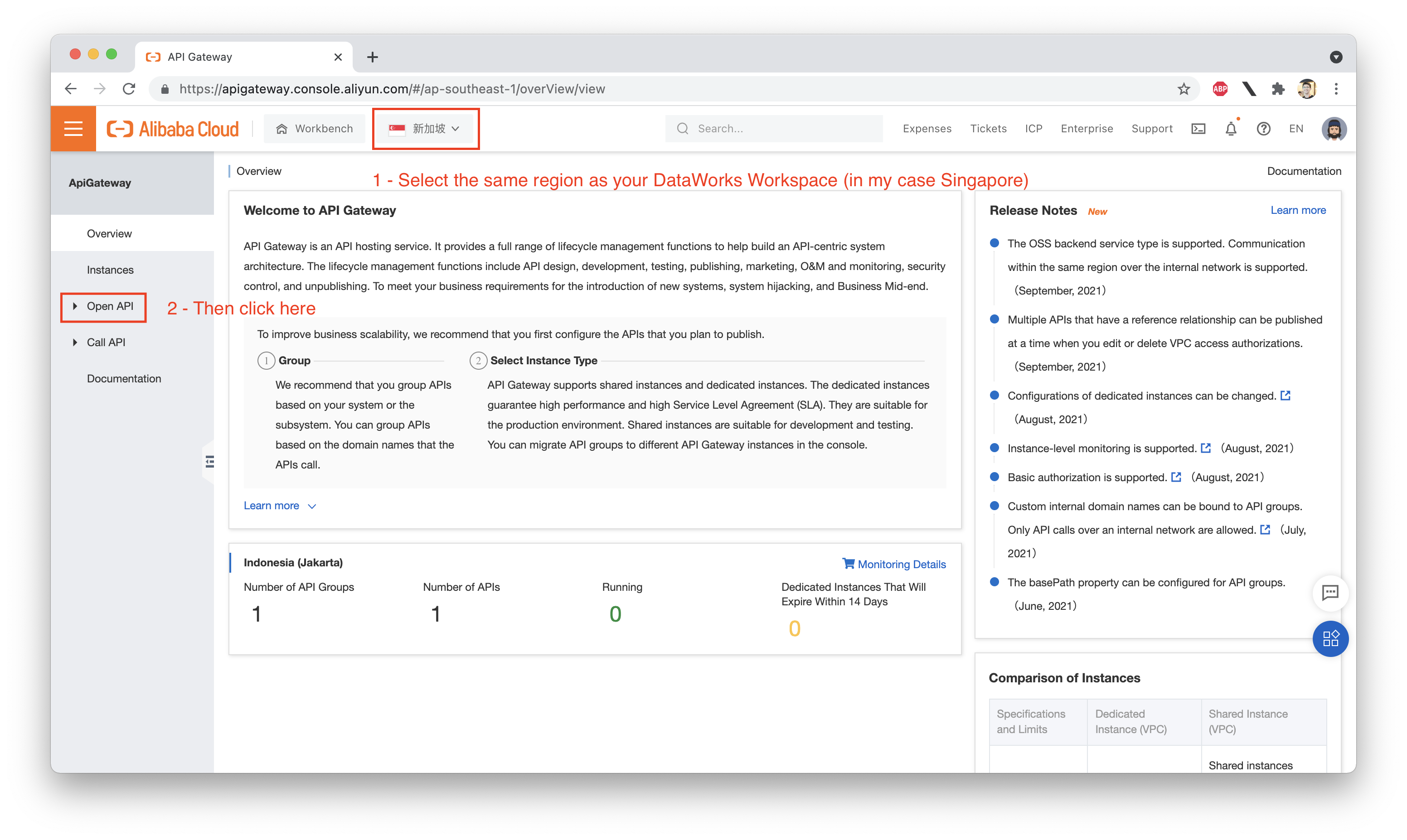

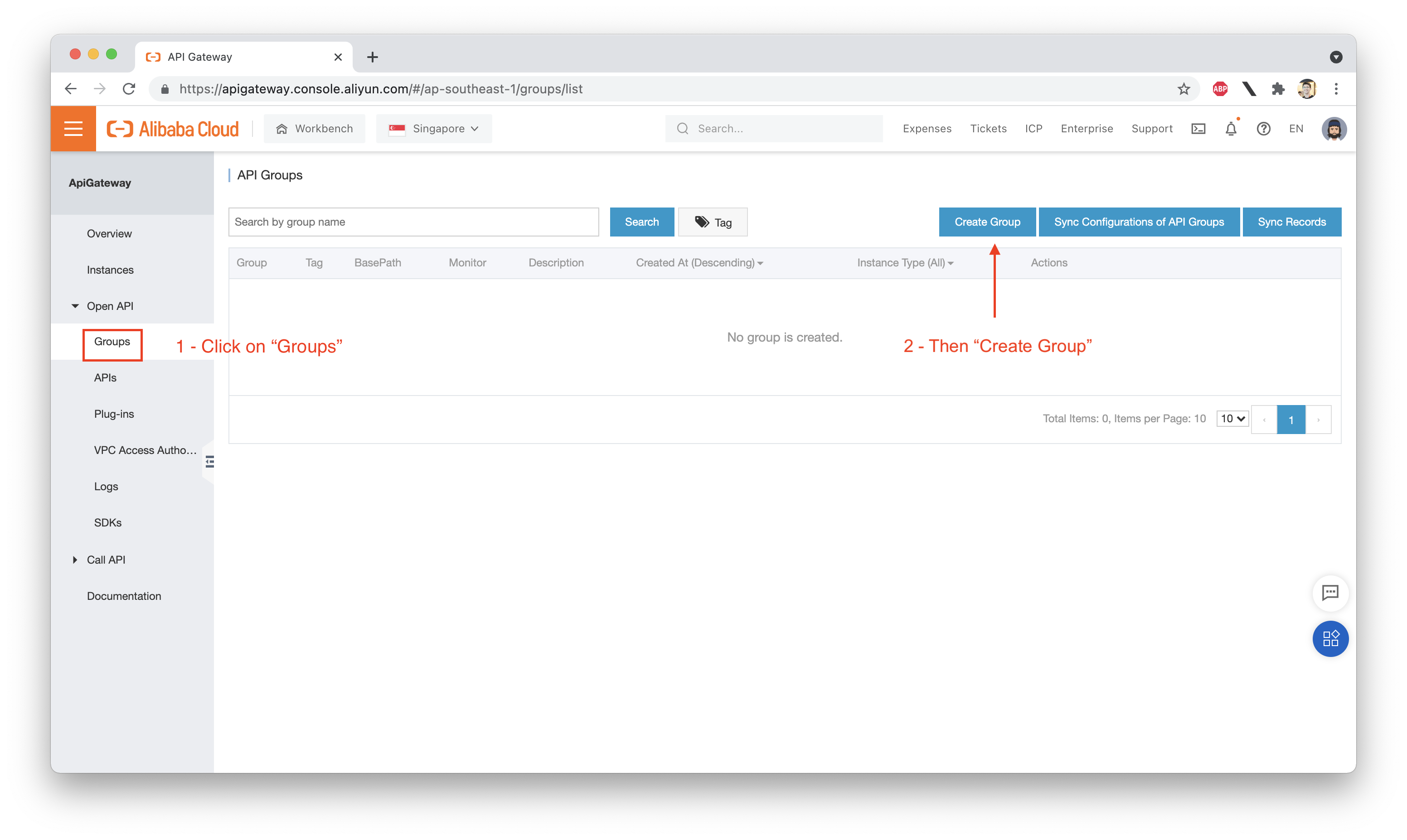

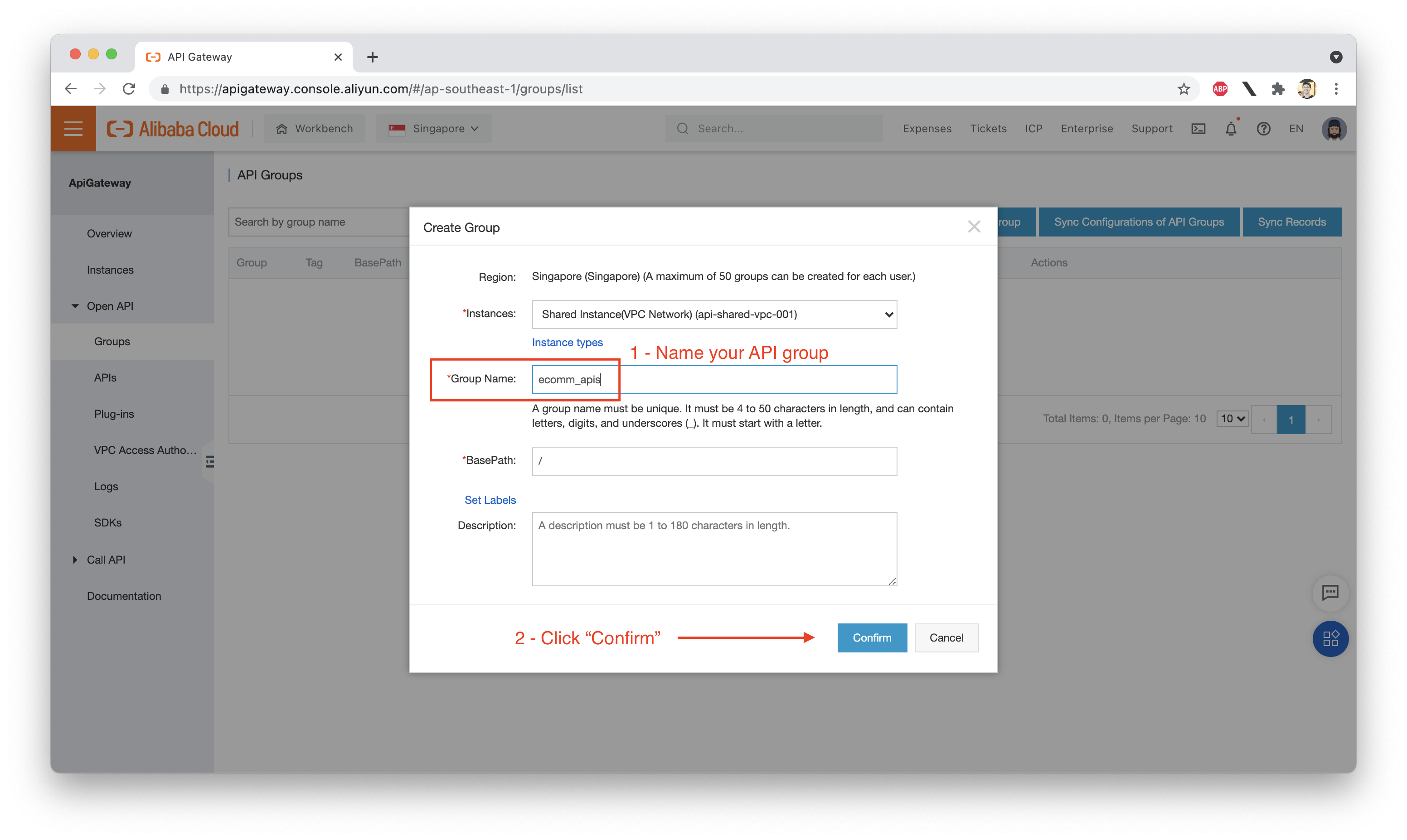

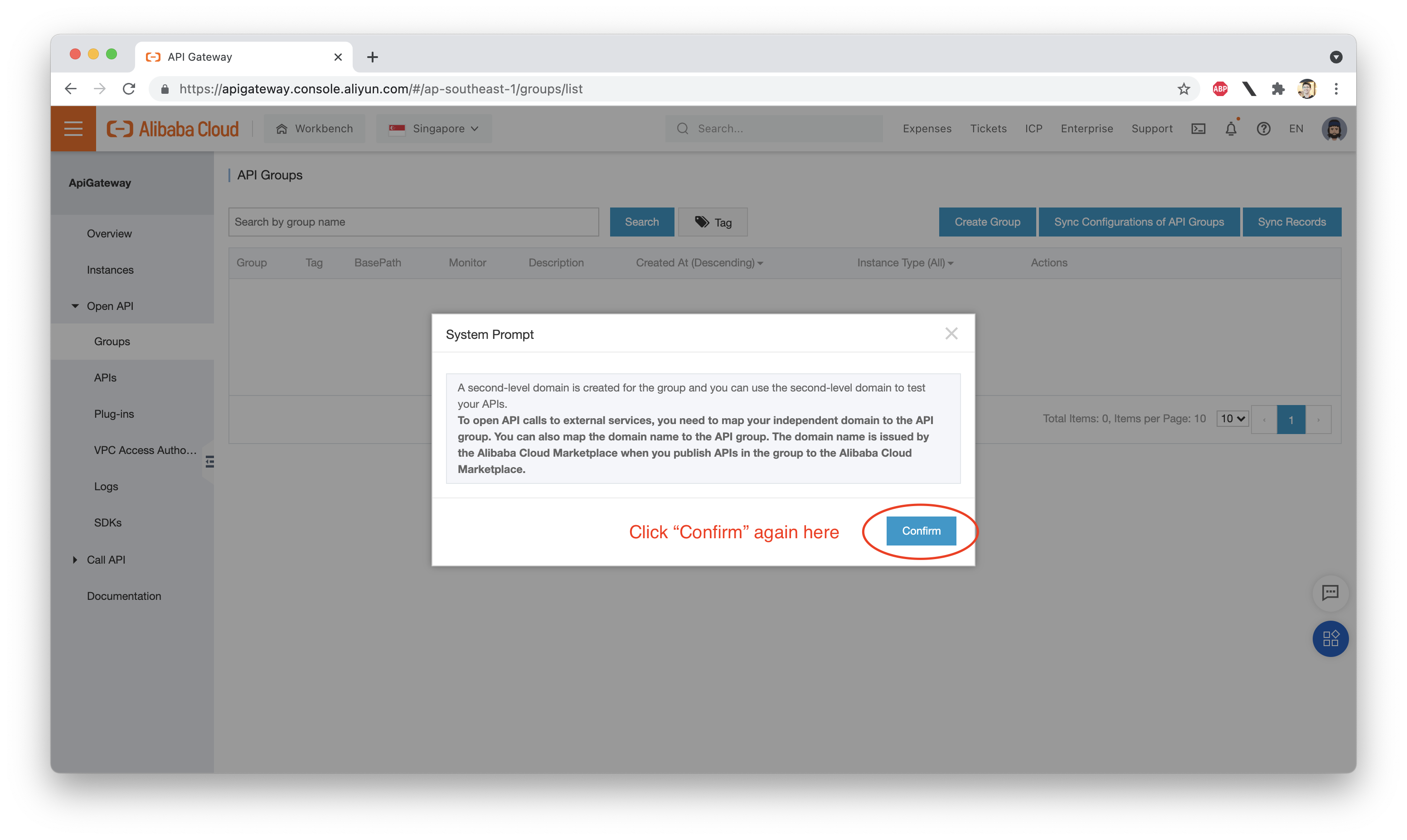

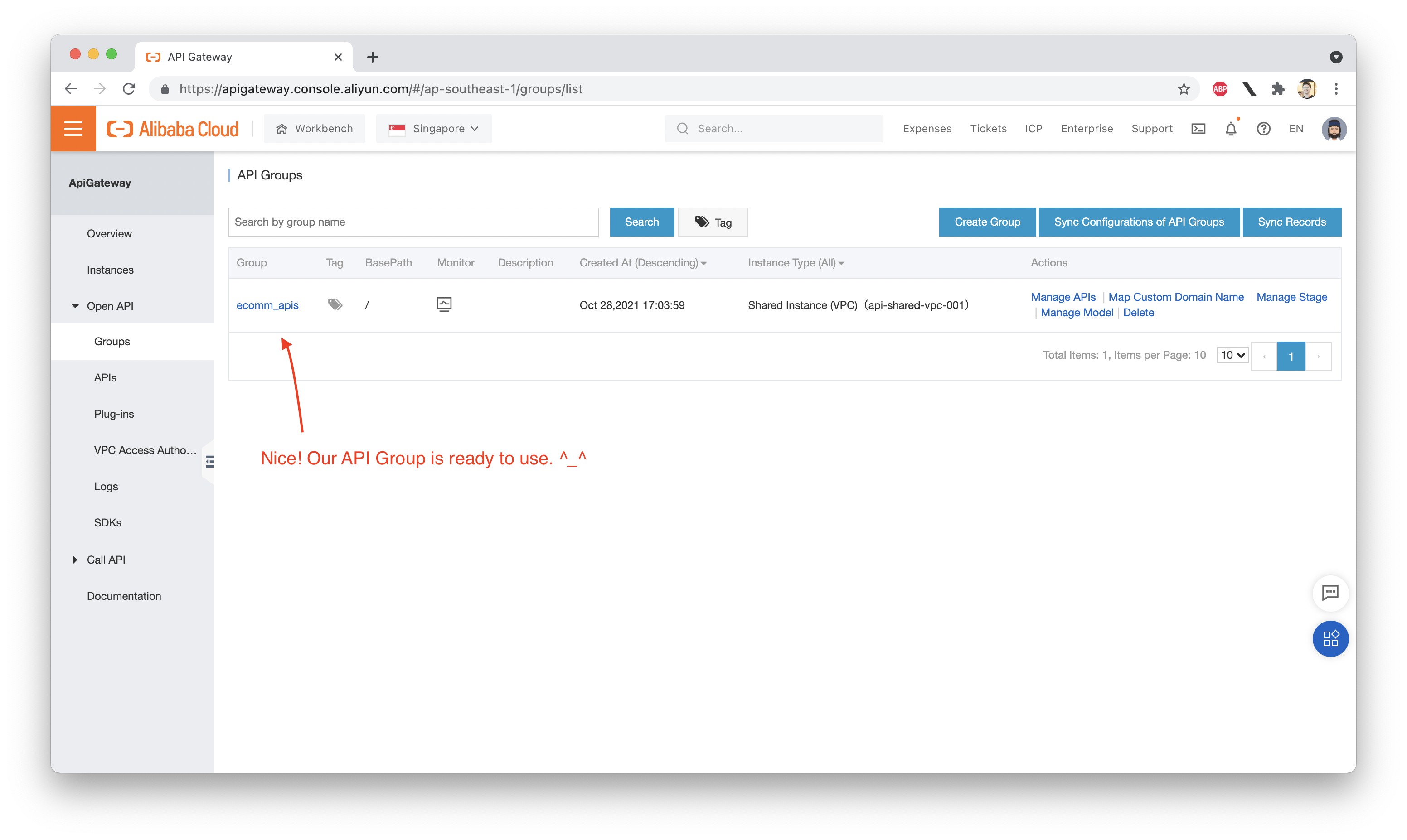

However, there is one more step we need to take care of before diving back into the DataWorks console: we need to create an API Group inside the API Gateway console.

Although DataService Studio can create and publish APIs, it cannot automatically create the API Group that the APIs will be published into. We need to do that step manually from the API Gateway console. Again, make sure you create the API Group in the same region where your DataWorks project is deployed. In my case, that's Singapore:

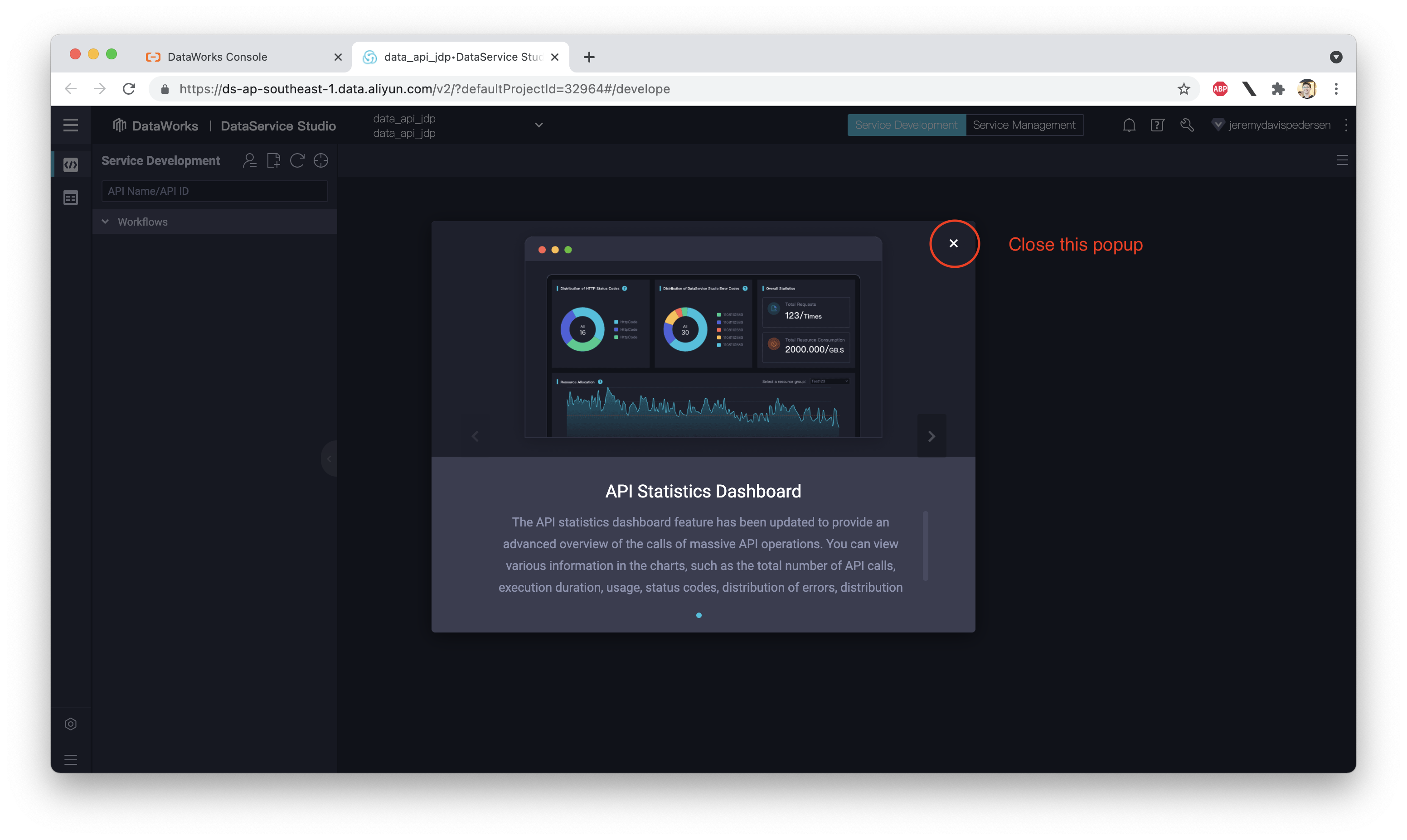

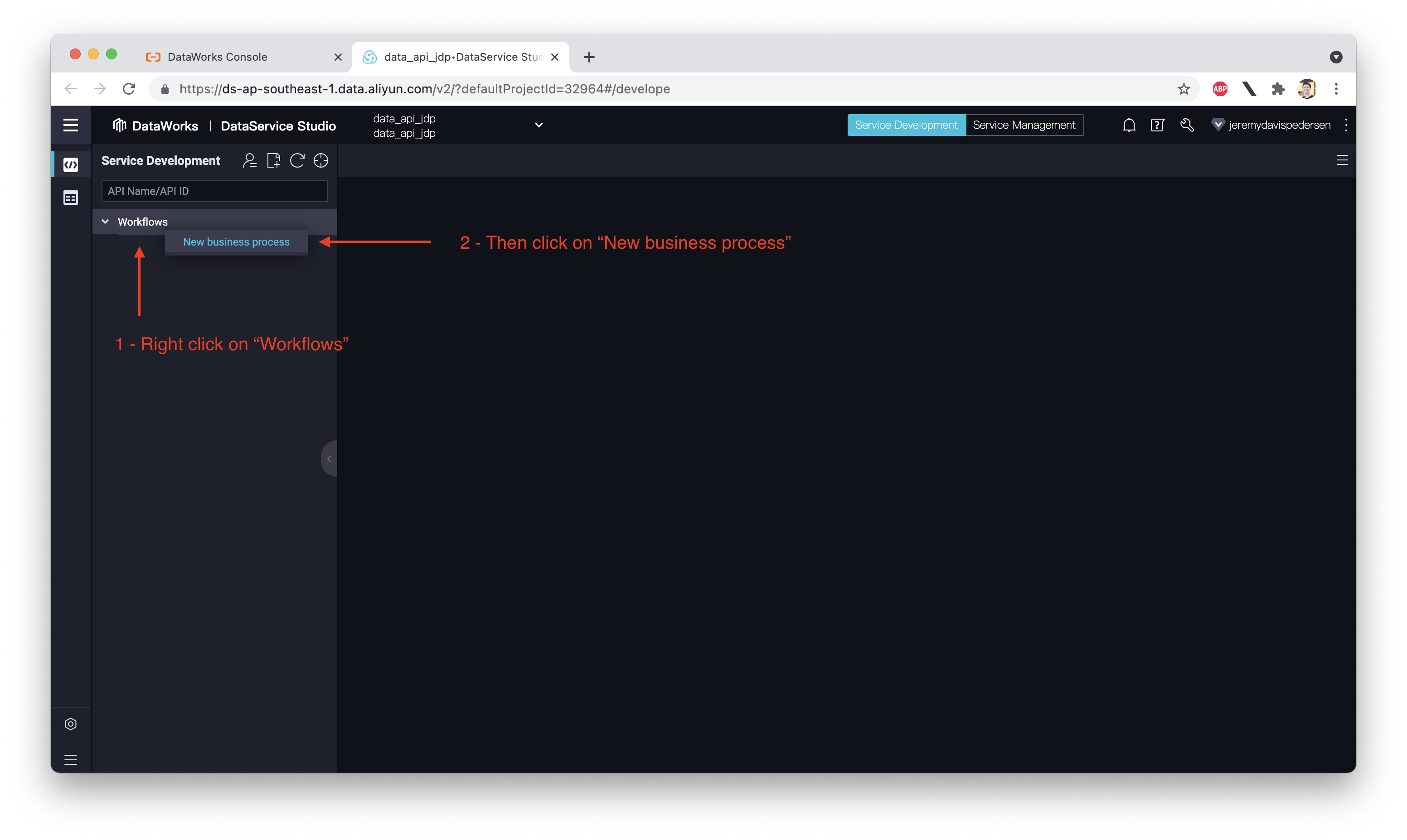

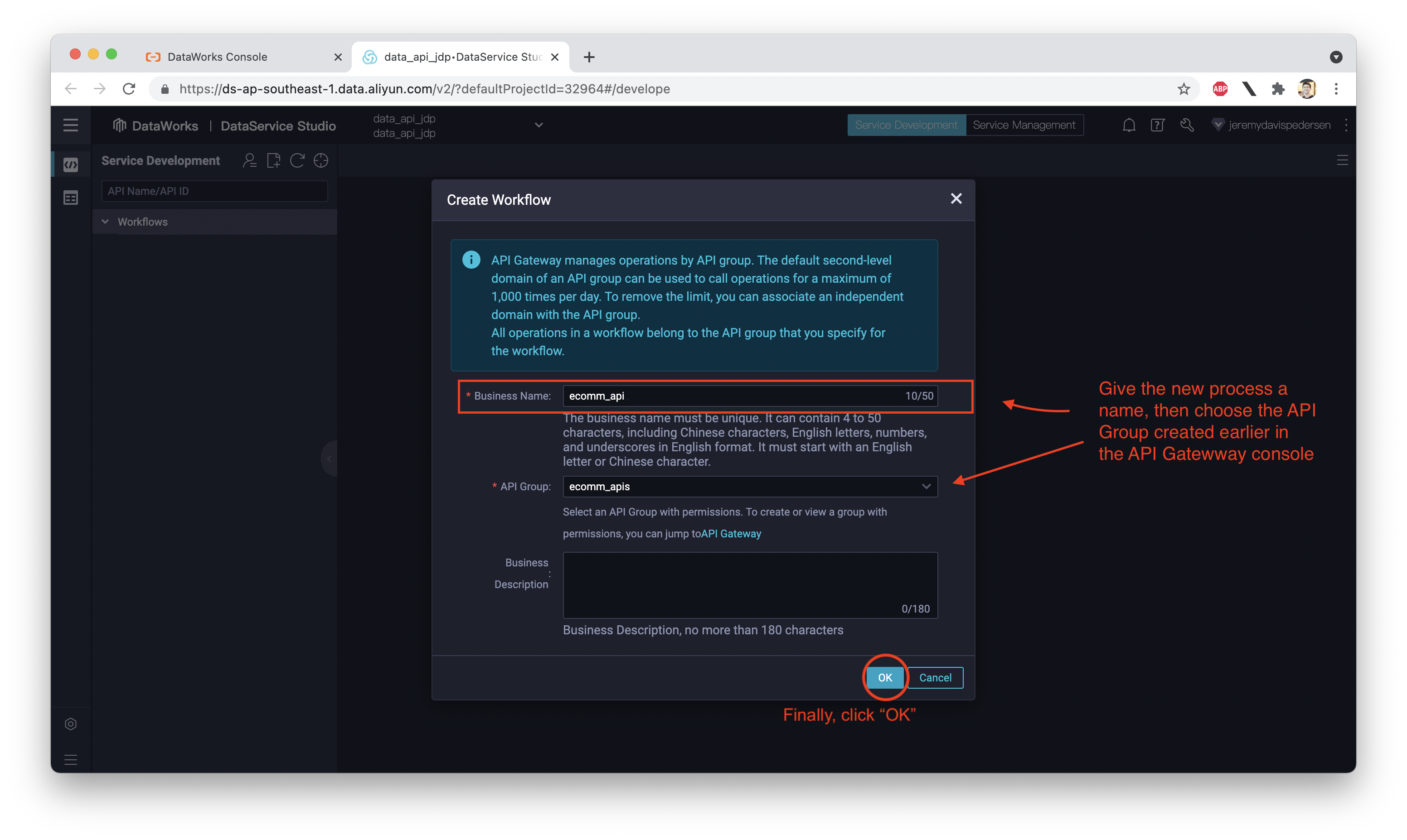

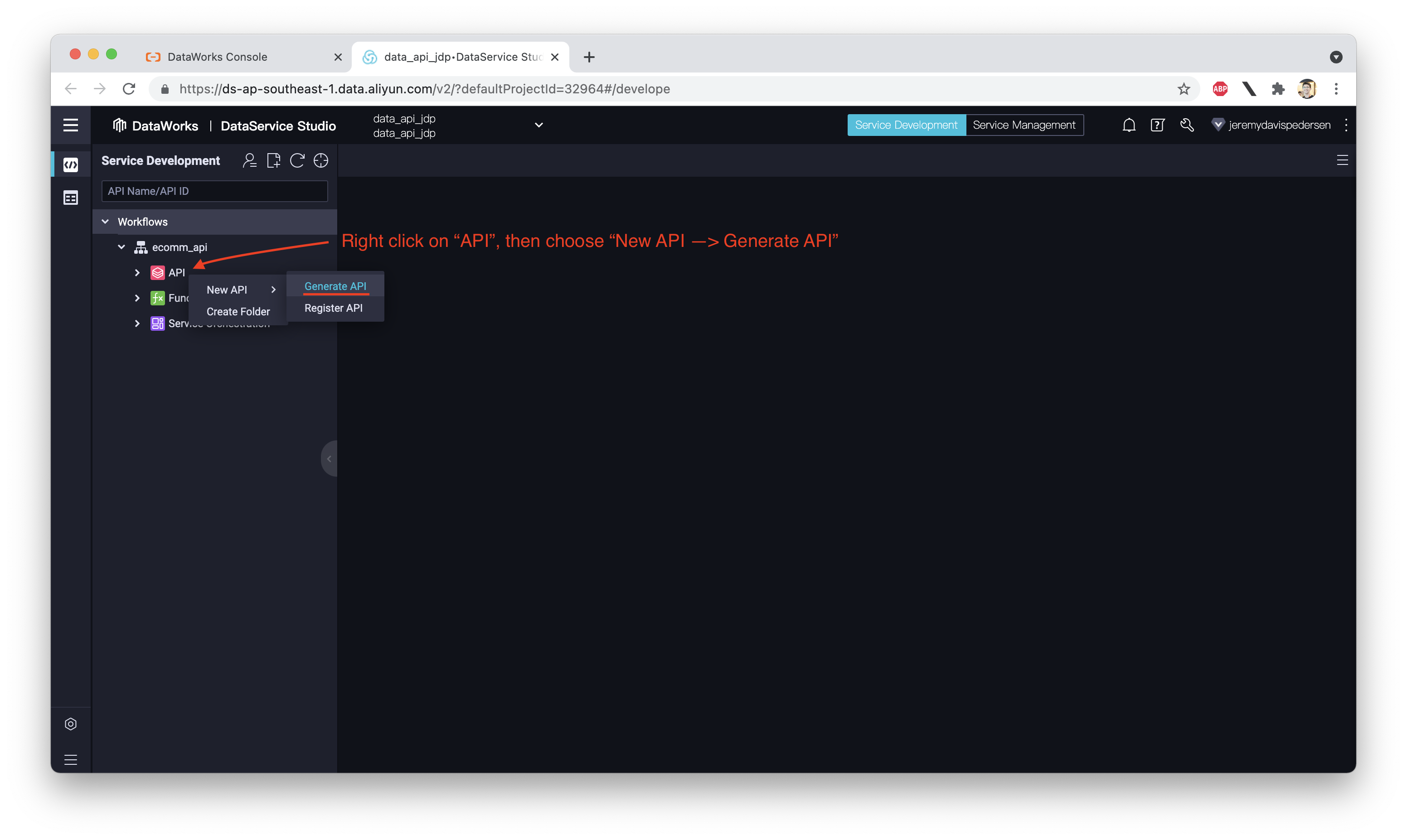

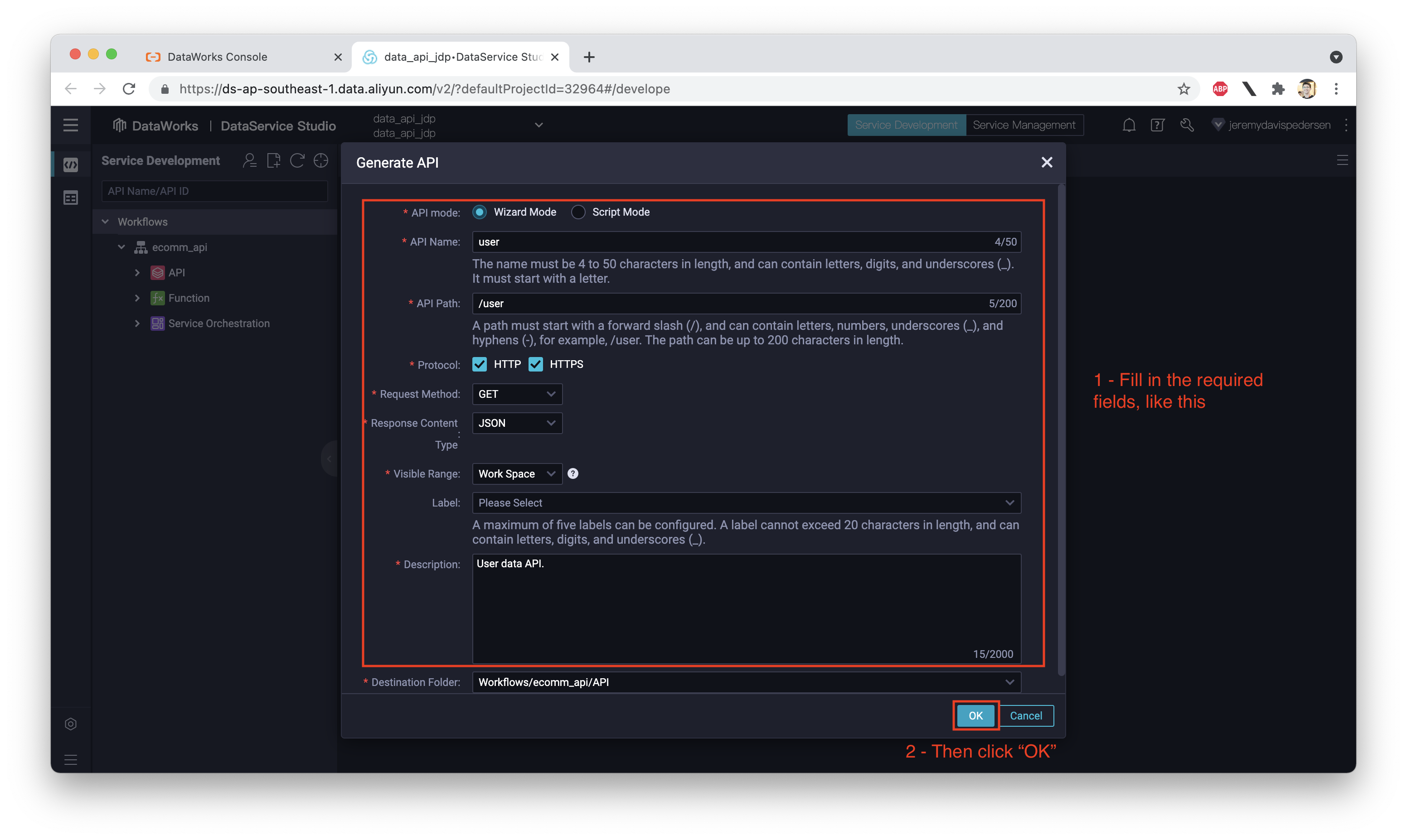

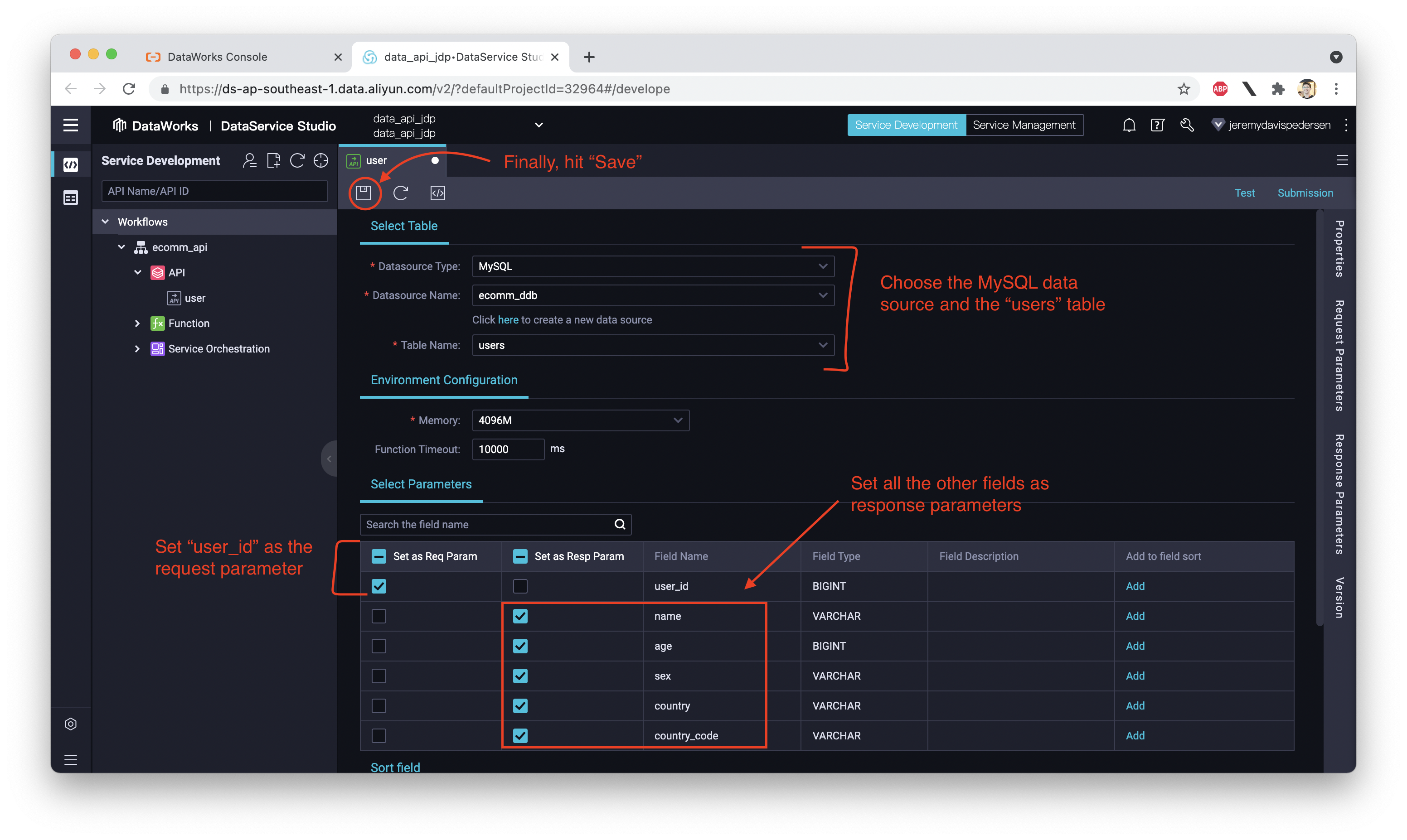

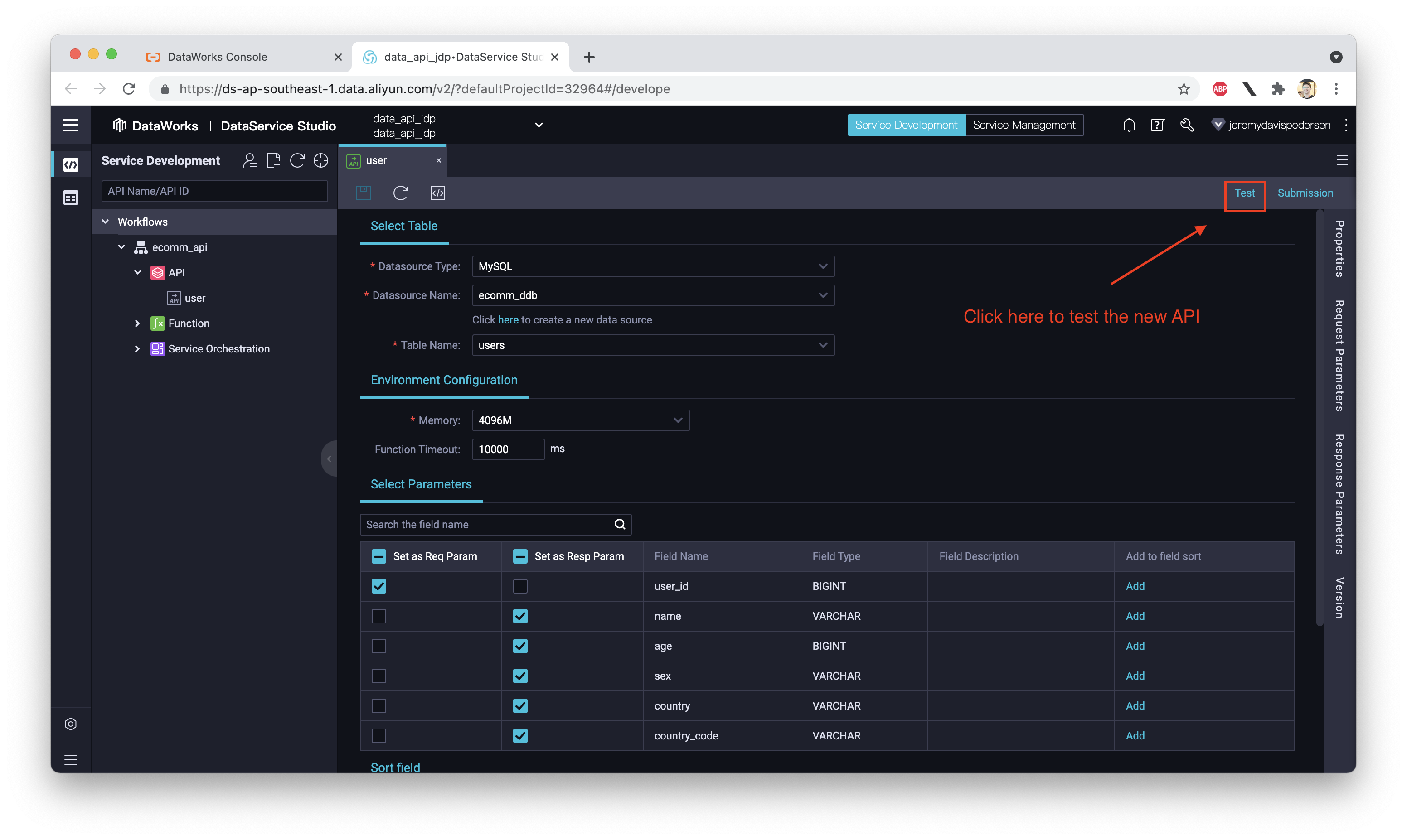

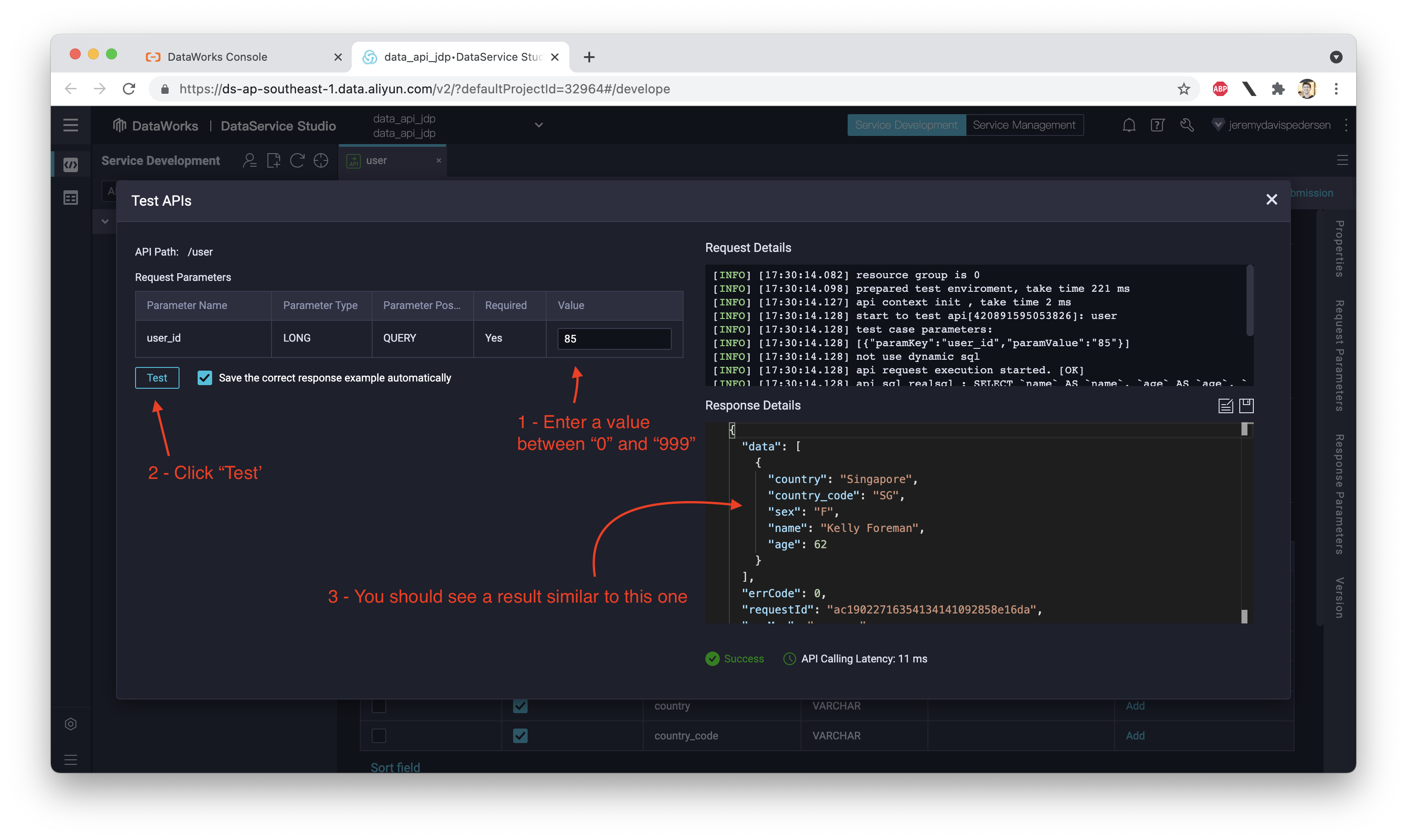

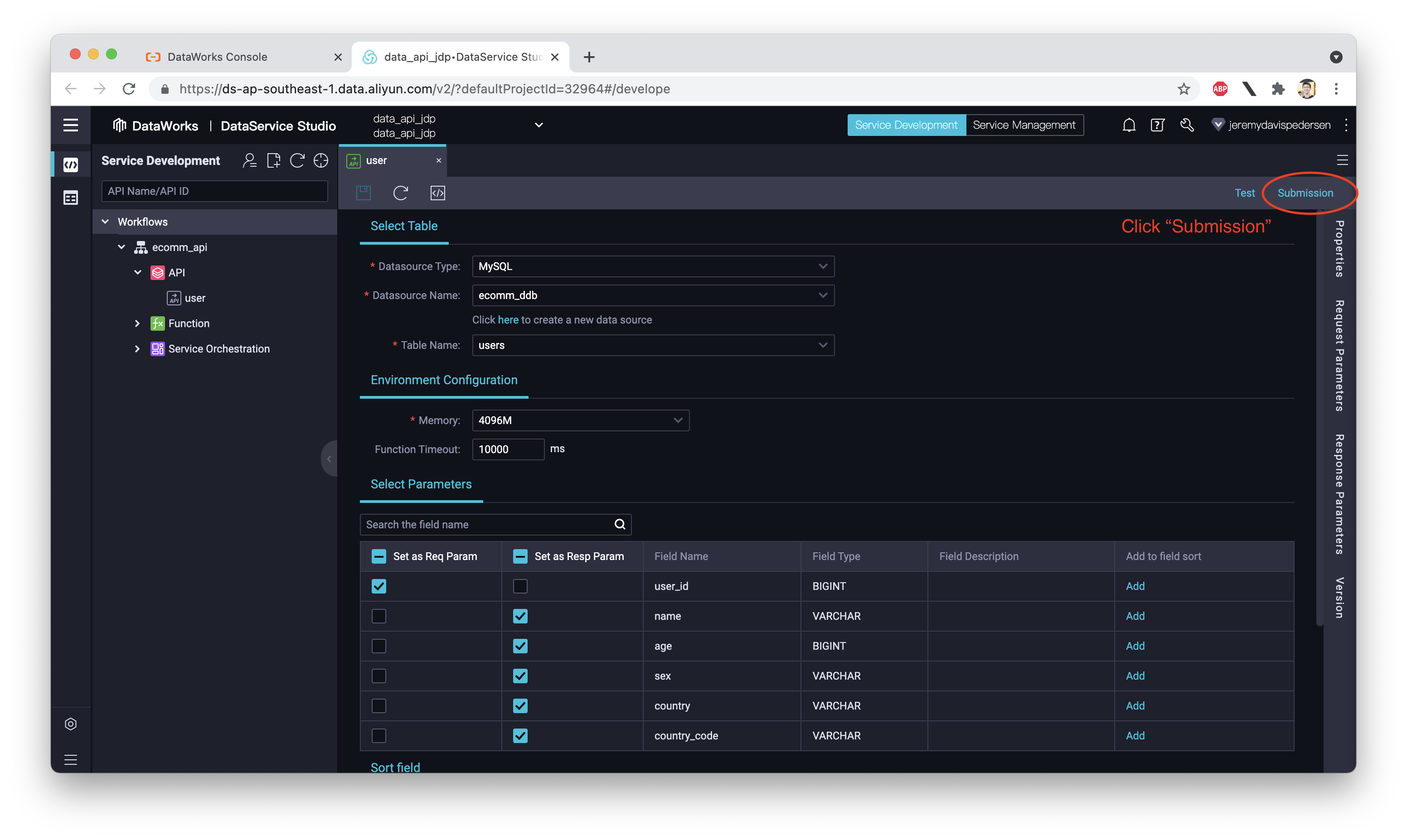

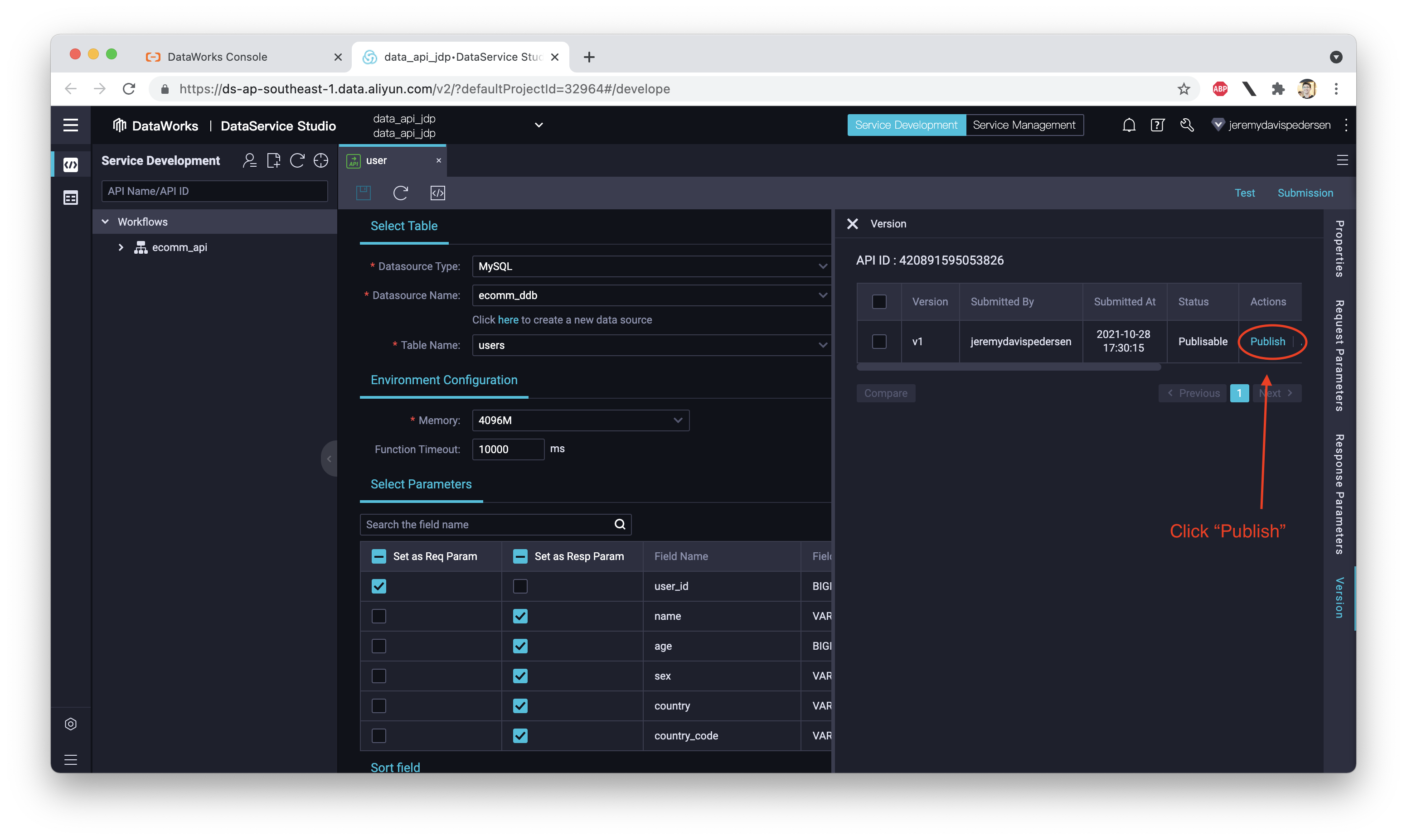

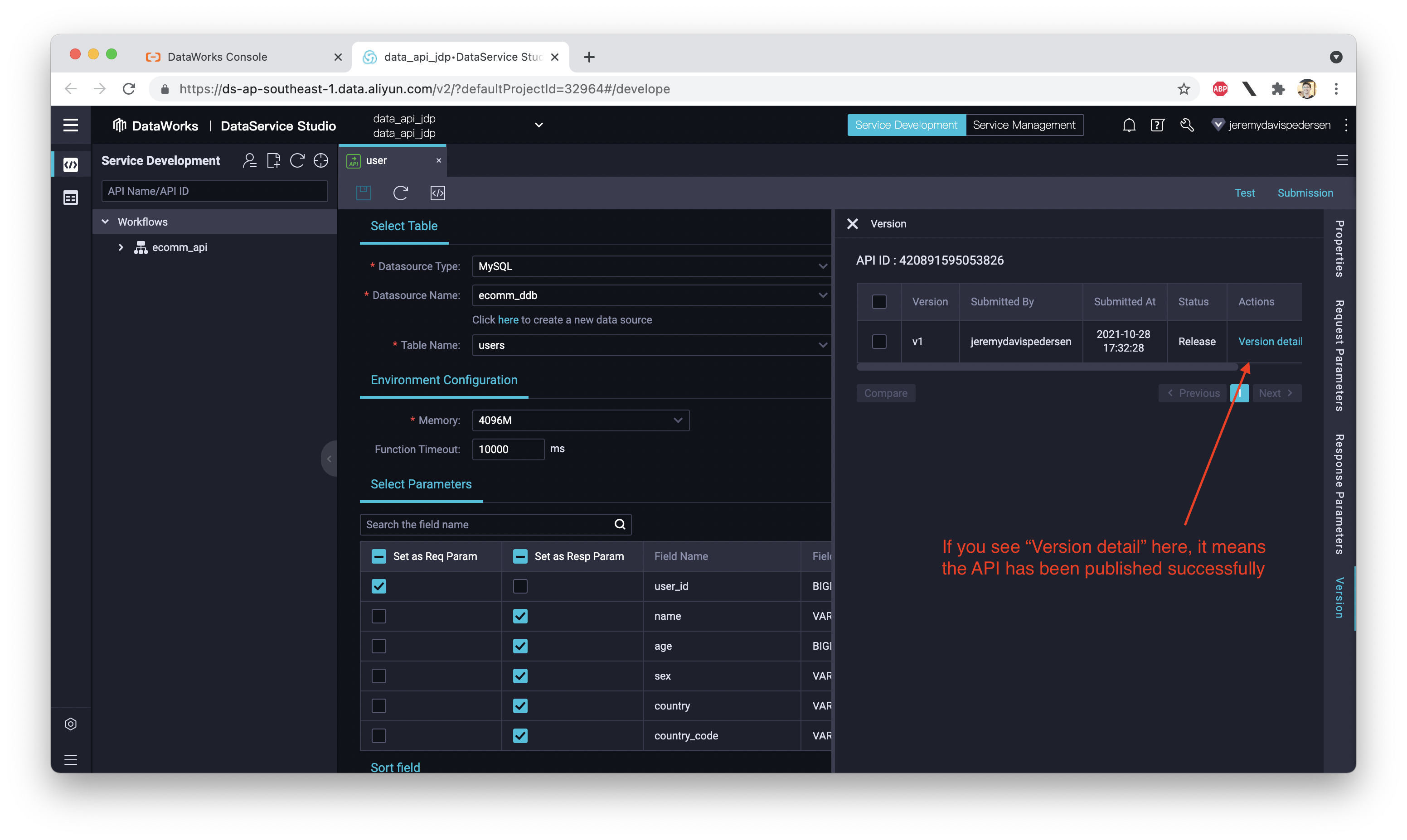

Finally, time for the good stuff: we can now use DataService Studio to publish some APIs! We'll deploy an API that reads from our MySQL database's users table. Whenever an HTTP GET request comes in with a valid user_id, we will return the name, age, sex, and location of the user, in JSON format. Follow the steps below to set up the API in DataService Studio:

Pretty easy, huh? We didn't even have to write any code!

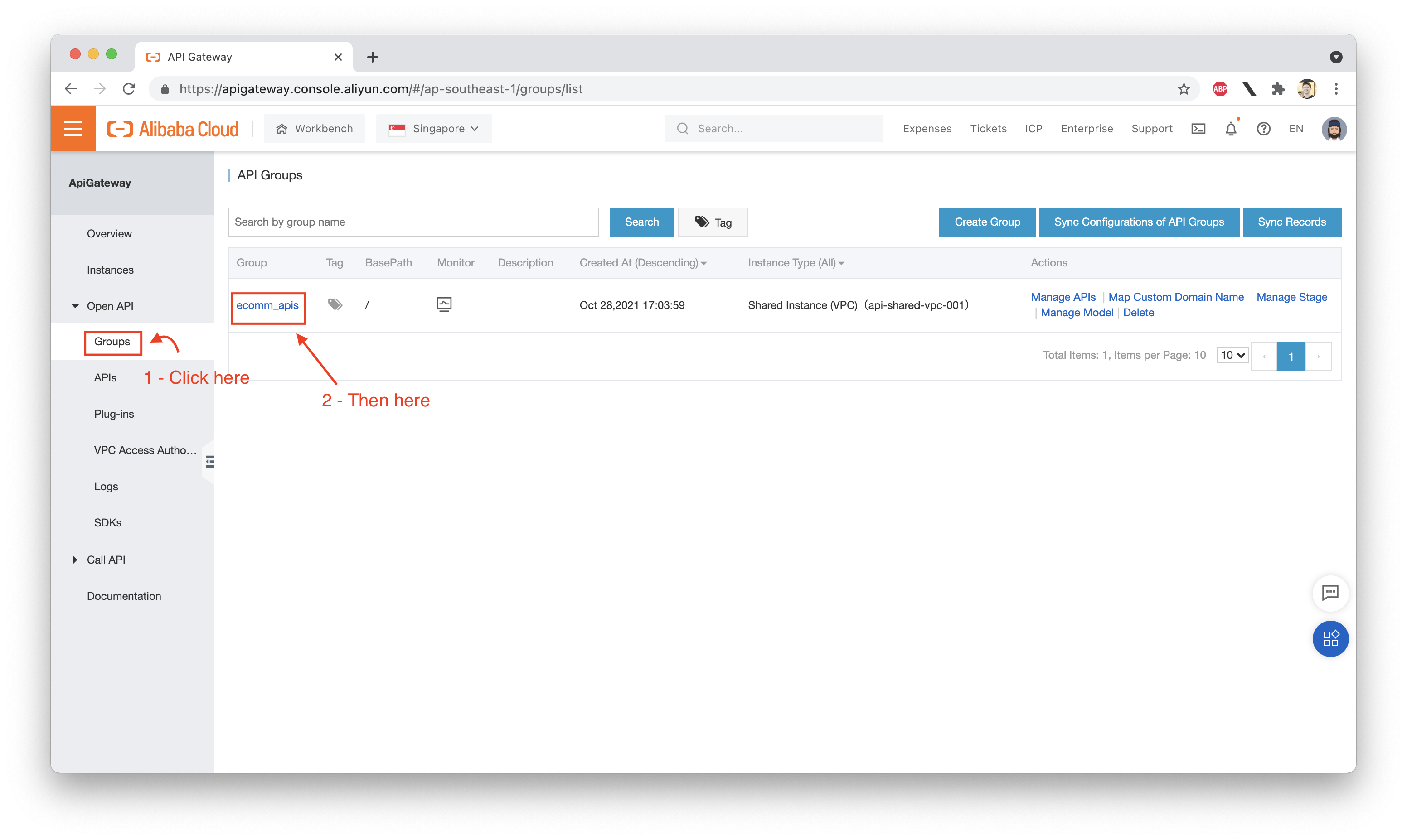

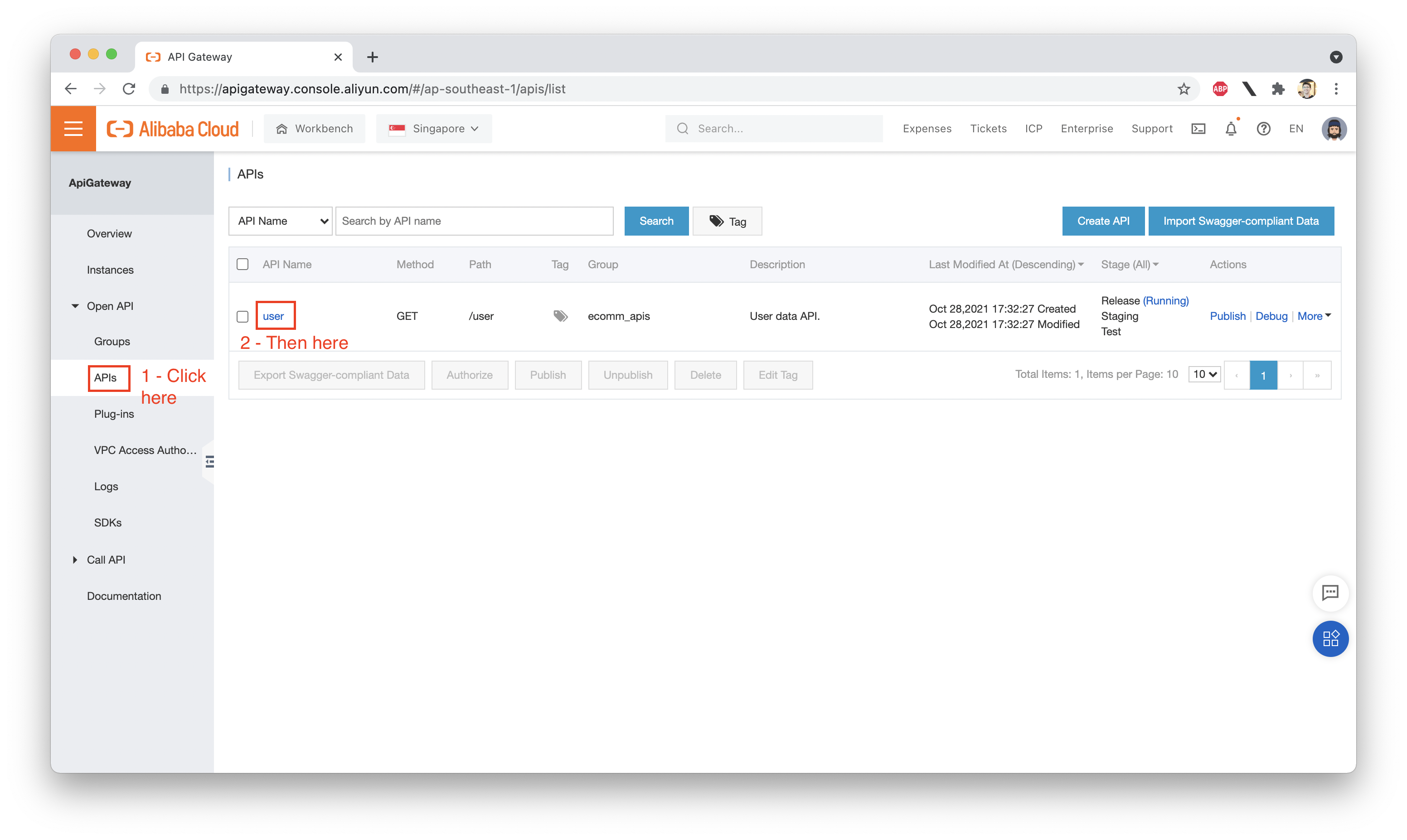
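If you're curious what the API is doing for you behind the scenes, here's a rough sketch of the same lookup written by hand. This is not how DataService Studio is implemented: it uses an in-memory SQLite database as a stand-in for our RDS MySQL instance, and the column names (user_id, name, age, sex, location) and the sample row are assumptions based on the fields described above.

```python
import json
import sqlite3


def lookup_user(conn, user_id):
    """Return one user's fields as a JSON string, or None if no such user."""
    row = conn.execute(
        # Parameterized query: user_id is never pasted into the SQL text.
        "SELECT name, age, sex, location FROM users WHERE user_id = ?",
        (user_id,),
    ).fetchone()
    if row is None:
        return None
    keys = ("name", "age", "sex", "location")
    return json.dumps(dict(zip(keys, row)))


# Stand-in data: an in-memory SQLite table instead of the real MySQL database.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE users ("
    "user_id INTEGER PRIMARY KEY, name TEXT, age INTEGER, sex TEXT, location TEXT)"
)
# Hypothetical row, made up for illustration only.
conn.execute("INSERT INTO users VALUES (99, 'Example User', 30, 'F', 'Singapore')")

print(lookup_user(conn, 99))
```

The point of DataService Studio is that you get this query-to-JSON plumbing (plus hosting and access control through API Gateway) without maintaining any of it yourself.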

Now that our API is published, how do we use it? If we go back to the API Gateway console, we'll be able to find all the information we need to call the API using curl, Python, or a variety of other languages. Let's start by using Python.

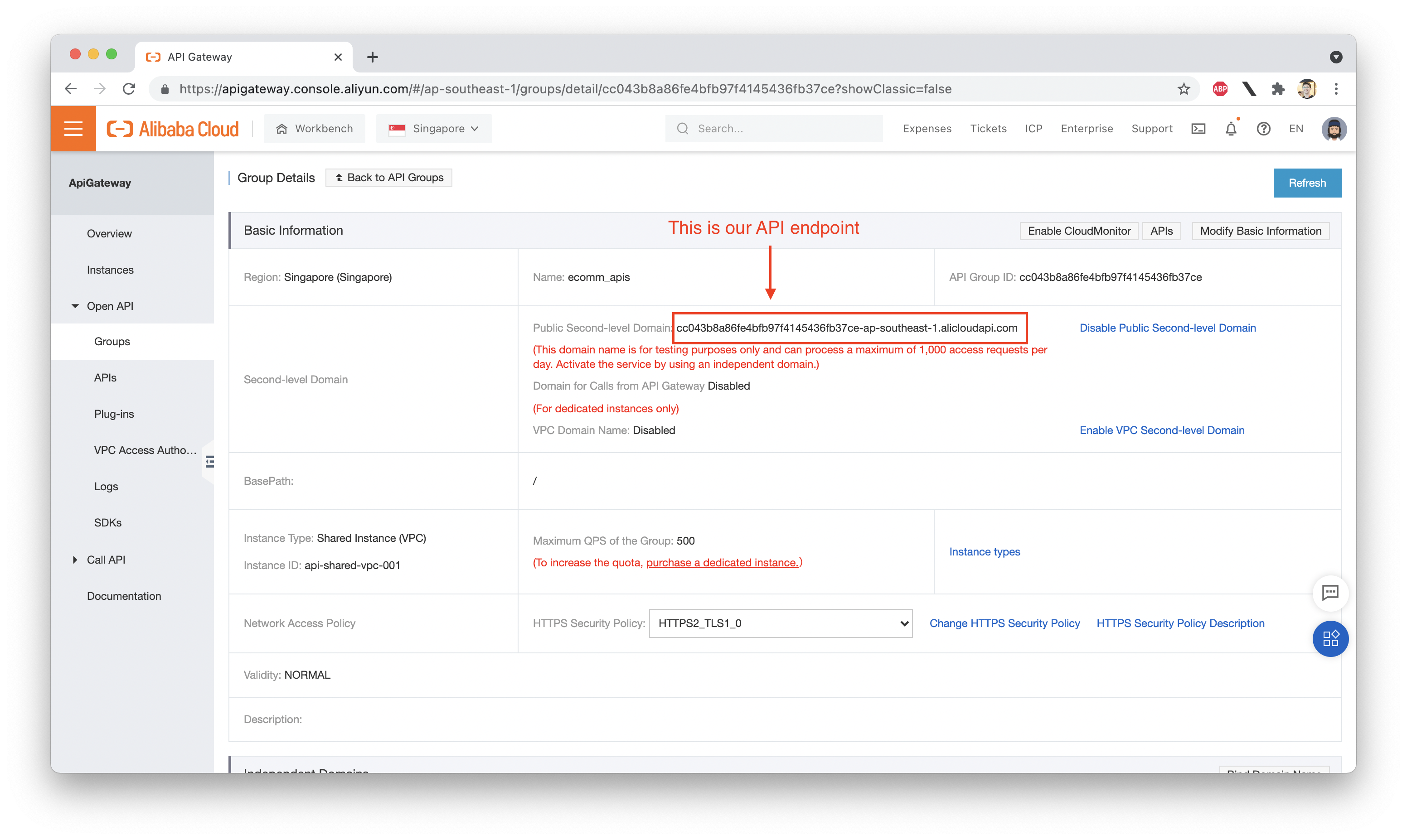

The first thing we need to do is make a note of the URL for our API Group:

In my case, the API endpoint is:

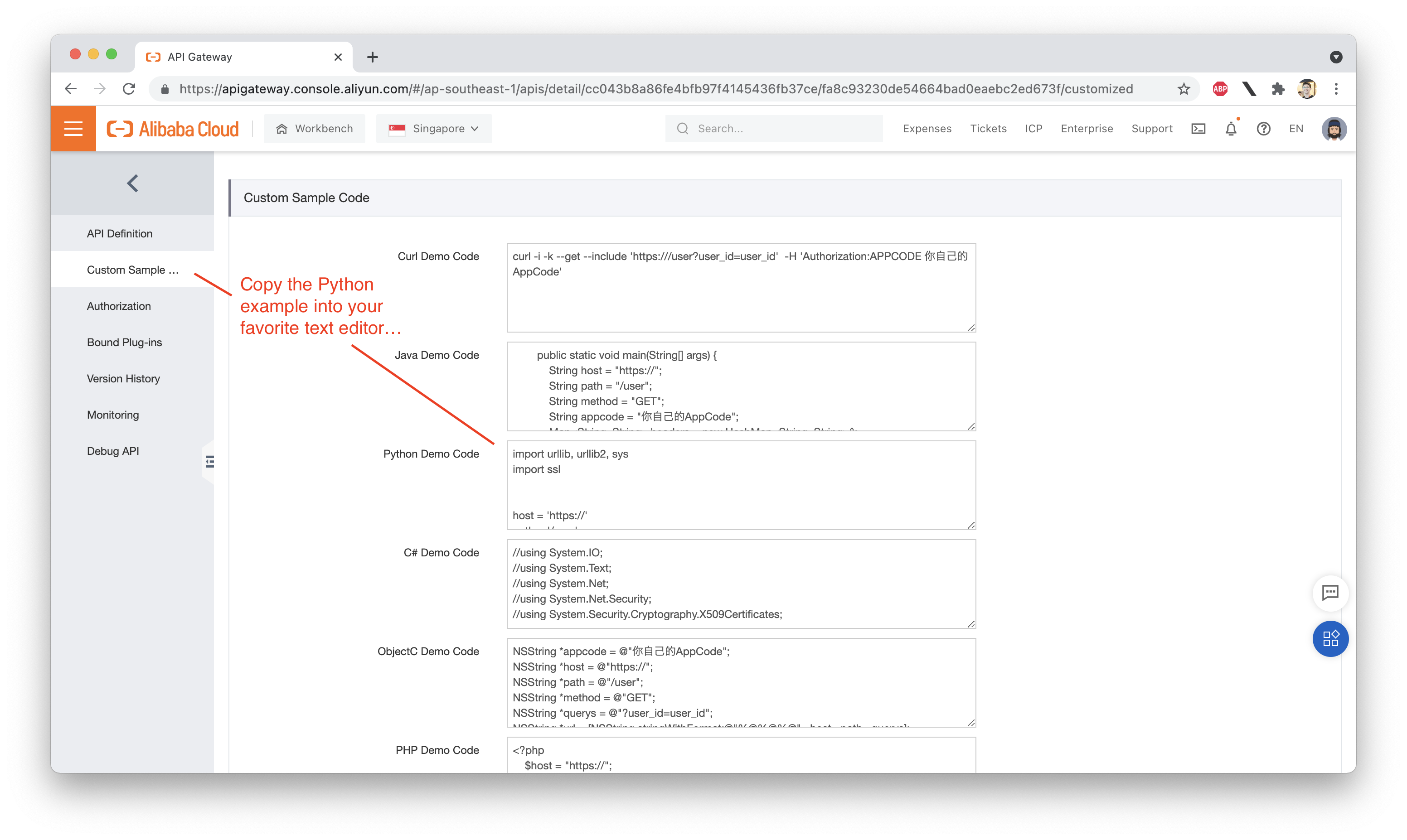

cc043b8a86fe4bfb97f4145436fb37ce-ap-southeast-1.alicloudapi.com

Yours will be different. Next, we need to get a copy of the Python example code for our users API:

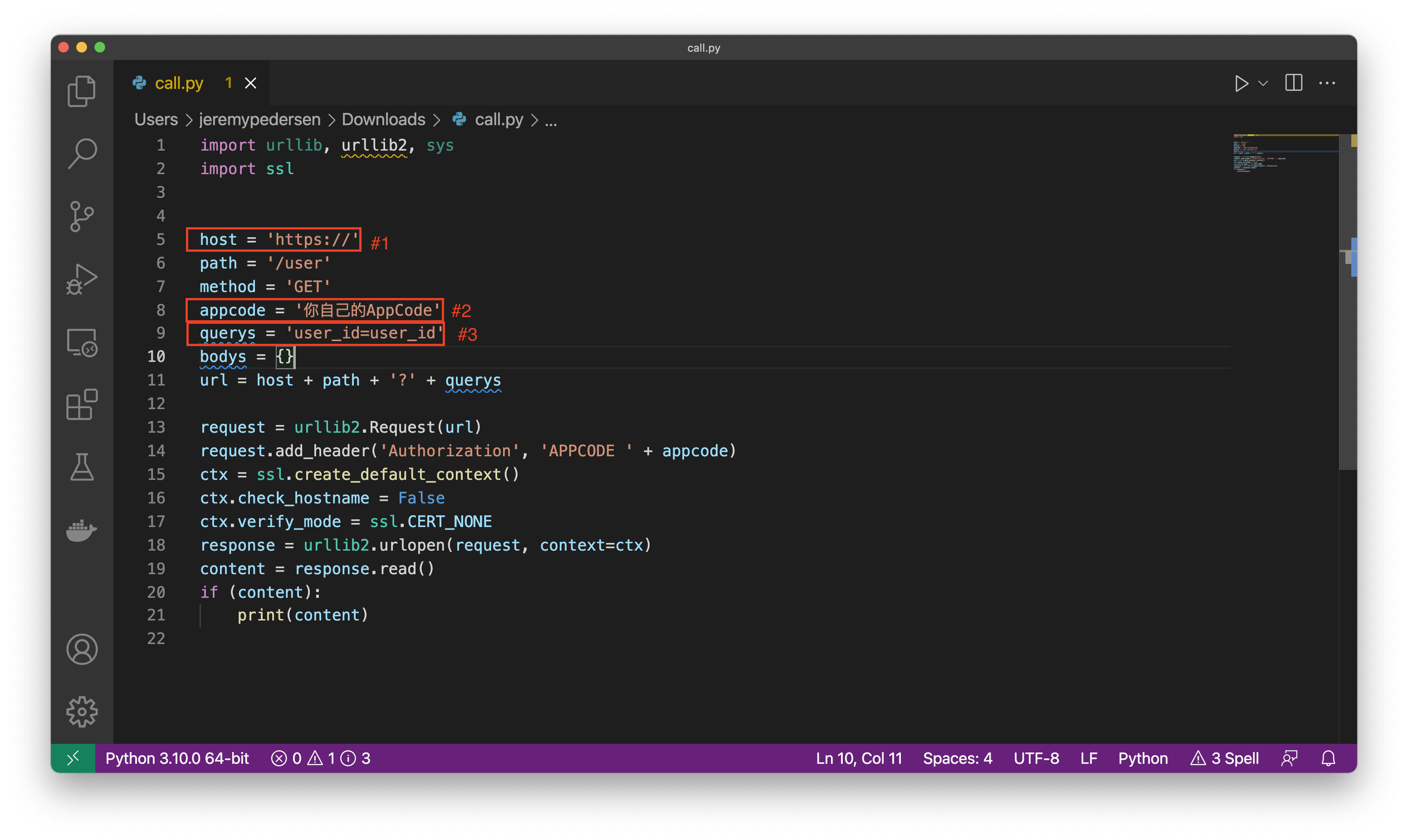

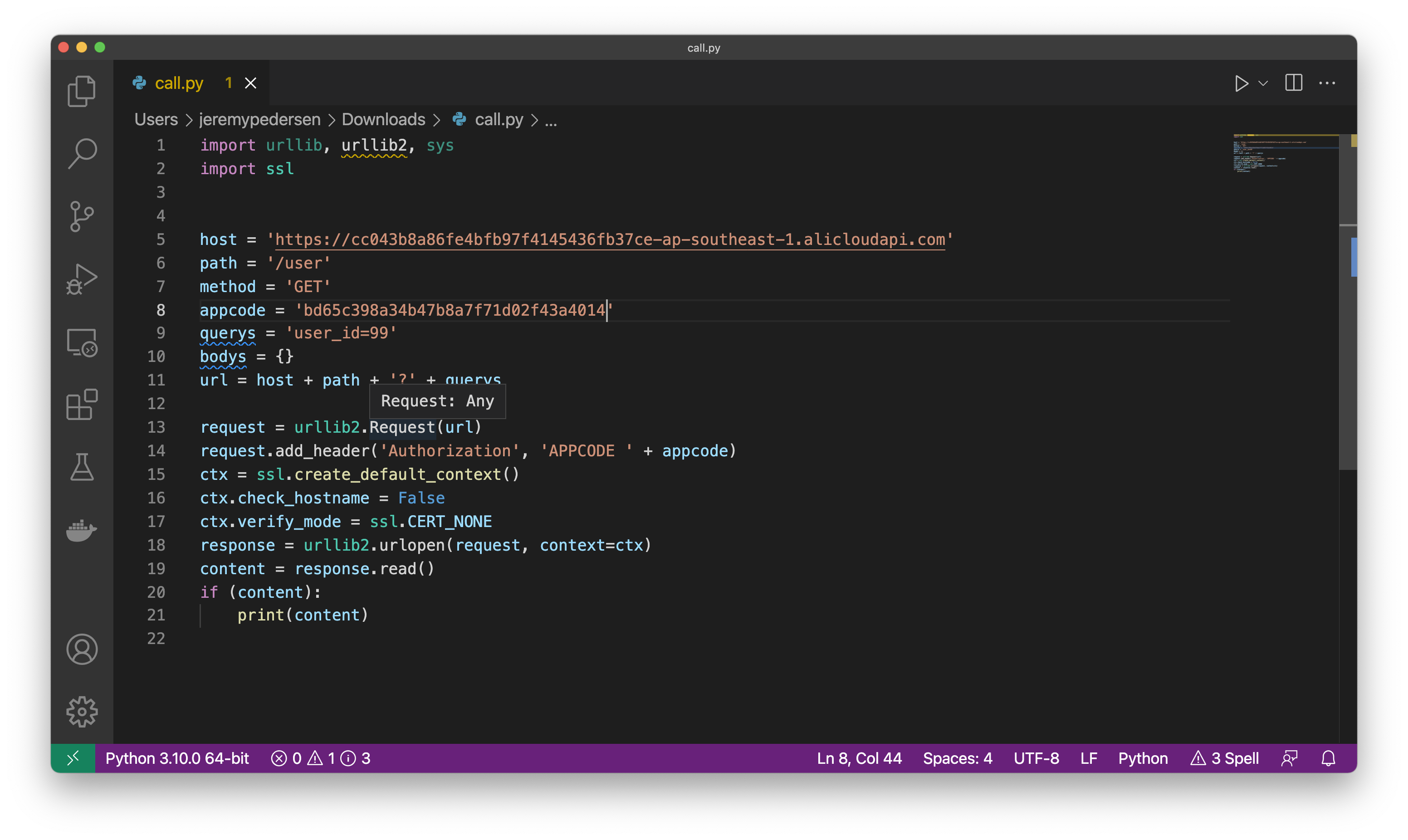

Before we can get this code working, we need to change the API endpoint (#1), the "AppCode" (#2), and the user_id (#3).

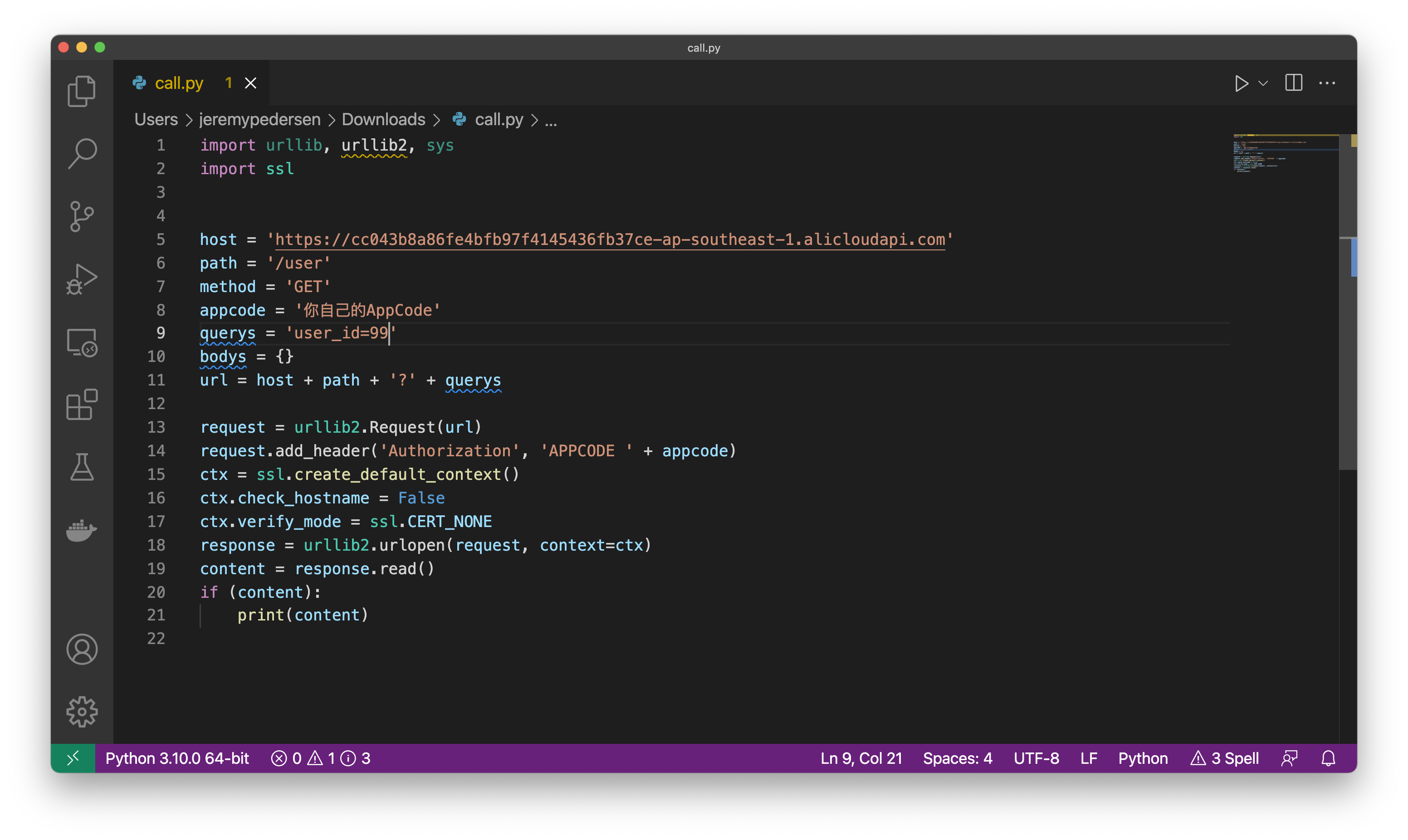

#1 and #3 are relatively easy. We already know our API endpoint address and we can choose any user ID between "0" and "999" for the test (let's go with "99"):

But what about the AppCode? The AppCode is a secret credential that must be sent along with each request in order to call the API successfully. This limits API access to the people we authorize. It's possible to turn this feature off and make the API entirely public, but I don't recommend it.

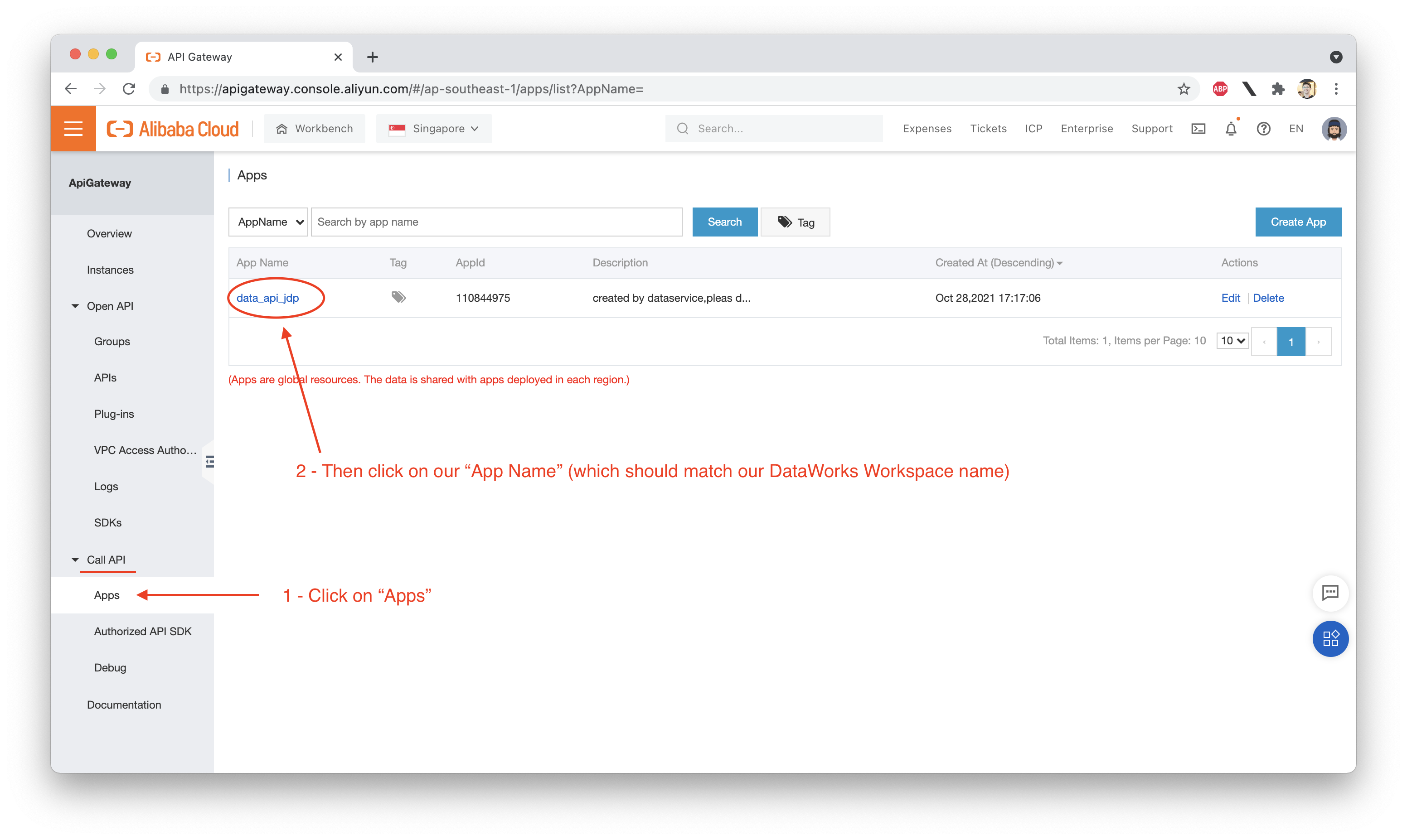

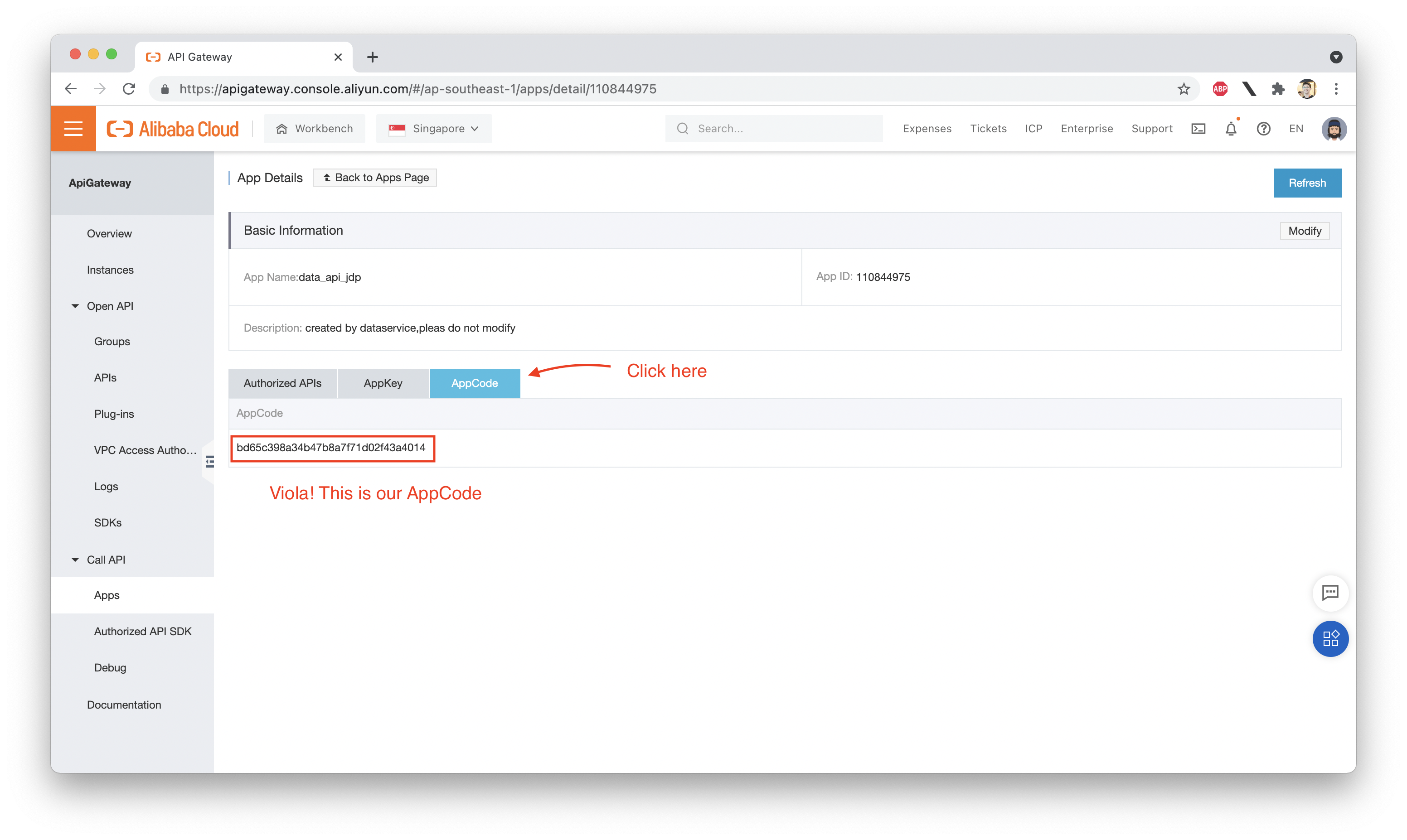

Let's go find our AppCode:

We can now plug this into our Python code:

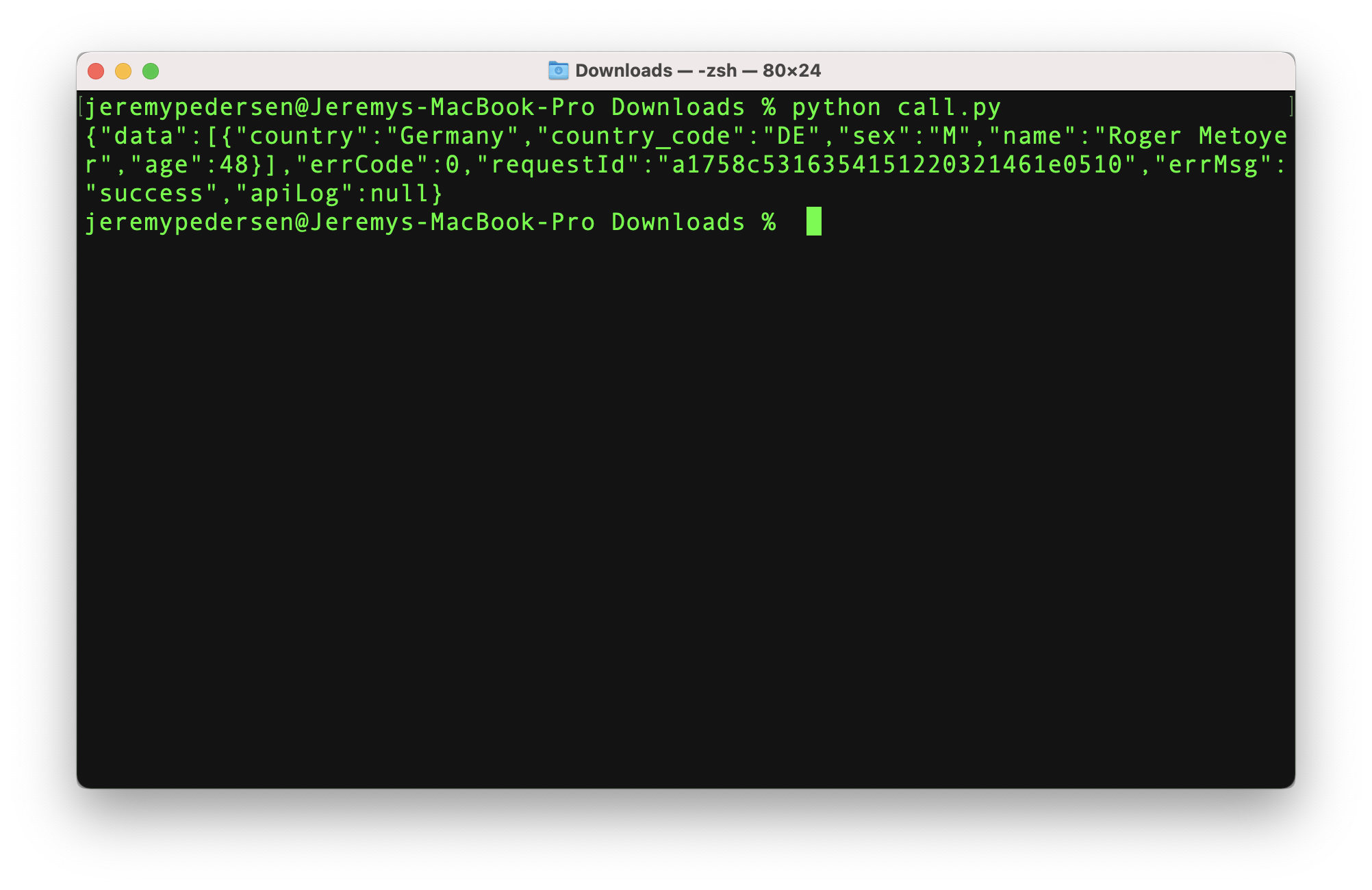
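In case the screenshot is hard to read, here is a minimal sketch of what such a call can look like using only the Python standard library. It is not the exact code API Gateway generates. It assumes the API uses simple AppCode authentication (an `Authorization: APPCODE <your code>` header), and the path `/users`, the query parameter name `user_id`, and the placeholder `YOUR_APPCODE` are assumptions you should replace with the values shown in your own console. The scheme is a parameter; use whichever protocol your API is configured for.

```python
import urllib.parse
import urllib.request


def build_request(host, path, app_code, user_id, scheme="http"):
    """Build (but do not send) a GET request carrying the AppCode header."""
    query = urllib.parse.urlencode({"user_id": user_id})
    url = f"{scheme}://{host}{path}?{query}"
    req = urllib.request.Request(url, method="GET")
    # Simple authentication: the AppCode travels in the Authorization header.
    req.add_header("Authorization", "APPCODE " + app_code)
    return req


def call_api(req, timeout=10):
    """Send the request and return the response body as text."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.read().decode("utf-8")


# The host is the API Group endpoint from this post; yours will differ.
# "/users" and "YOUR_APPCODE" are placeholders, not real values.
req = build_request(
    "cc043b8a86fe4bfb97f4145436fb37ce-ap-southeast-1.alicloudapi.com",
    "/users",
    "YOUR_APPCODE",
    "99",
)
print(req.full_url)
```

Once the placeholders are filled in, `print(call_api(req))` sends the request and prints the raw JSON response.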

Next, let's try running the code. We should get a result like this one:

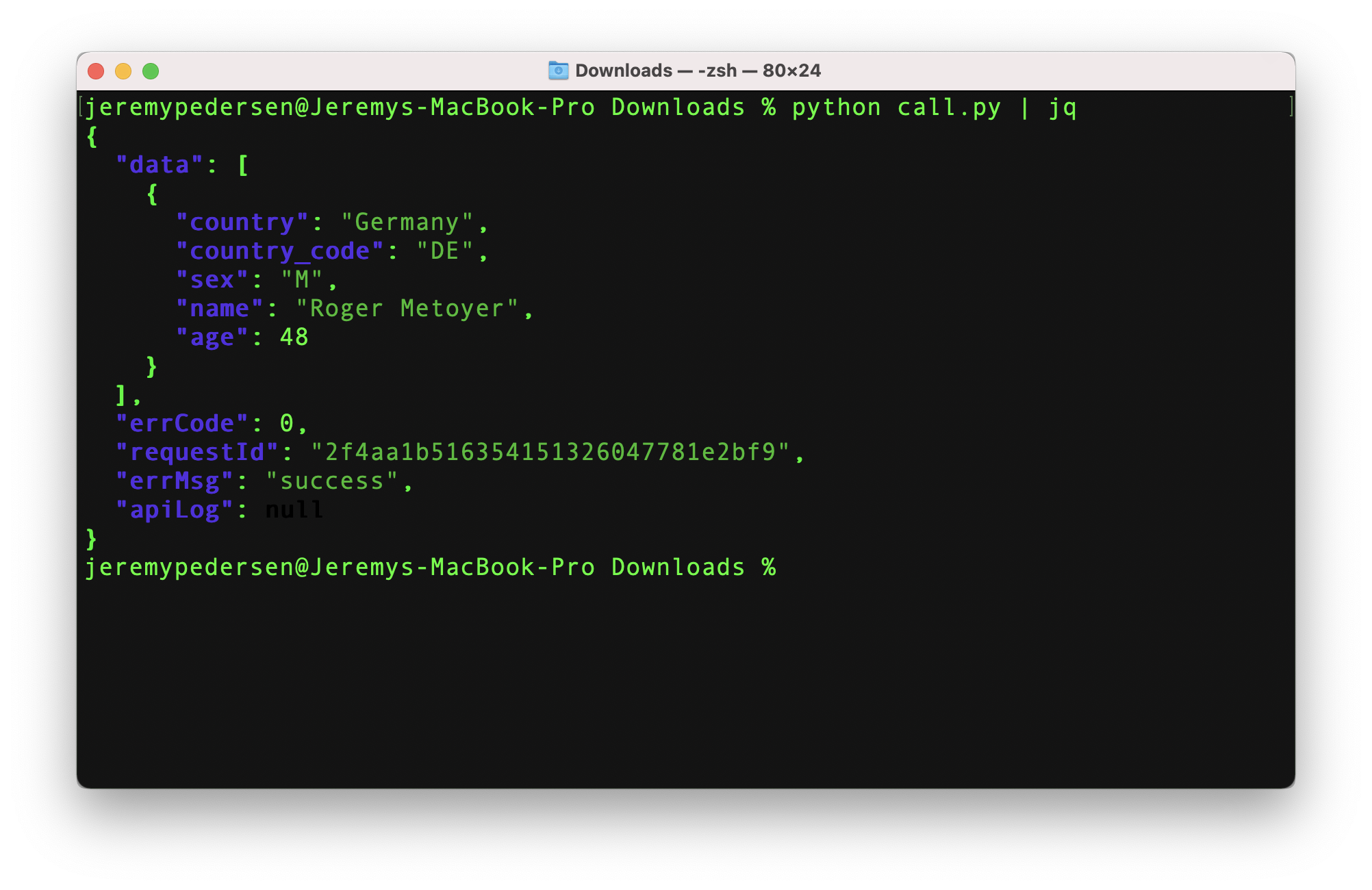

It works! Of course, it's a raw, unformatted JSON response. If we want better-looking results, we can try passing the JSON response to a parsing program like jq, using a command like this:

python call.py | jq

It looks a bit better, but the data returned is of course the same:
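If you don't have jq installed, you can get similar formatting from Python itself. Here's a small sketch using the standard json module; the sample payload is made up for illustration and is not the actual response from the API.

```python
import json


def pretty(raw):
    """Re-serialize a JSON string with indentation and sorted keys."""
    return json.dumps(json.loads(raw), indent=2, sort_keys=True)


# Hypothetical payload, standing in for the raw text returned by the API.
sample = '{"name": "Example User", "age": 30}'
print(pretty(sample))
```

In call.py, you would pass the response body to `pretty()` before printing it.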

Awesome! We managed to deploy our API without writing any code, and we were able to call the API using only a couple of lines of Python, which API Gateway handily provided to us.

Now, get out there and make some APIs!

Great! Reach out to me at jierui.pjr@alibabacloud.com and I'll do my best to answer in a future Friday Q&A blog.

You can also follow the Alibaba Cloud Academy LinkedIn Page. We'll re-post these blogs there each Friday.
