By Mo Yuan

In this article, let's discuss the most important topic in the big data field: storage. Big data increasingly affects our everyday lives. Data analysis, recommendations, and decisions based on big data can be found in almost all scenarios, from traveling and buying houses to ordering food delivery and calling rideshare services.

Making better and more accurate decisions based on big data places higher requirements on data multidimensionality and data integrity. In the near future, data volumes are expected to keep growing. Especially with 5G on the way, data throughput will increase exponentially, data dimensions and sources will multiply, and data types will become increasingly heterogeneous. All these trends bring new challenges to big data platforms. The industry wants low-cost, high-capacity storage options with fast read/write speeds. This article discusses these demands, their underlying challenges, and how Alibaba Cloud container services, including Spark on Kubernetes, can meet these challenges in different business scenarios.

The separation of computing and storage is an issue in the big data field that is frequently discussed from the following angles:

These three problems have become increasingly prominent as we move to the era of containers on the cloud. With Kubernetes, Pods run in the underlying resource pool, and the storage that Pods need is dynamically assigned or mounted by using PVs and PVCs. To some extent, the container architecture already separates computing from storage. A common question, then, is what changes and advantages big data container clusters gain by separating storage and computing.

Generally, D-series machines are used when we create a Spark big data platform on Alibaba Cloud. A series of basic components like HDFS and Hadoop are then built on them, and Spark tasks are scheduled through YARN and run on this cluster. A D-series machine has Intranet bandwidth ranging from 3 Gbit/s to 20 Gbit/s and can be bound to between four and twenty-eight 5.5 TB local disks by default. Because cloud disk I/O shares bandwidth with network I/O while local disk I/O remains independent, the combination of D-series machines and local disks shows better I/O performance than the combination of cloud disks and traditional machines with the same specifications.

However, in actual production, the amount of data stored increases over time. Because data is usually time-sensitive, the computing power needed per unit of time does not always grow in step with the amount of data stored, so scaling both together results in wasted costs. The question, then, is what changes if we follow the "separation of computing and storage" principle and use external storage products like OSS, NAS, or DFS (Alibaba Cloud HDFS).

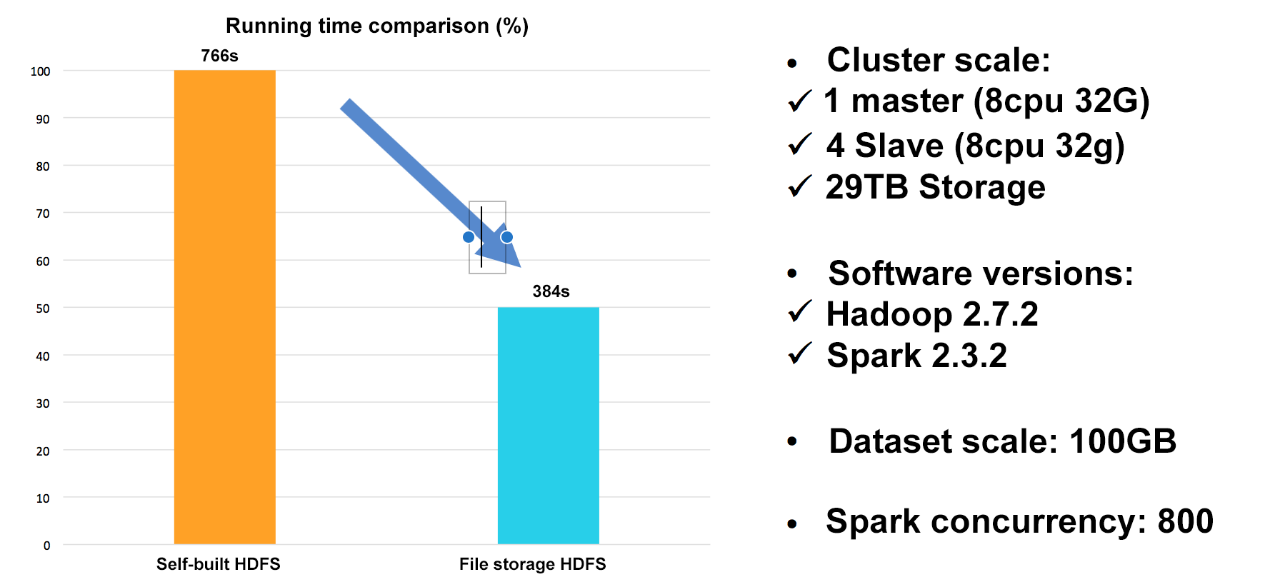

To avoid the impact of storage I/O differences, we use a remote DFS as the file storage system. We choose two popular machines and compare their performance: ecs.ebmhfg5.2xlarge (8-core, 32 GB, 6 Gbit/s) and ecs.d1ne.2xlarge (8-core, 32 GB, 6 Gbit/s), which are designed for computing scenarios and big data scenarios respectively and have the same specifications.

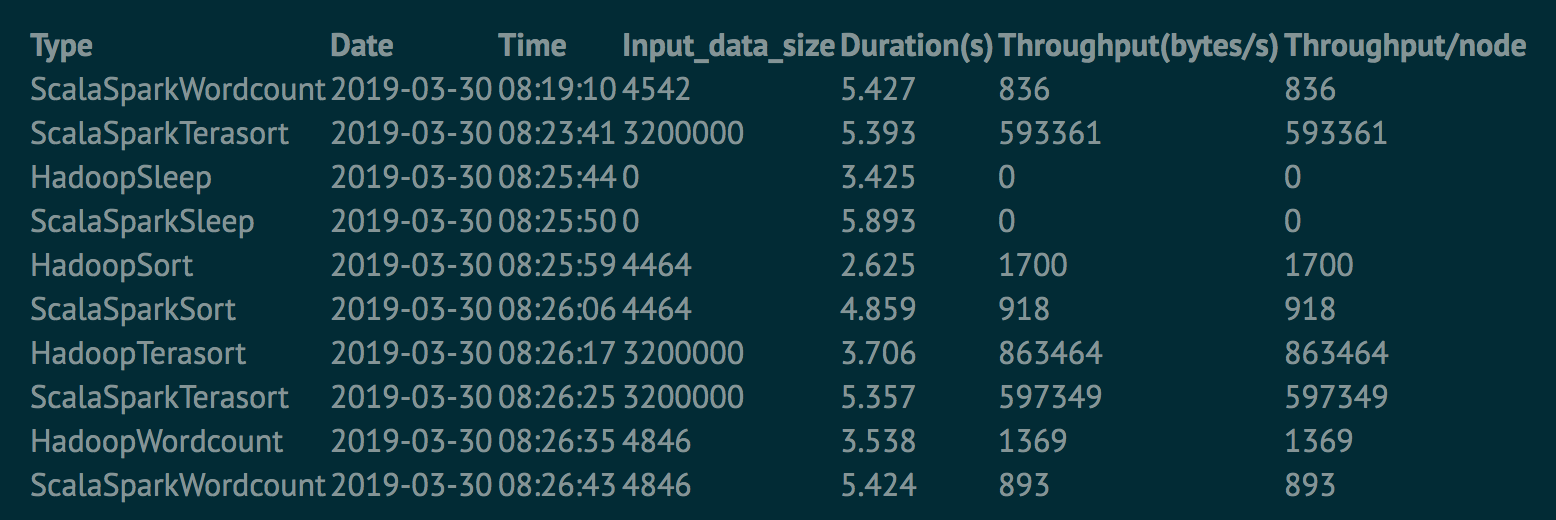

Test result of ecs.ebmhfg5.2xlarge (8-core, 32 GB)

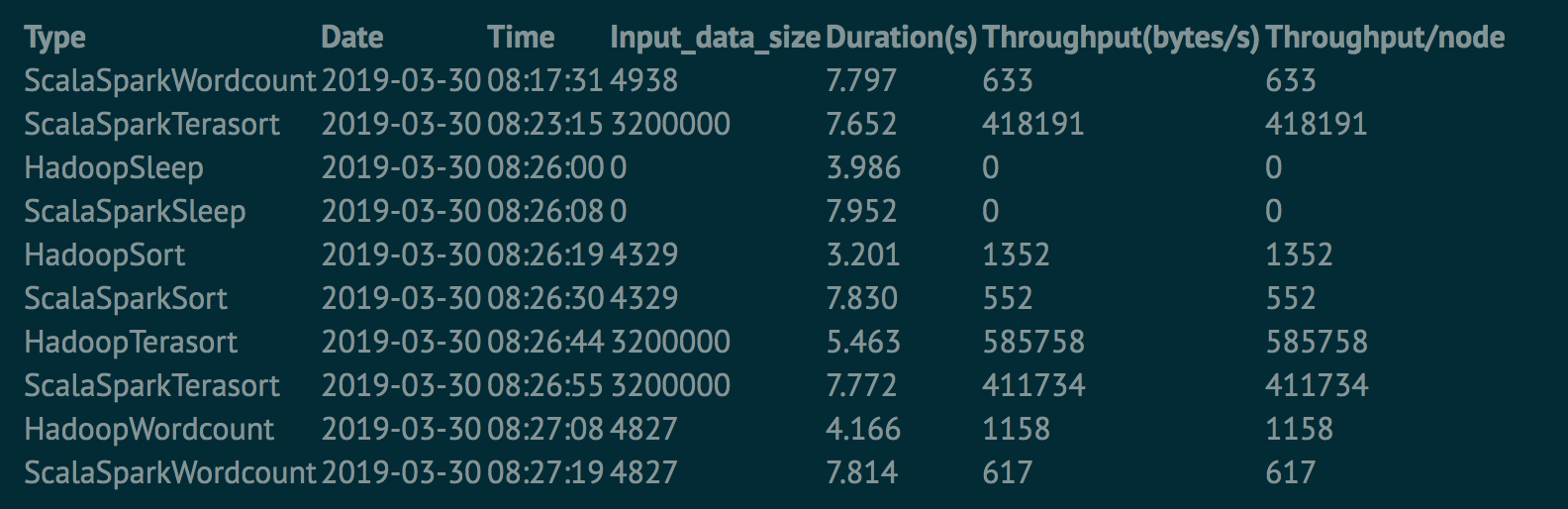

Test result of ecs.d1ne.2xlarge (8-core, 32 GB)

By using HiBench, we can roughly estimate that, if the I/O is basically the same, ecs.ebmhfg5.2xlarge shows about 30% higher computing performance than ecs.d1ne.2xlarge and about 25% lower cost than ecs.d1ne.2xlarge.

That is to say, we can choose a more efficient and cost-effective machine from the perspective of the computing power alone. When storage is separated from computing, we can find an optimal choice from both perspectives: computing and storage. For computing, we can use ECS, which has high clock speed and relatively strong computing power. For storage, we may use OSS or DFS, which is cheaper than local storage. In addition, the ratio of CPU to memory in D-series machines is generally 1:4. As big data task scenarios become more diversified, this CPU/memory ratio of 1:4 is not always the optimal ratio. The separation of storage and computing allows us to choose proper computing resources based on business types and even maintain multiple types of computing resources in one computing resource pool to improve resource usage.
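As a rough worked example, the two ratios from the HiBench estimate above can be combined into a cost per unit of computing work. The sketch below assumes that "about 30% higher computing performance" means a throughput ratio of 1.30 and that "about 25% lower cost" means a price ratio of 0.75; the object and method names are illustrative and do not come from any Alibaba Cloud tool.

```scala
// Combines a relative performance figure and a relative price figure
// into a relative cost per unit of work. All names are illustrative.
object CostPerWork {
  // perfRatio:  throughput of the candidate / throughput of the baseline
  // priceRatio: price of the candidate / price of the baseline
  def relativeCostPerWork(perfRatio: Double, priceRatio: Double): Double = {
    require(perfRatio > 0, "perfRatio must be positive")
    priceRatio / perfRatio
  }

  // Savings per unit of work, as a fraction of the baseline cost.
  def savings(perfRatio: Double, priceRatio: Double): Double =
    1.0 - relativeCostPerWork(perfRatio, priceRatio)
}
```

Under those assumptions, `CostPerWork.savings(1.30, 0.75)` is roughly 0.42, so the same amount of computation would cost a little over 40% less on the compute-oriented instance. This is only an estimate derived from the two rounded figures above.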

The SLAs for data storage are also completely different from those for computing tasks. For data storage, downtime or interruptions are not allowed. However, computing tasks themselves are already sub-tasks, and if a sub-task experiences some exceptions, we can simply retry that sub-task. Therefore, we can use resources with lower cost like spot instances as the runtime environment for computing tasks and further reduce the cost.
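The retry idea can be sketched in a few lines. Spark already retries failed tasks on its own (see the `spark.task.maxFailures` setting), so the helper below is not something you need to add to a Spark job; it is a minimal, hypothetical illustration of why a sub-task interrupted by a reclaimed spot instance can simply be run again. The name `RetrySketch` and its signature are assumptions made for this example.

```scala
import scala.util.{Failure, Success, Try}

object RetrySketch {
  // Runs `task` up to `maxAttempts` times and returns the first success,
  // or the last failure if every attempt fails.
  @annotation.tailrec
  def retry[A](maxAttempts: Int)(task: () => A): Try[A] = {
    require(maxAttempts >= 1, "maxAttempts must be at least 1")
    Try(task()) match {
      case s @ Success(_)                     => s
      case f @ Failure(_) if maxAttempts == 1 => f
      case Failure(_)                         => retry(maxAttempts - 1)(task)
    }
  }
}
```

Because each sub-task reads its input from external storage and keeps no local state, rerunning it on another node is safe, which is what makes cheaper, interruptible instances a reasonable runtime environment for computing tasks.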

Elasticity is a key characteristic of containers. Elasticity allows containers to obtain tens or even hundreds of times the amount of their original computing resources in a short period of time and automatically release these resources after computing tasks are completed. Currently, Alibaba Cloud Container Service provides autoscalers that support auto scaling at the node level and can scale up to 500 nodes in a minute and a half. Storage is a major hindrance to elasticity in traditional scenarios where storage and computing are coupled. However, after the separation of storage and computing, it is possible to achieve elasticity for nearly stateless computing and implement on-demand use and pay-as-you-go billing.

External storage offers virtually unlimited storage space and more options. In the age of big data, however, data has more dimensions and is more heterogeneous, which places new demands on storage methods and types. Storage systems and connectors like HDFS, HBase, and Kafka alone cannot meet all of these requirements. For example, time-series storage is the preferred option for offline storage of data collected from Internet of Things (IoT) devices, and structured databases are better for storing data generated by upstream and downstream applications. As data sources and connections multiply, the underlying infrastructure and dependencies of a big data platform grow as well. Alibaba Cloud provides a variety of storage services to meet data processing requirements in different big data scenarios. In addition to traditional storage services like HDFS, HBase, Kafka, OSS, NAS, and CPFS, Alibaba Cloud provides storage services including MNS, TSDB, and OAS (Open Archive Service). These storage services allow big data platforms to focus on business development instead of the underlying O&M of the architecture. Besides offering larger capacity, they make data storage more secure and cost-effective.

Generally speaking, significantly faster read/write speeds are nearly impossible to achieve at the disk level, because mounting more independent local disks in parallel does not substantially change read/write speeds. However, once tasks are split by using MapReduce (MR), the performance bottleneck for each sub-task is usually no longer disk I/O. In the preceding test, the Intranet bandwidth of the ECS instance is up to 6 Gbit/s. If this bandwidth is converted into disk I/O, the resulting data throughput would be redundant compared with the computing power of the 8-core, 32 GB machines. Therefore, the "faster read/write speeds" described in this article refers to improving read/write speed primarily through I/O redundancy. OSS is an object storage service provided by Alibaba Cloud that features parallel I/O. That is to say, if your business scenarios involve reading a large number of small or medium-sized files in parallel (for example, reading and writing directories in Spark), I/O throughput scales approximately linearly. Developers who prefer HDFS can choose the HDFS service from Alibaba Cloud. Switching to HDFS provides many storage and query optimizations and can deliver about a 50% performance improvement.
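To make the bandwidth conversion concrete, the following sketch turns the 6 Gbit/s Intranet bandwidth mentioned above into an equivalent data throughput. It assumes decimal units (1 Gbit = 1000 Mbit, 8 bits per byte), ignores protocol overhead, and divides the bandwidth evenly across cores; the object and method names are illustrative.

```scala
object BandwidthSketch {
  // Converts network bandwidth in Gbit/s to MB/s (decimal units, 8 bits per byte).
  def gbitPerSecToMBPerSec(gbit: Double): Double = gbit * 1000.0 / 8.0

  // Even share of that throughput per core, if all cores read concurrently.
  def perCoreMBPerSec(gbit: Double, cores: Int): Double = {
    require(cores > 0, "cores must be positive")
    gbitPerSecToMBPerSec(gbit) / cores
  }
}
```

For the 8-core test machines, `BandwidthSketch.gbitPerSecToMBPerSec(6.0)` gives 750 MB/s in total and `BandwidthSketch.perCoreMBPerSec(6.0, 8)` gives 93.75 MB/s per core, which illustrates the scale of I/O headroom that the network provides relative to the computing power of the instance.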

Alibaba Cloud Container Service for Kubernetes and Spark on Kubernetes can meet the different dimensions and layers of big data processing requirements. Developers can choose proper storage methods based on different business scenarios and I/O requirements. Consider the following three scenarios and how Alibaba Cloud can be used to meet the requirements of these scenarios.

OSS is the most suitable storage option for this scenario. In containers, OSS can be used in two ways. The first is to mount OSS as a file system. The other is to directly use SDKs in Spark. The first method is not suitable for big data scenarios, especially in scenarios that involve a large number of files. If optimization methods like SmartFS are not available, this method may cause long latency and high inconsistency. Using SDKs is a very simple method. Developers can simply put a Jar under the corresponding CLASSPATH. For more information about how to directly process file content in OSS, see the following code:

```scala
package com.aliyun.emr.example

// RunLocally is a helper trait from the same example package; it is assumed
// to provide the SparkContext `sc` used below.
object OSSSample extends RunLocally {
  def main(args: Array[String]): Unit = {
    if (args.length < 2) {
      System.err.println(
        """Usage: bin/spark-submit --class OSSSample examples-1.0-SNAPSHOT-shaded.jar <inputPath> <numPartitions>
          |
          |Arguments:
          |
          |    inputPath        Input OSS object path, like oss://accessKeyId:accessKeySecret@bucket.endpoint/a/b.txt
          |    numPartitions    the number of RDD partitions.
          |
        """.stripMargin)
      System.exit(1)
    }

    val inputPath = args(0)
    val numPartitions = args(1).toInt

    // Read the OSS object as an RDD of lines.
    val ossData = sc.textFile(inputPath, numPartitions)
    println("The top 10 lines are:")
    // top(10) returns the 10 largest lines in descending string order.
    ossData.top(10).foreach(println)
  }

  override def getAppName: String = "OSS Sample"
}
```

Alibaba Cloud also provides a solution that supports Spark SQL scenarios on OSS. The solution allows retrieving and querying a single file by using Spark SQL. See the code repository (page in Chinese) for details. Before you use Spark Operator to run tasks, prepare the corresponding Jar packages under the CLASSPATH of the Driver Pod and Executor Pods.

OSS performs very well in scenarios that involve a large number of files, each smaller than 100 MB. OSS has the lowest data storage cost among several popular storage products and supports the separation of cold data and hot data. OSS mainly targets read-heavy scenarios, where reads far outnumber writes or there are no writes at all.

Alibaba Cloud has released a new distributed file system (DFS) service that provides data management and access solutions similar to the Hadoop Distributed File System (HDFS). You can use DFS without the need to modify the existing applications of big data analytics. DFS features unlimited capacity, performance scaling, single namespace, shared storage, high reliability, and high availability.

DFS is compatible with the HDFS protocol. Developers only need to put corresponding Jar packages under the CLASSPATH of the Driver Pod and Executor Pods. The invocation can be performed in the following way:

```scala
/* SimpleApp.scala */
import org.apache.spark.sql.SparkSession

object SimpleApp {
  def main(args: Array[String]): Unit = {
    // DFS file address, accessed through the HDFS-compatible protocol.
    val logFile = "dfs://f-5d68cc61ya36.cn-beijing.dfs.aliyuncs.com:10290/logdata/ab.log"
    val spark = SparkSession.builder.appName("Simple Application").getOrCreate()

    // Cache the file because it is scanned twice below.
    val logData = spark.read.textFile(logFile).cache()
    val numAs = logData.filter(line => line.contains("a")).count()
    val numBs = logData.filter(line => line.contains("b")).count()
    println(s"Lines with a: $numAs, Lines with b: $numBs")

    spark.stop()
  }
}
```

DFS is mainly designed for hot data scenarios with high I/O read/write requirements. The cost is higher than that of using OSS, but lower than that of using NAS or other structured storage services. DFS is the best option for developers who are used to HDFS. Among all storage solutions, DFS shows the best I/O performance. When the storage capacity is the same, DFS delivers better I/O performance than local disks.

OSS is not suitable in some scenarios and can be inconvenient, because uploading and transferring data in OSS depends on SDKs. In these scenarios, NAS is an alternative. The NAS protocol provides strong consistency. Follow these steps to use this storage service:

1. Create NAS-related storage PVs and PVCs in the Container Service console.

2. Declare the PVC as a volume in the SparkApplication definition used by Spark Operator.

```yaml
apiVersion: "sparkoperator.k8s.io/v1alpha1"
kind: SparkApplication
metadata:
  name: spark-pi
  namespace: default
spec:
  type: Scala
  mode: cluster
  image: "gcr.io/spark-operator/spark:v2.4.0"
  imagePullPolicy: Always
  mainClass: org.apache.spark.examples.SparkPi
  mainApplicationFile: "local:///opt/spark/examples/jars/spark-examples_2.11-2.4.0.jar"
  restartPolicy:
    type: Never
  volumes:
    - name: pvc-nas
      persistentVolumeClaim:
        claimName: pvc-nas
  driver:
    cores: 0.1
    coreLimit: "200m"
    memory: "512m"
    labels:
      version: 2.4.0
    serviceAccount: spark
    volumeMounts:
      - name: "pvc-nas"
        mountPath: "/tmp"
  executor:
    cores: 1
    instances: 1
    memory: "512m"
    labels:
      version: 2.4.0
    volumeMounts:
      - name: "pvc-nas"
        mountPath: "/tmp"
```

Developers who are familiar with Kubernetes can also use dynamically provisioned storage and mount it directly. For details, see the Alibaba Cloud Container Service documentation.

NAS is used less often in Spark scenarios, mainly because its I/O performance lags behind that of HDFS and its storage price is much higher than that of OSS. However, for scenarios where workflow results need to be reused and I/O requirements are modest, NAS is very simple to use.
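The guidance in the three scenarios above can be condensed into a small decision function. This is a simplified sketch of the article's recommendations, not an official selection rule: the `Workload` fields are hypothetical, and a real decision would also weigh price, file sizes, and the read/write ratio.

```scala
object StorageChooser {
  sealed trait Storage
  case object OSS extends Storage
  case object DFS extends Storage
  case object NAS extends Storage

  // Hypothetical workload description; the fields are illustrative only.
  final case class Workload(
      hotData: Boolean,              // frequent reads and writes with high I/O demands
      needsHdfsApi: Boolean,         // existing jobs rely on HDFS-compatible access
      needsSharedFileSystem: Boolean // results are reused through a mounted, strongly consistent file system
  )

  // DFS for hot data or HDFS-dependent jobs, NAS for shared file access
  // with modest I/O needs, and OSS as the low-cost default.
  def choose(w: Workload): Storage =
    if (w.hotData || w.needsHdfsApi) DFS
    else if (w.needsSharedFileSystem) NAS
    else OSS
}
```

For example, a read-heavy job over many small files with no special requirements falls through to OSS, while a job that reuses intermediate results through a mounted directory is mapped to NAS.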

In Spark Streaming scenarios, we often use MNS or Kafka, and sometimes Elasticsearch and HBase. These services are also supported by Alibaba Cloud. Developers can focus more on data modeling through the integration and use of these cloud services.

This article mainly discusses how separating storage and computing can reduce resource costs when big data workloads run in containers, and how to choose appropriate storage methods based on different scenarios to implement cloud-native, container-based big data computing. The next article will introduce how to elastically save costs on computing resource pools when storage and computing are separated.
