本文将为您介绍如何使用TPC-H(商业智能计算测试)对OLAP查询场景和Key/Value点查场景进行性能测试。

TPC-H简介

以下文字描述引用自TPC Benchmark™ H (TPC-H)规范:

“TPC-H是一个决策支持基准,由一套面向业务的临时查询和并发数据修改组成。选择的查询和填充数据库的数据具有广泛的行业相关性。该基准测试说明了决策支持系统可以检查大量数据,执行高度复杂的查询,并解答关键的业务问题。”

详情请参见TPCH Specification。

本文的TPC-H的实现基于TPC-H的基准测试,并不能与已发布的TPC-H基准测试结果相比较,本文中的测试并不符合TPC-H基准测试的所有要求。

数据集简介

TPC-H(商业智能计算测试)是美国交易处理效能委员会(TPC,Transaction Processing Performance Council)组织制定的用来模拟决策支持类应用的一个测试集。目前在学术界和工业界普遍采用它来评价决策支持技术方面应用的性能。

TPC-H是根据真实的生产运行环境来建模,模拟了一套销售系统的数据仓库。其共包含8张表,数据量可设定从1 GB~3 TB不等。其基准测试共包含了22个查询,主要评价指标各个查询的响应时间,即从提交查询到结果返回所需时间。其测试结果可综合反映系统处理查询时的能力。详情请参见TPC-H基准。

场景说明

本测试场景主要包含如下内容:

-

OLAP查询场景测试,主要使用列存表,直接使用TPC-H测试中的22条查询语句进行测试。

-

Key/Value点查场景测试,主要使用行存表,针对ORDERS使用行存表后,进行主键过滤的点查。

-

数据更新场景,主要用于测试OLAP引擎在有主键的情况下数据更新的性能。

测试数据量会直接影响测试结果,TPC-H的生成工具中使用SF(scale factor)控制生成数据量的大小,1 SF对应1 GB。

以上的数据量仅针对原始数据的数据量,不包括索引等空间占用,因此在准备环境时,您需要预留更多的空间。

注意事项

为了减少可能对测试结果有影响的变量,建议每次新建实例进行测试,请勿使用升或降配的实例。

OLAP查询场景测试

-

准备工作。

基础环境准备:您需要准备OLAP查询场景所需的基础环境。

-

创建Hologres实例,详情请参见购买Hologres实例。本次测试环境使用了独享(按量付费)的实例,由于该实例仅用于测试使用,计算资源配置选择96 核384 GB。您可以根据实际业务需求,选择计算资源的规格。

-

创建ECS实例,详情请参见创建ECS实例。本文使用的ECS实例规格如下:

参数

规格

实例规格

ecs.g6.4xlarge

镜像

Alibaba Cloud Linux 3.2104 LTS 64位

数据盘

类型为ESSD云盘,具体数据容量需根据测试的数据量大小决定。

-

-

下载并配置Hologres Benchmark测试工具包。

-

登录ECS实例,详情请参见连接ECS实例。

-

安装PSQL客户端。

yum update -y yum install postgresql-server -y yum install postgresql-contrib -y -

下载Hologres Benchmark测试工具包并解压。

wget https://oss-tpch.oss-cn-hangzhou.aliyuncs.com/hologres_benchmark.tar.gz tar xvf hologres_benchmark.tar.gz -

进入Hologres Benchmark目录。

cd hologres_benchmark -

执行

vim group_vars/all命令,配置Benchmark所需参数。# db config login_host: "" login_user: "" login_password: "" login_port: "" # benchmark run cluster: hologres cluster: "hologres" RUN_MODE: "HOTRUN" # benchmark config scale_factor: 1 work_dir_root: /your/working_dir/benchmark/workdirs dataset_generate_root_path: /your/working_dir/benchmark/datasets参数介绍:

类型

参数

描述

Hologres服务连接参数

login_host

Hologres实例的指定VPC网络域名。

您可以登录管理控制台,进入实例详情页,从网络信息的域名列获取指定VPC的域名。

说明此处域名不包含端口。例如:

hgpostcn-cn-nwy364b5v009-cn-shanghai-vpc-st.hologres.aliyuncs.comlogin_port

Hologres实例的指定VPC网络端口。

您可以登录管理控制台,进入实例详情页,从网络信息的域名列获取指定VPC的域名的端口。

login_user

账号的AccessKey ID。

您可以单击AccessKey 管理,获取AccessKey ID。

login_password

当前账号的AccessKey Secret。

Benchmark配置参数

scale_factor

数据集的比例因子,控制生成数据量的大小。默认值为1,单位为:GB。

work_dir_root

工作目录的根目录,用于存放TPCH相关的建表语句、执行SQL等数据。默认值为

/your/working_dir/benchmark/workdirs。dataset_generate_root_path

存放生成测试数据集的路径。默认值为

/your/working_dir/benchmark/datasets。

-

-

执行以下命令,进行全流程自动化TPC-H测试。

全流程自动化进行TPC-H测试,主要包括:生成数据、创建测试数据库tpc_h_sf<scale_factor>,例如tpc_h_sf1000、创建表、导入数据。

bin/run_tpch.sh您还可以执行以下命令,单独执行TPC-H查询测试。

bin/run_tpch.sh query -

查看测试结果。

-

测试结果概览。

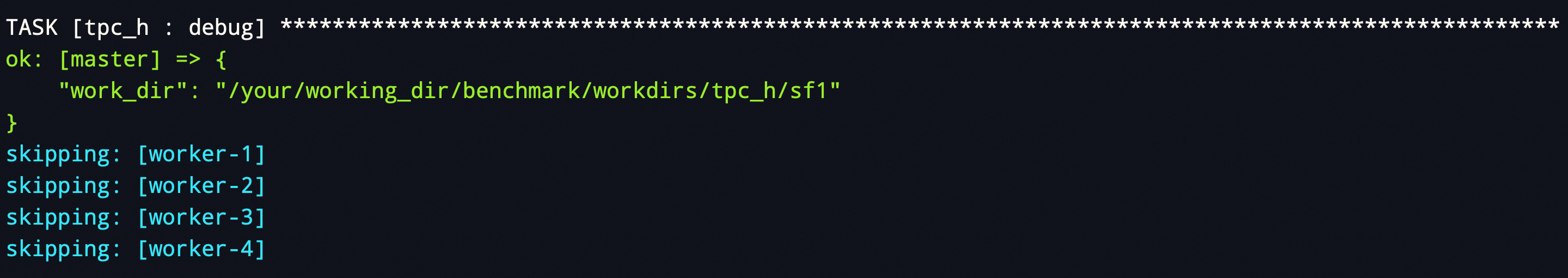

bin/run_tpch.sh命令执行完后会直接输出测试结果。测试结果类似下所示。TASK [tpc_h : debug] ************************************************************************************************** skipping: [worker-1] ok: [master] => { "command_output.stdout_lines": [ "[info] 2024-06-28 14:46:09.768 | Run sql queries started.", "[info] 2024-06-28 14:46:09.947 | Run q10.sql started.", "[info] 2024-06-28 14:46:10.088 | Run q10.sql finished. Time taken: 0:00:00, 138 ms", "[info] 2024-06-28 14:46:10.239 | Run q11.sql started.", "[info] 2024-06-28 14:46:10.396 | Run q11.sql finished. Time taken: 0:00:00, 154 ms", "[info] 2024-06-28 14:46:10.505 | Run q12.sql started.", "[info] 2024-06-28 14:46:10.592 | Run q12.sql finished. Time taken: 0:00:00, 85 ms", "[info] 2024-06-28 14:46:10.703 | Run q13.sql started.", "[info] 2024-06-28 14:46:10.793 | Run q13.sql finished. Time taken: 0:00:00, 88 ms", "[info] 2024-06-28 14:46:10.883 | Run q14.sql started.", "[info] 2024-06-28 14:46:10.981 | Run q14.sql finished. Time taken: 0:00:00, 95 ms", "[info] 2024-06-28 14:46:11.132 | Run q15.sql started.", "[info] 2024-06-28 14:46:11.266 | Run q15.sql finished. Time taken: 0:00:00, 131 ms", "[info] 2024-06-28 14:46:11.441 | Run q16.sql started.", "[info] 2024-06-28 14:46:11.609 | Run q16.sql finished. Time taken: 0:00:00, 165 ms", "[info] 2024-06-28 14:46:11.728 | Run q17.sql started.", "[info] 2024-06-28 14:46:11.818 | Run q17.sql finished. Time taken: 0:00:00, 88 ms", "[info] 2024-06-28 14:46:12.017 | Run q18.sql started.", "[info] 2024-06-28 14:46:12.184 | Run q18.sql finished. Time taken: 0:00:00, 164 ms", "[info] 2024-06-28 14:46:12.287 | Run q19.sql started.", "[info] 2024-06-28 14:46:12.388 | Run q19.sql finished. Time taken: 0:00:00, 98 ms", "[info] 2024-06-28 14:46:12.503 | Run q1.sql started.", "[info] 2024-06-28 14:46:12.597 | Run q1.sql finished. Time taken: 0:00:00, 93 ms", "[info] 2024-06-28 14:46:12.732 | Run q20.sql started.", "[info] 2024-06-28 14:46:12.888 | Run q20.sql finished. Time taken: 0:00:00, 154 ms", "[info] 2024-06-28 14:46:13.184 | Run q21.sql started.", "[info] 2024-06-28 14:46:13.456 | Run q21.sql finished. Time taken: 0:00:00, 269 ms", "[info] 2024-06-28 14:46:13.558 | Run q22.sql started.", "[info] 2024-06-28 14:46:13.657 | Run q22.sql finished. Time taken: 0:00:00, 97 ms", "[info] 2024-06-28 14:46:13.796 | Run q2.sql started.", "[info] 2024-06-28 14:46:13.935 | Run q2.sql finished. Time taken: 0:00:00, 136 ms", "[info] 2024-06-28 14:46:14.051 | Run q3.sql started.", "[info] 2024-06-28 14:46:14.155 | Run q3.sql finished. Time taken: 0:00:00, 101 ms", "[info] 2024-06-28 14:46:14.255 | Run q4.sql started.", "[info] 2024-06-28 14:46:14.341 | Run q4.sql finished. Time taken: 0:00:00, 83 ms", "[info] 2024-06-28 14:46:14.567 | Run q5.sql started.", "[info] 2024-06-28 14:46:14.799 | Run q5.sql finished. Time taken: 0:00:00, 230 ms", "[info] 2024-06-28 14:46:14.881 | Run q6.sql started.", "[info] 2024-06-28 14:46:14.950 | Run q6.sql finished. Time taken: 0:00:00, 67 ms", "[info] 2024-06-28 14:46:15.138 | Run q7.sql started.", "[info] 2024-06-28 14:46:15.320 | Run q7.sql finished. Time taken: 0:00:00, 180 ms", "[info] 2024-06-28 14:46:15.572 | Run q8.sql started.", "[info] 2024-06-28 14:46:15.831 | Run q8.sql finished. Time taken: 0:00:00, 256 ms", "[info] 2024-06-28 14:46:16.081 | Run q9.sql started.", "[info] 2024-06-28 14:46:16.322 | Run q9.sql finished. Time taken: 0:00:00, 238 ms", "[info] 2024-06-28 14:46:16.325 | ----------- HOT RUN finished. Time taken: 3255 mill_sec -----------------" ] } skipping: [worker-2] skipping: [worker-3] skipping: [worker-4] TASK [tpc_h : clear Env] ********************************************************************************************** skipping: [worker-1] skipping: [worker-2] skipping: [worker-3] skipping: [worker-4] ok: [master] TASK [tpc_h : debug] ************************************************************************************************** ok: [master] => { "work_dir": "/your/working_dir/benchmark/workdirs/tpc_h/sf1" } skipping: [worker-1] skipping: [worker-2] skipping: [worker-3] skipping: [worker-4] -

测试结果详情。

成功执行

bin/run_tpch.sh命令后,系统会构建好整个TPC-H测试的工作目录并输出<work_dir>目录的路径信息,您可以切换到该路径下查看相关的Query语句、建表语句和执行日志等信息。如下图所示。

您可以通过命令

cd <work_dir>/logs进入工作目录的Logs下,查看测试结果以及执行SQL的详细结果。<work_dir>的目录结果如下所示。working_dir/ `-- benchmark |-- datasets | `-- tpc_h | `-- sf1 | |-- worker-1 | | |-- customer.tbl | | `-- lineitem.tbl | |-- worker-2 | | |-- orders.tbl | | `-- supplier.tbl | |-- worker-3 | | |-- nation.tbl | | `-- partsupp.tbl | `-- worker-4 | |-- part.tbl | `-- region.tbl `-- workdirs `-- tpc_h `-- sf1 |-- config |-- hologres | |-- logs | | |-- q10.sql.err | | |-- q10.sql.out | | |-- q11.sql.err | | |-- q11.sql.out | | |-- q12.sql.err | | |-- q12.sql.out | | |-- q13.sql.err | | |-- q13.sql.out | | |-- q14.sql.err | | |-- q14.sql.out | | |-- q15.sql.err | | |-- q15.sql.out | | |-- q16.sql.err | | |-- q16.sql.out | | |-- q17.sql.err | | |-- q17.sql.out | | |-- q18.sql.err | | |-- q18.sql.out | | |-- q19.sql.err | | |-- q19.sql.out | | |-- q1.sql.err | | |-- q1.sql.out | | |-- q20.sql.err | | |-- q20.sql.out | | |-- q21.sql.err | | |-- q21.sql.out | | |-- q22.sql.err | | |-- q22.sql.out | | |-- q2.sql.err | | |-- q2.sql.out | | |-- q3.sql.err | | |-- q3.sql.out | | |-- q4.sql.err | | |-- q4.sql.out | | |-- q5.sql.err | | |-- q5.sql.out | | |-- q6.sql.err | | |-- q6.sql.out | | |-- q7.sql.err | | |-- q7.sql.out | | |-- q8.sql.err | | |-- q8.sql.out | | |-- q9.sql.err | | |-- q9.sql.out | | `-- run.log | `-- logs-20240628144609 | |-- q10.sql.err | |-- q10.sql.out | |-- q11.sql.err | |-- q11.sql.out | |-- q12.sql.err | |-- q12.sql.out | |-- q13.sql.err | |-- q13.sql.out | |-- q14.sql.err | |-- q14.sql.out | |-- q15.sql.err | |-- q15.sql.out | |-- q16.sql.err | |-- q16.sql.out | |-- q17.sql.err | |-- q17.sql.out | |-- q18.sql.err | |-- q18.sql.out | |-- q19.sql.err | |-- q19.sql.out | |-- q1.sql.err | |-- q1.sql.out | |-- q20.sql.err | |-- q20.sql.out | |-- q21.sql.err | |-- q21.sql.out | |-- q22.sql.err | |-- q22.sql.out | |-- q2.sql.err | |-- q2.sql.out | |-- q3.sql.err | |-- q3.sql.out | |-- q4.sql.err | |-- q4.sql.out | |-- q5.sql.err | |-- q5.sql.out | |-- q6.sql.err | |-- q6.sql.out | |-- q7.sql.err | |-- q7.sql.out | |-- q8.sql.err | |-- q8.sql.out | |-- q9.sql.err | |-- q9.sql.out | `-- run.log |-- queries | |-- ddl | | |-- hologres_analyze_tables.sql | | `-- hologres_create_tables.sql | |-- q10.sql | |-- q11.sql | |-- q12.sql | |-- q13.sql | |-- q14.sql | |-- q15.sql | |-- q16.sql | |-- q17.sql | |-- q18.sql | |-- q19.sql | |-- q1.sql | |-- q20.sql | |-- q21.sql | |-- q22.sql | |-- q2.sql | |-- q3.sql | |-- q4.sql | |-- q5.sql | |-- q6.sql | |-- q7.sql | |-- q8.sql | `-- q9.sql |-- run_hologres.sh |-- run_mysql.sh |-- run.sh `-- tpch_tools |-- dbgen |-- qgen `-- resouces |-- dists.dss `-- queries |-- 10.sql |-- 11.sql |-- 12.sql |-- 13.sql |-- 14.sql |-- 15.sql |-- 16.sql |-- 17.sql |-- 18.sql |-- 19.sql |-- 1.sql |-- 20.sql |-- 21.sql |-- 22.sql |-- 2.sql |-- 3.sql |-- 4.sql |-- 5.sql |-- 6.sql |-- 7.sql |-- 8.sql `-- 9.sql

-

Key/Value点查场景测试

Key/Value点查场景测试,可继续使用OLAP查询场景测试创建的数据库hologres_tpch以及orders表进行Key/Value点查场景测试。具体步骤如下:

-

创建表

由于Key/Value点查场景使用行存表,因此不能直接使用OLAP查询场景测试中的order表,需新建表。您可使用PSQL客户端连接Hologres,并运行如下命令新建orders_row表。

说明PSQL客户端连接Hologres,详情请参见连接Hologres并开发。

DROP TABLE IF EXISTS public.orders_row; BEGIN; CREATE TABLE public.orders_row( O_ORDERKEY BIGINT NOT NULL PRIMARY KEY ,O_CUSTKEY INT NOT NULL ,O_ORDERSTATUS TEXT NOT NULL ,O_TOTALPRICE DECIMAL(15,2) NOT NULL ,O_ORDERDATE TIMESTAMPTZ NOT NULL ,O_ORDERPRIORITY TEXT NOT NULL ,O_CLERK TEXT NOT NULL ,O_SHIPPRIORITY INT NOT NULL ,O_COMMENT TEXT NOT NULL ); CALL SET_TABLE_PROPERTY('public.orders_row', 'orientation', 'row'); CALL SET_TABLE_PROPERTY('public.orders_row', 'clustering_key', 'o_orderkey'); CALL SET_TABLE_PROPERTY('public.orders_row', 'distribution_key', 'o_orderkey'); COMMIT; -

导入数据

您可以使用如下INSERT INTO语句,将TPC-H数据集中的orders表数据导入至orders_row表。

说明Hologres从V2.1.17版本起支持Serverless Computing能力,针对大数据量离线导入、大型ETL作业、外表大数据量查询等场景,使用Serverless Computing执行该类任务可以直接使用额外的Serverless资源,避免使用实例自身资源,无需为实例预留额外的计算资源,显著提升实例稳定性、减少OOM概率,且仅需为任务单独付费。Serverless Computing详情请参见Serverless Computing,Serverless Computing使用方法请参见Serverless Computing使用指南。

-- (可选)推荐使用Serverless Computing执行大数据量离线导入和ETL作业 SET hg_computing_resource = 'serverless'; INSERT INTO public.orders_row SELECT * FROM public.orders; -- 重置配置,保证非必要的SQL不会使用serverless资源。 RESET hg_computing_resource; -

执行查询

-

生成查询语句。

Key/Value点查场景主要有两种查询场景,具体查询语句如下:

查询方式

查询语句

说明

单值筛选

SELECT column_a ,column_b ,... ,column_x FROM table_x WHERE pk = value_x ;此查询语句主要用于单值筛选,即

WHERE的SQL语句取值唯一。多值筛选

SELECT column_a ,column_b ,... ,column_x FROM table_x WHERE pk IN ( value_a, value_b,..., value_x ) ;此查询语句主要用于多值筛选,即

WHERE的SQL语句可以取多个值。您可以使用如下脚本生成所需的SQL语句。

rm -rf kv_query mkdir kv_query cd kv_query echo " \set column_values random(1,99999999) select O_ORDERKEY,O_CUSTKEY,O_ORDERSTATUS,O_TOTALPRICE,O_ORDERDATE,O_ORDERPRIORITY,O_CLERK,O_SHIPPRIORITY,O_COMMENT from public.orders_row WHERE o_orderkey =:column_values; " >> kv_query_single.sql echo " \set column_values1 random(1,99999999) \set column_values2 random(1,99999999) \set column_values3 random(1,99999999) \set column_values4 random(1,99999999) \set column_values5 random(1,99999999) \set column_values6 random(1,99999999) \set column_values7 random(1,99999999) \set column_values8 random(1,99999999) \set column_values9 random(1,99999999) select O_ORDERKEY,O_CUSTKEY,O_ORDERSTATUS,O_TOTALPRICE,O_ORDERDATE,O_ORDERPRIORITY,O_CLERK,O_SHIPPRIORITY,O_COMMENT from public.orders_row WHERE o_orderkey in(:column_values1,:column_values2,:column_values3,:column_values4,:column_values5,:column_values6,:column_values7,:column_values8,:column_values9); " >> kv_query_in.sql脚本执行完成后,会生成2个SQL文件:

-

kv_query_single.sql表示单值筛选的SQL。 -

kv_query_in.sql表示多值筛选的SQL,该脚本会随机生成一个针对10个值筛选的SQL。

-

-

为了方便统计查询信息,您需要使用pgbench工具。您可以使用如下命令安装pgbench工具。

yum install postgresql-contrib -y为了避免因工具兼容性问题影响测试,建议您安装版本为13及以上的pgbench工具。如果您本地已经安装pgbench工具,请确保其版本为9.6以上。您可以通过执行如下命令查看当前工具版本。

pgbench --version -

执行测试语句。

说明以下命令需在生成查询语句的目录下执行。

-

针对单值筛选的场景,使用pgbench工具进行压测。

PGUSER=<AccessKey_ID> PGPASSWORD=<AccessKey_Secret> PGDATABASE=<Database> pgbench -h <Endpoint> -p <Port> -c <Client_Num> -T <Query_Seconds> -M prepared -n -f kv_query_single.sql -

针对多值筛选的场景,使用pgbench工具进行压测。

PGUSER=<AccessKey_ID> PGPASSWORD=<AccessKey_Secret> PGDATABASE=<Database> pgbench -h <Endpoint> -p <Port> -c <Client_Num> -T <Query_Seconds> -M prepared -n -f kv_query_in.sql

参数说明如下表所示。

参数

说明

AccessKey_ID

当前阿里云账号的AccessKey ID。

您可以单击AccessKey 管理,获取AccessKey ID。

AccessKey_Secret

当前阿里云账号的AccessKey Secret。

您可以单击AccessKey 管理,获取AccessKey Secret。

Database

-

Hologres的数据库名称。

-

开通Hologres实例后,系统自动创建postgres数据库。

-

您可以使用postgres数据库链接Hologres,但是该数据库分配到的资源较少,开发实际业务建议您新建数据库。详情请参见创建数据库。

Endpoint

Hologres实例的网络地址(Endpoint)。

您可以进入Hologres管理控制台的实例详情页,从网络信息区域获取网络地址。

Port

Hologres实例的网络端口。

进入Hologres管理控制台的实例详情页获取网络端口。

Client_Num

客户端数目,即并发度。

例如,本文由于该测试仅测试查询性能,不测试并发,将并发度置为1即可。

Query_Seconds

每个客户端需要执行的每个Query的压测时长(Query_Seconds,秒)。例如,本文该参数取值为300。

-

-

数据更新场景

该场景用于测试OLAP引擎在有主键情况下数据更新的性能,以及在主键冲突时更新整行数据。

-

生成查询

echo " \set O_ORDERKEY random(1,99999999) INSERT INTO public.orders_row(o_orderkey,o_custkey,o_orderstatus,o_totalprice,o_orderdate,o_orderpriority,o_clerk,o_shippriority,o_comment) VALUES (:O_ORDERKEY,1,'demo',1.1,'2021-01-01','demo','demo',1,'demo') on conflict(o_orderkey) do update set (o_orderkey,o_custkey,o_orderstatus,o_totalprice,o_orderdate,o_orderpriority,o_clerk,o_shippriority,o_comment)= ROW(excluded.*); " > /root/insert_on_conflict.sql -

插入及更新,更对参数说明请参见参数说明。

PGUSER=<AccessKey_ID> PGPASSWORD=<AccessKey_Secret> PGDATABASE=<Database> pgbench -h <Endpoint> -p <Port> -c <Client_Num> -T <Query_Seconds> -M prepared -n -f /root/insert_on_conflict.sql -

示例结果

transaction type: Custom query scaling factor: 1 query mode: prepared number of clients: 249 number of threads: 1 duration: 60 s number of transactions actually processed: 1923038 tps = 32005.850214 (including connections establishing) tps = 36403.145722 (excluding connections establishing)

Flink实时写入场景

该场景用于测试实时数据写入能力。

-

Hologres DDL

该场景Hologres的表拥有10列,其中

key列为主键,Hologres DDL如下。DROP TABLE IF EXISTS flink_insert; BEGIN ; CREATE TABLE IF NOT EXISTS flink_insert( key INT PRIMARY KEY ,value1 TEXT ,value2 TEXT ,value3 TEXT ,value4 TEXT ,value5 TEXT ,value6 TEXT ,value7 TEXT ,value8 TEXT ,value9 TEXT ); CALL SET_TABLE_PROPERTY('flink_insert', 'orientation', 'row'); CALL SET_TABLE_PROPERTY('flink_insert', 'clustering_key', 'key'); CALL SET_TABLE_PROPERTY('flink_insert', 'distribution_key', 'key'); COMMIT; -

Flink作业脚本

使用Flink全托管自带的随机数发生器向Hologres写入数据,当主键冲突时选择整行更新,单行数据量超过512 B,Flink作业脚本如下。

CREATE TEMPORARY TABLE flink_case_1_source ( key INT, value1 VARCHAR, value2 VARCHAR, value3 VARCHAR, value4 VARCHAR, value5 VARCHAR, value6 VARCHAR, value7 VARCHAR, value8 VARCHAR, value9 VARCHAR ) WITH ( 'connector' = 'datagen', -- optional options -- 'rows-per-second' = '1000000000', 'fields.key.min'='1', 'fields.key.max'='2147483647', 'fields.value1.length' = '57', 'fields.value2.length' = '57', 'fields.value3.length' = '57', 'fields.value4.length' = '57', 'fields.value5.length' = '57', 'fields.value6.length' = '57', 'fields.value7.length' = '57', 'fields.value8.length' = '57', 'fields.value9.length' = '57' ); -- 创建 Hologres 结果表 CREATE TEMPORARY TABLE flink_case_1_sink ( key INT, value1 VARCHAR, value2 VARCHAR, value3 VARCHAR, value4 VARCHAR, value5 VARCHAR, value6 VARCHAR, value7 VARCHAR, value8 VARCHAR, value9 VARCHAR ) WITH ( 'connector' = 'hologres', 'dbname'='<yourDbname>', --Hologres的数据库名称。 'tablename'='<yourTablename>', --Hologres用于接收数据的表名称。 'username'='<yourUsername>', --当前阿里云账号的AccessKey ID。 'password'='<yourPassword>', --当前阿里云账号的AccessKey Secret。 'endpoint'='<yourEndpoint>', --当前Hologres实例VPC网络的Endpoint。 'connectionSize' = '10', --默认为3 'jdbcWriteBatchSize' = '1024', --默认为256 'jdbcWriteBatchByteSize' = '2147483647', --默认为20971520 'mutatetype'='insertorreplace' --插入或整行替换已有数据 ); -- 进行 ETL 操作并写入数据 insert into flink_case_1_sink select key, value1, value2, value3, value4, value5, value6, value7, value8, value9 from flink_case_1_source ;参数说明请参见Hologres结果表。

-

示例结果

在Hologres的管理控制台的监控信息页面,即可看到RPS的数值。

TPC-H 22条查询语句

TPC-H 22条查询语句如下所示,您可以单击表格中的链接进行查看。

|

名称 |

查询语句 |

|||

|

TPCH 22条查询语句 |

||||

|

- |

- |

|||

-

Q1

select l_returnflag, l_linestatus, sum(l_quantity) as sum_qty, sum(l_extendedprice) as sum_base_price, sum(l_extendedprice * (1 - l_discount)) as sum_disc_price, sum(l_extendedprice * (1 - l_discount) * (1 + l_tax)) as sum_charge, avg(l_quantity) as avg_qty, avg(l_extendedprice) as avg_price, avg(l_discount) as avg_disc, count(*) as count_order from lineitem where l_shipdate <= date '1998-12-01' - interval '120' day group by l_returnflag, l_linestatus order by l_returnflag, l_linestatus; -

Q2

select s_acctbal, s_name, n_name, p_partkey, p_mfgr, s_address, s_phone, s_comment from part, supplier, partsupp, nation, region where p_partkey = ps_partkey and s_suppkey = ps_suppkey and p_size = 48 and p_type like '%STEEL' and s_nationkey = n_nationkey and n_regionkey = r_regionkey and r_name = 'EUROPE' and ps_supplycost = ( select min(ps_supplycost) from partsupp, supplier, nation, region where p_partkey = ps_partkey and s_suppkey = ps_suppkey and s_nationkey = n_nationkey and n_regionkey = r_regionkey and r_name = 'EUROPE' ) order by s_acctbal desc, n_name, s_name, p_partkey limit 100; -

Q3

select l_orderkey, sum(l_extendedprice * (1 - l_discount)) as revenue, o_orderdate, o_shippriority from customer, orders, lineitem where c_mktsegment = 'MACHINERY' and c_custkey = o_custkey and l_orderkey = o_orderkey and o_orderdate < date '1995-03-23' and l_shipdate > date '1995-03-23' group by l_orderkey, o_orderdate, o_shippriority order by revenue desc, o_orderdate limit 10; -

Q4

select o_orderpriority, count(*) as order_count from orders where o_orderdate >= date '1996-07-01' and o_orderdate < date '1996-07-01' + interval '3' month and exists ( select * from lineitem where l_orderkey = o_orderkey and l_commitdate < l_receiptdate ) group by o_orderpriority order by o_orderpriority; -

Q5

select n_name, sum(l_extendedprice * (1 - l_discount)) as revenue from customer, orders, lineitem, supplier, nation, region where c_custkey = o_custkey and l_orderkey = o_orderkey and l_suppkey = s_suppkey and c_nationkey = s_nationkey and s_nationkey = n_nationkey and n_regionkey = r_regionkey and r_name = 'EUROPE' and o_orderdate >= date '1996-01-01' and o_orderdate < date '1996-01-01' + interval '1' year group by n_name order by revenue desc; -

Q6

select sum(l_extendedprice * l_discount) as revenue from lineitem where l_shipdate >= date '1996-01-01' and l_shipdate < date '1996-01-01' + interval '1' year and l_discount between 0.02 - 0.01 and 0.02 + 0.01 and l_quantity < 24; -

Q7

select supp_nation, cust_nation, l_year, sum(volume) as revenue from ( select n1.n_name as supp_nation, n2.n_name as cust_nation, extract(year from l_shipdate) as l_year, l_extendedprice * (1 - l_discount) as volume from supplier, lineitem, orders, customer, nation n1, nation n2 where s_suppkey = l_suppkey and o_orderkey = l_orderkey and c_custkey = o_custkey and s_nationkey = n1.n_nationkey and c_nationkey = n2.n_nationkey and ( (n1.n_name = 'CANADA' and n2.n_name = 'BRAZIL') or (n1.n_name = 'BRAZIL' and n2.n_name = 'CANADA') ) and l_shipdate between date '1995-01-01' and date '1996-12-31' ) as shipping group by supp_nation, cust_nation, l_year order by supp_nation, cust_nation, l_year; -

Q8

select o_year, sum(case when nation = 'BRAZIL' then volume else 0 end) / sum(volume) as mkt_share from ( select extract(year from o_orderdate) as o_year, l_extendedprice * (1 - l_discount) as volume, n2.n_name as nation from part, supplier, lineitem, orders, customer, nation n1, nation n2, region where p_partkey = l_partkey and s_suppkey = l_suppkey and l_orderkey = o_orderkey and o_custkey = c_custkey and c_nationkey = n1.n_nationkey and n1.n_regionkey = r_regionkey and r_name = 'AMERICA' and s_nationkey = n2.n_nationkey and o_orderdate between date '1995-01-01' and date '1996-12-31' and p_type = 'LARGE ANODIZED COPPER' ) as all_nations group by o_year order by o_year; -

Q9

select nation, o_year, sum(amount) as sum_profit from ( select n_name as nation, extract(year from o_orderdate) as o_year, l_extendedprice * (1 - l_discount) - ps_supplycost * l_quantity as amount from part, supplier, lineitem, partsupp, orders, nation where s_suppkey = l_suppkey and ps_suppkey = l_suppkey and ps_partkey = l_partkey and p_partkey = l_partkey and o_orderkey = l_orderkey and s_nationkey = n_nationkey and p_name like '%maroon%' ) as profit group by nation, o_year order by nation, o_year desc; -

Q10

select c_custkey, c_name, sum(l_extendedprice * (1 - l_discount)) as revenue, c_acctbal, n_name, c_address, c_phone, c_comment from customer, orders, lineitem, nation where c_custkey = o_custkey and l_orderkey = o_orderkey and o_orderdate >= date '1993-02-01' and o_orderdate < date '1993-02-01' + interval '3' month and l_returnflag = 'R' and c_nationkey = n_nationkey group by c_custkey, c_name, c_acctbal, c_phone, n_name, c_address, c_comment order by revenue desc limit 20; -

Q11

select ps_partkey, sum(ps_supplycost * ps_availqty) as value from partsupp, supplier, nation where ps_suppkey = s_suppkey and s_nationkey = n_nationkey and n_name = 'EGYPT' group by ps_partkey having sum(ps_supplycost * ps_availqty) > ( select sum(ps_supplycost * ps_availqty) * 0.0001000000 from partsupp, supplier, nation where ps_suppkey = s_suppkey and s_nationkey = n_nationkey and n_name = 'EGYPT' ) order by value desc; -

Q12

select l_shipmode, sum(case when o_orderpriority = '1-URGENT' or o_orderpriority = '2-HIGH' then 1 else 0 end) as high_line_count, sum(case when o_orderpriority <> '1-URGENT' and o_orderpriority <> '2-HIGH' then 1 else 0 end) as low_line_count from orders, lineitem where o_orderkey = l_orderkey and l_shipmode in ('FOB', 'AIR') and l_commitdate < l_receiptdate and l_shipdate < l_commitdate and l_receiptdate >= date '1997-01-01' and l_receiptdate < date '1997-01-01' + interval '1' year group by l_shipmode order by l_shipmode; -

Q13

select c_count, count(*) as custdist from ( select c_custkey, count(o_orderkey) as c_count from customer left outer join orders on c_custkey = o_custkey and o_comment not like '%special%deposits%' group by c_custkey ) c_orders group by c_count order by custdist desc, c_count desc; -

Q14

select 100.00 * sum(case when p_type like 'PROMO%' then l_extendedprice * (1 - l_discount) else 0 end) / sum(l_extendedprice * (1 - l_discount)) as promo_revenue from lineitem, part where l_partkey = p_partkey and l_shipdate >= date '1997-06-01' and l_shipdate < date '1997-06-01' + interval '1' month; -

Q15

with revenue0(SUPPLIER_NO, TOTAL_REVENUE) as ( select l_suppkey, sum(l_extendedprice * (1 - l_discount)) from lineitem where l_shipdate >= date '1995-02-01' and l_shipdate < date '1995-02-01' + interval '3' month group by l_suppkey ) select s_suppkey, s_name, s_address, s_phone, total_revenue from supplier, revenue0 where s_suppkey = supplier_no and total_revenue = ( select max(total_revenue) from revenue0 ) order by s_suppkey; -

Q16

select p_brand, p_type, p_size, count(distinct ps_suppkey) as supplier_cnt from partsupp, part where p_partkey = ps_partkey and p_brand <> 'Brand#45' and p_type not like 'SMALL ANODIZED%' and p_size in (47, 15, 37, 30, 46, 16, 18, 6) and ps_suppkey not in ( select s_suppkey from supplier where s_comment like '%Customer%Complaints%' ) group by p_brand, p_type, p_size order by supplier_cnt desc, p_brand, p_type, p_size; -

Q17

select sum(l_extendedprice) / 7.0 as avg_yearly from lineitem, part where p_partkey = l_partkey and p_brand = 'Brand#51' and p_container = 'WRAP PACK' and l_quantity < ( select 0.2 * avg(l_quantity) from lineitem where l_partkey = p_partkey ); -

Q18

select c_name, c_custkey, o_orderkey, o_orderdate, o_totalprice, sum(l_quantity) from customer, orders, lineitem where o_orderkey in ( select l_orderkey from lineitem group by l_orderkey having sum(l_quantity) > 312 ) and c_custkey = o_custkey and o_orderkey = l_orderkey group by c_name, c_custkey, o_orderkey, o_orderdate, o_totalprice order by o_totalprice desc, o_orderdate limit 100; -

Q19

select sum(l_extendedprice* (1 - l_discount)) as revenue from lineitem, part where ( p_partkey = l_partkey and p_brand = 'Brand#52' and p_container in ('SM CASE', 'SM BOX', 'SM PACK', 'SM PKG') and l_quantity >= 3 and l_quantity <= 3 + 10 and p_size between 1 and 5 and l_shipmode in ('AIR', 'AIR REG') and l_shipinstruct = 'DELIVER IN PERSON' ) or ( p_partkey = l_partkey and p_brand = 'Brand#43' and p_container in ('MED BAG', 'MED BOX', 'MED PKG', 'MED PACK') and l_quantity >= 12 and l_quantity <= 12 + 10 and p_size between 1 and 10 and l_shipmode in ('AIR', 'AIR REG') and l_shipinstruct = 'DELIVER IN PERSON' ) or ( p_partkey = l_partkey and p_brand = 'Brand#52' and p_container in ('LG CASE', 'LG BOX', 'LG PACK', 'LG PKG') and l_quantity >= 21 and l_quantity <= 21 + 10 and p_size between 1 and 15 and l_shipmode in ('AIR', 'AIR REG') and l_shipinstruct = 'DELIVER IN PERSON' ); -

Q20

select s_name, s_address from supplier, nation where s_suppkey in ( select ps_suppkey from partsupp where ps_partkey in ( select p_partkey from part where p_name like 'drab%' ) and ps_availqty > ( select 0.5 * sum(l_quantity) from lineitem where l_partkey = ps_partkey and l_suppkey = ps_suppkey and l_shipdate >= date '1996-01-01' and l_shipdate < date '1996-01-01' + interval '1' year ) ) and s_nationkey = n_nationkey and n_name = 'KENYA' order by s_name; -

Q21

select s_name, count(*) as numwait from supplier, lineitem l1, orders, nation where s_suppkey = l1.l_suppkey and o_orderkey = l1.l_orderkey and o_orderstatus = 'F' and l1.l_receiptdate > l1.l_commitdate and exists ( select * from lineitem l2 where l2.l_orderkey = l1.l_orderkey and l2.l_suppkey <> l1.l_suppkey ) and not exists ( select * from lineitem l3 where l3.l_orderkey = l1.l_orderkey and l3.l_suppkey <> l1.l_suppkey and l3.l_receiptdate > l3.l_commitdate ) and s_nationkey = n_nationkey and n_name = 'PERU' group by s_name order by numwait desc, s_name limit 100; -

Q22

select cntrycode, count(*) as numcust, sum(c_acctbal) as totacctbal from ( select substring(c_phone from 1 for 2) as cntrycode, c_acctbal from customer where substring(c_phone from 1 for 2) in ('24', '32', '17', '18', '12', '14', '22') and c_acctbal > ( select avg(c_acctbal) from customer where c_acctbal > 0.00 and substring(c_phone from 1 for 2) in ('24', '32', '17', '18', '12', '14', '22') ) and not exists ( select * from orders where o_custkey = c_custkey ) ) as custsale group by cntrycode order by cntrycode;