-

Asia Accelerator Hot

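Accelerate your success in Asia with Alibaba Cloud

-

Information Compliance

One-stop security and compliance consulting services

-

China Gateway - MLPS 2.0 Compliance New

One-stop MLPS 2.0 compliance solution

-

China Gateway - Network

Enter the Chinese market on our network

-

China Gateway - Global Application Acceleration New

Improve the performance and security of internet-facing applications

-

China Gateway - Security

Keep your business in China secure and worry-free

-

China Gateway - Data Security New

Protect your data assets with a robust data security framework

-

ICP Support Services Hot

Guidance and consulting on the ICP filing application process

-

China Gateway - Omni-Domain Data Middle Platform New

An all-domain solution for building, managing, and applying big data

-

China Gateway - First-Party Data Middle Platform New

A one-stop solution for building, managing, and applying big data within the enterprise

-

China Gateway - Business Middle Platform New

Bring your procurement and sales onto a single enterprise-grade omnichannel digital platform

-

China Gateway - Intelligent Customer Service Solution New

An omnichannel chatbot with built-in AI-driven, human-like, multilingual conversation

-

China Gateway - Online Education

Quickly build an online education platform

-

China Gateway - Domain Name Registration

Domain name registration, analysis, and protection services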

- 容器服务

-

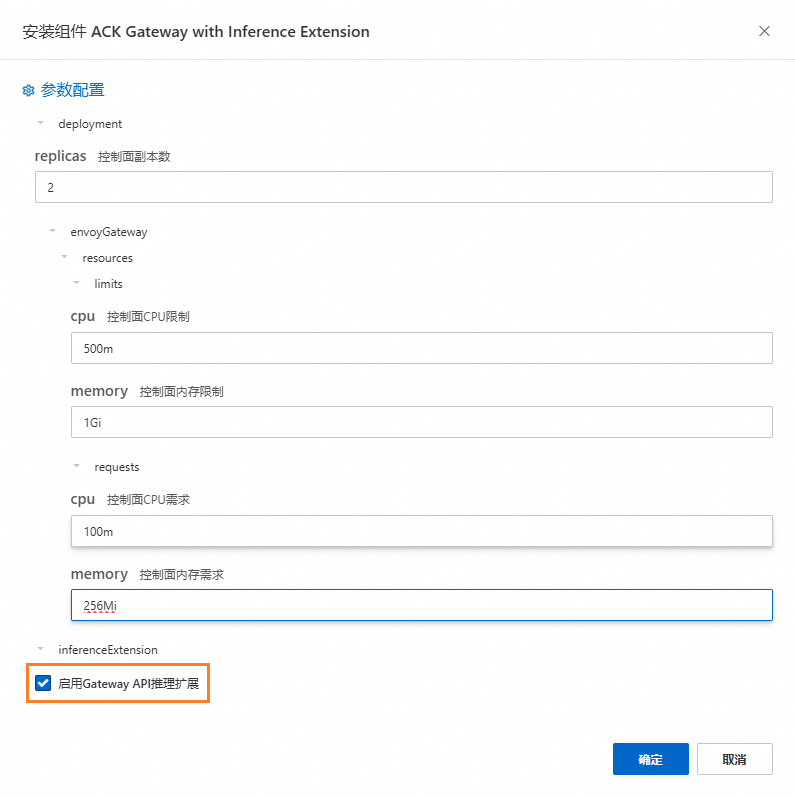

容器服务 Kubernetes 版 ACK Hot

云原生 Kubernetes 容器化应用运行环境

-

容器计算服务 ACS New

以 Kubernetes 为使用界面的容器服务产品,提供符合容器规范的算力资源

-

容器镜像服务 ACR

安全的镜像托管服务,支持全生命周期管理

-

服务网格 ASM

多集群环境下微服务应用流量统一管理

-

分布式云容器平台 ACK One

提供任意基础设施上容器集群的统一管控,助您轻松管控分布式云场景

-

Serverless Kubernetes 服务 ASK

高弹性、高可靠的企业级无服务器 Kubernetes 容器产品

-

弹性容器实例 ECI

敏捷安全的 Serverless 容器运行服务

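- Basic Storage Services

-

Block Storage (EBS)

High-reliability, high-performance, low-latency block storage for virtual machines and containers

-

Object Storage Service (OSS) Hot

A massive-scale, secure, low-cost, and highly reliable cloud storage service

-

File Storage NAS Hot

A reliable, elastic, high-performance file storage service with shared access

-

File Storage CPFS

A fully managed, scalable parallel file system service

-

Tablestore

A fully managed NoSQL service for real-time storage of structured data

-

Storage Capacity Unit (SCU)

A capacity package that offsets charges for multiple storage products, combining flexibility with long-term cost optimization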

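- Cloud Networking

-

Server Load Balancer (SLB) Hot

Automatically distribute your application's traffic across resources in different zones

-

Elastic IP Address Hot

Bind to and unbind from ECS instances in a VPC at any time

-

Cloud Data Transfer (CDT)

Unified billing for internet and cross-region traffic on the cloud network

-

General-Purpose Data Transfer Plan

A cost-effective plan that offsets pay-by-traffic data transfer fees

-

Virtual Private Cloud (VPC)

Create an isolated network on the cloud and run resources in a private environment

-

NAT Gateway

Build the ingress and egress for internet traffic in a VPC

-

Network Intelligence Service (NIS) Beta

A self-service network O&M service with network status visualization and intelligent fault diagnosis

-

PrivateLink

Secure and convenient dedicated connections to services on the cloud

-

Alibaba Cloud DNS PrivateZone

A private DNS resolution service based on Alibaba Cloud VPC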

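- Cloud Security

-

DDoS Protection Hot

Protect online businesses from high-volume DDoS attacks

-

O&M Security Center (Bastion Host)

A control platform for system O&M and security auditing

-

Cloud Firewall

The first network security infrastructure for moving your business to the cloud

-

Office Security Platform (SASE) New

An office security control platform that integrates zero-trust intranet access, office data protection, endpoint management, and more

-

Security Center Hot

A 24/7 security operations platform

-

Web Application Firewall (WAF) Hot

Defend against common web attacks and mitigate HTTP flood attacks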

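- Data Security

-

Certificate Management Service (formerly SSL Certificates) Hot

Enable site-wide HTTPS for trusted web access

-

Encryption Service (HSM)

Data encryption and decryption, signing and signature verification, and data authentication for cloud applications, meeting industry standards and cryptographic algorithm levels

-

Data Security Center (formerly SDDP)

A security service that discovers, classifies, and protects sensitive data

-

Key Management Service (KMS)

Create, control, and manage your encryption keys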

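- Microservice Tools and Platforms

-

Application High Availability Service (AHAS)

Quickly improve the high availability of your applications

-

Enterprise Distributed Application Service (EDAS)

A PaaS platform built around applications and microservices

-

Microservices Engine (MSE)

A one-stop platform compatible with mainstream open-source microservice ecosystems

-

Service Mesh (ASM)

Unified traffic management for microservice applications across multiple clusters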

- Relational Databases

-

PolarDB Cloud-Native Database Hot

Super MySQL and PostgreSQL, highly compatible with Oracle syntax

-

ApsaraDB RDS

Fully managed MySQL, PostgreSQL, SQL Server, and MariaDB

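- NoSQL Databases

-

Tair (Redis®-Compatible) Hot

A Redis®-compatible cache and key-value database

-

Lindorm Cloud-Native Multi-Model Database

Compatible with multiple open-source interfaces, including Apache Cassandra, Apache HBase, Elasticsearch, and OpenTSDB

-

ApsaraDB for MongoDB

A document database that supports replica set and sharded cluster architectures

-

ApsaraDB for HBase

100% compatible with Apache HBase and deeply extended; a stable, easy-to-use, low-cost NoSQL database

-

Time Series Database for InfluxDB®

A low-cost, highly available, elastically scalable online time series database service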

- 数据计算与分析

-

检索分析服务 Elasticsearch 版 Hot

专为搜索和分析而设计,成本效益达到开源的两倍,采用最新的企业级AI搜索和AI助手功能。

-

实时数仓 Hologres

一款兼容PostgreSQL协议的实时交互式分析产品

-

云原生大数据计算服务 MaxCompute Hot

一种快速、完全托管的 TB/PB 级数据仓库

-

实时计算 Flink 版

基于 Flink 为大数据行业提供解决方案

-

大模型服务平台(百炼) New

基于Qwen和其他热门模型的一站式生成式AI平台,可构建了解您业务的智能应用程

-

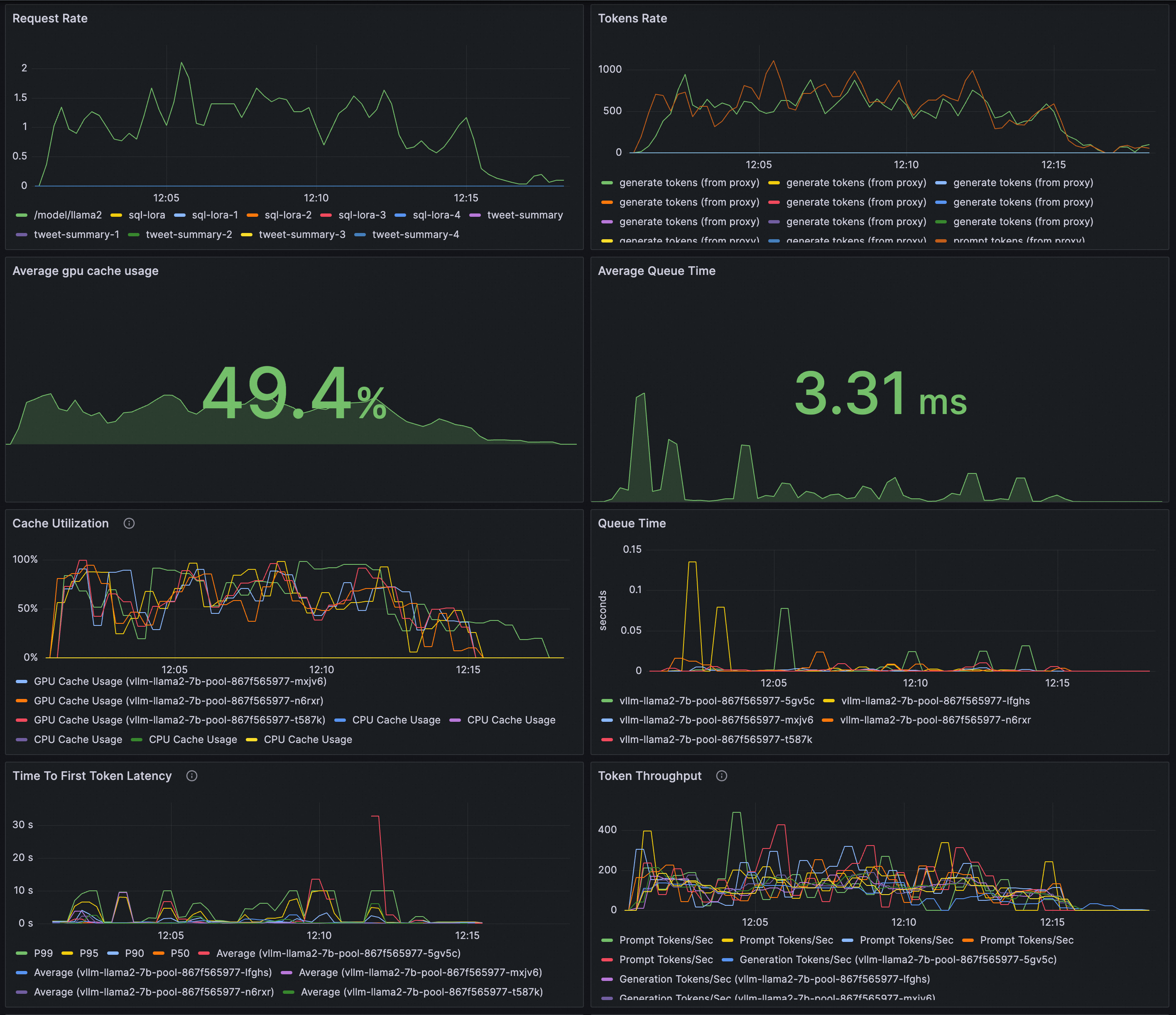

人工智能平台 PAI

一站式机器学习平台,满足数据挖掘分析需求

-

向量检索服务 DashVector

高性能向量检索服务,提供低代码API和高成本效益

-

智能推荐 AIRec

帮助您的应用快速构建高质量的个性化推荐服务能力

-

机器翻译

提供定制化的高品质机器翻译服务

-

PAI灵骏智算服务

全面的AI计算平台,满足大模型训练等高性能AI计算的算力和性能需求

-

智能对话机器人 Beta

具备智能会话能力的会话机器人

-

图像搜索

基于机器学习的智能图像搜索产品

-

离线视觉智能软件包

基于阿里云深度学习技术,为用户提供图像分割、视频分割、文字识别等离线SDK能力,支持Android、iOS不同的适用终端。

-

智能语音交互

语音识别、语音合成服务以及自学习平台

-

智能开放搜索 OpenSearch

一站式智能搜索业务开发平台

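- Enterprise Cloud Services

-

Cloud Market Data

Help financial enterprises quickly build ultra-low-latency, high-quality, stable market data services

-

Energy Expert

Help enterprises quickly measure and analyze their carbon emissions and product carbon footprints

-

Robotic Process Automation (RPA)

Automate enterprise workflows to improve efficiency across the board

-

SOFAStack™ Financial Distributed Architecture

A one-stop platform for developing and operating highly available applications on a financial-grade cloud-native distributed architecture

-

ZOLOZ Real ID

A digital remote online eKYC solution

-

ZOLOZ SMART AML

A comprehensive, big data-driven anti-money laundering (AML) solution with intelligent detection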

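- O&M and Monitoring

-

Application Real-Time Monitoring Service (ARMS) Hot

Alibaba Cloud's APM monitoring product

-

CloudMonitor

Real-time cloud monitoring to keep applications and servers running smoothly

-

System O&M Management

An automated cloud O&M platform that provides comprehensive services for system administrators managing cloud infrastructure

-

Advisor

A risk detection service for your cloud resources

-

Managed Service for OpenTelemetry

Improve diagnostic efficiency in distributed environments

-

Simple Log Service (SLS) Hot

A one-stop service for log data that can be deployed without development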

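Cost Optimization Solutions

ECS Reserved Instances

A billing method that balances cost and flexibility for elastic computing products

Related Solutions

Cloud-Native AI Suite

Accelerate AI platform development and improve resource efficiency and delivery speed

FinOps

Analyze your cloud consumption in real time and cut costs

SecOps

Implement fine-grained security controls

DevOps

Maximize your DevOps advantages quickly and securely

Related Solutions

Bring Your Own IP to the Cloud

Bring your own public IP addresses to the cloud

Global Network Interconnection

An end-to-end software-defined networking solution that drives business growth for multinational enterprises

Global Application Acceleration

Improve the performance and security of internet-facing applications

Global Internet Access

Migrate your IDC gateways to the cloud

Related Solutions

FinTech Cloud Database Solutions

Cloud-native database solutions built specifically for FinTech

Gaming Industry Cloud Database Solutions

Multiple proven architectures that solve all your data problems

Oracle Database Migration

Smoothly migrate Oracle databases to cloud-native databases

Database Migration

Accelerate the migration of your data to Alibaba Cloud

Related Solutions

Data Lake on Alibaba Cloud

Store, manage, and analyze data of any scale and type in real time

Related Solutions

Digital Lending

Use big data and AI to reduce credit risk and black/gray-market fraud

Big Data Consulting Services for Enterprise Data Technology

Help enterprises modernize their data and plan their digital future

AI Conversational Service

An omnichannel chatbot with built-in AI-driven, human-like, multilingual conversation

EasyDispatch Field Service Management

Real-time AI decision support for field service dispatching

Related Solutions

Online Education

Quickly build an online education platform

Narrowband HD Transcoding

Reduce bandwidth costs by up to 30%

Broadcast-Grade Live Streaming of Major Events

Stream major events live to a global audience with smooth, stutter-free playback

Live Commerce

Quickly and easily build a one-stop live shopping platform

Related Solutions

Alibaba Dchain for Supply Chain Planning

Build and manage an agile, intelligent, and cost-effective supply chain

Cloud Badge

An innovative digital credential service for event operations

Cloud POS Solution for Digital Stores

Integrate all operations into a single cloud POS system

Related Solutions

Metaverse

The metaverse is the next generation of the internet

Artificial Intelligence (AI) Acceleration

Use Alibaba Cloud GPU technology to accelerate AI-driven businesses as well as AI model training and inference

DevOps

Maximize your DevOps advantages quickly and securely

Related Solutions

Data Migration Solutions

Accelerate the migration of your data to Alibaba Cloud

Enterprise IT Governance

Build an efficient, controllable cloud environment on Alibaba Cloud

AIOps Based on Log Management

Step into an AIOps environment powered by an intelligent log management solution

Backup and Archiving

Data backup, data archiving, and disaster recovery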

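- Financial Services

-

Financial Services Hot

Accelerate innovation with Alibaba Cloud financial services

-

FinTech on the Cloud

Run your business in the cloud and improve customer satisfaction

-

Capital Markets and Securities

Secure, accurate, digital customer experiences for global capital markets

-

FinTech Cloud Database Solutions

Cloud-native database solutions built specifically for FinTech

-

Digital Lending

Use big data and AI to reduce credit risk and black/gray-market fraud

-

Foreign Exchange Trading

Build a fast, secure global foreign exchange trading platform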

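- New Retail

-

New Retail Solutions Hot

Transform traditional retail for the new retail era

-

E-Commerce Solutions Hot

Use cloud services to handle traffic fluctuations, scale business operations, and reduce costs

-

Live Commerce Hot

Quickly and easily build a one-stop live shopping platform

-

Omni-Domain Data Middle Platform

An all-domain solution for building, managing, and applying big data

-

AI Conversational Service

An omnichannel chatbot with built-in AI-driven, human-like, multilingual conversation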

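- Media

-

Media

Get your content ready for today's media market with a digital media journey

-

Narrowband HD Transcoding

Reduce bandwidth costs by up to 30%

-

Live Commerce Hot

Quickly and easily build a one-stop live shopping platform

-

Broadcast-Grade Live Streaming of Major Events Hot

Stream major events live to a global audience with smooth, stutter-free playback

-

Remote Rendering

Connect your on-premises render farm to the cloud with Alibaba Cloud Elastic High Performance Computing (E-HPC)

-

Personalized Content Recommendation

Build discovery services that help customers find the most relevant content

-

Media Archiving

Keep your media archives secure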

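- Telecommunications

-

Cloud Solutions for Telecommunications

Deliver consistent, full-lifecycle customer service through a unified, data-driven platform

-

Super App for Telecom Operators

Build a multifunctional telecom and digital lifestyle platform on DingTalk

-

Alibaba Cloud Drive

Store, share, and manage photos and files online

-

Customer Engagement Platform for Telecom Operators

Deliver a seamless omnichannel customer experience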

- ISV

-

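ISV Solutions for SMEs

Reliable IT services for independent software vendors serving small and medium-sized enterprises

-

Cloud Migration ISV Solutions

The fastest path to launching your new cloud business

-

Aruba EdgeConnect Enterprise on Alibaba Cloud

An advanced SD-WAN platform that delivers WAN connectivity and real-time optimization while reducing WAN costs

-

IBM QRadar SOAR on Alibaba Cloud

Fast incident response through automation and process standardization

-

IBM QRadar SIEM on Alibaba Cloud

Centralized visibility and intelligent security analytics for critical cybersecurity threats

-

IBM Managed File Transfer Solutions on Alibaba Cloud

High-volume, reliable, and highly secure enterprise file transfer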

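- Sports

-

Sports

Digitalize sports events with intelligent technology

-

Sports Live+

A low-cost, AI-based sports broadcasting service

-

Real-Time Media Gateway

Professional broadcast transcoding and signal distribution management

-

Real-Time Media Content Provider

Cloud-based ingestion, editing, and distribution of audio and video content

-

Venue Simulation

Simulate key operational tasks in a virtual venue

-

Cloud Badge

An innovative digital credential service for event operations

-

Smart Event Guide

An intelligent, interactive event guide

-

Cloud Gateway for Real-Time Video Transmission from Field Units

Easily manage bonded live streams from backpack units in the cloud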

- Advertising and Marketing

-

Digital Marketing Solutions

Strengthen your marketing with data

- Metaverse

-

Metaverse

The metaverse is the next generation of the internet

- AI

-

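Alibaba Cloud Generative AI Solutions Hot

Accelerate innovation and achieve new business results with generative AI

-

Tongyi Qianwen (Qwen) Hot

Alibaba Cloud's high-performance open-source large language models

-

AI Doc New

Easily unlock and extract knowledge from documents with AI

-

Automatic Speech Recognition New

Gain insights with an AI-driven speech-to-text service

-

Alibaba Cloud AI and Data Intelligence

Explore all of Alibaba Cloud's AI and data intelligence capabilities, new offers, and latest products

-

Artificial Intelligence (AI) Capability Center

This experience center provides a wide range of use cases and product documentation to help you get started with Alibaba Cloud AI products and explore your business data.

-

Artificial Intelligence (AI) Acceleration

Use Alibaba Cloud GPU technology to accelerate AI-driven businesses as well as AI model training and inference

-

Metaverse

The metaverse is the next generation of the internet

-

Personalized Content Recommendation

Build discovery services that help customers find the most relevant content

-

AI Conversational Service

An omnichannel chatbot with built-in AI-driven, human-like, multilingual conversation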

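- Data Migration Solutions

-

Data Migration Solutions

Accelerate the migration of your data to Alibaba Cloud

-

Landing Zone

Build a secure, easily scalable environment on Alibaba Cloud for an efficient and cost-effective journey to the cloud

-

Database Migration

Migrate to fully managed cloud databases

-

Oracle Database Migration

Smoothly migrate Oracle databases to cloud-native databases

-

Bring Your Own IP (BYOIP)

Bring your own public IP addresses to the cloud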

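- Security and Compliance

-

Cloud Security Hot

Protect your business, applications, data, infrastructure, and accounts with Alibaba Cloud's powerful security toolset

-

Database Security

Protect, back up, and restore your data assets in the cloud

-

MLPS 2.0 Compliance Hot

One-stop MLPS 2.0 compliance solution

-

Alibaba Cloud InCountry Service (ACIS)

Expand your business into China quickly and efficiently while complying with applicable local regulations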

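- Cloud Native

-

End-to-End Cloud-Native Application Management

Efficient, secure, and transparent management of CloudOps, DevOps, SecOps, AIOps, and FinOps

-

CloudOps

Build your cloud-native environment and manage clusters efficiently

-

DevOps

Maximize your DevOps advantages quickly and securely

-

SecOps

Implement fine-grained security controls

-

AIOps Based on Container Management

Improve O&M efficiency and overall system security

-

FinOps

Analyze your cloud consumption in real time and cut costs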

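- Data and Analytics

-

Data Lake on Alibaba Cloud

Store, manage, and analyze data of any scale and type in real time

-

AIOps Based on Log Management

Step into an AIOps environment powered by an intelligent log management solution

-

Big Data Consulting Services for Enterprise Data Technology

Help enterprises modernize their data and plan their digital future

-

Big Data Consulting Services for Retail

Help retailers quickly plan their digital journey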

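- Enterprise Services and Applications

-

Salesforce on Alibaba Cloud Hot

Bring the world-renowned CRM platform to China

-

Alibaba Cloud Drive

Store, share, and manage photos and files online

-

Red Hat OpenShift on Alibaba Cloud

Build, deploy, and manage highly available, highly reliable, and highly elastic applications

-

DevOps Solutions

Maximize your DevOps advantages quickly and securely

-

Business Middle Platform

Bring your procurement and sales onto a single enterprise-grade omnichannel digital platform

-

First-Party Data Middle Platform

A one-stop solution for building, managing, and applying big data within the enterprise

-

SAP Cloud Solutions

Help enterprises simplify their IT architecture, realize business value, and accelerate digital transformation

-

Alibaba Cloud InCountry Service (ACIS)

Expand your business into China quickly and efficiently while complying with applicable local regulations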

- IoT Solutions

-

IoT Solutions

Quickly collect, process, and analyze data generated by connected devices


-

Why Alibaba Cloud

-

Pricing

-

Products

-

Solutions

-

Marketplace

-

Developers

-

Partners

-

Documentation

-

Services

- Call Us (+1-833-732-2135)

-

Contact Sales

-

Bailian

-

Console
