A global database network (GDN) consists of multiple PolarDB clusters that are deployed in multiple regions within a country. Data is synchronized across all clusters in the GDN. If your service is deployed across multiple regions, you can configure GDN to ensure uninterrupted data access with low latency. This topic describes how to add a secondary cluster, remove a secondary cluster, switch a secondary cluster to the primary cluster, and recreate a secondary cluster.

Prerequisites

A GDN is created. For more information, see Create a GDN.

Precautions

The clusters in the GDN must run one of the following versions:

PolarDB for MySQL 8.0.2

PolarDB for MySQL 8.0.1 with revision version 8.0.1.1.17 or later

PolarDB for MySQL 5.7 with revision version 5.7.1.0.21 or later

PolarDB for MySQL 5.6 with revision version 5.6.1.0.32 or later

The primary cluster and secondary clusters must have the same database engine version, which can be MySQL 8.0, MySQL 5.7, or MySQL 5.6.

You can only create secondary clusters. You cannot specify existing clusters as secondary clusters.

When you create a secondary cluster, we recommend that you configure the same node specifications as the primary cluster to ensure low latency during data replication. You can specify the number of read-only nodes based on local read requests to the secondary cluster.

A GDN consists of one primary cluster and up to four secondary clusters. For more information about limits on their regions, see Region mappings between the primary and secondary clusters.

Note: To add more secondary clusters, go to Quota Center and click Apply in the Actions column for GDN cluster upper limit adjustment.

A cluster belongs to only one GDN.

You cannot specify the node specifications of 2 cores and 4 GB of memory or 2 cores and 8 GB of memory for the clusters in the GDN.

The GDN uses the physical replication mechanism. You do not need to enable binary logging for the GDN.

If you enabled binary logging for the GDN, make sure that the loose_polar_log_bin parameter is set to the same value across the primary and secondary clusters. Otherwise, binary log inconsistency may occur in the event of a primary/secondary cluster switchover.

To add a secondary cluster across regions, you can join DingTalk group 30245017864 to obtain technical support.
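The version, capacity, and binary logging precautions above can be expressed as a small pre-check. The following Python sketch is illustrative only: the function names and input shapes are assumptions made for this example and are not part of any PolarDB SDK. It assumes that revision versions are dot-separated integers such as 8.0.1.1.17 and that you have already collected the loose_polar_log_bin value of each cluster yourself.

```python
# Minimum revision versions from the precautions above, keyed by (major, minor).
# Any 8.0.2 revision compares greater than 8.0.1.1.17, so it passes the 8.0 rule.
MIN_REVISION = {
    (8, 0): (8, 0, 1, 1, 17),
    (5, 7): (5, 7, 1, 0, 21),
    (5, 6): (5, 6, 1, 0, 32),
}
MAX_SECONDARY_CLUSTERS = 4  # default limit; a higher quota can be requested


def parse_version(text):
    """Turn a string such as '8.0.1.1.17' into (8, 0, 1, 1, 17)."""
    return tuple(int(part) for part in text.split("."))


def revision_supported(revision):
    """Return True if the revision version meets the minimum for its engine."""
    version = parse_version(revision)
    minimum = MIN_REVISION.get(version[:2])
    return minimum is not None and version >= minimum


def can_add_secondary(current_secondaries, limit=MAX_SECONDARY_CLUSTERS):
    """Return True if the GDN still has room for another secondary cluster."""
    return current_secondaries < limit


def log_bin_consistent(values_by_cluster):
    """Return True if loose_polar_log_bin has the same value on every cluster.

    values_by_cluster maps a cluster label (hypothetical) to the parameter value.
    """
    return len(set(values_by_cluster.values())) <= 1
```

For example, revision_supported("5.7.1.0.20") returns False because the revision is below 5.7.1.0.21, and log_bin_consistent({"primary": "ON", "secondary-1": "OFF"}) returns False because the values differ. The "ON" and "OFF" values are used here only as sample inputs.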

Pricing

You are not charged for the traffic that is generated during cross-region data transmission within a GDN. You are charged only for the use of PolarDB clusters in the GDN. For more information about the pricing rules of PolarDB clusters, see Billable items overview.

Add a secondary cluster

Log on to the PolarDB console.

In the left-side navigation pane, click Global Database Network.

Find the GDN to which you want to add a secondary cluster and click Add Secondary Cluster in the Actions column.

Note: You cannot specify existing clusters as secondary clusters.

On the buy page, select the Subscription, Pay-as-you-go, or Serverless billing method.

Configure the parameters. The following table describes the parameters.

Parameter

Description

Region

The region where you want to create the secondary cluster. You cannot change the region after the cluster is created.

Note: You can select only a region in the same country as the primary cluster. For information about the region mappings between the primary and secondary clusters, see Region mappings between the primary and secondary clusters.

Make sure that your PolarDB cluster and the Elastic Compute Service (ECS) instance to which you want to connect are deployed in the same region. Otherwise, they cannot communicate over an internal network.

Creation Method

The type of cluster that you want to create. Select Create Secondary Cluster.

GDN

The GDN in which you want to create the secondary cluster.

Note: By default, the GDN that you select before you create the secondary cluster is used.

Database Engine

The database engine of the secondary cluster, which must be the same as the database engine of the primary cluster. Valid values:

MySQL 8.0

MySQL 5.7

MySQL 5.6

Database Edition

By default, Enterprise Edition is selected.

Edition

Only Cluster Edition (Recommended) is supported.

Note: This parameter is available only if you select the Subscription or Pay-as-you-go billing method.

Specification

You can select the General-purpose or Dedicated specification.

Dedicated: The cluster does not share allocated compute resources such as CPUs with other clusters on the same server. This improves the reliability and stability of the cluster.

General-purpose: Idle compute resources such as CPUs are shared among clusters on the same server for cost-effectiveness.

For more information about the comparison between the two types of specifications, see Comparison between general-purpose and dedicated compute nodes.

Note: This parameter is available only if you select the Subscription or Pay-as-you-go billing method.

CPU Architecture

By default, X86 is selected.

Note: This parameter is available only if you select the Subscription or Pay-as-you-go billing method.

Nodes

By default, a cluster of Cluster Edition (Recommended) consists of one primary node and one read-only node. The two nodes have the same specifications. You do not need to configure this parameter.

Note: If the primary node fails, the system promotes the read-only node to the new primary node and creates another read-only node. For more information about read-only nodes, see the "Architecture" section in Editions.

This parameter is available only if you select the Subscription or Pay-as-you-go billing method.

Primary Zone

The primary zone where you want to deploy the cluster.

A zone is an independent geographical location in a region. All zones in a region provide the same level of service performance.

You can deploy the PolarDB cluster and the ECS instance in the same zone.

You only need to select the primary zone. The system automatically selects a secondary zone.

Network Type

This parameter is automatically set to VPC. You do not need to configure this parameter.

Make sure that the PolarDB cluster is created in the same virtual private cloud (VPC) as the ECS instance to which you want to connect. Otherwise, the cluster and the ECS instance cannot communicate over the internal network.

If an existing VPC meets your network requirements, select the VPC. For example, if you created an ECS instance and the VPC in which the ECS instance resides meets your network requirements, select the VPC.

If you did not create a VPC that meets your network requirements, you must create a VPC. For more information, see Create and manage a VPC.

Enable Hot Standby Cluster

PolarDB provides multiple high availability modes. After you enable the hot standby storage cluster feature for a PolarDB cluster, a hot standby storage cluster is created in the secondary zone of the region in which the PolarDB cluster resides or in a different data center in the same zone. The hot standby storage cluster has independent storage resources. Whether the hot standby storage cluster has independent compute resources varies based on the high availability mode. When the PolarDB cluster in the primary zone fails, the hot standby storage cluster immediately takes over and handles read and write operations and storage tasks.

Note: For more information about the hot standby storage cluster and related solutions, see High availability modes (hot standby clusters).

Rules for changing high availability modes:

You cannot directly change the high availability mode of a cluster from Double Zones (Hot Standby Storage Cluster Enabled) or Double Zones (Hot Standby Storage and Compute Clusters Enabled) to Single Zone (Hot Standby Storage Cluster Disabled).

To make this change, we recommend that you purchase a new cluster and select the Single Zone (Hot Standby Storage Cluster Disabled) high availability mode for it. Then, migrate the existing cluster to the new cluster by using Data Transmission Service (DTS). For information about how to migrate an existing cluster to a new cluster, see Migrate data between PolarDB for MySQL clusters.

You can select the Three Zones high availability mode only when you purchase a new cluster. You cannot change the high availability mode of a cluster from Three Zones to other high availability modes and vice versa.

You can manually change the high availability mode of a cluster from Single Zone (Hot Standby Storage Cluster Disabled) to a different high availability mode. For more information, see High availability modes (hot standby clusters).
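The rules for changing high availability modes can be summarized as a lookup. The following Python sketch is a reading of the rules above, not an official API: the HAMode enum and the can_change_directly function are hypothetical names. Where the rules above do not say whether a change is possible (for example, between the two Double Zones modes), the function returns None instead of guessing.

```python
from enum import Enum


class HAMode(Enum):
    SINGLE_ZONE = "Single Zone (Hot Standby Storage Cluster Disabled)"
    DOUBLE_ZONES_STORAGE = "Double Zones (Hot Standby Storage Cluster Enabled)"
    DOUBLE_ZONES_STORAGE_COMPUTE = (
        "Double Zones (Hot Standby Storage and Compute Clusters Enabled)"
    )
    THREE_ZONES = "Three Zones"


def can_change_directly(current, target):
    """Return True or False per the rules above, or None where they are silent."""
    if current == target:
        return False  # nothing to change
    if HAMode.THREE_ZONES in (current, target):
        return False  # Three Zones can be selected only when purchasing a cluster
    if target == HAMode.SINGLE_ZONE:
        # Double Zones -> Single Zone needs a new cluster plus a DTS migration.
        return False
    if current == HAMode.SINGLE_ZONE:
        return True  # Single Zone can be changed manually to a Double Zones mode
    return None  # Double Zones <-> Double Zones is not covered by the rules above
```

A caller would treat False as "purchase a new cluster and migrate" and None as "check the high availability documentation before you proceed".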

Filter

The specifications of compute nodes.

Note: For more information about the specifications of compute nodes, see Compute node specifications of PolarDB for MySQL Enterprise Edition.

This parameter is available only if you select the Subscription or Pay-as-you-go billing method.

Minimum Read-Only Nodes

The minimum number of read-only nodes that can be added. To ensure high availability of the serverless cluster, we recommend that you set the minimum number of read-only nodes to 1. Valid values: 0 to 7.

Note: This parameter is available only if you select the Serverless billing method.

Maximum Read-only Nodes

The maximum number of read-only nodes that can be added. The number of read-only nodes automatically increases or decreases based on your workloads. Valid values: 0 to 7.

Note: This parameter is available only if you select the Serverless billing method.

Minimum PCUs per Node

The minimum number of PCUs per node in the cluster. Serverless PolarDB clusters are billed and scaled by using PolarDB Capacity Unit (PCU) as a unit of measurement. One PCU is approximately equal to 1 core and 2 GB of memory. The number of PCUs is dynamically adjusted within the specified range based on the workload. Valid values: 1 PCU to 31 PCUs.

Note: This parameter is available only if you select the Serverless billing method.

Maximum PCUs per Node

The maximum number of PCUs per node in the cluster. Serverless PolarDB clusters are billed and scaled by using PCU as a unit of measurement. One PCU is approximately equal to 1 core and 2 GB of memory. The number of PCUs is dynamically adjusted within the specified range based on the workload. Valid values: 1 PCU to 32 PCUs.

Note: This parameter is available only if you select the Serverless billing method.
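The four serverless scaling parameters above have fixed ranges, so a set of values can be checked before you enter them on the buy page. The following Python sketch is a hypothetical helper, not part of the console or any SDK. The ranges come from the parameter descriptions above. The checks that a minimum must not exceed its maximum are an assumption, because the descriptions imply them but do not state them.

```python
def serverless_scaling_problems(min_ro_nodes, max_ro_nodes, min_pcus, max_pcus):
    """Return a list of problems; an empty list means all values are in range."""
    problems = []
    if not 0 <= min_ro_nodes <= 7:
        problems.append("Minimum Read-Only Nodes must be 0 to 7")
    if not 0 <= max_ro_nodes <= 7:
        problems.append("Maximum Read-only Nodes must be 0 to 7")
    if not 1 <= min_pcus <= 31:
        problems.append("Minimum PCUs per Node must be 1 to 31")
    if not 1 <= max_pcus <= 32:
        problems.append("Maximum PCUs per Node must be 1 to 32")
    # Assumption: a minimum larger than its maximum is treated as invalid.
    if min_ro_nodes > max_ro_nodes:
        problems.append("minimum read-only nodes exceeds the maximum")
    if min_pcus > max_pcus:
        problems.append("minimum PCUs exceeds the maximum")
    return problems


def approximate_resources(pcus):
    """One PCU is approximately 1 core and 2 GB of memory."""
    cores = pcus
    memory_gb = pcus * 2
    return cores, memory_gb
```

For example, a node that scales up to 16 PCUs corresponds to roughly 16 cores and 32 GB of memory, which is what approximate_resources(16) returns.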

PolarProxy Type

PolarDB provides two PolarProxy editions: Standard Enterprise Edition and Dedicated Enterprise Edition.

General-purpose clusters support Standard Enterprise Edition. This edition uses shared CPU resources and offers intelligent elastic scaling within seconds based on business workloads.

Dedicated clusters support Dedicated Enterprise Edition. This edition uses dedicated CPU resources and provides better stability.

Note: The PolarProxy enterprise editions are provided free of charge but may be charged in the future.

Enable No-activity Suspension

Specifies whether to enable the no-activity suspension feature. By default, the no-activity suspension feature is disabled.

Note: This parameter is available only if you select the Serverless billing method.

Storage Type

PolarDB provides two storage types: PSL5 and PSL4.

PSL5: the storage type supported by historical versions of PolarDB. This was the default storage type for PolarDB clusters purchased before June 7, 2022. PSL5 delivers higher performance, reliability, and availability.

PSL4: a new storage type for PolarDB. This type uses the Smart-SSD technology developed in-house by Alibaba Cloud to compress and decompress data that is stored on SSD disks. PSL4 can reduce the storage costs of data while maintaining high disk performance.

Note: You cannot change the storage type of an existing cluster. To use a different storage type, we recommend that you purchase a new cluster with the desired storage type and then migrate data from the existing cluster to the new cluster.

For more information about differences between the two storage types, see How do I select between PSL4 and PSL5?.

Storage Engine

Valid values: InnoDB and InnoDB & X-Engine.

InnoDB: deploys only the InnoDB storage engine.

InnoDB & X-Engine: deploys InnoDB and X-Engine. If you select this option, you must specify the X-Engine memory usage ratio. For more information, see Overview.

Note: This parameter is available only if you select the Subscription or Pay-as-you-go billing method.

Storage Billing Method

PolarDB supports the Pay-as-you-go and Subscription storage billing methods.

Pay-as-you-go: The storage capacity is provided based on a serverless architecture. You do not need to specify the storage capacity when you purchase the cluster. The storage capacity of the cluster can be automatically scaled up as the volume of data increases. You are charged for the actual data volume. For more information, see Pay-as-you-go.

Subscription: You must purchase a specific amount of storage capacity when you create a cluster. For more information, see Subscription.

Note: If you set the Product Type parameter to Subscription, you can set the Storage Billing Method parameter to Pay-as-you-go or Subscription. If you set the Product Type parameter to Pay-as-you-go, the Storage Billing Method parameter is unavailable, and you are charged for the storage based on the pay-as-you-go billing method.

Storage Cost

You do not need to configure the Storage Cost parameter. You are charged for the amount of storage capacity that is used by data on an hourly basis. For more information, see Pay-as-you-go.

Note: This parameter is available only if you select the Subscription or Pay-as-you-go billing method.

Cluster Name

Valid values: Auto-generate and Custom.

If you select Auto-generate, the system automatically generates a cluster name after the cluster is created. You can change the automatically generated cluster name.

If you select Custom, you must enter a cluster name. The cluster name must meet the following requirements:

The name cannot start with http:// or https://.

The name must be 2 to 256 characters in length.

The name must start with a letter and can contain digits, periods (.), underscores (_), and hyphens (-).
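The naming requirements above can be checked before you submit a custom cluster name. The following Python sketch uses a hypothetical function name and makes one assumption that the requirements do not state outright: letters, digits, periods, underscores, and hyphens are treated as the complete set of allowed characters.

```python
def cluster_name_problems(name):
    """Return a list of rule violations; an empty list means the name passes."""
    problems = []
    if name.startswith(("http://", "https://")):
        problems.append("name cannot start with http:// or https://")
    if not 2 <= len(name) <= 256:
        problems.append("name must be 2 to 256 characters in length")
    if not name[:1].isalpha():
        problems.append("name must start with a letter")
    # Assumption: any character outside this set is rejected.
    if any(not (ch.isalnum() or ch in "._-") for ch in name):
        problems.append("name contains a character outside letters, digits, . _ -")
    return problems
```

A name such as gdn-secondary_01 passes all four checks, whereas a one-character name or a name that begins with a digit is reported with the rule that it violates.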

Resource Group

Select a resource group from the drop-down list. For more information, see Create a resource group.

Note: A resource group is a group of resources that belong to an Alibaba Cloud account. Resource groups allow you to manage resources in a centralized manner. A resource belongs to only one resource group. For more information, see Classify resources into resource groups and grant permissions on the resource groups.

Enable Binary Logging

Specifies whether to enable binary logging. For more information, see Enable binary logging.

Note: This parameter is available only if you select the Subscription or Pay-as-you-go billing method.

Release Cluster

The backup retention policy that is used when the cluster is removed or released. The default value is Retain Last Automatic Backup Before Release (Default).

Retain Last Automatic Backup Before Release (Default): The system retains the last backup when you remove or release the cluster.

Retain All Backups: The system retains all backups when you remove or release the cluster.

Delete All Backups (Unrecoverable): The system does not retain backups when you remove or release the cluster.

Note: You may be charged for the backups that are retained after you remove or release a cluster. For more information, see Release a cluster.

This parameter is available only if you select the Subscription or Pay-as-you-go billing method.

Configure the Quantity and Duration parameters for the cluster and specify whether to enable Auto-renewal.

Note: The Duration parameter and the Auto-renewal option apply only if you set the Billing Method parameter to Subscription.

Select the terms of service. Click Buy Now.

After you complete the payment, wait 10 to 15 minutes for the cluster to be created. Then, you can view the cluster on the Clusters page.

Note: If specific nodes in the cluster are in the Creating state, the cluster is still being created and is unavailable. The cluster is available only when it is in the Running state.

Make sure that you select the region where the cluster is deployed. Otherwise, you cannot view the cluster.

If you want to store a large volume of data, we recommend that you purchase PolarDB storage plans. Storage plans provide storage capacity at discounted rates. Larger storage plans provide more storage at lower costs. For more information, see Combination with storage plans.
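Because the new secondary cluster takes 10 to 15 minutes to reach the Running state, automation that depends on it typically polls the cluster status instead of assuming a fixed delay. The following Python sketch shows one way to do that. The get_status callable is hypothetical: it stands in for whatever lookup you use to read the cluster state and is expected to return a string such as "Creating" or "Running". The 20-minute timeout and 30-second interval are arbitrary choices for this example.

```python
import time


def wait_until_running(get_status, timeout_s=20 * 60, interval_s=30,
                       sleep=time.sleep, clock=time.monotonic):
    """Poll get_status() until it returns 'Running' or the timeout expires.

    Returns True if the cluster reached the Running state, False on timeout.
    sleep and clock are injectable so that the loop can be tested quickly.
    """
    deadline = clock() + timeout_s
    while True:
        if get_status() == "Running":
            return True
        if clock() >= deadline:
            return False
        sleep(interval_s)
```

The timeout is deliberately longer than the documented 10 to 15 minutes so that a slightly slow creation is not reported as a failure.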

Remove a secondary cluster

Log on to the PolarDB console.

In the left-side navigation pane, click Global Database Network.

Find the GDN that you want to manage and click the ID or name of the GDN in the GDN ID/Name column.

In the Clusters section, find the secondary cluster that you want to remove and click Remove in the Actions column.

Note: Removing a secondary cluster takes approximately 5 minutes to complete.

During the removal process, the endpoints of all clusters in the GDN, including the secondary cluster that is being removed, are available. You can continue to use the endpoints to access the databases.

You can remove only secondary clusters from a GDN. You cannot remove the primary cluster from a GDN.

After you remove a secondary cluster from a GDN, the cluster stops replicating data from the primary cluster. The system switches the cluster to read/write mode.

After you remove a secondary cluster from a GDN, you cannot re-add the cluster to the GDN as a secondary cluster. Exercise caution when you perform this operation.

In the message that appears, click OK.

Switch a secondary cluster to the primary cluster

Log on to the PolarDB console.

In the left-side navigation pane, click Global Database Network.

Find the GDN that you want to manage and click the ID or name of the GDN in the GDN ID/Name column.

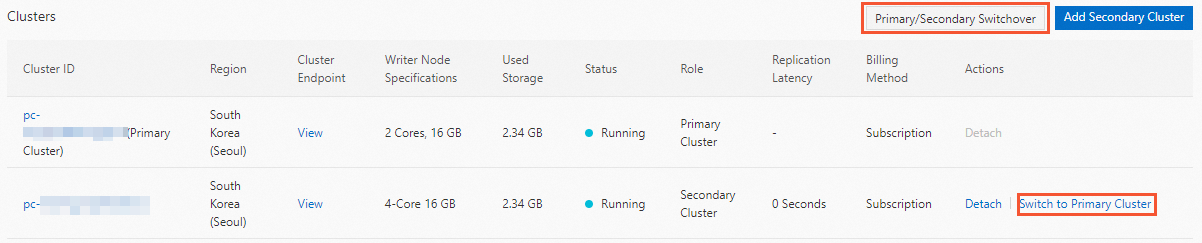

In the Clusters section, find the secondary cluster that you want to promote and click Switch to Primary Cluster in the Actions column. Alternatively, click Primary/Secondary Switchover in the upper-right corner of the Clusters section.

In the Primary/Secondary Switchover dialog box, enter the ID of the secondary cluster in the New Primary Cluster field and click OK.

Note: If you applied for a public endpoint for the GDN, make sure that the new primary cluster also has a public cluster endpoint. Otherwise, applications cannot access the databases. For more information, see View the endpoint and port number.

During the primary/secondary cluster switchover process, services may be interrupted for up to 160 seconds. We recommend that you perform this operation during off-peak hours and make sure that your application is configured to automatically reconnect to the cluster.

Forcible switchover is in the canary release phase. To apply for a trial, go to Quota Center. Click Apply in the Actions column corresponding to the PolarDB GDN master-slave forced switching trial quota name. After the trial application is approved, you can turn on Forced Switchover in the Primary/Secondary Switchover dialog box.

You cannot specify a new primary cluster for a forcible switchover. By default, the secondary cluster that has the largest log sequence number (LSN) is forcibly promoted as the new primary cluster.

Data may be lost during a forcible switchover. After a switchover, the original primary cluster is automatically removed. Exercise caution when you perform this operation.
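Because a switchover can interrupt services for up to 160 seconds, the application should retry failed operations for at least that long. The following Python sketch shows one generic retry pattern with exponential backoff. It is not tied to a specific database driver: the operation callable is hypothetical and is expected to open a fresh connection and run the work, and the retry_on tuple should list the exception classes that your driver raises on connection loss. The 180-second budget is an assumption chosen to exceed the documented 160 seconds.

```python
import time


def call_with_reconnect(operation, max_wait_s=180.0, base_delay_s=1.0,
                        max_delay_s=15.0, retry_on=(ConnectionError,),
                        sleep=time.sleep, clock=time.monotonic):
    """Run operation(), retrying on connection errors until max_wait_s elapses."""
    deadline = clock() + max_wait_s
    delay = base_delay_s
    while True:
        try:
            return operation()
        except retry_on:
            if clock() + delay > deadline:
                raise  # the outage outlasted the retry budget; surface the error
            sleep(delay)
            delay = min(delay * 2, max_delay_s)  # back off, capped at max_delay_s
```

Capping the delay keeps the application from waiting much longer than necessary after the new primary cluster becomes available. This pattern does not protect against the data loss that a forcible switchover may cause.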

Recreate a secondary cluster

You may need to recreate a secondary cluster in a GDN in the following scenarios:

The secondary cluster cannot recover from a failure. You must recreate the secondary cluster to deliver the service.

The underlying configuration or environment of the secondary cluster needs to be updated.

The data of the secondary cluster is not synchronized with the data of the primary cluster for a long period of time.

Recreating a secondary cluster is in the canary release phase. To apply for a trial, go to Quota Center. Click Apply in the Actions column corresponding to the PolarDB GDN re-hitch trial from cluster quota name.

During the recreation process, the secondary cluster becomes unavailable. Exercise caution when you recreate a secondary cluster.

Log on to the PolarDB console.

In the left-side navigation pane, click Global Database Network.

Find the GDN that you want to manage and click the ID or name of the GDN in the GDN ID/Name column.

In the Clusters section, click the icon in the Actions column and select Recreate Secondary Cluster.

In the Recreate Secondary Cluster message, click OK.