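To avoid losing or corrupting data through accidental deletion, modification, or overwriting, you can back up the files on your local disks regularly to improve data security. This topic describes three common methods: periodic backup with Cloud Backup, periodic backup to OSS, and periodic backup to a cloud disk or NAS. This topic does not apply to backing up self-managed databases.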

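This topic applies only to backing up files on local disks. If a local disk stores a database that you need to back up, see Back up self-managed databases on ECS.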

Method 1: Periodic backup with Cloud Backup

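| Scenario | Limitations | Features | Fees |
| --- | --- | --- | --- |
| Cloud Backup can periodically back up files or directories on ECS instances (for example, data on local disks or self-managed Oracle, MySQL, or SQL Server databases) and restore the data when needed. It suits scenarios that require a highly reliable backup solution. For more information, see Why choose Cloud Backup. | | | Charges include a file backup software usage fee and a storage capacity fee, among others. For billing details, see ECS file backup fees. |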

Procedure

Make preparations.

Make sure that Cloud Backup is available in the region where the local disk resides. For the supported regions, see Supported regions.

Make sure that Cloud Assistant is installed on the instance to which the local disk belongs.

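Important: If the instance was purchased after December 1, 2017, the Cloud Assistant client is preinstalled and you do not need to install it. Otherwise, install the Cloud Assistant Agent yourself.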

Log on to the Cloud Backup console and select the region where the local disk resides.

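In the left-side navigation pane, choose …, and then on the ECS Instances tab, find the instance to which the local disk belongs and click Backup in the Actions column.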

On the Create Backup Plan page, configure the plan as prompted and click OK.

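Pay attention to the following settings. Configure the other settings as needed; for their descriptions, see Create a backup plan to periodically back up ECS files.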

Backup Directory Rule: select Specified Directory.

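Backup File Path: enter the absolute paths of the local disk data that you want to back up. Multiple paths are supported. For the exact rules, see the on-screen instructions.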

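Backup Policy: specifies the backup time, backup interval, retention period, and similar settings. If no backup policy exists yet, create one first.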

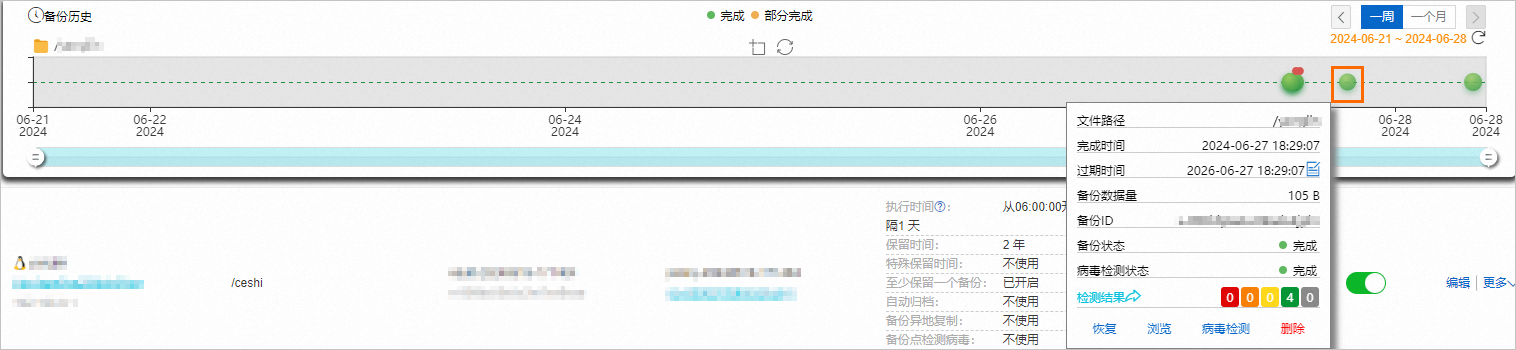

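When the scheduled backup time arrives, the system starts the backup job. When the job status is Success, the backup for that day is complete. You can view the backup points in the backup history.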


Method 2: Periodic backup to OSS

Use ossutil, crontab, and an automation script that you write to back up local disk data to OSS periodically.

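| Scenario | Features | Fees |
| --- | --- | --- |
| Large-scale data backup, especially when a low-cost, highly reliable storage solution is needed. For more OSS features, see OSS advantages. | Requires writing a script | OSS storage fees are charged. For billing details, see Storage fees. |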

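This solution is a minimal example that shows the basic approach. It has limitations, and you need to refine and extend it for your own business.

For example, it performs a full backup every time, so it occupies more and more storage space over time, and packing an entire directory into a single ZIP file can reduce backup speed and storage efficiency. In production, add strategies that suit your workload, for example:

Incremental or differential backup: back up only the data that has changed, either since the last backup of any kind (incremental) or since the last full backup (differential). This uses storage more efficiently and speeds up backups.

Chunked backup: split the data set into smaller chunks, or group it by directory structure, file type, or other logic, and back up each group separately.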

Procedure

Make preparations.

OSS is activated and an OSS bucket has been created. For more information, see Create buckets.

You have obtained the OSS bucket name, the OSS endpoint, and the storage path of the local disk data that you want to back up.

Log on to the ECS instance.

Install ossutil and configure access credentials.

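Important: Downloading ossutil requires public network access on the ECS instance to which the local disk belongs. For details, see How do I enable public network access for an ECS instance?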

Install ossutil.

```shell
sudo yum install unzip -y
sudo -v ; curl https://gosspublic.alicdn.com/ossutil/install.sh | sudo bash
```

Configure the access credentials for ossutil.

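Create the .ossutilconfig file in the user's home directory and configure the credentials. The following example writes it to /root, the home directory of root:

```shell
sudo -i  # Switch to root. If the current user cannot use sudo, log in or elevate privileges another way.
cat <<EOF > /root/.ossutilconfig
[Credentials]
language=EN
endpoint=YourEndpoint
accessKeyID=YourAccessKeyId
accessKeySecret=YourAccessKeySecret
EOF
```

Replace YourEndpoint, YourAccessKeyId, and YourAccessKeySecret with your actual values.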

Set up scheduled backups.

Install a compression utility (this example uses zip).

```shell
sudo yum install zip
```

Write a backup script (this example assumes it is saved as /home/backup_to_oss.sh).

Grant the script execute permission and test it.

```shell
sudo chmod +x /home/backup_to_oss.sh
sudo /home/backup_to_oss.sh
```

Make sure that the script runs without errors and that the data is uploaded to OSS.

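Run crontab -e to open the crontab editor, and add a line that runs your backup script on a schedule. Use the account whose home directory contains .ossutilconfig (root in this example), because ossutil reads that file from the home directory of the user running it. For example, to run the script at 02:00 every day:

```
0 2 * * * /home/backup_to_oss.sh
```

Replace /home/backup_to_oss.sh with the actual path of the script.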
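If you prefer to add the schedule without opening an editor (for example, from a provisioning script), the entry can also be installed with `crontab -l` and `crontab -`. The sketch below uses a hypothetical helper name, `add_cron_entry`. It skips the entry if an identical line already exists, so running it twice does not schedule the backup twice.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append ENTRY to the current user's crontab unless an
# identical line is already present (idempotent; safe to run repeatedly).
#
# Usage: add_cron_entry "0 2 * * * /home/backup_to_oss.sh"
add_cron_entry() {
    local entry="$1" current
    # crontab -l fails when the user has no crontab yet; treat that as empty.
    current="$(crontab -l 2>/dev/null)" || current=""
    # -x: match the whole line; -F: treat "*" literally, not as a pattern.
    if printf '%s\n' "$current" | grep -qxF -- "$entry"; then
        return 0
    fi
    {
        # Keep the existing entries, then add the new one.
        if [ -n "$current" ]; then printf '%s\n' "$current"; fi
        printf '%s\n' "$entry"
    } | crontab -
}
```

For example, `add_cron_entry '0 2 * * * /home/backup_to_oss.sh >> /var/log/backup_to_oss.log 2>&1'` also appends each run's output to a log file. The log path is only an example. Keeping the output makes failed runs easier to diagnose.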

Download backed-up data

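You can download backed-up data from OSS by using the OSS console, the ossutil command-line tool, or other tools. For more information, see Simple download.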

Method 3: Periodic backup to a cloud disk or NAS on the same instance

Periodically back up local disk data as ZIP packages to a specified path on a cloud disk or an Apsara File Storage NAS file system.

| Scenario | Features | Fees |
| --- | --- | --- |
| | Requires writing a script | |

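This solution is a minimal example that shows the basic approach. It has limitations, and you need to refine and extend it for your own business.

For example, it performs a full backup every time, so it occupies more and more storage space over time, and packing an entire directory into a single ZIP file can reduce backup speed and storage efficiency. In production, add strategies that suit your workload, for example:

Incremental or differential backup: back up only the data that has changed, either since the last backup of any kind (incremental) or since the last full backup (differential). This uses storage more efficiently and speeds up backups.

Chunked backup: split the data set into smaller chunks, or group it by directory structure, file type, or other logic, and back up each group separately.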

Procedure

Make preparations.

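A cloud disk (data disk) has been created, attached, and initialized for the instance to which the local disk belongs, or a NAS file system has been mounted on that instance.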

For more information, see Guide to creating and using cloud disks or Create a NAS file system and mount it to ECS.

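You have obtained the mount path of the cloud disk or NAS file system and the storage path of the local disk data that you want to back up.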
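Because this method writes to a mount path, it helps to confirm that the path is actually mounted before each run. If the mount is missing (for example, because it was not persisted in /etc/fstab and the instance was restarted), the backup silently lands in an ordinary directory on the system disk. A minimal pre-flight check follows, assuming the `mountpoint` command from util-linux is available. The helper name `require_mount` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: fail unless TARGET is a mount point, so a backup is
# never written to an empty directory on the system disk by mistake.
# Assumes the mountpoint command (util-linux) is installed.
#
# Usage: require_mount /mnt/backup || exit 1
require_mount() {
    local target="$1"
    if ! mountpoint -q "$target"; then
        echo "backup target $target is not a mount point; aborting" >&2
        return 1
    fi
}
```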

Set up scheduled backups.

Log on to the ECS instance.

Install zip (the following example uses Alibaba Cloud Linux).

```shell
sudo yum install zip
```

Write a backup script (this example assumes the path /home/backup_script.sh).

Run the following command to create the script, and then save it.

```shell
vim /home/backup_script.sh
```

Save the script and grant it execute permission.

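```shell
sudo chmod +x /home/backup_script.sh
```

Replace /home/backup_script.sh with the actual path of the script.

Run crontab -e to open the crontab editor, and add a line that runs your backup script on a schedule. For example, to run the script at 02:00 every day:

```
0 2 * * * /home/backup_script.sh
```

Replace /home/backup_script.sh with the actual path of the script.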

Download backed-up data

Data backed up to a cloud disk: see Upload or download files.

Data backed up to NAS: see Migrate NAS data to on-premises storage.

Related operations

Migrate local disk data to another ECS instance

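You can migrate all data on one or more local-disk instances to other ECS instances in one click and store it on the cloud disks of those instances, which gives you a complete backup of the local-disk instance data. For more information, see Migrate a source server to a destination instance.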

Handle a damaged local disk

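If a local disk is damaged, Alibaba Cloud triggers a system event and promptly notifies you of the event, the recommended countermeasures, and the event cycle. You can then perform O&M based on the scenario. For more information, see O&M scenarios and system events for instances with local disks.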