Knative on Service Mesh (ASM) provides the Knative Pod Autoscaler (KPA), an out-of-the-box feature that scales the pods of your application to match incoming demand. If traffic fluctuations cause unstable service performance or wasted resources, you can enable autoscaling of pods based on the number of requests. KPA monitors real-time traffic and dynamically adjusts the number of service instances. This maintains service quality and user experience during peak hours and minimizes idle resources during off-peak hours, which improves overall system performance and reduces costs.

Prerequisites

A Knative Service is created by using Knative on ASM. For more information, see Use Knative on ASM to deploy a serverless application.

In this topic, the default domain name example.com is used to demonstrate how to enable autoscaling of pods based on the number of requests. If you need to use a custom domain name, see Set a custom domain name in Knative on ASM.

Introduction to autoscaling

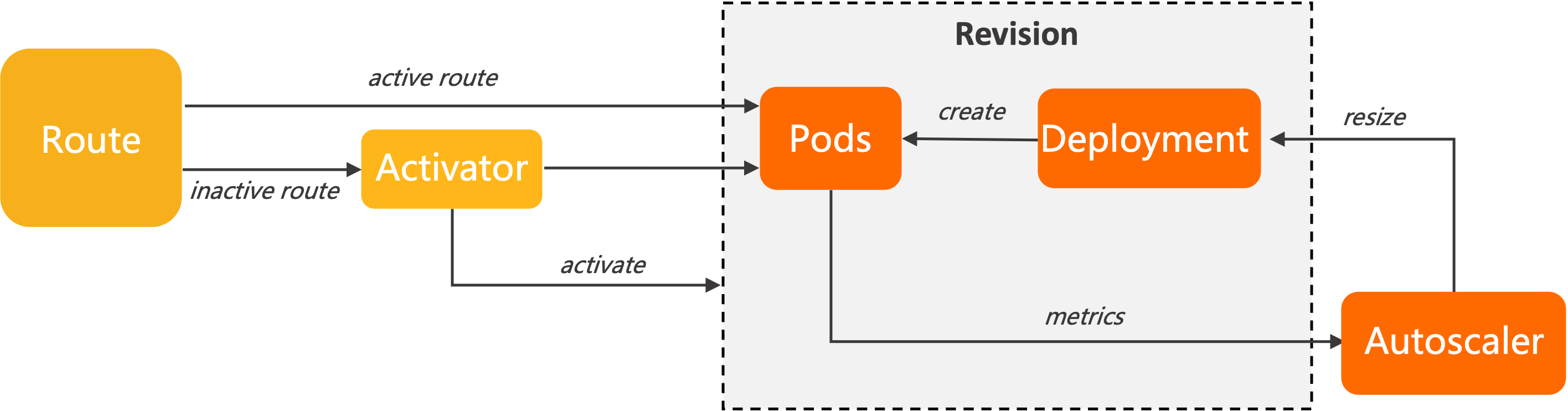

Knative Serving adds the Queue Proxy container to each pod. The Queue Proxy container sends concurrency metrics of the application containers to KPA. After KPA receives the metrics, KPA automatically adjusts the number of pods provisioned for a Deployment based on the number of concurrent requests and related autoscaling algorithms.

Concurrency and QPS

Concurrency refers to the number of requests received by a pod at the same time. Queries per second (QPS) refers to the number of requests that a pod can handle per second. QPS also shows the maximum throughput of a pod.

An increase in concurrency does not necessarily increase the QPS. Under heavy load, increasing the concurrency may decrease the QPS. This is because the system is overloaded and CPU and memory usage is high, which degrades performance and increases response latency.
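The relationship between concurrency and QPS can be made concrete with Little's law, which this topic does not state and which is used here as an assumption: in steady state, throughput is roughly the concurrency divided by the average latency. The sketch below uses made-up latency figures to show how QPS can fall while concurrency rises. The function name and all numbers are illustrative only.

```python
def throughput_qps(concurrency: int, avg_latency_seconds: float) -> float:
    """Approximate steady-state requests per second (Little's law, an assumption here)."""
    if avg_latency_seconds <= 0:
        raise ValueError("average latency must be positive")
    return concurrency / avg_latency_seconds


# Hypothetical measurements: once the pod is overloaded, latency grows
# faster than concurrency, so throughput drops.
light_load = throughput_qps(concurrency=10, avg_latency_seconds=0.25)
heavy_load = throughput_qps(concurrency=50, avg_latency_seconds=2.5)

print(light_load)  # 40.0
print(heavy_load)  # 20.0
```

In this hypothetical case, five times the concurrency yields half the QPS because the latency increased tenfold.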

Algorithms

KPA performs autoscaling based on the average number of concurrent requests per pod. The desired value is specified by the concurrency target, which defaults to 100. The number of pods required to handle requests is calculated by using the following formula: Number of pods = Total number of concurrent requests to the application / Concurrency target. For example, if you set the concurrency target to 10 and send 50 concurrent requests to an application, KPA creates five pods.
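The formula above can be sketched in a few lines of Python. This is a simplified illustration, not the KPA implementation: the function name is hypothetical, and rounding up to a whole pod is an assumption, because the text does not say how fractional results are handled.

```python
import math


def desired_pods(total_concurrency: float, concurrency_target: float = 100) -> int:
    """Number of pods = total concurrent requests / concurrency target.

    Rounding up is an assumption made for this sketch.
    """
    if concurrency_target <= 0:
        raise ValueError("concurrency target must be positive")
    return math.ceil(total_concurrency / concurrency_target)


# The example from the text: target 10, 50 concurrent requests.
print(desired_pods(50, concurrency_target=10))  # 5
```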

- Stable

In stable mode, KPA adjusts the number of pods provisioned for a Deployment to match the specified concurrency target. The concurrency target indicates the average number of requests received by a pod within a stable window of 60 seconds.

- Panic

KPA calculates the average number of concurrent requests per pod within a stable window of 60 seconds. Because this average spans 60 seconds, a change in load is fully reflected only after it has persisted for 60 seconds. KPA also calculates the number of concurrent requests per pod within a panic window of 6 seconds. If the number of concurrent requests reaches twice the concurrency target, KPA switches to panic mode. In panic mode, KPA scales pods within a shorter time window than in stable mode. After the burst of concurrent requests has lasted for 60 seconds, KPA automatically switches back to stable mode.

                                                           |
                                      Panic Target--->  +--| 20
                                                        |  |
                                                        | <------Panic Window
                                                        |  |
           Stable Target--->  +-------------------------|--| 10   CONCURRENCY
                              |                         |  |
                              |                      <-----------Stable Window
                              |                         |  |
    --------------------------+-------------------------+--+ 0
    120                       60                           0
                         TIME
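The two windows and the panic threshold can be illustrated with a small sketch. It assumes one concurrency sample per pod per second and only models the decision to enter panic mode. The return to stable mode is not modeled. All names are hypothetical, and this is not how KPA is actually implemented.

```python
STABLE_WINDOW_SECONDS = 60
PANIC_WINDOW_SECONDS = 6


def window_average(samples: list, window_seconds: int) -> float:
    """Average of the most recent samples, assuming one sample per second."""
    recent = samples[-window_seconds:]
    return sum(recent) / len(recent) if recent else 0.0


def select_mode(samples: list, concurrency_target: float,
                panic_threshold: float = 2.0) -> str:
    """Return "panic" when the panic-window average reaches threshold x target."""
    panic_average = window_average(samples, PANIC_WINDOW_SECONDS)
    if panic_average >= panic_threshold * concurrency_target:
        return "panic"
    return "stable"


# 54 quiet seconds at 5 concurrent requests per pod, then a 6-second burst at 25.
samples = [5] * 54 + [25] * 6
print(window_average(samples, STABLE_WINDOW_SECONDS))  # 7.0
print(window_average(samples, PANIC_WINDOW_SECONDS))   # 25.0
print(select_mode(samples, concurrency_target=10))     # panic
```

The burst barely moves the 60-second average but dominates the 6-second average, which is why the shorter window reacts faster.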

KPA configurations

To configure KPA, you must configure the config-autoscaler ConfigMap in the knative-serving namespace. Run the following command to query the default configurations in the config-autoscaler ConfigMap. The following content describes the key parameters.

    kubectl -n knative-serving get cm config-autoscaler -o yaml

Expected output (comments in the code are omitted):

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: config-autoscaler
      namespace: knative-serving
    data:
      _example: |
        container-concurrency-target-default: "100"
        container-concurrency-target-percentage: "0.7"
        enable-scale-to-zero: "true"
        max-scale-up-rate: "1000"
        max-scale-down-rate: "2"
        panic-window-percentage: "10"
        panic-threshold-percentage: "200"
        scale-to-zero-grace-period: "30s"
        scale-to-zero-pod-retention-period: "0s"
        stable-window: "60s"
        target-burst-capacity: "200"
        requests-per-second-target-default: "200"

The settings displayed under the _example field are the default values. To modify a parameter, copy it from the _example field to the data field and change its value there.

Modifications made to the config-autoscaler ConfigMap will be applied to all Knative Services. If you want to modify configurations for a single Knative Service, you can use annotations. For more information, see Scenario 1: Enable autoscaling by setting a concurrency target and Scenario 2: Enable autoscaling by setting scale bounds.
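As a rough illustration of how some of these defaults relate to the stable and panic modes described earlier, the sketch below derives the panic window and the panic concurrency from the ConfigMap values. It assumes that panic-window-percentage is a percentage of stable-window and that panic-threshold-percentage is a percentage of the concurrency target, which matches the 6-second window and the "twice the concurrency target" rule in this topic. The helper names are hypothetical.

```python
DEFAULTS = {
    "container-concurrency-target-default": "100",
    "panic-window-percentage": "10",
    "panic-threshold-percentage": "200",
    "stable-window": "60s",
}


def parse_seconds(duration: str) -> float:
    """Parse a duration such as "60s"; only the seconds suffix is handled here."""
    if not duration.endswith("s"):
        raise ValueError(f"unsupported duration: {duration!r}")
    return float(duration[:-1])


def panic_window_seconds(config: dict) -> float:
    """Panic window = stable window x panic-window-percentage / 100 (assumed)."""
    stable = parse_seconds(config["stable-window"])
    return stable * float(config["panic-window-percentage"]) / 100


def panic_concurrency(config: dict) -> float:
    """Per-pod concurrency at which panic mode starts (assumed interpretation)."""
    target = float(config["container-concurrency-target-default"])
    return target * float(config["panic-threshold-percentage"]) / 100


print(panic_window_seconds(DEFAULTS))  # 6.0
print(panic_concurrency(DEFAULTS))     # 200.0

# A value copied into the data field overrides the default.
custom = {**DEFAULTS, "stable-window": "120s"}
print(panic_window_seconds(custom))    # 12.0
```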

Configure parameters related to the scale-to-zero feature

| Parameter | Description | Example |
| --- | --- | --- |
| scale-to-zero-grace-period | The time period for which an inactive Revision keeps running before KPA scales the number of pods to zero. The minimum period is 30 seconds. | 30s |
| stable-window | In stable mode, KPA scales pods based on the average concurrency per pod within the stable window. You can also specify the stable window by using an annotation in the Revision. Example: | 60s |
| enable-scale-to-zero | Set enable-scale-to-zero to | true |

Set a concurrency target for KPA

| Parameter | Description | Example |
| --- | --- | --- |
| container-concurrency-target-default | This parameter sets a soft limit on the number of concurrent requests handled by each pod within a specified time period. We recommend that you use this parameter to control the concurrency. By default, the concurrency target in the ConfigMap is set to 100. You can change the value by using the | 100 |
| containerConcurrency | This parameter sets a hard limit on the number of concurrent requests handled by each pod within a specified time period. The following values are supported: | 0 |
| container-concurrency-target-percentage | This parameter is also known as a concurrency percentage or a concurrency factor. It is used in the calculation of the concurrency target for autoscaling. The following formula applies: Concurrency value that triggers autoscaling = Concurrency target (or the value of containerConcurrency) × The value of container-concurrency-target-percentage. For example, the concurrency target or the value of containerConcurrency is set to | 0.7 |
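The formula for container-concurrency-target-percentage can be sketched as follows. The function names and the input values (a concurrency target of 10 with the default factor of 0.7) are illustrative assumptions, not values taken from the table.

```python
def scaling_trigger(concurrency_target: float, target_percentage: float = 0.7) -> float:
    """Concurrency value that triggers autoscaling = target x percentage."""
    return concurrency_target * target_percentage


def should_scale_out(per_pod_concurrency: float, concurrency_target: float,
                     target_percentage: float = 0.7) -> bool:
    """True when a pod handles more requests than the trigger value."""
    return per_pod_concurrency > scaling_trigger(concurrency_target, target_percentage)


# With a target of 10 and a factor of 0.7, the trigger is about 7 requests per pod.
print(should_scale_out(8, concurrency_target=10))  # True
print(should_scale_out(6, concurrency_target=10))  # False
```

Scaling out before the full target is reached leaves headroom on each pod for a further increase in requests.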

Set the scale bounds

You can use the minScale and maxScale annotations to set the minimum and maximum number of pods that are provisioned for an application. This helps reduce cold starts and computing costs.

If you do not set the minScale annotation, all pods are removed when no traffic arrives.

If you do not set the maxScale annotation, the number of pods that can be provisioned for the application is unlimited.

If you set enable-scale-to-zero to false in the config-autoscaler ConfigMap, KPA scales the number of pods to one when no traffic arrives.
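The rules above amount to clamping the pod count that the autoscaler would otherwise choose. The sketch below is a simplified model with hypothetical names: max_scale=None stands for an unset maxScale annotation, and min_scale=0 stands for an unset minScale annotation.

```python
from typing import Optional


def apply_scale_bounds(desired: int,
                       min_scale: int = 0,
                       max_scale: Optional[int] = None,
                       enable_scale_to_zero: bool = True) -> int:
    """Clamp the desired pod count to the configured scale bounds."""
    lower = min_scale
    if not enable_scale_to_zero:
        # With scale-to-zero disabled, at least one pod is kept.
        lower = max(lower, 1)
    bounded = max(desired, lower)
    if max_scale is not None:
        bounded = min(bounded, max_scale)
    return bounded


print(apply_scale_bounds(5, min_scale=1, max_scale=3))     # 3
print(apply_scale_bounds(0, min_scale=1, max_scale=3))     # 1
print(apply_scale_bounds(0))                               # 0
print(apply_scale_bounds(0, enable_scale_to_zero=False))   # 1
```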

You can configure the minScale and maxScale parameters in the template section of the Revision configuration file.

    spec:
      template:
        metadata:
          annotations:
            autoscaling.knative.dev/minScale: "2"
            autoscaling.knative.dev/maxScale: "10"

Scenario 1: Enable autoscaling by setting a concurrency target

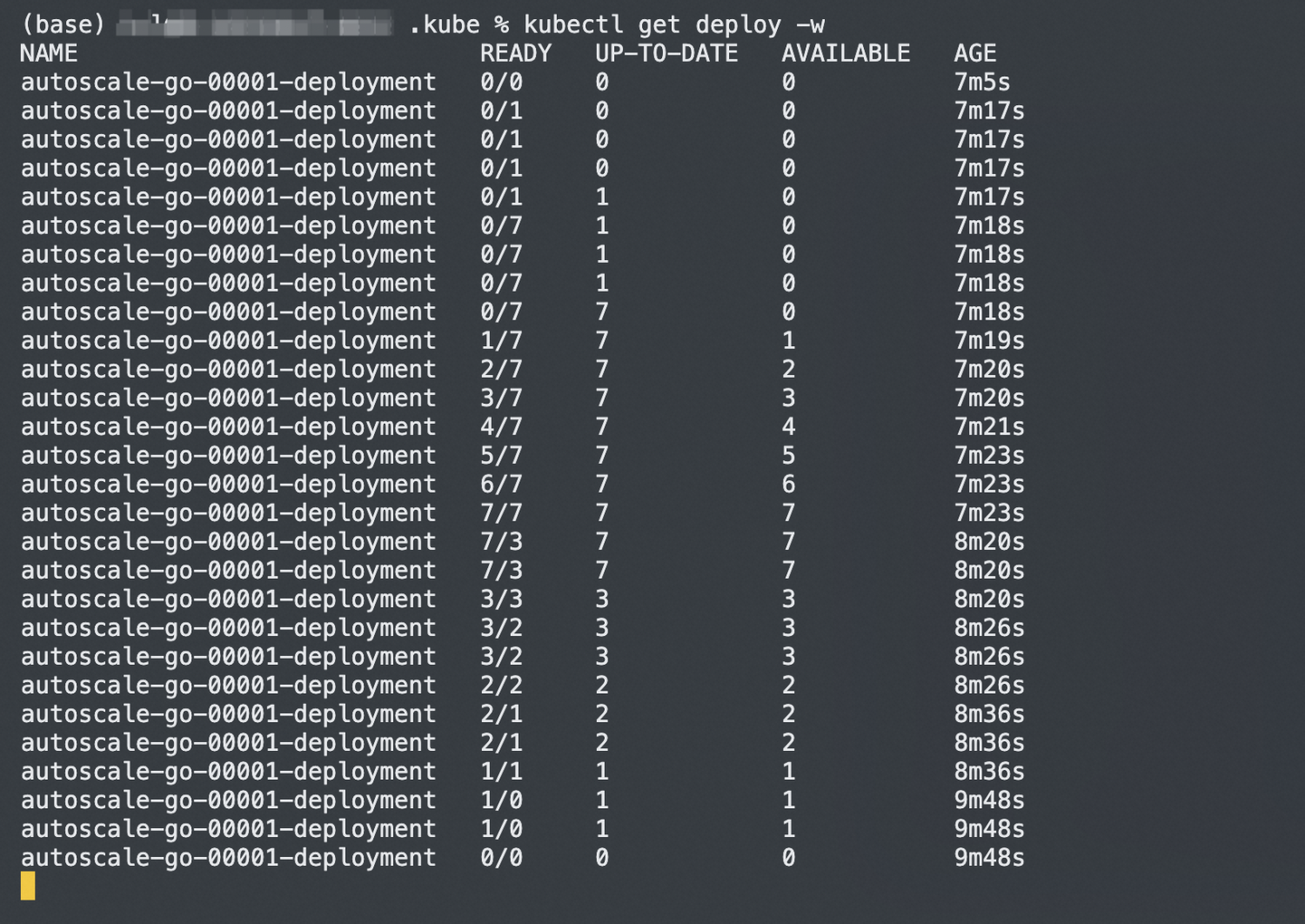

In this scenario, an autoscale-go application is deployed in a cluster. This example shows how to enable KPA to perform autoscaling by setting a concurrency target.

For more information about how to use Knative on ASM to create a Knative Service, see Use Knative on ASM to deploy a serverless application.

Create the autoscale-go.yaml file and set the value of autoscaling.knative.dev/target to 10.

    apiVersion: serving.knative.dev/v1
    kind: Service
    metadata:
      name: autoscale-go
      namespace: default
    spec:
      template:
        metadata:
          labels:
            app: autoscale-go
          annotations:
            autoscaling.knative.dev/target: "10"
        spec:
          containers:
            - image: registry.cn-hangzhou.aliyuncs.com/knative-sample/autoscale-go:0.1

Use kubectl to connect to the cluster and run the following command to deploy the autoscale-go application:

    kubectl apply -f autoscale-go.yaml

Log on to the ASM console. Click the name of the desired ASM instance and go to the Ingress Gateway page. In the Service address section, obtain the IP address.

Use the load testing tool hey to send 30 seconds of traffic maintaining 50 in-flight requests.

For more information about how to install the load testing tool hey, see hey.

Note: Replace xxx.xxx.xxx.xxx with the actual gateway address. For more information about how to obtain the gateway address, see Step 3: Query the gateway address in Use Knative on ASM to deploy a serverless application.

    hey -z 30s -c 50 -host "autoscale-go.default.example.com" "http://xxx.xxx.xxx.xxx?sleep=100&prime=10000&bloat=5"

Expected output:

The expected output indicates that seven pods are added. When the number of concurrent requests handled by a pod exceeds a certain percentage (70% by default) of the concurrency target, Knative creates more pods. This prevents the concurrency target from being exceeded if the number of concurrent requests continues to increase.

Scenario 2: Enable autoscaling by setting scale bounds

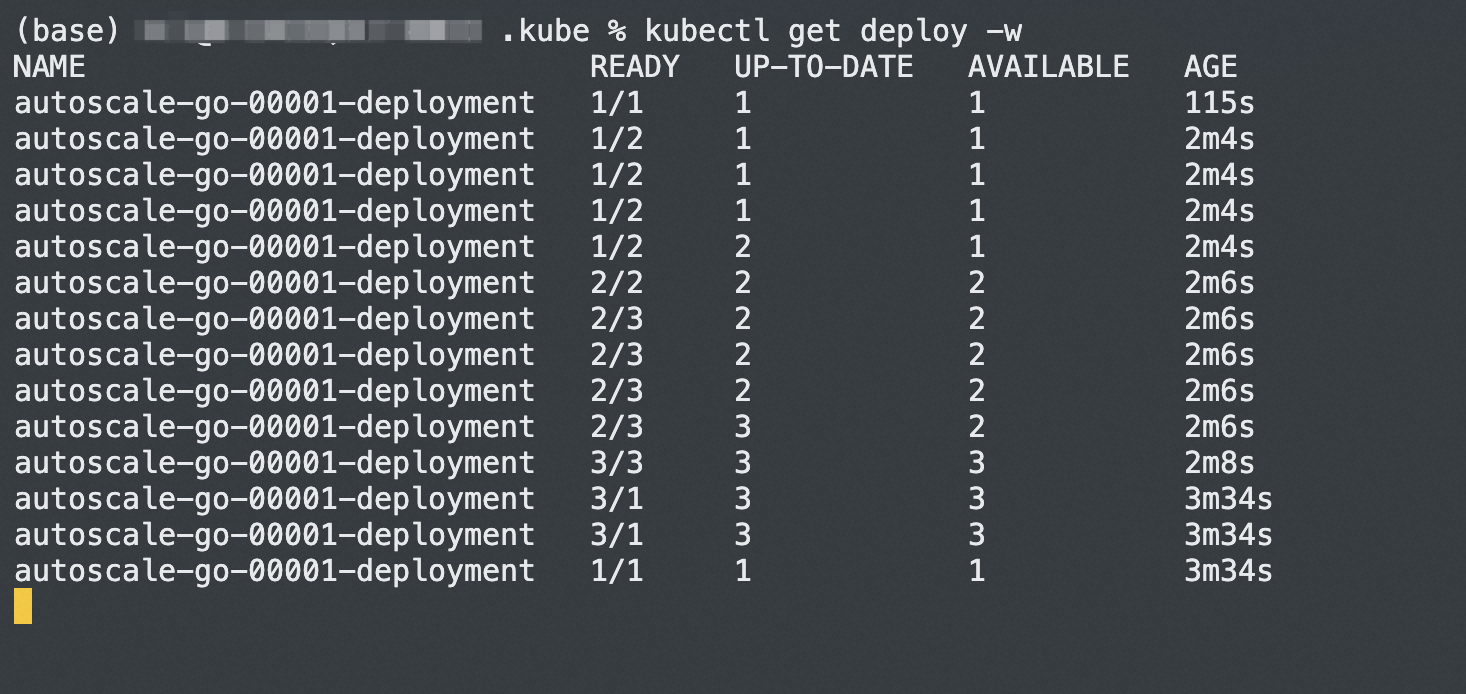

Scale bounds control the minimum and maximum number of pods that can be provisioned for an application. In this scenario, an autoscale-go application is deployed in a cluster. This example shows how to enable autoscaling by setting scale bounds.

For more information about how to use Knative on ASM to create a Knative Service, see Use Knative on ASM to deploy a serverless application.

Create the autoscale-go.yaml file. Set the concurrency target to 10, minScale to 1, and maxScale to 3.

    apiVersion: serving.knative.dev/v1
    kind: Service
    metadata:
      name: autoscale-go
      namespace: default
    spec:
      template:
        metadata:
          labels:
            app: autoscale-go
          annotations:
            autoscaling.knative.dev/target: "10"
            autoscaling.knative.dev/minScale: "1"
            autoscaling.knative.dev/maxScale: "3"
        spec:
          containers:
            - image: registry.cn-hangzhou.aliyuncs.com/knative-sample/autoscale-go:0.1

Use kubectl to connect to the cluster and run the following command to deploy the autoscale-go application:

    kubectl apply -f autoscale-go.yaml

Log on to the ASM console. Click the name of the desired ASM instance and go to the Ingress Gateway page. In the Service address section, obtain the IP address.

Use the load testing tool hey to send 30 seconds of traffic maintaining 50 in-flight requests.

For more information about how to install the load testing tool hey, see hey.

Note: Replace xxx.xxx.xxx.xxx with the actual gateway address. For more information about how to obtain the gateway address, see Step 3: Query the gateway address in Use Knative on ASM to deploy a serverless application.

    hey -z 30s -c 50 -host "autoscale-go.default.example.com" "http://xxx.xxx.xxx.xxx?sleep=100&prime=10000&bloat=5"

Expected output:

The expected output indicates that a maximum of three pods are added and one pod is reserved when no traffic flows to the application. The autoscaling feature works as expected.

References

When you need to securely access and manage microservices that are built by using Knative, you can use an ASM gateway to allow access to a Knative Service only over HTTPS. In addition, you can configure encrypted transmission for service endpoints to protect communications between the services, improving the security and reliability of the overall architecture. For more information, see Use an ASM gateway to access a Knative Service over HTTPS.

If you face compatibility and stability challenges when you release a new version of your application during iterative development, you can perform a canary release based on traffic splitting for a Knative Service by using Knative on ASM. For more information, see Perform a canary release based on traffic splitting for a Knative Service by using Knative on ASM.

You can set the threshold of the CPU metric for a Knative Service. This ensures that pods are automatically scaled to match the fluctuations of user traffic. For more information, see Use HPA in Knative.