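Building on the metric storage and display capabilities of Managed Service for Prometheus in Alibaba Cloud ARMS and of Grafana, ApsaraMQ for RocketMQ provides a dashboard feature. The dashboard lets you collect and observe metrics in one place, comprehensively and across multiple dimensions, so that you can quickly understand how your business is running. This topic describes the dashboard's scenarios, business background, metric details, billing, and how to view it.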

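Scenarios

Scenario 1: Online message consumption is abnormal and messages are not processed in time. You need to receive alerts promptly and locate the problem quickly.

Scenario 2: Some online orders are in an abnormal state. You need to check whether the corresponding stage of the messaging link sent its messages correctly.

Scenario 3: You need to analyze trends in message traffic, traffic distribution characteristics, or message volume in order to plan for business trends.

Scenario 4: You need to view and analyze the upstream and downstream dependency topology of your applications in order to upgrade, optimize, or rework the architecture.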

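Business background

In the message sending and receiving flow of ApsaraMQ for RocketMQ, the accumulation in queues, the state of the caches, and the time spent in each message processing stage directly reflect the current processing performance of the business and the running status of the server. The key metrics of ApsaraMQ for RocketMQ therefore center on the following business scenarios.

Message accumulation scenario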

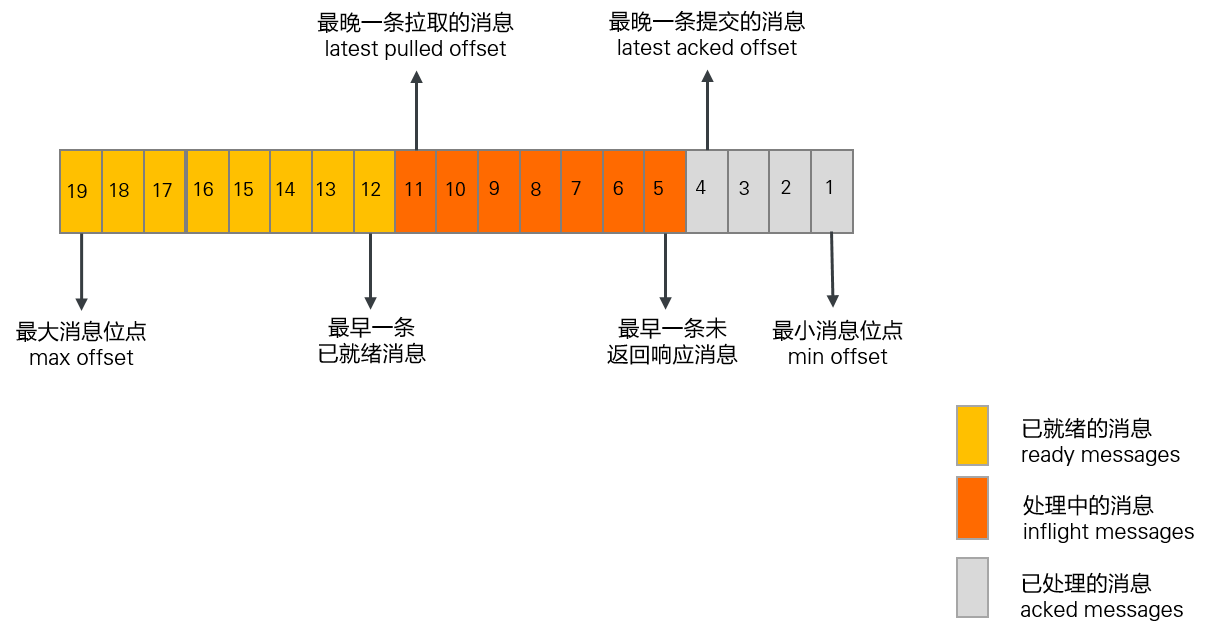

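The figure above shows the state of each message in one queue of a specified topic. ApsaraMQ for RocketMQ counts the number of messages in each processing stage and the time they spend there. These metrics directly reflect the processing rate and accumulation of messages in the queue, and observing them gives a first indication of whether consumption is abnormal. The metrics and their formulas are as follows: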

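| Category | Metric | Definition | Formula |
| --- | --- | --- | --- |
| Message count | Inflight messages | Messages that a consumer client is processing but for which the client has not yet returned a consumption result. | Offset of the latest pulled message - offset of the latest committed message |
| Message count | Ready messages | Messages that are ready on the ApsaraMQ for RocketMQ server, visible to consumers, and available for consumption. | Maximum message offset - offset of the latest pulled message |
| Message count | Consumer lag | All messages whose processing is not yet complete. | Inflight messages + ready messages |
| Message time | Ready time | | Not applicable |
| Message time | Ready message queue time | The difference between the current time and the ready time of the earliest ready message. This time reflects how promptly consumers pull messages. | Current time - ready time of the earliest ready message |
| Message time | Consumer lag time | The difference between the current time and the ready time of the earliest message for which no response has been returned. This time reflects how promptly consumers finish processing messages. | Current time - ready time of the earliest message for which no response has been returned |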
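The formulas in the table above can be sketched in a few lines of Python. This is only an illustration of the arithmetic: the `QueueOffsets` type, its field names, and the sample offsets are hypothetical and are not part of any ApsaraMQ for RocketMQ SDK.

```python
from dataclasses import dataclass


@dataclass
class QueueOffsets:
    """Offsets of one queue for one consumer group (hypothetical names)."""
    max_offset: int        # maximum message offset in the queue
    pulled_offset: int     # offset of the latest pulled message
    committed_offset: int  # offset of the latest committed message


def inflight_messages(q: QueueOffsets) -> int:
    # Latest pulled offset minus latest committed offset.
    return q.pulled_offset - q.committed_offset


def ready_messages(q: QueueOffsets) -> int:
    # Maximum offset minus latest pulled offset.
    return q.max_offset - q.pulled_offset


def consumer_lag(q: QueueOffsets) -> int:
    # Inflight messages plus ready messages.
    return inflight_messages(q) + ready_messages(q)


def elapsed_ms(now_ms: int, ready_time_ms: int) -> int:
    # Current time minus a ready time. The same subtraction gives the ready
    # message queue time and the consumer lag time; only the message whose
    # ready time is used differs.
    return now_ms - ready_time_ms


q = QueueOffsets(max_offset=1000, pulled_offset=900, committed_offset=850)
print(inflight_messages(q), ready_messages(q), consumer_lag(q))  # 50 100 150
```

Note that consumer lag simplifies to the maximum offset minus the committed offset, which is why a growing lag with few inflight messages points at slow pulling rather than slow processing.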

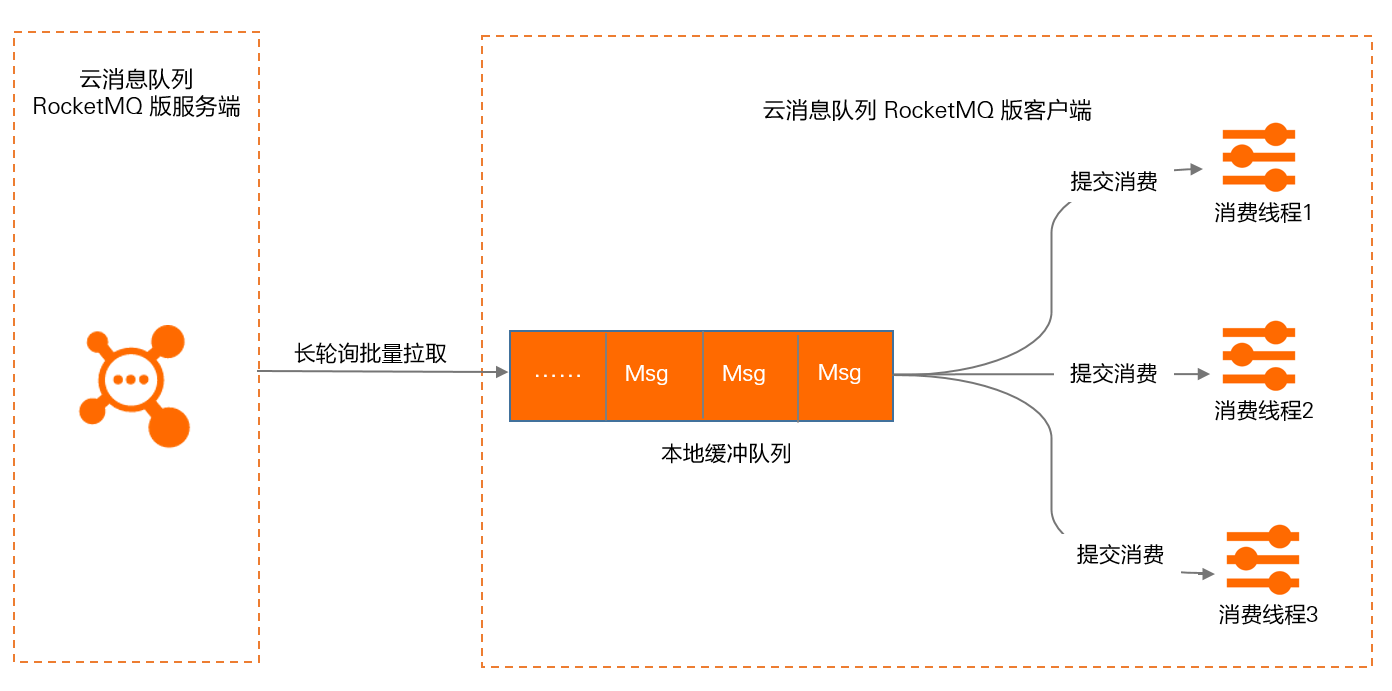

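PushConsumer consumption scenario

For the PushConsumer type, real-time message processing is built on a typical Reactor thread model inside the SDK. As shown in the figure, the SDK has a built-in long-polling thread that asynchronously pulls messages into a cache queue built into the SDK. The messages are then submitted to consumer threads, which trigger the listener to run the local consumption logic.

For details, see PushConsumer.

In the PushConsumer consumption scenario, the metrics for the local cache queue are as follows:

Messages in the local cache queue: the total number of messages in the local cache queue.

Message size in the local cache queue: the sum of the sizes of all messages in the local cache queue.

Message wait time: the time a message is held in the local cache queue.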
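To make the three local cache metrics concrete, here is a minimal sketch of a cache queue that tracks them. The `LocalCache` class is a toy stand-in written for this illustration; it is not the SDK's actual implementation, and the explicit `now` parameter exists only to make the example deterministic.

```python
import time
from collections import deque
from typing import Deque, Optional, Tuple


class LocalCache:
    """Toy model of a PushConsumer local cache queue (hypothetical)."""

    def __init__(self) -> None:
        # Each entry is (message body, time it entered the cache).
        self._items: Deque[Tuple[bytes, float]] = deque()
        self._bytes = 0

    def put(self, body: bytes, now: Optional[float] = None) -> None:
        """Called by the pulling thread when a message arrives."""
        enqueued = time.monotonic() if now is None else now
        self._items.append((body, enqueued))
        self._bytes += len(body)

    def take(self, now: Optional[float] = None) -> Tuple[bytes, float]:
        """Hand the oldest message to a consumer thread.

        Returns the body and the time it waited in the cache, in seconds.
        """
        body, enqueued = self._items.popleft()
        self._bytes -= len(body)
        current = time.monotonic() if now is None else now
        return body, current - enqueued

    @property
    def cached_messages(self) -> int:
        # Messages in the local cache queue.
        return len(self._items)

    @property
    def cached_bytes(self) -> int:
        # Message size in the local cache queue.
        return self._bytes


cache = LocalCache()
cache.put(b"abcd", now=1.0)
cache.put(b"xy", now=2.0)
print(cache.cached_messages, cache.cached_bytes)  # 2 6
```

A cache whose message count and wait time both keep rising suggests that the consumer threads, not the pulling thread, are the bottleneck.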

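Metrics details

All metrics related to message send and receive TPS, the number of send and receive calls, or the number of messages are calculated against a baseline of a 4 KB message size and the normal message type. The baseline value is then multiplied by the message size multiplier and the advanced message type multiplier. For the exact rules, see Calculation specifications.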
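The baseline rule above can be sketched as follows. Two things here are assumptions rather than facts from this topic: that the message size multiplier rounds up to the next whole 4 KB block, and the value passed as `type_multiplier`, which is a placeholder. Check the calculation specifications for the real multipliers before relying on any number.

```python
import math

BASE_SIZE_BYTES = 4 * 1024  # the 4 KB baseline stated in the text


def size_multiplier(message_size_bytes: int) -> int:
    # ASSUMPTION: every started 4 KB block counts once (round up).
    return max(1, math.ceil(message_size_bytes / BASE_SIZE_BYTES))


def normalized_count(messages: int, message_size_bytes: int,
                     type_multiplier: int = 1) -> int:
    # type_multiplier is 1 for normal messages; the multiplier for advanced
    # message types is not given in this topic, so callers must supply it.
    return messages * size_multiplier(message_size_bytes) * type_multiplier


print(normalized_count(10, 4 * 1024))   # 10: baseline size, normal messages
print(normalized_count(10, 10 * 1024))  # 30: 10 KB rounds up to 3 blocks
```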

The fields used in the metrics are described below:

| Field | Value |
| --- | --- |
| Metric type | |
| Label | |

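Server-side metrics

| Metric type | Metric name | Unit | Description | Label |
| --- | --- | --- | --- | --- |
| Gauge | rocketmq_instance_requests_max | count/s | Maximum per-minute TPS for sending and receiving messages on the instance (throttled requests excluded). Sampling rule: over a 1-minute period, sample once per second and take the maximum of the 60 samples. | |
| Gauge | rocketmq_instance_requests_in_max | count/s | Maximum per-minute TPS for sending messages on the instance (throttled requests excluded). Sampling rule: over a 1-minute period, sample once per second and take the maximum of the 60 samples. | |
| Gauge | rocketmq_instance_requests_out_max | count/s | Maximum per-minute TPS for consuming messages on the instance (throttled requests excluded). Sampling rule: over a 1-minute period, sample once per second and take the maximum of the 60 samples. | |
| Gauge | rocketmq_topic_requests_max | count/s | Maximum per-minute TPS for sending messages to a topic in the instance (throttled requests excluded). Sampling rule: over a 1-minute period, sample once per second and take the maximum of the 60 samples. | |
| Gauge | rocketmq_group_requests_max | count/s | Maximum per-minute TPS for consuming messages by a consumer group in the instance (throttled requests excluded). Sampling rule: over a 1-minute period, sample once per second and take the maximum of the 60 samples. | |
| Gauge | rocketmq_instance_requests_in_threshold | count/s | Throttling threshold for sending messages on the instance. | |
| Gauge | rocketmq_instance_requests_out_threshold | count/s | Throttling threshold for consuming messages on the instance. | |
| Gauge | rocketmq_throttled_requests_in | count | Number of times message sending was throttled. | |
| Gauge | rocketmq_throttled_requests_out | count | Number of times message consumption was throttled. | |
| Gauge | rocketmq_instance_elastic_requests_max | count/s | Maximum elastic TPS for sending and receiving messages on the instance. | |
| Counter | rocketmq_requests_in_total | count | Number of calls to message sending APIs. | |
| Counter | rocketmq_requests_out_total | count | Number of calls to message consumption APIs. | |
| Counter | rocketmq_messages_in_total | message | Number of messages that producers send to the server. | |
| Counter | rocketmq_messages_out_total | message | Number of messages that the server delivers to consumers, including messages that consumers are processing, have processed successfully, and have failed to process. | |
| Counter | rocketmq_throughput_in_total | byte | Throughput of messages that producers send to the server. | |
| Counter | rocketmq_throughput_out_total | byte | Throughput of messages that the server delivers to consumers. The messages counted include those that consumers are processing, have processed successfully, and have failed to process. | |
| Counter | rocketmq_internet_throughput_out_total | byte | Outbound Internet traffic used for sending and receiving messages. | |
| Histogram | rocketmq_message_size | byte | Distribution of message sizes, recorded when a message is sent successfully. The distribution buckets are as follows: | |
| Gauge | rocketmq_consumer_ready_messages | message | Ready messages. The number of messages that are ready on the server and can be consumed by consumers. This metric reflects the volume of messages that consumers have not yet started to process. | |
| Gauge | rocketmq_consumer_inflight_messages | message | Inflight messages. The total number of messages that consumer clients are processing but for which the clients have not yet returned a consumption result. | |
| Gauge | rocketmq_consumer_queueing_latency | ms | Ready message queue time. The difference between the current time and the ready time of the earliest ready message. This time reflects how promptly consumers pull messages. | |
| Gauge | rocketmq_consumer_lag_latency | ms | Consumer lag time. The difference between the current time and the ready time of the earliest message whose consumption is not yet complete. This time reflects how promptly consumers finish processing messages. | |
| Counter | rocketmq_send_to_dlq_messages | message | Number of messages per minute that become dead-letter messages. A message becomes a dead-letter message when it reaches the maximum number of redelivery attempts and is no longer delivered. Such messages are stored in a specified topic or discarded, depending on the dead-letter policy configured for the group. | |
| Gauge | rocketmq_storage_size | byte | Storage space used by the instance, including the size of all files. | |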
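Counter metrics such as rocketmq_messages_in_total are usually more useful as a rate than as a raw total. The sketch below assumes these counters follow the usual Prometheus convention of a cumulative value that only increases and may restart from zero; that behavior is an assumption here, not something this topic states. The function name and sample values are illustrative.

```python
from typing import Tuple

# One scraped sample: (unix timestamp in seconds, cumulative counter value).
Sample = Tuple[float, float]


def per_second_rate(earlier: Sample, later: Sample) -> float:
    """Average per-second increase of a cumulative counter between two samples."""
    t0, v0 = earlier
    t1, v1 = later
    if t1 <= t0:
        raise ValueError("samples must be in increasing time order")
    # If the value went backwards, treat it as a counter reset and count
    # the increase from zero, which is a simplified version of what rate() does.
    increase = v1 - v0 if v1 >= v0 else v1
    return increase / (t1 - t0)


# 600 more messages over 60 seconds is 10 messages per second.
print(per_second_rate((0.0, 1200.0), (60.0, 1800.0)))  # 10.0
```

Gauge metrics in the table, such as rocketmq_consumer_ready_messages, are read directly and need no such conversion.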

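Producer metrics

| Metric type | Metric name | Unit | Description | Label |
| --- | --- | --- | --- | --- |
| Histogram | rocketmq_send_cost_time | ms | Distribution of the time taken by successful calls to the message sending API. The distribution buckets are as follows: | |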

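Consumer metrics

| Metric type | Metric name | Unit | Description | Label |
| --- | --- | --- | --- | --- |
| Histogram | rocketmq_process_time | ms | Distribution of PushConsumer message processing time, including both successful and failed processing. The distribution buckets are as follows: | |
| Gauge | rocketmq_consumer_cached_messages | message | Number of messages in the PushConsumer local cache queue. | |
| Gauge | rocketmq_consumer_cached_bytes | byte | Total size of the messages in the PushConsumer local cache queue. | |
| Histogram | rocketmq_await_time | ms | Distribution of the time that messages wait in the PushConsumer local cache queue. The distribution buckets are as follows: | |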

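Billing

The dashboard metrics of ApsaraMQ for RocketMQ are basic metrics in Managed Service for Prometheus in Alibaba Cloud ARMS, and basic metrics are free of charge. Using the dashboard feature therefore incurs no fees.

Prerequisites

Create the service-linked role

Role name: AliyunServiceRoleForOns

Role policy name: AliyunServiceRolePolicyForOns

Permissions: allows ApsaraMQ for RocketMQ to use this role to access your services (CloudMonitor and ARMS) in order to provide the monitoring, alerting, and dashboard features.

For details, see Service-linked roles.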

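View the dashboard

ApsaraMQ for RocketMQ lets you view the dashboard from the following entry points:

Dashboard page: shows every metric for all topics and groups in the instance.

Instance Details page: mainly shows the producer overview, billing-related metrics, and throttling-related metrics of the specified instance.

Topic Details page: mainly shows the production-related metrics and producer client metrics of the specified topic.

Group Details page: mainly shows the consumption accumulation metrics and consumer client metrics of the specified group.

Log on to the ApsaraMQ for RocketMQ console. In the left-side navigation pane, click Instance List.

In the top menu bar, select a region, such as China (Hangzhou). Then, in the instance list, click the name of the target instance.

View the dashboard in any of the following ways.

Instance Details page: on the Instance Details page, click the Dashboard tab.

Dashboard page: in the left-side navigation pane, click Dashboard.

Topic Details page: in the left-side navigation pane, click Topic Management. In the topic list, click the name of the target topic, and then click the Dashboard tab on the Topic Details page.

Group Details page: in the left-side navigation pane, click Group Management. In the group list, click the name of the target group, and then click the Dashboard tab on the Group Details page.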

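Dashboard FAQ

How do I obtain dashboard metric data?

Log on to the ARMS console with your Alibaba Cloud account.

In the left-side navigation pane, click Integration Center.

On the Integration Center page, enter RocketMQ in the search box and click the search icon. In the search results, select the cloud service that you want to integrate, such as Alibaba Cloud RocketMQ (5.0) Service. For the integration procedure, see Step 1: Integrate the monitoring data of a cloud service.

After the integration succeeds, click Integration Management in the left-side navigation pane.

On the Integration Management page, click the Cloud Service Region tab.

In the cloud service region list, click the name of the target environment to open the cloud service environment details page.

On the Component Management tab, in the Basic Information section, click the cloud service region next to Prometheus Instance.

On the Settings tab, you can obtain the available data access methods.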

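How do I connect the dashboard metric data to a self-managed Grafana?

All metric data of ApsaraMQ for RocketMQ is stored in your Alibaba Cloud Managed Service for Prometheus. Follow the steps in "How do I obtain dashboard metric data?" to integrate the cloud service and obtain the environment name and the HTTP API address. You can then use the API to bring the ApsaraMQ for RocketMQ dashboard metric data into your local self-managed Grafana. For the procedure, see Use an HTTP API address to connect Grafana or a self-managed application to Prometheus data.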
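As a rough illustration of what a self-managed application does with the HTTP API address, the sketch below builds an instant query URL in the standard Prometheus HTTP API form (`/api/v1/query`). It assumes the address you obtained is Prometheus-compatible and accepts that path; `BASE_URL` is a placeholder, not a real endpoint, and the PromQL expression is only an example that uses a metric name from this topic. Authentication, if your address requires it, is not shown.

```python
from urllib.parse import urlencode


def build_instant_query_url(base_url: str, promql: str) -> str:
    """Return the URL for a Prometheus instant query against base_url."""
    # urlencode percent-encodes the PromQL expression for use in a query string.
    return f"{base_url.rstrip('/')}/api/v1/query?{urlencode({'query': promql})}"


# Placeholder for the HTTP API address copied from the Settings tab.
BASE_URL = "https://prometheus.example.invalid/placeholder"

url = build_instant_query_url(
    BASE_URL, "sum(rate(rocketmq_messages_in_total[5m]))"
)
print(url)
```

In Grafana itself no code is needed: the same HTTP API address goes into the URL field of a Prometheus data source.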

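How should I interpret the TPS Max value of an instance?

TPS Max: with a statistical period of 1 minute and one sample per second, the result is the maximum of the 60 sampled values.

Example:

Suppose an instance produces 60 messages in 1 minute (all normal messages, 4 KB each). The production rate of the instance is 60 messages per minute.

If all 60 messages are sent in the first second, the per-second TPS of the instance over that minute is 60, 0, 0, ..., 0.

Instance TPS Max = 60 TPS.

If 40 of the 60 messages are sent in the first second and 20 in the second, the per-second TPS of the instance over that minute is 40, 20, 0, 0, ..., 0.

Instance TPS Max = 40 TPS.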
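The two cases above can be reproduced with a short sketch. The function name `tps_max` and the sample lists are illustrative; the only rule taken from the text is "60 one-second samples, take the maximum".

```python
from typing import Sequence


def tps_max(per_second_counts: Sequence[int]) -> int:
    """Maximum of the 60 one-second samples in a 1-minute period."""
    if len(per_second_counts) != 60:
        raise ValueError("expected 60 one-second samples")
    return max(per_second_counts)


# Case 1: all 60 messages are sent in the first second.
burst = [60] + [0] * 59
# Case 2: 40 messages in the first second, 20 in the second.
spread = [40, 20] + [0] * 58

print(tps_max(burst))   # 60
print(tps_max(spread))  # 40
```

Both cases have the same average rate of 1 message per second, which is why TPS Max, not the average, is the value to compare against a throttling threshold.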