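This topic summarizes common questions about using Services in Container Service for Kubernetes (ACK). It covers, among others, why an SLB IP address cannot be accessed from within a cluster, why reusing an existing SLB instance does not take effect, and how to handle a failed CCM upgrade.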

Index

SLB-related questions

FAQ about reusing existing SLB instances

Others

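SLB-related questions

What are the SLB instances in an ACK cluster used for?

If the Nginx Ingress component is installed when a Kubernetes cluster is created, the cluster automatically creates two SLB instances by default.

The two SLB instances are used as follows:

APIServer SLB: This SLB instance is the access entry point of the API Server. All access to the cluster goes through this SLB instance. It listens on TCP port 6443. The backend nodes are APIServer Pods or Master ECS instances.

Nginx Ingress Controller SLB: This SLB instance is associated with the nginx-ingress-controller Service in the kube-system namespace. Its vServer group dynamically binds the Nginx Ingress Controller Pods to load-balance and route external requests. It listens on TCP ports 80 and 443.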

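How do I choose between the Local and Cluster external traffic policies when creating a Service?

The Local and Cluster external traffic policies behave differently depending on the network plug-in. For the detailed differences between the two policies, see External traffic policies.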

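Why can't I see Events for the synchronization between a Service and its LoadBalancer?

If no Event information appears after you run kubectl -n {your-namespace} describe svc {your-svc-name}, check the version of your CCM component.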

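What do I do if SLB creation stays in the Pending state?

Run kubectl -n {your-namespace} describe svc {your-svc-name} to view the Events, and handle the errors reported in them. For how to handle each error, see Service error messages and solutions.

If no error is reported, see Why can't I see Events for the synchronization between a Service and its LoadBalancer?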

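What do I do if the SLB vServer group is not updated?

Run kubectl -n {your-namespace} describe svc {your-svc-name} to view the Events, and handle the errors reported in them. For how to handle each error, see Service error messages and solutions.

If no error is reported, see Why can't I see Events for the synchronization between a Service and its LoadBalancer?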

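What do I do if a Service annotation does not take effect?

Check for errors as follows.

Run kubectl -n {your-namespace} describe svc {your-svc-name} to view the Events, and handle the errors reported in them. For how to handle each error, see Service error messages and solutions.

If no error is reported, check the following three scenarios:

Confirm that your CCM version meets the version requirement of the annotation. For the mapping between annotations and CCM versions, see Configure Classic Load Balancer (CLB) by using annotations.

Log on to the Container Service management console. On the Services page, click the name of the target Service and confirm that the Service carries the annotation. If it does not, configure the annotation.

For how to configure annotations, see Configure Classic Load Balancer (CLB) by using annotations.

For how to view the Service list, see Service management.

Confirm that the annotation content is correct.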

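Why was my SLB configuration modified?

CCM uses a declarative API and, under certain conditions, automatically updates the SLB configuration based on the Service configuration. Any configuration that you change yourself in the SLB console is at risk of being overwritten. We recommend that you configure SLB through annotations. For more information, see Configure Classic Load Balancer (CLB) by using annotations.

Do not manually modify, in the SLB console, any configuration of an SLB instance that is created and maintained by Kubernetes. Otherwise the configuration may be lost and the Service may become inaccessible.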

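Why can't the SLB IP address be accessed from within the cluster?

Scenario 1: The SLB IP address is a private address and the SLB instance was not created through a Service. The backend Pod of the SLB instance and the Pod that accesses the SLB instance can then be on the same node, in which case access to the SLB IP address from within the cluster fails.

For Layer 4 load balancing, a backend ECS instance currently cannot act as both a client of the load balancer and one of its backend servers. Access to the SLB IP address from within the cluster therefore fails. You can use either of the following methods to keep the client and the destination off the same node.

Change the SLB IP address to a public address.

Create the SLB instance through a Service and set the external traffic policy to Cluster. In this case, kube-proxy intercepts in-cluster traffic to the SLB instance, which bypasses the limitation of the SLB instance itself.

Scenario 2: The service is published through a Service whose external traffic policy is set to Local, which causes access to the SLB IP address from within the cluster to fail.

For the detailed cause and solution, see How do I fix an SLB address exposed by a LoadBalancer Service that is unreachable from within a Kubernetes cluster?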

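How do I fix an SLB address exposed by a LoadBalancer Service that is unreachable from within a Kubernetes cluster?

Problem description

In a Kubernetes cluster, some nodes can access the Local-type SLB instance exposed by the cluster while other nodes cannot. The problem occurs most often with Ingress.

Cause

The Service is configured with externalTrafficPolicy: Local. With this policy, the SLB address can be accessed only from nodes on which a corresponding backend Pod is deployed. The SLB address is intended for use from outside the cluster. When a cluster node or Pod accesses it, the request is not sent to the SLB instance. Instead, the address is treated as an external IP address of the Service and the request is forwarded by the iptables or IPVS rules of kube-proxy.

If the cluster node, or the node that hosts the Pod, does not run a corresponding backend Pod, the network is unreachable. If it does run one, access works normally. For more information, see the issue about kube-proxy adding the external-lb address to the node-local iptables rules.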
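The reachability rule described above can be modeled in a few lines. This is a minimal sketch rather than kube-proxy's actual logic: the function name and the node names are hypothetical, and the model only captures the statement that in-cluster requests to the SLB address are handled on the client's own node.

```python
def can_reach_slb_ip(policy: str, client_node: str, backend_nodes: set[str]) -> bool:
    """Model whether an in-cluster client reaches the Service via the SLB IP.

    In-cluster traffic to the SLB IP is forwarded by kube-proxy on the
    client's node instead of being sent to the SLB instance.
    """
    if policy == "Cluster":
        # kube-proxy may forward to a backend Pod on any node.
        return len(backend_nodes) > 0
    if policy == "Local":
        # kube-proxy only forwards to backend Pods on the client's own node.
        return client_node in backend_nodes
    raise ValueError(f"unknown externalTrafficPolicy: {policy}")


backends = {"node-a", "node-b"}  # hypothetical nodes that run backend Pods
print(can_reach_slb_ip("Local", "node-a", backends))    # True
print(can_reach_slb_ip("Local", "node-c", backends))    # False
print(can_reach_slb_ip("Cluster", "node-c", backends))  # True
```

The second call corresponds to the failure case in this question: the client sits on a node without a backend Pod while the policy is Local.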

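Solution

Use one of the following methods. We recommend the first one.

Method 1: Access internal services within the Kubernetes cluster through the ClusterIP or the Service name. The Service name of Ingress is nginx-ingress-lb.kube-system.

Method 2: Change externalTrafficPolicy of the LoadBalancer Service to Cluster. This ensures that traffic can be forwarded to Pods on all nodes, but the source IP address is lost because the cluster performs SNAT (source network address translation). The backend application therefore cannot obtain the real client IP address. To modify the Ingress Service, run the following command:

    kubectl edit svc nginx-ingress-lb -n kube-system

Method 3: For a Terway cluster in ENI or ENI multi-IP mode, change externalTrafficPolicy of the LoadBalancer Service to Cluster and add the ENI passthrough annotation service.beta.kubernetes.io/backend-type: "eni", in the format shown below. This preserves the source IP address and also allows access from within the cluster. For details, see Configure Classic Load Balancer (CLB) by using annotations.

    apiVersion: v1
    kind: Service
    metadata:
      annotations:
        service.beta.kubernetes.io/backend-type: eni
      labels:
        app: nginx-ingress-lb
      name: nginx-ingress-lb
      namespace: kube-system
    spec:
      externalTrafficPolicy: Cluster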

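When is an SLB instance automatically deleted?

Whether an SLB instance is automatically deleted depends on whether it was created automatically by CCM. The deletion policy is shown in the following table.

| Service operation | SLB created automatically by CCM | Reused existing SLB |
| --- | --- | --- |
| Delete the Service | SLB is deleted | SLB is retained |
| Change a LoadBalancer Service to another type | SLB is deleted | SLB is retained |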

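Does deleting a Service delete the SLB instance?

If the Service reuses an existing SLB instance (the Service has the annotation service.beta.kubernetes.io/alibaba-cloud-loadbalancer-id: {your-slb-id}), deleting the Service does not delete the SLB instance. Otherwise, deleting the Service deletes the corresponding SLB instance.

Changing the Service type (for example, from LoadBalancer to NodePort) also deletes the corresponding SLB instance that CCM created automatically.

Do not manually edit a Service to switch it from an SLB instance created automatically by CCM to an existing SLB instance. Doing so disassociates the Service from the automatically created SLB instance, and that SLB instance is no longer deleted automatically when the Service is deleted.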
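The deletion rule above can be expressed as a small check on a Service manifest. This is only an illustrative sketch of the documented policy, not CCM code: the function name and the sample manifests are hypothetical, and the Service is represented as a plain dictionary.

```python
REUSE_ANNOTATION = "service.beta.kubernetes.io/alibaba-cloud-loadbalancer-id"


def slb_removed_with_service(service: dict) -> bool:
    """Return True if deleting this Service is expected to delete its SLB."""
    annotations = service.get("metadata", {}).get("annotations") or {}
    is_load_balancer = service.get("spec", {}).get("type") == "LoadBalancer"
    # A Service that carries the loadbalancer-id annotation reuses an existing SLB.
    reuses_existing = REUSE_ANNOTATION in annotations
    return is_load_balancer and not reuses_existing


# Hypothetical manifests, reduced to the fields the check reads.
auto_created = {"metadata": {"name": "web"}, "spec": {"type": "LoadBalancer"}}
reused = {
    "metadata": {"name": "web", "annotations": {REUSE_ANNOTATION: "lb-example"}},
    "spec": {"type": "LoadBalancer"},
}
print(slb_removed_with_service(auto_created))  # True
print(slb_removed_with_service(reused))        # False
```

The check also shows why adding the annotation by hand is risky: once it is present, the Service is treated as reusing an existing SLB instance, so the instance that CCM created is left behind.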

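What do I do if I delete an SLB instance by mistake?

Scenario 1: What do I do if I delete the API Server SLB instance by mistake?

It cannot be recovered. You must recreate the cluster. For details, see Create an ACK Pro cluster.

Scenario 2: What do I do if I delete the Ingress SLB instance by mistake?

The following steps use Nginx Ingress as an example to show how to recreate the SLB instance.

Log on to the Container Service management console. In the left-side navigation pane, choose Clusters.

On the Clusters page, click the name of the target cluster, and then open the Services page from the left-side navigation pane.

From the Namespace drop-down list at the top of the Services page, select kube-system.

In the Service list, find the nginx-ingress-lb Service and click Edit YAML in the Actions column. Remove status.loadBalancer and the related content after it, and then click OK so that CCM rebuilds the SLB instance.

If nginx-ingress-lb is not in the Service list, click Create Resources in YAML in the upper-left corner of the page and use the following template to create a Service named nginx-ingress-lb.

    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: nginx-ingress-lb
      name: nginx-ingress-lb
      namespace: kube-system
    spec:
      externalTrafficPolicy: Local
      ports:
      - name: http
        port: 80
        protocol: TCP
        targetPort: 80
      - name: https
        port: 443
        protocol: TCP
        targetPort: 443
      selector:
        app: ingress-nginx
      type: LoadBalancer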

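Scenario 3: What do I do if I delete a business-related SLB instance by mistake?

If you no longer need the Service that corresponds to the SLB instance, delete the Service.

If you still need the Service, do the following.

Log on to the Container Service management console. In the left-side navigation pane, choose Clusters.

On the Clusters page, click the name of the target cluster, and then open the Services page from the left-side navigation pane.

From the Namespace drop-down list at the top of the Services page, click All Namespaces, and then find the business Service in the Service list.

In the Actions column of the target Service, click Edit YAML, remove the status content, and then click Update so that CCM rebuilds the SLB instance.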

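How does an earlier CCM version support renaming an SLB instance?

SLB instances created by Cloud Controller Manager versions later than V1.9.3.10 are tagged automatically and can therefore be renamed. For SLB instances created by V1.9.3.10 or earlier, you must manually add a specific tag to the SLB instance to support renaming.

Only SLB instances created by Cloud Controller Manager V1.9.3.10 or earlier need the manual tag to support renaming.

The Service type must be LoadBalancer.

1. Log on to a Master node of the Kubernetes cluster. See Obtain the cluster KubeConfig and connect to the cluster by using kubectl.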

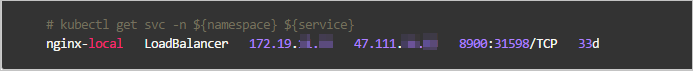

2. Run kubectl get svc -n ${namespace} ${service} to view the type and IP address of the Service.

Note: Replace namespace and service with the namespace and Service name in your cluster.

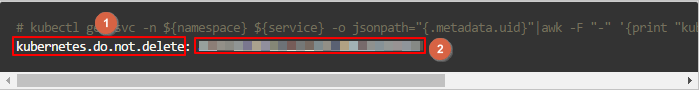

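3. Run the following command to generate the tag required by the SLB instance.

    kubectl get svc -n ${namespace} ${service} -o jsonpath="{.metadata.uid}"|awk -F "-" '{print "kubernetes.do.not.delete: "substr("a"$1$2$3$4$5,1,32)}'

4. Log on to the SLB console and, using the IP address obtained in step 2, find the SLB instance in its region.

5. Add a tag to the SLB instance using the Key and Value generated in step 3 (1 and 2 in the preceding figure, respectively). For details, see Create and manage CLB instances.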
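To make the tag format easier to follow, the awk expression from step 3 can be reproduced in Python. This is a sketch that mirrors only what the command above does (split the Service UID on "-", prefix the first five fields with "a", keep the first 32 characters). The function name and the sample UID are hypothetical.

```python
def slb_rename_tag(service_uid: str) -> tuple[str, str]:
    """Return the (Key, Value) tag pair that the awk command prints."""
    fields = service_uid.split("-")
    # awk: substr("a" $1 $2 $3 $4 $5, 1, 32)
    value = ("a" + "".join(fields[:5]))[:32]
    return "kubernetes.do.not.delete", value


# Hypothetical UID in the usual 8-4-4-4-12 layout.
key, value = slb_rename_tag("12345678-1234-1234-1234-123456789abc")
print(f"{key}: {value}")
# kubernetes.do.not.delete: a12345678123412341234123456789ab
```

Because the "a" prefix takes one of the 32 characters, the last character of the UID is dropped from the Value.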

How are Node weights set automatically in Local mode?

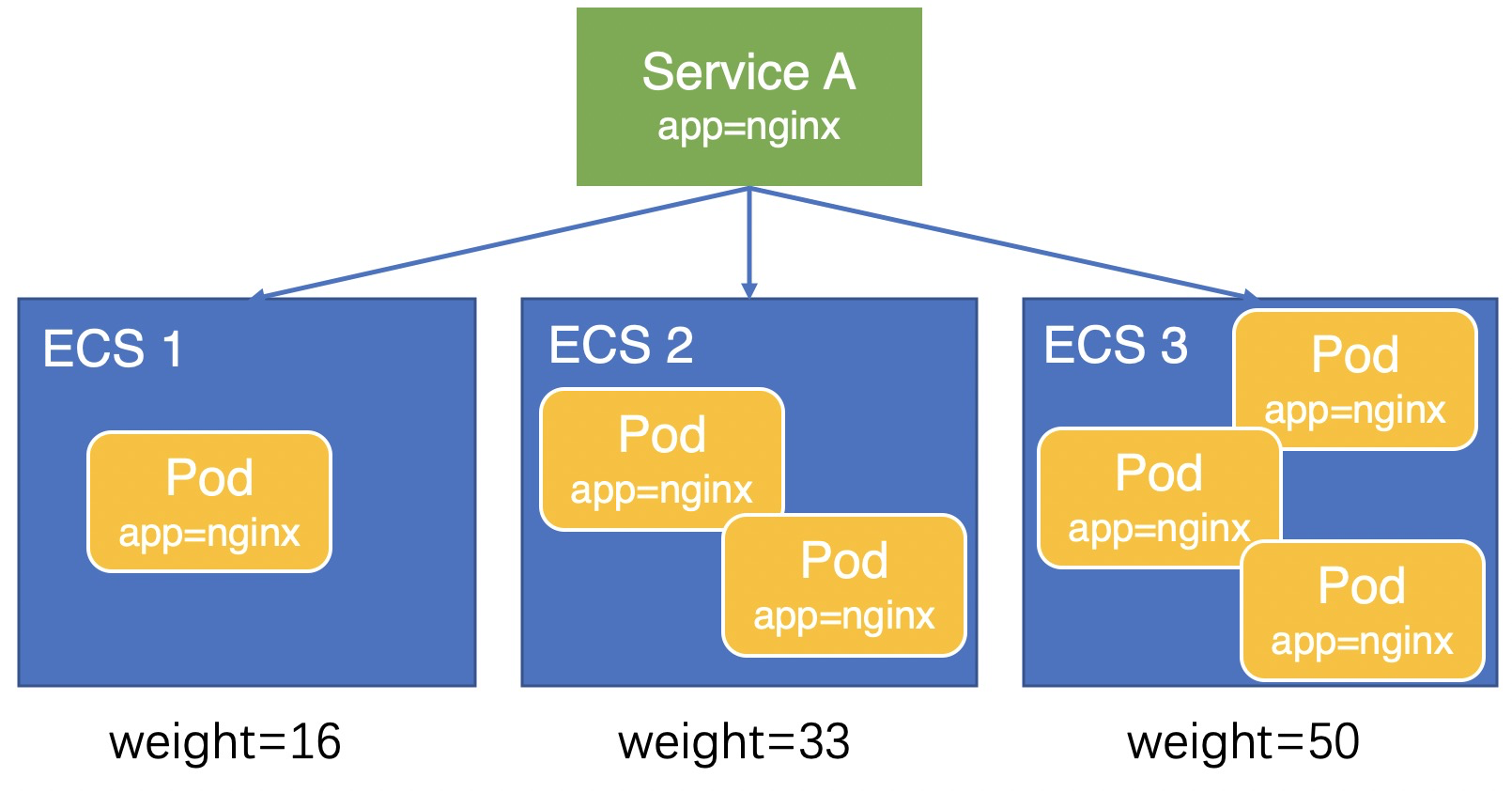

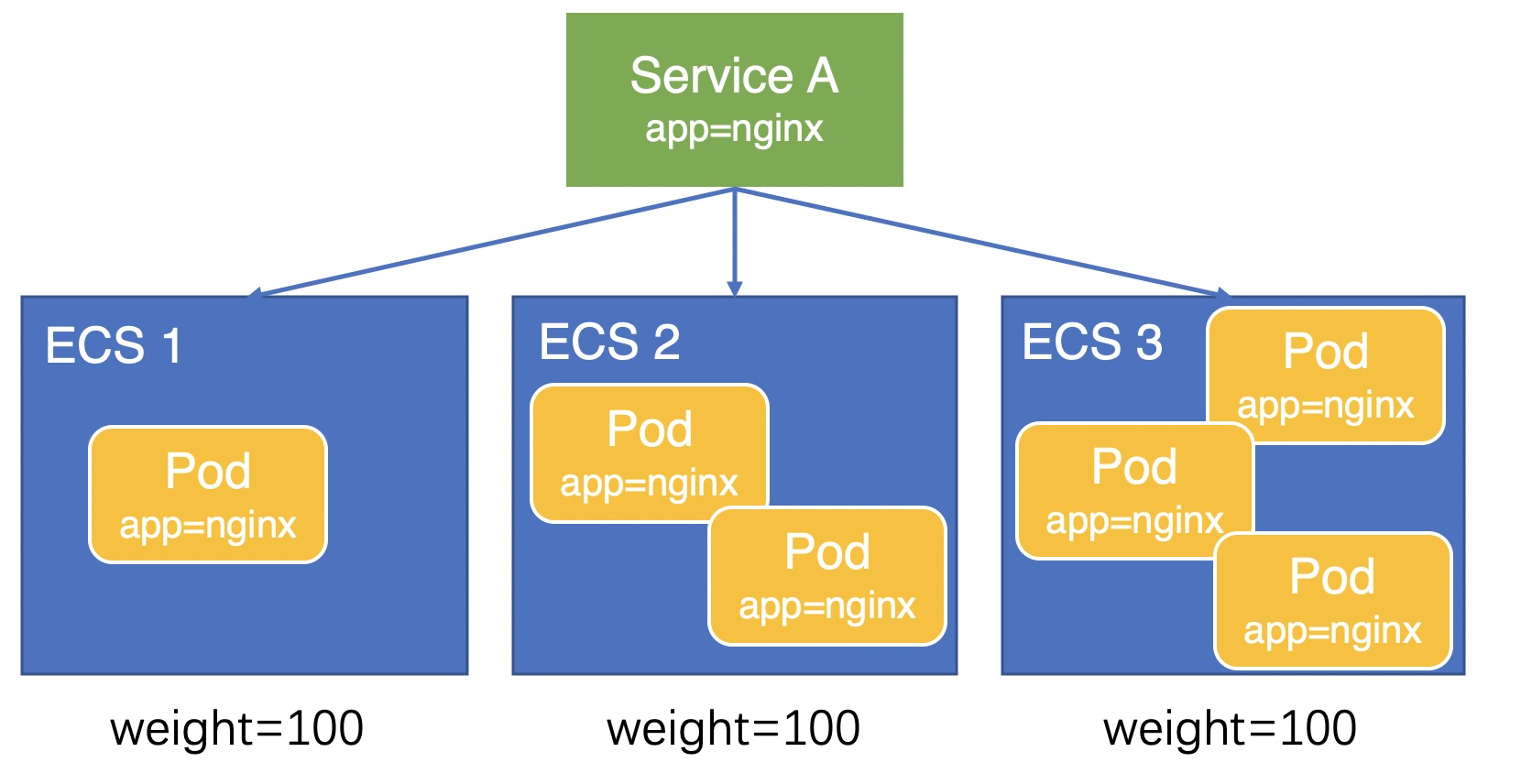

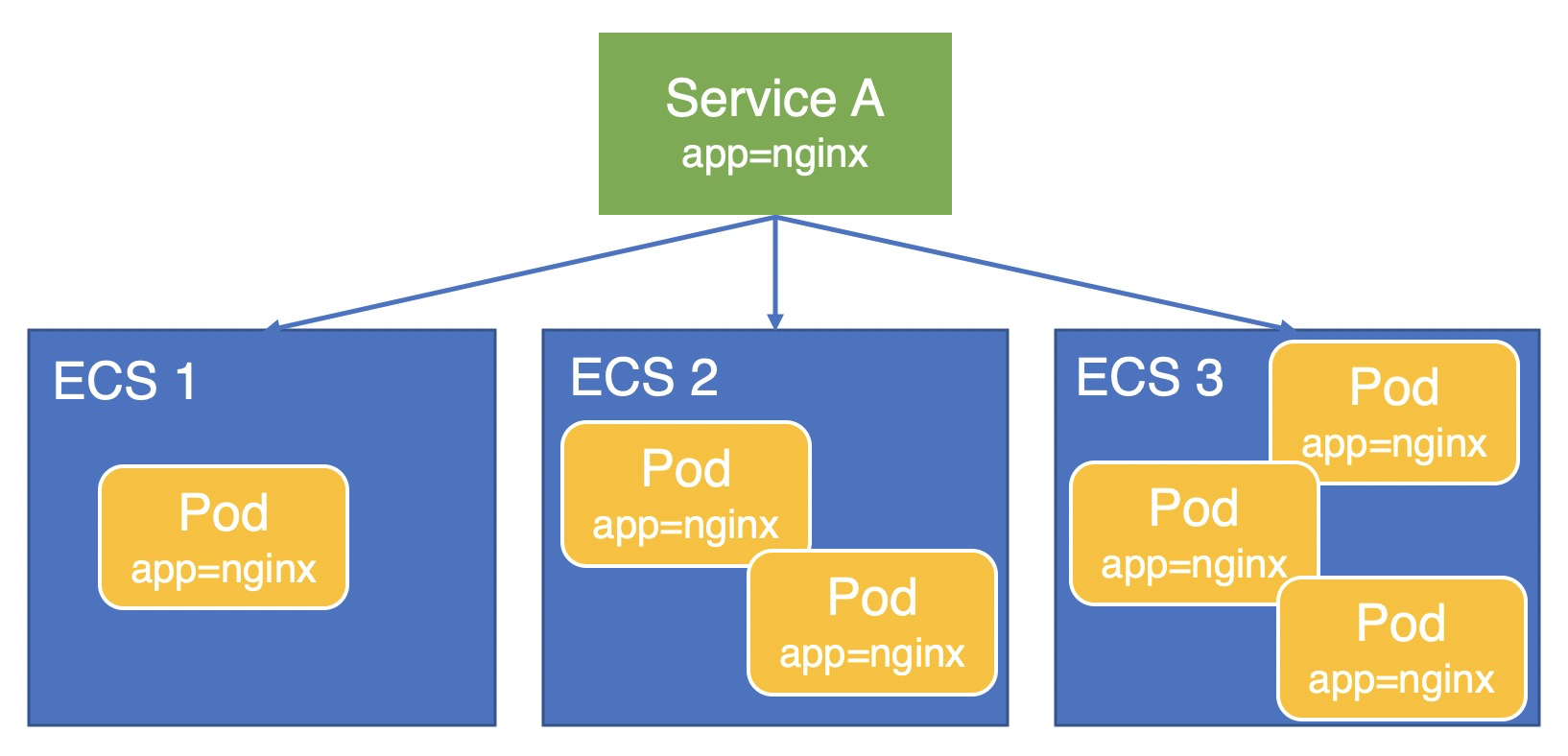

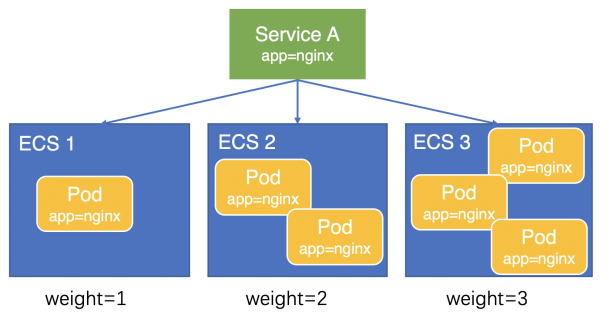

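This section uses business Pods (app=nginx) that are deployed on three ECS instances and exposed through Service A as an example to explain how Node weights are calculated in Local mode.

V1.9.3.276-g372aa98-aliyun and later

Because of precision issues in the earlier weight calculation, slight load imbalance could still exist among Pods. In V1.9.3.276-g372aa98-aliyun and later, CCM sets the weight of a Node to the number of Pods deployed on it. As shown in the following figure, the weights of the three ECS instances are 1, 2, and 3, and traffic is distributed to them at a ratio of 1:2:3, so the Pod load is more balanced.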

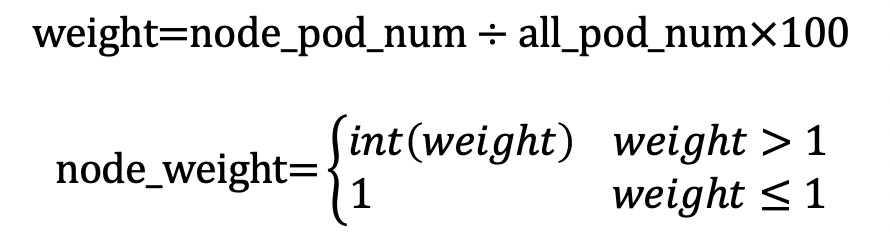

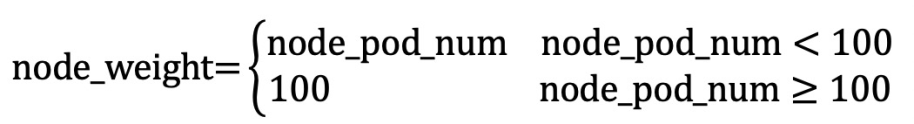
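The rule for V1.9.3.276-g372aa98-aliyun and later (Node weight equals the number of Pods on the Node) can be sketched as follows. This is an illustration of the described behavior, not CCM code. The function names and the ECS names are hypothetical.

```python
from collections import Counter


def node_weights(pod_nodes: list[str]) -> dict[str, int]:
    """Map each Node to its weight: the number of backend Pods it hosts."""
    return dict(Counter(pod_nodes))


def traffic_share(weights: dict[str, int]) -> dict[str, float]:
    """Return the fraction of traffic each Node receives for the given weights."""
    total = sum(weights.values())
    return {node: weight / total for node, weight in weights.items()}


# Hypothetical placement: one entry per Pod, naming the ECS instance it runs on.
pods = ["ecs-1", "ecs-2", "ecs-2", "ecs-3", "ecs-3", "ecs-3"]
weights = node_weights(pods)
print(weights)                 # {'ecs-1': 1, 'ecs-2': 2, 'ecs-3': 3}
print(traffic_share(weights))  # ecs-3 receives half of the traffic
```

With weights 1, 2, and 3, each of the six Pods ends up with roughly one sixth of the traffic, which is the balancing effect described above.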

The formula is as follows:

Versions later than V1.9.3.164-g2105d2e-aliyun and earlier than V1.9.3.276-g372aa98-aliyun

Versions earlier than V1.9.3.164-g2105d2e-aliyun

How do I query the IP address, name, and type of all SLB instances in a cluster?

Run the following command to obtain the name, IP address, and address type of every LoadBalancer Service in all namespaces.

    kubectl get services -A -ojson | jq '.items[] | select(.spec.type == "LoadBalancer") | {name: .metadata.name, namespace: .metadata.namespace, ip: .status.loadBalancer.ingress[0].ip, lb_type: .metadata.annotations."service.beta.kubernetes.io/alibaba-cloud-loadbalancer-address-type"}'

Expected output:

    {
      "name": "test",
      "namespace": "default",
      "ip": "192.168.*.*",
      "lb_type": "intranet"
    }
    {
      "name": "nginx-ingress-lb",
      "namespace": "kube-system",
      "ip": "47.97.*.*",
      "lb_type": null
    }
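If jq is not available, the same filter can be applied to the JSON output in Python. The sketch below is an assumption-laden equivalent, not an official tool: the function name and the sample data are hypothetical, and the input is expected to have the shape produced by kubectl get services -A -o json.

```python
import json

ADDRESS_TYPE = "service.beta.kubernetes.io/alibaba-cloud-loadbalancer-address-type"


def list_load_balancers(services_json: str) -> list[dict]:
    """Return name, namespace, IP, and address type of each LoadBalancer Service."""
    rows = []
    for item in json.loads(services_json)["items"]:
        if item["spec"].get("type") != "LoadBalancer":
            continue
        # ingress can be missing or empty while the SLB is still being created.
        ingress = item.get("status", {}).get("loadBalancer", {}).get("ingress") or [{}]
        annotations = item["metadata"].get("annotations") or {}
        rows.append({
            "name": item["metadata"]["name"],
            "namespace": item["metadata"]["namespace"],
            "ip": ingress[0].get("ip"),
            "lb_type": annotations.get(ADDRESS_TYPE),  # None when not annotated
        })
    return rows


# Hypothetical sample in the shape of `kubectl get services -A -o json`.
sample = json.dumps({"items": [
    {"metadata": {"name": "test", "namespace": "default",
                  "annotations": {ADDRESS_TYPE: "intranet"}},
     "spec": {"type": "LoadBalancer"},
     "status": {"loadBalancer": {"ingress": [{"ip": "192.168.0.10"}]}}},
    {"metadata": {"name": "kubernetes", "namespace": "default"},
     "spec": {"type": "ClusterIP"}, "status": {"loadBalancer": {}}},
    {"metadata": {"name": "nginx-ingress-lb", "namespace": "kube-system"},
     "spec": {"type": "LoadBalancer"},
     "status": {"loadBalancer": {"ingress": [{"ip": "203.0.113.7"}]}}},
]})
for row in list_load_balancers(sample):
    print(row)
```

In practice the input string would come from the kubectl command rather than from the inline sample.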

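How do I make sure the LB gracefully drains existing connections when the backends of a LoadBalancer Service are replaced?

You can configure connection draining through the annotations service.beta.kubernetes.io/alibaba-cloud-loadbalancer-connection-drain and service.beta.kubernetes.io/alibaba-cloud-loadbalancer-connection-drain-timeout. After a backend is removed from the Service, the LB keeps processing existing connections for the drain-timeout period. For details, see Configure connection draining for a listener.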

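FAQ about reusing existing SLB instances

Why doesn't reusing an existing SLB instance take effect?

Check the CCM version. CCM versions earlier than v1.9.3.105-gfd4e547-aliyun do not support reuse. For how to view and upgrade the CCM version, see Upgrade the CCM component.

Check whether the SLB instance to be reused was created by the cluster. SLB instances created by the cluster cannot be reused.

Check whether the SLB instance is the API Server SLB instance. The API Server SLB instance cannot be reused.

For a private SLB instance, check whether it is in the same VPC as the cluster. SLB instances cannot be reused across VPCs.

Why are no listeners configured when I reuse an existing SLB instance?

Check whether the annotation service.beta.kubernetes.io/alibaba-cloud-loadbalancer-force-override-listeners is set to "true". If it is not, listeners are not created automatically.

By default, reusing an existing load balancer does not overwrite its existing listeners, for the following two reasons:

If services are bound to the listeners of the existing load balancer, a forced overwrite may interrupt those services.

CCM currently supports a limited set of backend configurations and cannot handle some complex ones. If you need a complex backend configuration, you can configure the listeners yourself in the console without overwriting them.

For these reasons, we do not recommend forcing an overwrite of listeners. You can force an overwrite if the listener ports of the existing load balancer are no longer in use.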

Others

What do I do if the CCM upgrade fails?

For solutions to a failed CCM component upgrade, see Cloud Controller Manager (CCM) component upgrade check failures.

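Service error messages and solutions

The solutions for different error messages are shown in the following table.

| Error message | Description and solution |
| --- | --- |
|  | The backend server quota of the CLB instance is insufficient. Solution: You can optimize quota consumption in the following ways. |
|  | Shared-resource CLB instances do not support ENI. Solution: If the CLB backend uses ENI, choose a high-performance CLB instance. Add the annotation to the Service. Important: Make sure the annotation meets the CCM version requirement. For the mapping between annotations and CCM versions, see Configure Classic Load Balancer (CLB) by using annotations. |
|  | The CLB instance has no backend servers. Confirm that the Service is associated with Pods and that the Pods are running normally. Solution: |
|  | The CLB instance cannot be associated based on the Service. Solution: Log on to the SLB console and, based on the Service's |
|  | The account has overdue payments. |
|  | The account balance is insufficient. |
|  | CLB OpenAPI throttling. Solution: |
|  | The listener associated with the vServer group cannot be deleted. Solution: |
|  | When an internal CLB instance is reused, the CLB instance and the cluster are not in the same VPC. Solution: Make sure that your CLB instance and the cluster are in the same VPC. |
|  | The vSwitch is insufficient. Solution: By using |
|  | ENI mode does not support the String type of Solution: In the Service YAML, change the |
|  | Earlier CCM versions create shared-resource CLB instances by default, but this type of CLB instance is no longer sold. Solution: Upgrade the CCM component. |
|  | The resource group of a CLB instance cannot be changed after the instance is created. Solution: Remove the annotation from the Service |
|  | The specified ENI IP address cannot be found in the VPC. Solution: Check whether the Service is configured with the annotation |
|  | The billing method of a CLB instance cannot be changed from pay-as-you-go to pay-by-specification. Solution: |
|  | A CLB instance created by CCM is reused. Solution: |
|  | The type of a CLB instance cannot be changed after creation. Changing the CLB type after the Service is created causes this error. Solution: Delete and recreate the Service. |
|  | The Service is already bound to a CLB instance and cannot be bound to another one. Solution: This is not supported by changing |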

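How do I configure listeners for a Service of the NodePort type?

CCM configures listeners only for Services of the LoadBalancer type. Change the Service type from NodePort to LoadBalancer.

How do I access a Service of the NodePort type?

From within the cluster (on a cluster node), you can access the Service through ClusterIP+Port or through the node IP+the NodePort of the Service. By default, NodePort values are 30000 or higher.

From outside the cluster (outside the cluster nodes), you can access the Service through the node IP+the NodePort of the Service. By default, NodePort values are 30000 or higher.

From outside the VPC (another VPC or the Internet), publish a Service of the LoadBalancer type and access it through the external endpoint of the Service.

Note: If the external traffic policy of your Service is set to Local, make sure that a backend Pod of the Service exists on the node being accessed. For more information about external traffic policies, see External traffic policies.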

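How do I configure the NodePort range correctly?

In Kubernetes, the API server provides the ServiceNodePortRange parameter (command-line flag --service-node-port-range), which limits the range of NodePort ports that Services of the NodePort or LoadBalancer type listen on at the nodes. The default value is 30000~32767. In an ACK Pro cluster, you can change the range by customizing the control plane parameters. For details, see Customize the control plane parameters of an ACK Pro cluster.

Be very careful when you modify the NodePort range. Make sure that the NodePort range does not conflict with the port range in the Linux kernel parameter net.ipv4.ip_local_port_range on the cluster nodes. This kernel parameter controls the range of local port numbers that any application on the Linux system can use. Its default value is 32768~60999.

With the default configuration of an ACK cluster, ServiceNodePortRange and ip_local_port_range do not conflict. If you previously adjusted either parameter to raise the port limit and the two ranges now overlap, nodes may experience intermittent network errors, which in severe cases lead to failed business health checks and cluster nodes going offline. We recommend that you restore the default values or adjust both ranges so that they do not overlap at all.

After you adjust the ranges, some NodePort or LoadBalancer Services in the cluster may still use ports within the ip_local_port_range range as NodePorts. Reconfigure these Services to avoid conflicts. You can run kubectl edit svc {your-svc-name} and change the value of the spec.ports.nodePort field to an unoccupied NodePort.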
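The overlap condition described above is easy to check before changing either setting. The following sketch assumes both ranges are given as inclusive (low, high) tuples. The function names are hypothetical, and the example NodePort values are made up.

```python
def ranges_overlap(a: tuple[int, int], b: tuple[int, int]) -> bool:
    """Return True if two inclusive port ranges share at least one port."""
    return a[0] <= b[1] and b[0] <= a[1]


def conflicting_node_ports(node_ports: list[int], local_range: tuple[int, int]) -> list[int]:
    """Return the NodePorts that fall inside the local port range."""
    low, high = local_range
    return [port for port in node_ports if low <= port <= high]


DEFAULT_NODE_PORT_RANGE = (30000, 32767)   # default --service-node-port-range
DEFAULT_LOCAL_PORT_RANGE = (32768, 60999)  # default net.ipv4.ip_local_port_range

print(ranges_overlap(DEFAULT_NODE_PORT_RANGE, DEFAULT_LOCAL_PORT_RANGE))  # False
print(ranges_overlap((30000, 40000), DEFAULT_LOCAL_PORT_RANGE))           # True
print(conflicting_node_ports([30080, 35000], DEFAULT_LOCAL_PORT_RANGE))   # [35000]
```

The last call shows the follow-up task from the paragraph above: any Service whose NodePort is reported here needs a new spec.ports.nodePort value.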

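How do I add the required permissions when upgrading the CCM component to a new version in an ACK dedicated cluster?

To improve performance, newer CCM versions have gradually introduced Alibaba Cloud APIs that require additional RAM permissions (for example, route management for Flannel networks in v2.11.2 and batch management for NLB in v2.12.1).

Therefore, before you upgrade the component to v2.11.2 or later in an ACK dedicated cluster, grant the required permissions to its RAM role so that the component works properly.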

登入Container Service管理主控台,在左側導覽列選擇叢集列表。

在叢集列表頁面,單擊目的地組群名稱,然後在左側導覽列,選擇叢集資訊。

單擊基本資料頁簽,在叢集資源地區,單擊Master RAM 角色對應的角色名稱。

在存取控制控制台的角色詳情頁面,單擊許可權管理,在已有授權中定位以

k8sMasterRolePolicy-Ccm-開頭的自訂策略,單擊策略名稱稱進入權限原則管理頁面。對於較早建立的叢集,可能不存在上述策略。可選擇以

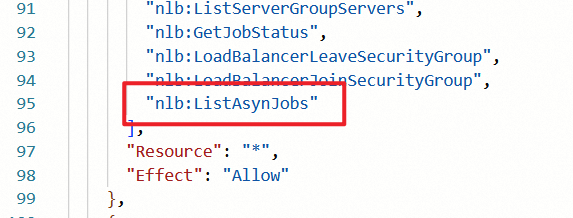

k8sMasterRolePolicy-開頭的自訂策略。單擊編輯策略,在NLB相關的許可權中,添加

nlb:ListAsynJobs許可權。

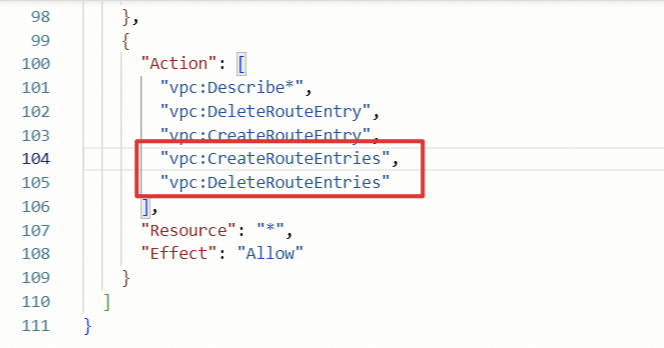

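For a Flannel cluster, also add vpc:CreateRouteEntries and vpc:DeleteRouteEntries to the VPC-related permissions.

After you add the permissions, follow the on-screen instructions to submit the policy. After the change is saved, you can upgrade the CCM component as usual.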
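For readers who prepare the policy change as JSON before pasting it into the console, the additions above can be sketched as a merge into existing policy statements. The action names come from the steps above. Everything else is an assumption for illustration: the helper name, the existing statements, and the placeholder actions they contain are hypothetical and do not reflect the real content of k8sMasterRolePolicy-Ccm-.

```python
import json

NLB_ACTIONS = ["nlb:ListAsynJobs"]
FLANNEL_VPC_ACTIONS = ["vpc:CreateRouteEntries", "vpc:DeleteRouteEntries"]


def add_actions(statement: dict, actions: list[str]) -> dict:
    """Return a copy of a policy statement with the actions added once each."""
    merged = list(statement.get("Action", []))
    for action in actions:
        if action not in merged:
            merged.append(action)
    return {**statement, "Action": merged}


# Hypothetical existing statements; the placeholder actions are not real API names.
nlb_statement = {"Effect": "Allow", "Action": ["nlb:ExampleExistingAction"], "Resource": ["*"]}
vpc_statement = {"Effect": "Allow", "Action": ["vpc:ExampleExistingAction"], "Resource": ["*"]}

is_flannel = True  # set to False for clusters that do not use Flannel
statements = [add_actions(nlb_statement, NLB_ACTIONS)]
statements.append(add_actions(vpc_statement, FLANNEL_VPC_ACTIONS) if is_flannel else vpc_statement)
print(json.dumps(statements, indent=2))
```

The merge is idempotent, so running it against a policy that already contains the actions leaves the statement unchanged.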