PyODPS は、DataWorks などのデータ開発プラットフォームでデータ開発ノードとして呼び出すことができます。これらのプラットフォームは、PyODPS を実行するための環境を提供し、ノードのスケジュールと実行を可能にします。 MaxCompute エントリオブジェクトを手動で作成する必要はありません。 pandas と同様に、PyODPS は高速で柔軟性があり、表現力豊かなデータ構造を提供します。 PyODPS のデータ処理機能は pandas のデータ処理機能に似ています。 PyODPS が提供する DataFrame API を呼び出すことで、PyODPS のデータ処理機能を使用できます。このトピックでは、DataWorks コンソールでプロジェクトに PyODPS を使用する方法について説明します。

前提条件

DataWorks ワークスペースが作成され、MaxCompute 計算リソースにアタッチされている。

手順

PyODPS ノードを作成します。

このセクションでは、DataWorks コンソールで PyODPS 3 ノードを作成する方法について説明します。詳細については、「PyODPS 3 タスクを開発する」をご参照ください。

説明PyODPS 3 ノードを例として使用します。 PyODPS 3 ノードの Python バージョンは 3.7 です。

各 PyODPS ノードは最大 50 MB のデータを処理でき、最大 1 GB のメモリを占有できます。それ以外の場合、DataWorks は PyODPS ノードを終了します。 PyODPS ノードで大量のデータを処理する Python コードを記述しないことをお勧めします。

DataWorks でコードを記述およびデバッグするのは非効率的です。コードを記述するために、ローカルに IntelliJ IDEA をインストールすることをお勧めします。

ワークフローを作成します。

DataWorks コンソールにログインし、DataStudio ページに移動します。ページの左側で、[ビジネスフロー] を右クリックし、[ワークフローの作成] をクリックします。

PyODPS 3 ノードを作成します。

作成したワークフローを見つけ、ワークフローの名前を右クリックして、 を選択します。 [ノードの作成] ダイアログボックスで、[ノード名] を指定し、[コミット] をクリックします。

PyODPS 3 ノードを設定して実行します。

PyODPS 3 ノードのコードを記述します。

PyODPS 3 ノードのコードエディタにテストコードを記述します。この例では、テーブル操作の全範囲を含む次のコードをコードエディタに記述します。テーブル操作と SQL 操作の詳細については、「テーブル」および「SQL」をご参照ください。

from odps import ODPS # my_new_table という名前の非パーティションテーブルを作成します。非パーティションテーブルには、指定されたデータ型のフィールドと指定された名前のフィールドが含まれています。 # DataWorks の各 PyODPS ノードには、MaxCompute エントリであるグローバル変数 odps または o が含まれています。エントリを定義せずに使用できます。詳細については、「DataWorks で PyODPS を使用する」を参照してください。 table = o.create_table('my_new_table', 'num bigint, id string', if_not_exists=True) # my_new_table テーブルにデータを書き込みます。 records = [[111, 'aaa'], [222, 'bbb'], [333, 'ccc'], [444, 'Chinese']] o.write_table(table, records) # my_new_table テーブルからデータを読み取ります。 for record in o.read_table(table): print(record[0],record[1]) # SQL ステートメントを実行して、my_new_table テーブルからデータを読み取ります。 result = o.execute_sql('select * from my_new_table;',hints={'odps.sql.allow.fullscan': 'true'}) # SQL ステートメントの実行結果を取得します。 with result.open_reader() as reader: for record in reader: print(record[0],record[1]) # テーブルを削除します。 table.drop()コードを実行します。

コードを記述した後、上部のツールバーにある

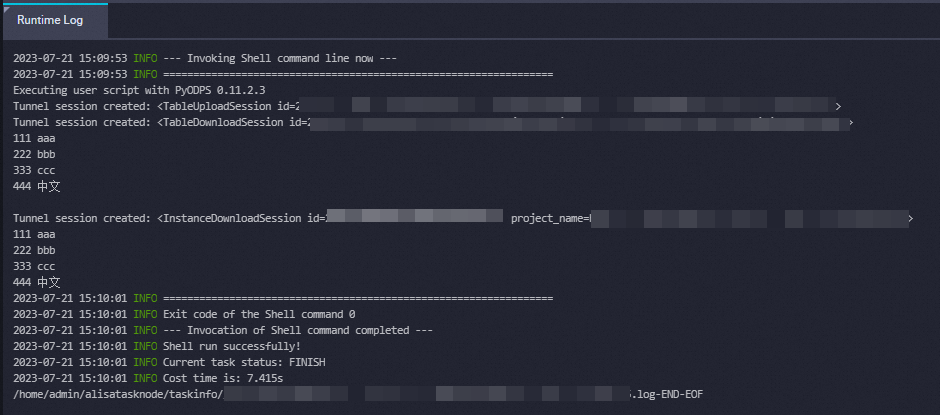

アイコンをクリックします。コードが実行された後、[ランタイムログ] タブで PyODPS 3 ノードの実行結果を表示できます。次の図の結果は、コードが正常に実行されたことを示しています。

アイコンをクリックします。コードが実行された後、[ランタイムログ] タブで PyODPS 3 ノードの実行結果を表示できます。次の図の結果は、コードが正常に実行されたことを示しています。