Synchronize data to Function Compute

1. Create a function in Function Compute

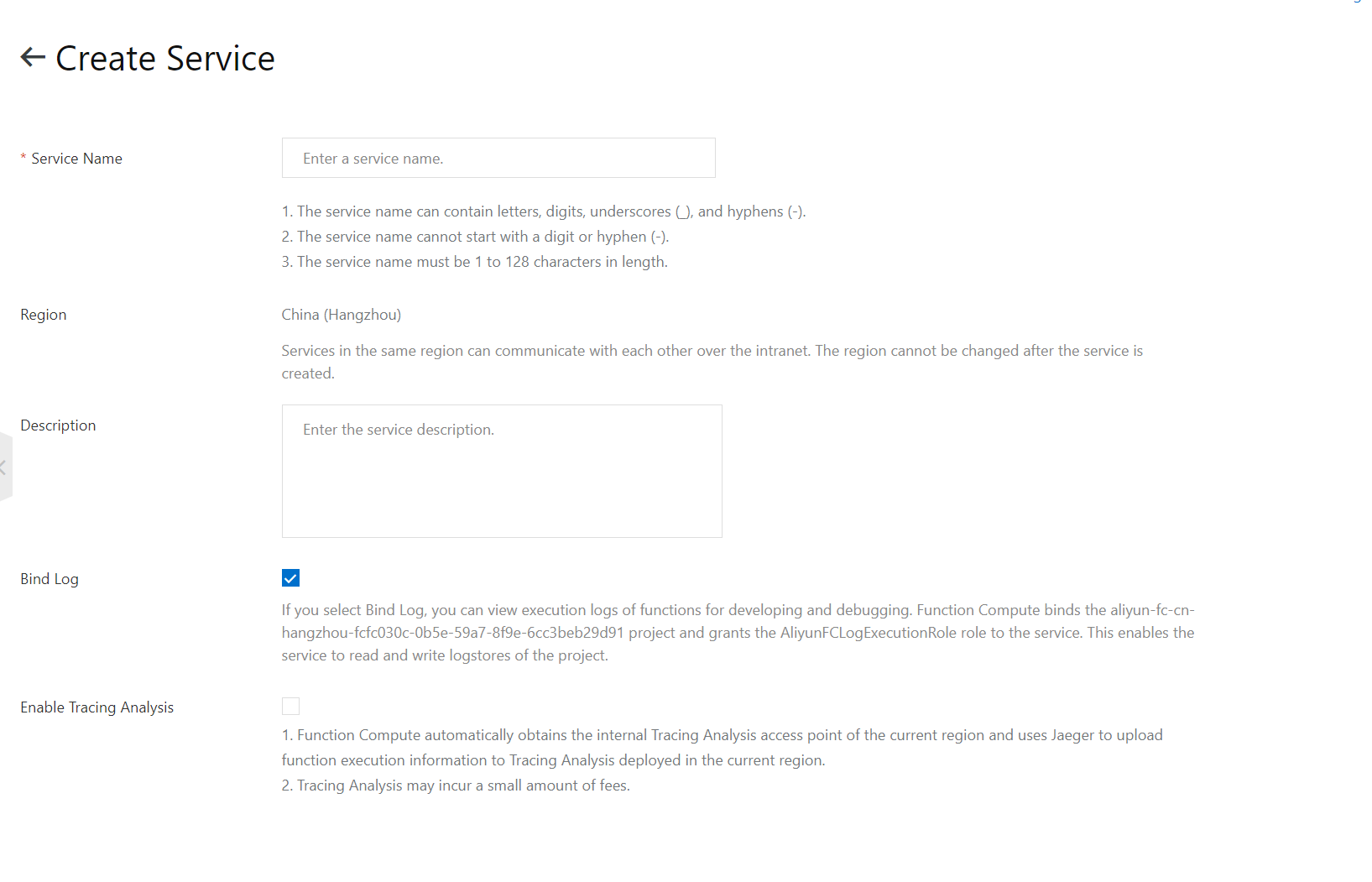

1.1 Create a service

Create a service in the [Function Compute console]. If you have already created a service, skip this step.

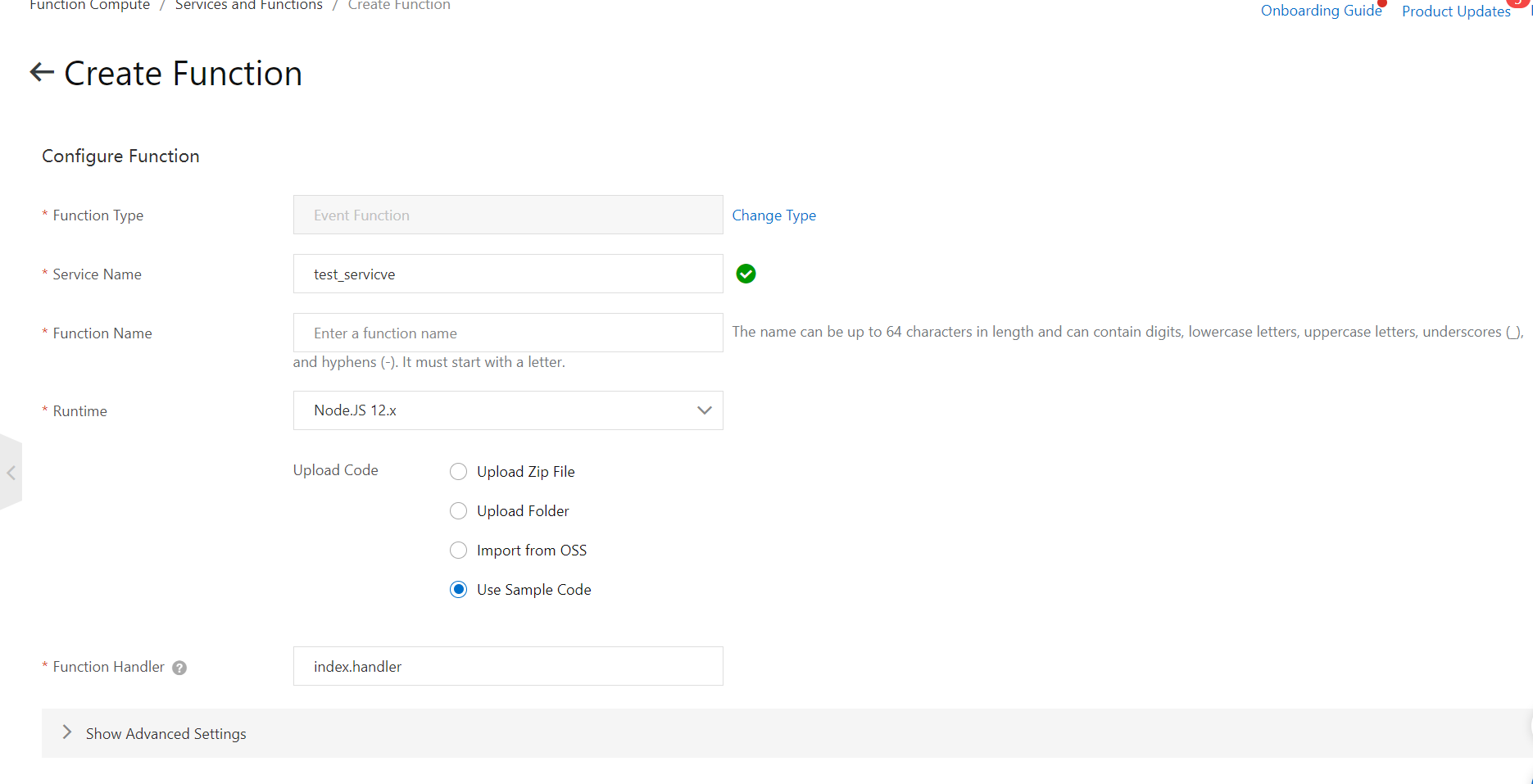

1.2 Create a function

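On the [Services and Functions] page, click the service that you created and then click [Create Function]. Upload your own code or use the sample function code, keep the default values of the trigger parameters, and then click [Create].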
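If you upload your own code instead of using the sample, the function only needs an entry point that accepts the event DataHub sends (its structure is described in section 5). The following is a minimal sketch for the Python runtime. It assumes the standard `handler(event, context)` entry point and that the event arrives as a JSON document in bytes; the per-record processing is a placeholder, not part of the DataHub integration.

```python
import json


def handler(event, context):
    """Minimal Function Compute entry point (illustrative sketch).

    Assumption: the runtime passes the DataHub event as JSON bytes or str.
    `context` is not used here.
    """
    if isinstance(event, (bytes, bytearray)):
        event = event.decode("utf-8")
    payload = json.loads(event)
    records = payload.get("records", [])
    for record in records:
        # Placeholder processing: replace with your own logic.
        print(record["eventId"], record["data"])
    # Returning normally reports success; an unhandled exception
    # makes the invocation fail.
    return len(records)
```

Keeping the handler small makes it easy to see which failures come from your own processing logic rather than from event decoding.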

2. Create a service-linked role for DataHub

When you use temporary access credentials obtained from Security Token Service (STS), the service-linked role for DataHub is created automatically. DataHub then uses this role to synchronize data to Function Compute.

3. Create a DataHub topic

For more information, see Manage topics.

4. Create a DataConnector

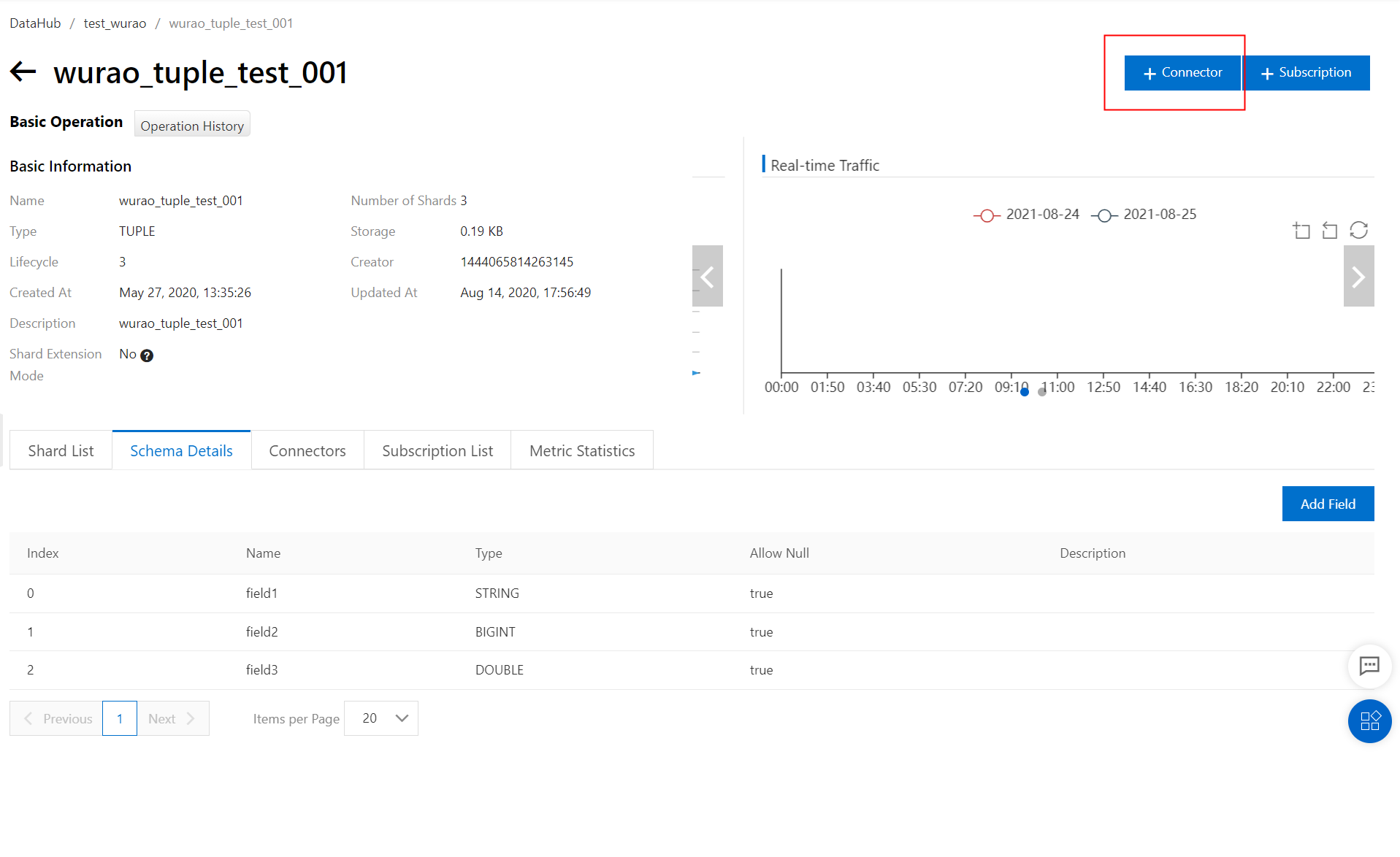

4.1 Go to the details page of the topic

4.2 Click [Function Compute]

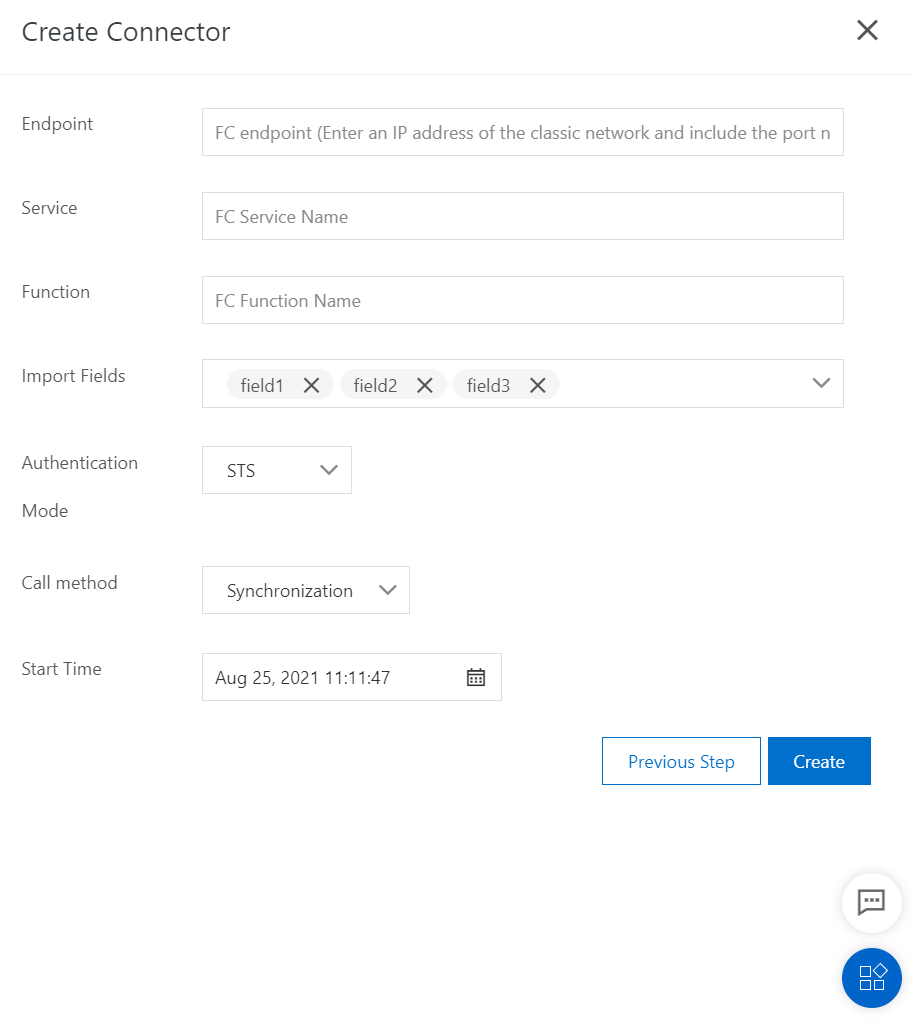

4.3 Configure the parameters as required

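Endpoint: the endpoint of Function Compute. You must enter an internal endpoint in the following format:

https://<Alibaba Cloud account ID>.fc.<region ID>-internal.aliyuncs.com

For example, the internal endpoint used to access Function Compute in the China (Shanghai) region is https://12423423992.fc.cn-shanghai-internal.aliyuncs.com. For more information, see Endpoints.

Service: the name of the service to which the destination function belongs.

Function: the name of the destination function.

Start time: the time from which topic data starts to be synchronized to Function Compute.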
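Because the DataConnector accepts only an internal endpoint, it can help to build and check the value before entering it in the console. The sketch below is hypothetical: `internal_fc_endpoint` and `is_internal_fc_endpoint` are illustrative names, and the pattern is derived from the China (Shanghai) example above rather than from an official specification.

```python
import re

# Pattern inferred from the China (Shanghai) example in this section
# (assumption, not an official grammar for endpoints).
_INTERNAL_ENDPOINT = re.compile(
    r"^https://(\d+)\.fc\.([a-z0-9-]+)-internal\.aliyuncs\.com$"
)


def internal_fc_endpoint(account_id: str, region_id: str) -> str:
    """Build the internal Function Compute endpoint for one region."""
    if not account_id.isdigit():
        raise ValueError("account_id must be a numeric Alibaba Cloud account ID")
    return f"https://{account_id}.fc.{region_id}-internal.aliyuncs.com"


def is_internal_fc_endpoint(endpoint: str) -> bool:
    """Return True if the endpoint matches the internal format."""
    return _INTERNAL_ENDPOINT.match(endpoint) is not None
```

For example, `internal_fc_endpoint("12423423992", "cn-shanghai")` reproduces the Shanghai endpoint shown above, while the same URL without `-internal` fails `is_internal_fc_endpoint`.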

5. Event data structure

The events that DataHub sends to Function Compute have the following structure:

{
  "eventSource": "acs:datahub",
  "eventName": "acs:datahub:putRecord",
  "eventSourceARN": "/projects/test_project_name/topics/test_topic_name",
  "region": "cn-hangzhou",
  "records": [
    {
      "eventId": "0:12345",
      "systemTime": 1463000123000,
      "data": "[\"col1's value\",\"col2's value\"]"
    },
    {
      "eventId": "0:12346",
      "systemTime": 1463000156000,
      "data": "[\"col1's value\",\"col2's value\"]"
    }
  ]
}

Parameters:

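eventSource: the source of the event. The value is acs:datahub.

eventName: the name of the event. If the data comes from DataHub, the value is acs:datahub:putRecord.

eventSourceARN: the identifier of the event source, which contains the project name and the topic name. Example: /projects/test_project_name/topics/test_topic_name.

region: the DataHub region to which the event belongs. Example: cn-hangzhou.

records: the records in the event. Each record contains the following fields:

eventId: the ID of the record, in the shardId:SequenceNumber format.

systemTime: the time when the record was written to DataHub, in milliseconds.

data: the data of the record. If the topic is of the TUPLE type, the value is a list in which each element is a string that corresponds to a field value in the topic. In the example above, this list is serialized as a JSON string. If the topic is of the BLOB type, the value is a string.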
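A handler usually needs to recover the shard ID and sequence number from eventId and the field values from data. The following sketch assumes, as in the sample event, that TUPLE data may arrive as a JSON-encoded list of strings, and that BLOB data is passed through unchanged; `split_event_id`, `decode_data`, and `parse_records` are hypothetical helper names.

```python
import json
from typing import Any, Dict, List, Tuple, Union


def split_event_id(event_id: str) -> Tuple[str, int]:
    """Split an eventId of the form shardId:SequenceNumber."""
    shard_id, sequence = event_id.split(":", 1)
    return shard_id, int(sequence)


def decode_data(data: Union[str, List[str]], tuple_topic: bool) -> Union[str, List[str]]:
    """Return field values for TUPLE topics and the raw string for BLOB topics.

    Assumption: a TUPLE list may be serialized as a JSON string, as in
    the sample event, so it is decoded only when it is a string.
    """
    if not tuple_topic:
        return data
    if isinstance(data, str):
        return json.loads(data)
    return data


def parse_records(event: Dict[str, Any], tuple_topic: bool) -> List[Dict[str, Any]]:
    """Flatten the records of one event into dictionaries."""
    parsed = []
    for record in event["records"]:
        shard_id, sequence = split_event_id(record["eventId"])
        parsed.append({
            "shard_id": shard_id,
            "sequence": sequence,
            "system_time_ms": record["systemTime"],
            "values": decode_data(record["data"], tuple_topic),
        })
    return parsed
```

Converting the sequence number to an integer keeps records from the same shard comparable in the order DataHub assigned.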

6. Usage notes

The endpoint used to access Function Compute must be an internal endpoint. The service and the function must already exist in Function Compute.

DataHub supports only synchronous function invocation. This ensures that data is processed in order.

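If an error occurs while the destination function is executing, DataHub retries the synchronization after 1 second. If the synchronization fails 512 times, it is suspended.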
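To make the retry rule concrete, the following sketch models it in Python. It is an illustrative simulation, not DataHub's implementation: `deliver_with_retry` and `SyncSuspended` are hypothetical names, and `sleep` is injectable so that the behavior can be tested without waiting.

```python
import time
from typing import Callable


class SyncSuspended(Exception):
    """Raised when the simulated synchronization gives up."""


def deliver_with_retry(
    invoke: Callable[[], None],
    max_failures: int = 512,
    retry_delay_s: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Simulate the documented retry rule (hypothetical model).

    Calls `invoke` until it succeeds, waiting `retry_delay_s` seconds
    between attempts, and raises SyncSuspended once `max_failures`
    attempts have failed. Returns the number of failures before success.
    """
    failures = 0
    while True:
        try:
            invoke()
            return failures
        except Exception as exc:
            failures += 1
            if failures >= max_failures:
                raise SyncSuspended(f"suspended after {failures} failures") from exc
            sleep(retry_delay_s)
```

Because a failed call is retried, records that a function partly processed before raising an error may be delivered again; keying side effects on eventId is one way to keep such retries harmless.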

You can go to the DataHub console to view information about the DataConnector, such as its status, synchronization offset, and detailed error messages.