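Service Mesh (ASM) is integrated with Managed Service for OpenTelemetry. Managed Service for OpenTelemetry provides a wide range of tools that help you locate performance bottlenecks in distributed applications. For example, you can use these tools to view trace data and trace topologies, analyze application dependencies, and count requests. This makes developing and troubleshooting distributed applications more efficient. This topic describes how to enable distributed tracing in ASM.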

Background information

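Distributed tracing can be used to profile and monitor applications, especially applications built on a microservices model. The sidecar proxies in an ASM instance can automatically generate and send spans. However, for the spans sent by the sidecar proxies to be correctly associated with a single trace, the application must propagate the appropriate HTTP headers: it must collect specific headers from each incoming request and forward them to every outgoing request that the incoming request triggers. OpenTelemetry supports multiple propagators, and each propagator uses different headers. The following lists show the propagators that OpenTelemetry supports and the corresponding headers.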

B3 propagator

- `x-request-id`
- `x-b3-traceid`
- `x-b3-spanid`
- `x-b3-parentspanid`
- `x-b3-sampled`
- `x-b3-flags`
- `x-ot-span-context`

W3C Trace Context propagator

- `traceparent`
- `tracestate`
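A W3C Trace Context `traceparent` value has the form `version-trace_id-parent_id-trace_flags`. The following Python sketch, which is not part of ASM or Bookinfo, parses and validates such a value. `parse_traceparent` and `TraceParent` are hypothetical names. For simplicity, the sketch accepts only version `00`, whereas the W3C specification asks parsers to attempt future versions as well.

```python
import re
from typing import NamedTuple, Optional

# Version-00 layout: version-trace_id-parent_id-trace_flags, all lowercase hex.
_TRACEPARENT_RE = re.compile(
    r"(?P<version>[0-9a-f]{2})-(?P<trace_id>[0-9a-f]{32})-"
    r"(?P<parent_id>[0-9a-f]{16})-(?P<flags>[0-9a-f]{2})"
)


class TraceParent(NamedTuple):
    trace_id: str
    parent_id: str
    sampled: bool


def parse_traceparent(value: str) -> Optional[TraceParent]:
    """Parse a version-00 traceparent header; return None if it is invalid."""
    match = _TRACEPARENT_RE.fullmatch(value.strip())
    # This sketch accepts only version 00.
    if match is None or match["version"] != "00":
        return None
    # An all-zero trace ID or parent ID is invalid.
    if set(match["trace_id"]) == {"0"} or set(match["parent_id"]) == {"0"}:
        return None
    flags = int(match["flags"], 16)
    return TraceParent(
        trace_id=match["trace_id"],
        parent_id=match["parent_id"],
        sampled=bool(flags & 0x01),  # bit 0 is the "sampled" flag
    )
```

For example, `parse_traceparent("00-4bf92f3577b34da6a3ce929d0e0e4736-00f067aa0ba902b7-01")` returns a `TraceParent` with `sampled=True`.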

By default, ASM instances of versions earlier than V1.18.0.124 use the B3 propagator, and ASM instances of V1.18.0.124 or later use the W3C Trace Context propagator.
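The version rule above can be expressed as a numeric, component-by-component comparison. This sketch assumes that ASM versions are dot-separated integers with an optional leading `v`, and it treats V1.18.0.124 itself as "or later". `default_propagator` is a hypothetical helper. It encodes only the default behavior stated above.

```python
def default_propagator(asm_version: str) -> str:
    """Return "w3c" or "b3", the default propagator for an ASM version.

    Assumes versions such as "1.18.0.124" or "v1.18.0.124" (dot-separated integers).
    """
    # Compare numerically, component by component, so that 1.9 < 1.18.
    parts = tuple(int(part) for part in asm_version.strip().lstrip("vV").split("."))
    return "w3c" if parts >= (1, 18, 0, 124) else "b3"
```

Comparing integer tuples instead of raw strings avoids the string-ordering pitfall where `"1.9"` would sort after `"1.18"`.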

Prerequisites

Managed Service for OpenTelemetry is activated for your Alibaba Cloud account. For more information about the billing of this service, see Billing rules.

An ASM instance is created, and Managed Service for OpenTelemetry is enabled for the instance. For more information, see Collect ASM tracing data to Managed Service for OpenTelemetry.

An application is deployed in an ACK cluster that is added to the ASM instance. For more information, see Deploy an application in an ACK cluster that is added to an ASM instance.

About the sample application

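In the Bookinfo book review application, the productpage service, which is implemented in Python, uses the OpenTracing library to obtain the required headers in the B3 propagation format and forward them with its HTTP requests.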

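```python
from opentracing.propagation import Format
from opentracing_instrumentation.request_context import get_current_span

# `tracer` is the module-level OpenTracing tracer, configured elsewhere
# in the productpage module to emit B3 headers.


def getForwardHeaders(request):
    headers = {}

    # x-b3-*** headers can be populated from the current OpenTracing span
    span = get_current_span()
    carrier = {}
    tracer.inject(
        span_context=span.context,
        format=Format.HTTP_HEADERS,
        carrier=carrier)

    headers.update(carrier)

    # ...

    # Other headers, such as x-request-id, are copied from the incoming request
    incoming_headers = ['x-request-id']

    # ...

    for ihdr in incoming_headers:
        val = request.headers.get(ihdr)
        if val is not None:
            headers[ihdr] = val

    return headers
```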

The reviews service, which is implemented in Java, also uses HTTP headers in the B3 propagation format.

```java
@GET
@Path("/reviews/{productId}")
public Response bookReviewsById(@PathParam("productId") int productId,
                                @HeaderParam("end-user") String user,
                                @HeaderParam("x-request-id") String xreq,
                                @HeaderParam("x-b3-traceid") String xtraceid,
                                @HeaderParam("x-b3-spanid") String xspanid,
                                @HeaderParam("x-b3-parentspanid") String xparentspanid,
                                @HeaderParam("x-b3-sampled") String xsampled,
                                @HeaderParam("x-b3-flags") String xflags,
                                @HeaderParam("x-ot-span-context") String xotspan) {
    if (ratings_enabled) {
        // Pass the incoming trace headers on to the call to the ratings service
        JsonObject ratingsResponse = getRatings(Integer.toString(productId), user, xreq, xtraceid, xspanid, xparentspanid, xsampled, xflags, xotspan);
        // ...
    }
    // ...
}
```

Access example

In the address bar of your browser, enter a URL in the format http://{IP address of the ingress gateway}/productpage and press Enter. The Bookinfo application page appears.

View applications

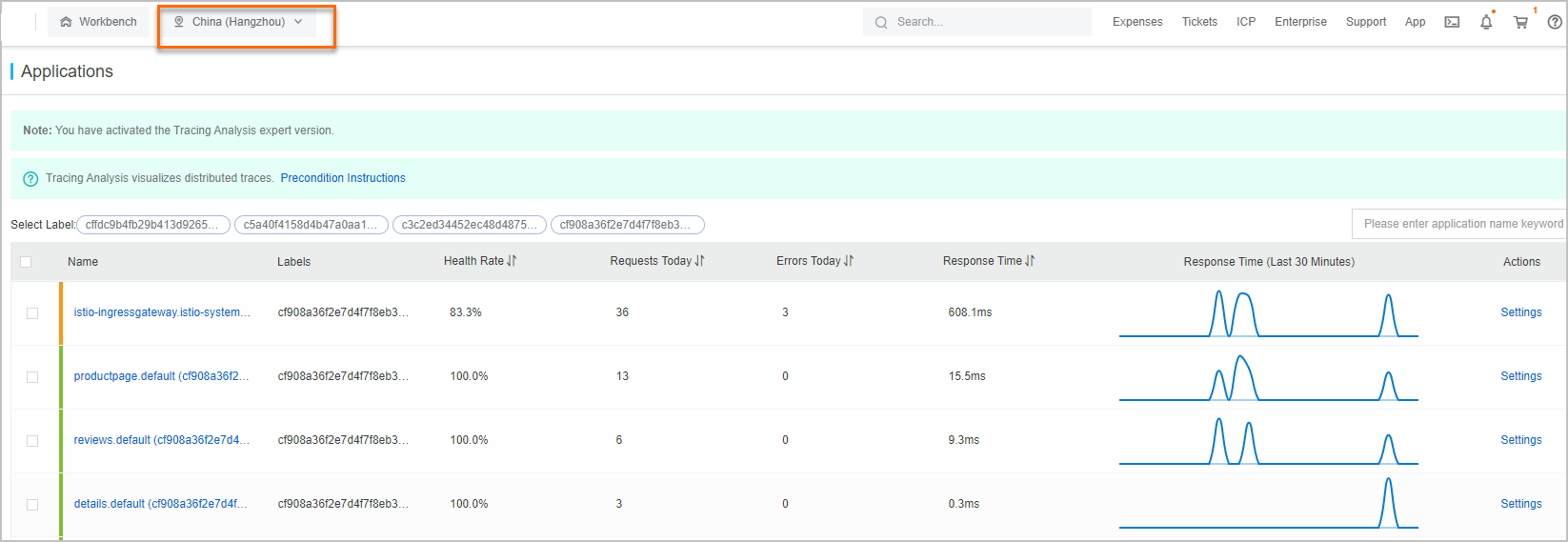

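On the [Applications] page of the Managed Service for OpenTelemetry console, you can view the key metrics of all monitored applications, such as the number of requests and errors on the current day and the health status. You can also add labels to applications so that you can filter applications by label.

Log on to the Managed Service for OpenTelemetry console.

In the left-side navigation pane, click [Applications]. At the top of the [Applications] page, select a region as needed.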

View application details

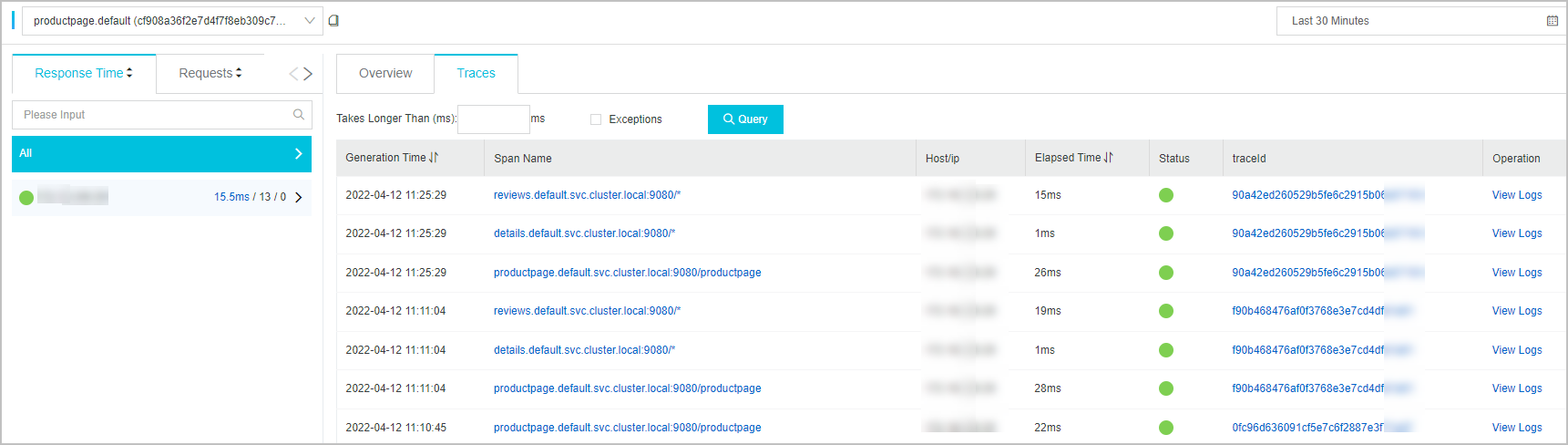

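On the [Application Details] page of an application in the Managed Service for OpenTelemetry console, you can view the key metrics, call topology, and traces of the application on each server where it is deployed.

Log on to the Managed Service for OpenTelemetry console.

In the left-side navigation pane, click [Applications]. At the top of the [Applications] page, select a region as needed. Then, click the name of the application that you want to view.

In the left-side navigation pane, click [Application Details]. Then, click [All], or select a server, identified by its IP address, from the server list on the left.

On the [Overview] tab, you can view the call topology and key metrics. You can also click the [Traces] tab to view the traces of the application on the selected server. Up to 100 traces are displayed, sorted by duration in descending order.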

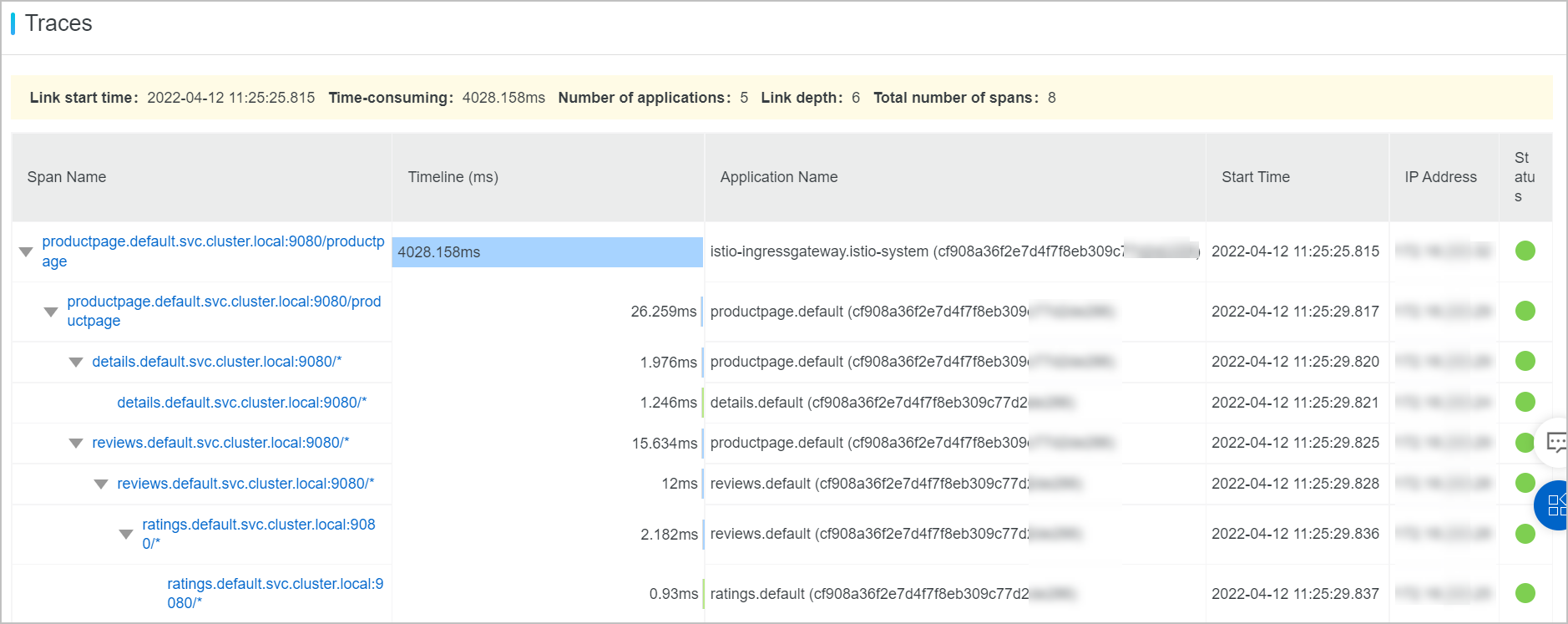

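View the waterfall chart of a trace

The waterfall chart of a trace shows information about the trace, such as the log generation time, status, IP address or server name, service name, and timeline.

On the [Application Details] page of the application, click the [Traces] tab. In the trace list, click the ID of the trace that you want to view.

On the page that appears, view the waterfall chart of the trace.

Note: The [IP Address] column displays either an IP address or a server name, depending on the display setting configured on the [Application Settings] page. For more information, see Manage applications and tags.

Hover over a service name to view information about the service, such as its duration, start time, tags, and log events. For more information, see Application details.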

FAQ

Why can't I view trace information after I enable the collection of tracing data to Managed Service for OpenTelemetry in the ASM console?

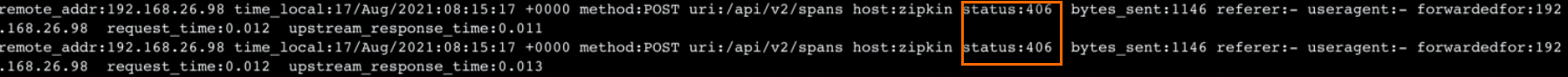

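View the trace push logs.

Obtain the kubeconfig file of the cluster and use kubectl to connect to the cluster. For more information, see Obtain the kubeconfig file of a cluster and use kubectl to connect to the cluster.

Run the following command to view the trace push logs of the pod that belongs to the tracing-on-external-zipkin Deployment in the istio-system namespace.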

```shell
kubectl logs "$(kubectl get pods -n istio-system -l app=tracing -o jsonpath='{.items[0].metadata.name}')" -n istio-system -c nginx
```

The trace push logs contain status code 406.
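To check many log lines at once, the following Python sketch counts HTTP status codes in the saved output of the command above. It assumes, without confirmation from this topic, that the nginx container writes access logs in nginx's default `combined` format. `count_statuses` is a hypothetical helper, and the regular expression must be adjusted if the actual log format differs.

```python
import re
from collections import Counter
from typing import Iterable

# In the nginx "combined" log format, the status code follows the quoted
# request line, for example: "POST /path HTTP/1.1" 406 ...
_STATUS_RE = re.compile(r'"[A-Z]+ [^"]*" (?P<status>\d{3})\b')


def count_statuses(lines: Iterable[str]) -> Counter:
    """Count HTTP status codes in nginx access-log lines; other lines are skipped."""
    counts: Counter = Counter()
    for line in lines:
        match = _STATUS_RE.search(line)
        if match:
            counts[match["status"]] += 1
    return counts
```

For example, redirect the `kubectl logs` output to a file such as `tracing.log` (a hypothetical name), then check `count_statuses(open("tracing.log"))["406"]`.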

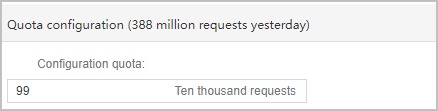

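View the daily quota of service requests and the actual number of service requests on the previous day.

Log on to the Managed Service for OpenTelemetry console.

In the left-side navigation pane, click [Cluster Settings] to view the daily quota of service requests and the actual number of service requests on the previous day.

If the actual number of service requests on the previous day exceeds the daily quota of service requests configured in the Managed Service for OpenTelemetry console, the quota is the cause.

Modify the daily quota of service requests.

If the actual number of service requests in a day exceeds the daily quota, the reported data is discarded and no trace information is displayed. To resolve this issue, increase the daily quota so that it is greater than the actual number of service requests per day.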

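In the left-side navigation pane of the Managed Service for OpenTelemetry console, click [Cluster Settings].

On the [Cluster Settings] tab, in the [Quota Settings] section, increase the value of the [Configure Quota] parameter so that it is greater than the actual number of service requests per day. Then, click [Save].

In the message that appears, click [OK].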

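Why does the traceid change after a request is processed by the ingress gateway or a sidecar?

A possible cause is that the propagation headers of the request initiated by the application are incomplete. In this case, the sidecar proxy determines that the trace information is incomplete and regenerates the propagation headers. If you want to use a custom traceid, include at least the x-b3-traceid and x-b3-spanid headers in requests that use the B3 propagation format, and include at least the traceparent header in requests that use the W3C Trace Context propagation format.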