The FlexVolume plug-in is deprecated. Newly created Container Service for Kubernetes (ACK) clusters no longer support FlexVolume. For existing clusters, we recommend that you upgrade from FlexVolume to Container Storage Interface (CSI). This topic describes how to use CSI to take over statically provisioned File Storage NAS (NAS) volumes that are managed by FlexVolume.

Differences between FlexVolume and CSI

The following table describes the differences between CSI and FlexVolume.

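| Plug-in | Component | kubelet parameter | References |
| --- | --- | --- | --- |
| CSI | | The kubelet parameters required by the CSI plug-in differ from those required by the FlexVolume plug-in. To run the CSI plug-in, set the kubelet parameter enable-controller-attach-detach to true on each node. | |
| FlexVolume | | The kubelet parameters required by the FlexVolume plug-in differ from those required by the CSI plug-in. To use the FlexVolume plug-in, set the kubelet parameter enable-controller-attach-detach to false on each node. | |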

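Scenarios

FlexVolume is installed in the cluster and is used to mount statically provisioned NAS volumes. If the cluster also contains disk volumes that are managed by FlexVolume, see "Use csi-compatible-controller to migrate from FlexVolume to CSI".

Usage notes

When you upgrade from FlexVolume to CSI, persistent volume claims (PVCs) are recreated. As a result, pods are recreated and your business is interrupted. We recommend that you upgrade to CSI, recreate PVCs, modify applications, and perform any other operations that restart pods during off-peak hours.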

Preparations

Manually install CSI

Create two files named csi-plugin.yaml and csi-provisioner.yaml.

Run the following command to deploy csi-plugin and csi-provisioner in the cluster:

    kubectl apply -f csi-plugin.yaml -f csi-provisioner.yaml

Run the following command to check whether CSI runs as expected:

    kubectl get pods -n kube-system | grep csi

Expected output:

    csi-plugin-577mm                   4/4     Running   0          3d20h
    csi-plugin-k9mzt                   4/4     Running   0          41d
    csi-provisioner-6b58f46989-8wwl5   9/9     Running   0          41d
    csi-provisioner-6b58f46989-qzh8l   9/9     Running   0          6d20h

If the preceding output is returned, CSI runs as expected.
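The readiness check above can also be scripted. The following is a minimal sketch, assuming the default `kubectl get pods` column layout (NAME, READY, STATUS, and so on). The function name `csi_pods_ready` is hypothetical and not part of ACK or kubectl. It reads the pod listing from standard input and succeeds only if at least one CSI pod is listed and every CSI pod is Running with all of its containers ready.

```shell
#!/bin/sh
# csi_pods_ready: hypothetical helper, not part of ACK or kubectl.
# Reads `kubectl get pods` output on stdin (columns: NAME READY STATUS ...).
# Exits 0 only if at least one csi-* pod exists and every csi-* pod is
# Running with a READY column of the form n/n.
csi_pods_ready() {
  awk '
    $1 ~ /^csi-/ {
      seen++
      split($2, r, "/")                       # READY column, e.g. 4/4
      if ($3 != "Running" || r[1] != r[2]) bad++
    }
    END { exit ((seen > 0 && bad == 0) ? 0 : 1) }
  '
}

# Usage (requires access to the cluster):
#   kubectl get pods -n kube-system | csi_pods_ready && echo "CSI is ready"
```

A pod that is listed as `3/4` or is in a status other than `Running` makes the function return a non-zero exit code, so it can gate the later migration steps in a script.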

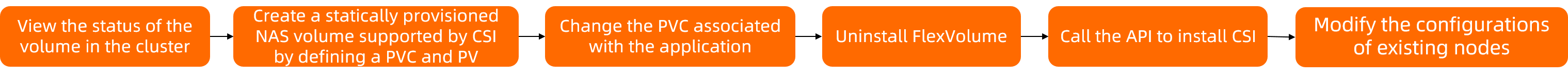

この例では、静的にプロビジョニングされたNASボリュームをStatefulSetによって作成されたポッドにマウントするためにFlexVolumeが使用されます。 この例では、CSIを使用して、FlexVolumeを使用してマウントされたNASボリュームを引き継ぐ方法を示します。 次の図に手順を示します。

Step 1: Check the status of the volumes in the cluster

Run the following command to query the status of the pod:

    kubectl get pod

Expected output:

    NAME           READY   STATUS    RESTARTS   AGE
    nas-static-1   1/1     Running   0          11m

Run the following command to query the PVC that is used by the pod:

    kubectl describe pod nas-static-1 | grep ClaimName

Expected output:

    ClaimName:  nas-pvc

Run the following command to query the status of the PVC:

    kubectl get pvc

Expected output:

    NAME      STATUS   VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    nas-pvc   Bound    nas-pv   512Gi      RWX                           7m23s
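Before you migrate, it can help to list every PV in the cluster that still uses a FlexVolume driver, not only the one in this example. The following is a sketch under stated assumptions: the commented `kubectl` call requires cluster access, and `flexvolume_pvs` is a hypothetical helper that filters the tab-separated lines that the call prints.

```shell
#!/bin/sh
# Run against your cluster to print one line per PV in the form
#   <pv-name><TAB><flexVolume driver, empty if the PV does not use FlexVolume>
#
#   kubectl get pv -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.flexVolume.driver}{"\n"}{end}'

# flexvolume_pvs: hypothetical helper. Reads those lines on stdin and prints
# only the PVs whose driver column is not empty.
flexvolume_pvs() {
  awk -F '\t' '$2 != "" { print $1 " (" $2 ")" }'
}
```

Piping the `kubectl` output into `flexvolume_pvs` prints only the PVs that still need to be taken over by CSI.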

Step 2: Define a PVC and PV to create a statically provisioned NAS volume managed by CSI

Method 1: Use the Flexvolume2CSI CLI to convert the PV and PVC

Use the Flexvolume2CSI CLI to convert the PV and PVC that are managed by FlexVolume into a PV and PVC that are managed by CSI.

Run the following command to create the PVC and PV for the NAS volume. nas-pv-pvc-csi.yaml is the YAML file that defines the CSI-managed PVC and PV after you use the Flexvolume2CSI CLI to convert the original PVC and PV.

    kubectl apply -f nas-pv-pvc-csi.yaml

Run the following command to query the status of the PVCs:

    kubectl get pvc

Expected output:

    NAME          STATUS   VOLUME       CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    nas-pvc       Bound    nas-pv       512Gi      RWX            nas            30m
    nas-pvc-csi   Bound    nas-pv-csi   512Gi      RWX            nas            2s

Method 2: Save the PVC and PV managed by FlexVolume and change the volume plug-in

Save the PV and PVC objects that are managed by FlexVolume.

Run the following commands to save the PVC object that is managed by FlexVolume:

    kubectl get pvc nas-pvc -oyaml > nas-pvc-flexvolume.yaml
    cat nas-pvc-flexvolume.yaml

Expected output:

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: nas-pvc
      namespace: default
    spec:
      accessModes:
      - ReadWriteMany
      resources:
        requests:
          storage: 512Gi
      selector:
        matchLabels:
          alicloud-pvname: nas-pv
      storageClassName: nas

Run the following commands to save the persistent volume (PV) object that is managed by FlexVolume:

    kubectl get pv nas-pv -oyaml > nas-pv-flexvolume.yaml
    cat nas-pv-flexvolume.yaml

Expected output:

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      labels:
        alicloud-pvname: nas-pv
      name: nas-pv
    spec:
      accessModes:
      - ReadWriteMany
      capacity:
        storage: 512Gi
      flexVolume:
        driver: alicloud/nas
        options:
          path: /aliyun
          server: ***.***.nas.aliyuncs.com
          vers: "3"
      persistentVolumeReclaimPolicy: Retain
      storageClassName: nas

Define a PVC and PV to create a statically provisioned NAS volume that is managed by CSI.

Create a file named nas-pv-pvc-csi.yaml and add the following YAML content to it. The server, path, and vers values are carried over from the FlexVolume PV that you saved.

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: nas-pvc-csi
      namespace: default
    spec:
      accessModes:
      - ReadWriteMany
      resources:
        requests:
          storage: 512Gi
      selector:
        matchLabels:
          alicloud-pvname: nas-pv-csi
      storageClassName: nas
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      labels:
        alicloud-pvname: nas-pv-csi
      name: nas-pv-csi
    spec:
      accessModes:
      - ReadWriteMany
      capacity:
        storage: 512Gi
      csi:
        driver: nasplugin.csi.alibabacloud.com
        volumeHandle: nas-pv-csi
        volumeAttributes:
          server: "***.***.nas.aliyuncs.com"
          path: "/aliyun"
      mountOptions:
      - nolock,tcp,noresvport
      - vers=3
      persistentVolumeReclaimPolicy: Retain
      storageClassName: nas

Run the following command to create the PVC and PV for the NAS volume:

    kubectl apply -f nas-pv-pvc-csi.yaml

Run the following command to query the status of the PVCs:

    kubectl get pvc

Expected output:

    NAME          STATUS   VOLUME       CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    nas-pvc       Bound    nas-pv       512Gi      RWX            nas            30m
    nas-pvc-csi   Bound    nas-pv-csi   512Gi      RWX            nas            2s
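Method 2 amounts to copying the `server`, `path`, and `vers` options from the saved FlexVolume PV into the `csi` and `mountOptions` sections of the new PV. The following is a minimal sketch that reads those values from nas-pv-flexvolume.yaml, assuming the simple `key: value` layout shown in the saved manifest. `flex_option` is a hypothetical helper, and a YAML-aware tool would be more robust for manifests with a different layout.

```shell
#!/bin/sh
# flex_option: hypothetical helper, not part of any ACK tooling.
# Prints the value of one key found under the `options:` block of a saved
# FlexVolume PV manifest. Usage: flex_option <key> <file>
flex_option() {
  awk -v key="$1" '
    # Number of leading spaces on the current line (-1 for blank lines).
    { indent = match($0, /[^ ]/) - 1 }
    # Enter the options block and remember its indentation.
    $1 == "options:" && NF == 1 { base = indent; in_opts = 1; next }
    # A line indented no deeper than `options:` ends the block.
    in_opts && indent >= 0 && indent <= base { in_opts = 0 }
    in_opts && $1 == (key ":") {
      val = $2
      gsub(/"/, "", val)   # strip quotes, for example vers: "3"
      print val
      exit
    }
  ' "$2"
}

# Usage:
#   flex_option server nas-pv-flexvolume.yaml
#   flex_option path   nas-pv-flexvolume.yaml
#   flex_option vers   nas-pv-flexvolume.yaml
```

The printed values map to `volumeAttributes.server`, `volumeAttributes.path`, and the `vers=` mount option of the CSI PV. Keys outside the `options:` block are deliberately ignored.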

Step 3: Change the PVC that is associated with the application

Run the following command to edit the configuration of the application:

    kubectl edit sts nas-static

Change the PVC to the one that is managed by CSI:

          volumes:
          - name: pvc-nas
            persistentVolumeClaim:
              claimName: nas-pvc-csi

Run the following command to check whether the pod has been restarted:

    kubectl get pod

Expected output:

    NAME           READY   STATUS    RESTARTS   AGE
    nas-static-1   1/1     Running   0          70s

Run the following command to query the mount information:

    kubectl exec nas-static-1 -- mount | grep nas

Expected output:

    # View the mount information
    ***.***.nas.aliyuncs.com:/aliyun on /var/lib/kubelet/pods/ac02ea3f-125f-4b38-9bcf-9b117f62***/volumes/kubernetes.io~csi/nas-pv-csi/mount type nfs (rw,relatime,vers=3,rsize=1048576,wsize=1048576,namlen=255,hard,nolock,noresvport,proto=tcp,timeo=600,retrans=2,sec=sys,mountaddr=192.168.XX.XX,mountvers=3,mountport=2049,mountproto=tcp,local_lock=all,addr=192.168.XX.XX)

If the preceding output is returned, the pod has been migrated to CSI.
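The sign of a successful migration in the mount output is the `kubernetes.io~csi` path segment. The following is a small sketch that checks for it, assuming the mount line has the form shown in the expected output above. `mounted_via_csi` is a hypothetical helper name.

```shell
#!/bin/sh
# mounted_via_csi: hypothetical helper. Reads `mount` output on stdin and
# succeeds if an NFS mount sits under a kubelet CSI volume directory
# (a path that contains kubernetes.io~csi).
mounted_via_csi() {
  grep 'type nfs' | grep -q 'kubernetes.io~csi'
}

# Usage (requires access to the cluster):
#   kubectl exec nas-static-1 -- mount | mounted_via_csi && echo "mounted through CSI"
```

If the function fails after the StatefulSet has been edited, the pod is probably still using the FlexVolume-managed PVC, or the mount output has a different shape than assumed here.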

Step 4: Uninstall FlexVolume

Log on to the OpenAPI Explorer console and call the UnInstallClusterAddons operation to uninstall the FlexVolume plug-in.

ClusterId: Set the value to the ID of your cluster. You can find the cluster ID on the [Basic Information] tab of the cluster details page.

name: Set the value to Flexvolume.

For more information, see "Uninstall components from a cluster".

Run the following command to delete the alicloud-disk-controller and alicloud-nas-controller components:

    kubectl delete deploy -n kube-system alicloud-disk-controller alicloud-nas-controller

Run the following command to check whether the FlexVolume plug-in has been uninstalled from the cluster:

    kubectl get pods -n kube-system | grep 'flexvolume\|alicloud-disk-controller\|alicloud-nas-controller'

If no output is returned, the FlexVolume plug-in has been uninstalled from the cluster.

Run the following command to delete the StorageClasses that use FlexVolume from the cluster. The provisioner of a StorageClass that uses FlexVolume is alicloud/disk.

    kubectl delete storageclass alicloud-disk-available alicloud-disk-efficiency alicloud-disk-essd alicloud-disk-ssd

Expected output:

    storageclass.storage.k8s.io "alicloud-disk-available" deleted
    storageclass.storage.k8s.io "alicloud-disk-efficiency" deleted
    storageclass.storage.k8s.io "alicloud-disk-essd" deleted
    storageclass.storage.k8s.io "alicloud-disk-ssd" deleted

If the preceding output is returned, the StorageClasses have been deleted from the cluster.
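The delete command above names four StorageClasses explicitly. To confirm that no other StorageClass still uses the alicloud/disk provisioner, the listing can be filtered by provisioner instead of by name. This is a sketch only: the commented `kubectl` call requires cluster access, and `flexvolume_storageclasses` is a hypothetical helper.

```shell
#!/bin/sh
# Run against your cluster to print "<name> <provisioner>" per StorageClass:
#   kubectl get storageclass --no-headers \
#     -o custom-columns=NAME:.metadata.name,PROVISIONER:.provisioner

# flexvolume_storageclasses: hypothetical helper. Reads that listing on stdin
# and prints the names of StorageClasses whose provisioner is alicloud/disk.
flexvolume_storageclasses() {
  awk '$2 == "alicloud/disk" { print $1 }'
}
```

An empty result after the deletion indicates that no FlexVolume-backed StorageClass remains.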

Step 5: Call the API to install CSI

Log on to the OpenAPI Explorer console and call the InstallClusterAddons operation to install the CSI plug-in.

ClusterId: Set the value to the ID of your cluster.

name: Set the value to csi-provisioner.

version: The latest version is specified automatically. For more information about CSI versions, see "csi-provisioner".

For more information about how to install the CSI plug-in, see "Install components in an ACK cluster".

Run the following command to check whether the CSI plug-in runs as expected in the cluster:

    kubectl get pods -n kube-system | grep csi

Expected output:

    csi-plugin-577mm                   4/4     Running   0          3d20h
    csi-plugin-k9mzt                   4/4     Running   0          41d
    csi-provisioner-6b58f46989-8wwl5   9/9     Running   0          41d
    csi-provisioner-6b58f46989-qzh8l   9/9     Running   0          6d20h

If the preceding output is returned, the CSI plug-in runs as expected in the cluster.

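Step 6: Modify the configurations of existing nodes

Create a YAML file based on the following code block. Then, deploy the YAML file to modify the kubelet parameter that the CSI plug-in depends on. The DaemonSet sets the kubelet parameter --enable-controller-attach-detach to true on existing nodes. After this step is complete, you can delete the DaemonSet.

Deploying the YAML file restarts kubelet. We recommend that you evaluate the impact on your applications before you deploy the YAML file.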

    kind: DaemonSet
    apiVersion: apps/v1
    metadata:
      name: kubelet-set
    spec:
      selector:
        matchLabels:
          app: kubelet-set
      template:
        metadata:
          labels:
            app: kubelet-set
        spec:
          tolerations:
            - operator: "Exists"
          hostNetwork: true
          hostPID: true
          containers:
            - name: kubelet-set
              securityContext:
                privileged: true
                capabilities:
                  add: ["SYS_ADMIN"]
                allowPrivilegeEscalation: true
              image: registry.cn-hangzhou.aliyuncs.com/acs/csi-plugin:v1.26.5-56d1e30-aliyun
              imagePullPolicy: "Always"
              env:
              - name: enableADController
                value: "true"
              command: ["sh", "-c"]
              args:
              - echo "Starting kubelet flag set to $enableADController";
                ifFlagTrueNum=`cat /host/etc/systemd/system/kubelet.service.d/10-kubeadm.conf | grep enable-controller-attach-detach=$enableADController | grep -v grep | wc -l`;
                echo "ifFlagTrueNum is $ifFlagTrueNum";
                if [ "$ifFlagTrueNum" = "0" ]; then
                    curValue="true";
                    if [ "$enableADController" = "true" ]; then
                        curValue="false";
                    fi;
                    sed -i "s/enable-controller-attach-detach=$curValue/enable-controller-attach-detach=$enableADController/" /host/etc/systemd/system/kubelet.service.d/10-kubeadm.conf;
                    restartKubelet="true";
                    echo "current value is $curValue, change to expect "$enableADController;
                fi;
                if [ "$restartKubelet" = "true" ]; then
                    /nsenter --mount=/proc/1/ns/mnt systemctl daemon-reload;
                    /nsenter --mount=/proc/1/ns/mnt service kubelet restart;
                    echo "restart kubelet";
                fi;
                while true;
                do
                    sleep 5;
                done;
              volumeMounts:
              - name: etc
                mountPath: /host/etc
          volumes:
            - name: etc
              hostPath:
                path: /etc
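After the DaemonSet has run, the result can be verified on a node by reading the flag from the same kubelet drop-in file that the DaemonSet edits (mounted in the container as /host/etc/..., which corresponds to /etc/... on the node). The following is a minimal sketch. `kubelet_attach_detach` is a hypothetical helper, and the default path is taken from the DaemonSet above.

```shell
#!/bin/sh
# kubelet_attach_detach: hypothetical helper. Prints the value of the
# enable-controller-attach-detach flag found in a kubelet systemd drop-in
# file. Usage: kubelet_attach_detach [file]
kubelet_attach_detach() {
  file="${1:-/etc/systemd/system/kubelet.service.d/10-kubeadm.conf}"
  # Print only the captured flag value from the first matching line.
  sed -n 's/.*enable-controller-attach-detach=\([a-z]*\).*/\1/p' "$file" | head -n 1
}

# Usage on a node:
#   kubelet_attach_detach        # expected to print "true" after Step 6
```

Once the nodes report `true`, the DaemonSet is no longer needed and can be removed, for example with `kubectl delete daemonset kubelet-set` in the namespace where it was deployed.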