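Container Service for Kubernetes (ACK) lets you combine gang scheduling with topology-aware scheduling so that the scheduler loops through a list of topology domains until it finds one that meets the requirements of all pods. You can also associate ACK node pools with Elastic Compute Service (ECS) deployment sets to perform affinity scheduling at a lower level than a zone-wide topology domain. Scheduling pods to ECS instances in the same deployment set reduces the network latency among the pods. This topic describes how to configure topology-aware scheduling.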

Introduction to topology-aware scheduling

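Pods created by machine learning or big data analysis jobs usually need to communicate frequently. By default, the Kubernetes scheduler spreads pods evenly across the nodes in an ACK cluster. As a result, the pods may communicate at high latency, which increases the time required to complete the job. To make such jobs more efficient, you can place the pods in the same zone or on the same rack. This reduces the number of hops between pods, so they communicate at lower latency. Kubernetes can schedule pods based on node affinity and pod affinity. However, node affinity and pod affinity in Kubernetes have the following drawbacks: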

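You cannot configure the scheduler to loop through all topology domains during pod scheduling. With Kubernetes affinity scheduling, the pods of a job follow the first pod of the job: they are scheduled to the topology domain of the node where the first pod landed. If that domain cannot meet the requirements of the remaining pods, some pods may stay in the Pending state. The pods are not moved to another zone automatically, even if another topology domain could meet their requirements.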

Nodes carry only zone-specific labels, so affinity scheduling can only place all pods in the same zone. The scheduler cannot schedule pods at a lower level than the zone, such as a finer-grained topology domain.

Loop through topology domains during pod scheduling

You can add gang scheduling labels to a job so that the resource requests of all pods created by the job are fulfilled at the same time. This allows kube-scheduler to loop through multiple topology domains during pod scheduling.
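The looping behavior described above can be pictured with a small toy model. This is only a sketch under simplifying assumptions, not the ACK scheduler's actual algorithm: every pod requests the same amount of one resource, each topology domain is a list of free capacities per node, and the names `pods_that_fit` and `pick_domain` are hypothetical.

```python
from typing import Dict, List, Optional


def pods_that_fit(node_free: List[int], per_pod: int) -> int:
    """Count how many identical pods fit on the nodes of one domain."""
    return sum(free // per_pod for free in node_free)


def pick_domain(
    domains: Dict[str, List[int]], pod_count: int, per_pod: int
) -> Optional[str]:
    """Return the first domain that can hold ALL pods of the job.

    Gang semantics: a domain that fits only part of the job is skipped,
    and the loop moves on to the next domain instead of leaving pods pending.
    """
    for name, node_free in domains.items():
        if pods_that_fit(node_free, per_pod) >= pod_count:
            return name
    return None  # no domain can host the whole job


# Hypothetical cluster: free GPUs per node, grouped by zone.
domains = {"zone-a": [1, 1], "zone-b": [2, 2]}
print(pick_domain(domains, pod_count=3, per_pod=1))  # zone-b
```

In this model, zone-a is rejected because it can hold only two of the three pods, which mirrors why all-or-nothing placement is needed before the scheduler can usefully try the next domain.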

Add the following gang scheduling labels to the pods. For more information about gang scheduling, see "Work with gang scheduling".

    ...
    labels:
      pod-group.scheduling.sigs.k8s.io/name: tf-smoke-gpu # Specify the name of the PodGroup, such as tf-smoke-gpu.
      pod-group.scheduling.sigs.k8s.io/min-available: "3" # Set the value to the number of pods created by the job.
    ...

Add the following topology-aware scheduling constraint to the annotations section of the pods.

    annotations:
      alibabacloud.com/topology-aware-constraint: "{\"name\":\"test\",\"required\":{\"topologies\":[{\"key\":\"kubernetes.io/hostname\"}],\"nodeSelectors\":[{\"matchLabels\":{\"test\":\"abc\"}}]}}"

The value of alibabacloud.com/topology-aware-constraint must be a valid JSON string in the following format:

    {
      "name": xxx, # Specify a custom name.
      "required": {
        "topologies": [
          {
            "key": xxx # The key of the topology domain for affinity scheduling.
          }
        ],
        "nodeSelectors": [
          {
            # You can refer to the format of the LabelSelector in Kubernetes node affinity.
            "matchLabels": {},
            "matchExpressions": {}
          }
        ]
      }
    }

After the configuration is complete, the scheduler schedules all pods that have the pod-group.scheduling.sigs.k8s.io/name: tf-smoke-gpu label to nodes that have the test=abc label. The following output shows the scheduling result.

    kubectl get pod -ojson | jq '.items[] | {"name":.metadata.name,"ann":.metadata.annotations["alibabacloud.com/topology-aware-constraint"], "node": .spec.nodeName}'
    {
      "name": "nginx-deployment-basic-69f47fc6db-6****",
      "ann": "{\"name\": \"test\", \"required\": {\"topologies\":[{\"key\": \"kubernetes.io/hostname\"}], \"nodeSelectors\": [{\"matchLabels\": {\"test\": \"a\"}}]}} ",
      "node": "cn-shenzhen.10.0.2.4"
    }
    {
      "name": "nginx-deployment-basic-69f47fc6db-h****",
      "ann": "{\"name\": \"test\", \"required\": {\"topologies\":[{\"key\": \"kubernetes.io/hostname\"}], \"nodeSelectors\": [{\"matchLabels\": {\"test\": \"a\"}}]}} ",
      "node": "cn-shenzhen.10.0.2.4"
    }
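Because the annotation value must be a valid JSON string, it can help to check it locally before you apply a manifest. The following is a minimal sketch, assuming the fields shown in the format above (`name`, `required.topologies[].key`) are the ones that matter. The function name `topology_keys` and the checks themselves are illustrative and are not ACK's own validation logic.

```python
import json
from typing import List


def topology_keys(annotation_value: str) -> List[str]:
    """Parse the annotation value and return the topology keys it names.

    Raises ValueError if the value is not valid JSON or does not follow
    the structure assumed in this sketch.
    """
    try:
        constraint = json.loads(annotation_value)
    except json.JSONDecodeError as exc:
        raise ValueError(f"annotation is not valid JSON: {exc}") from exc

    if not isinstance(constraint, dict) or "name" not in constraint:
        raise ValueError('missing "name"')
    required = constraint.get("required")
    if not isinstance(required, dict):
        raise ValueError('missing "required" object')
    topologies = required.get("topologies")
    if not isinstance(topologies, list) or not topologies:
        raise ValueError('"required.topologies" must be a non-empty list')

    keys = []
    for entry in topologies:
        if not isinstance(entry, dict) or "key" not in entry:
            raise ValueError('each topology entry needs a "key"')
        keys.append(entry["key"])
    return keys


value = (
    '{"name":"test","required":{"topologies":[{"key":"kubernetes.io/hostname"}],'
    '"nodeSelectors":[{"matchLabels":{"test":"abc"}}]}}'
)
print(topology_keys(value))  # ['kubernetes.io/hostname']
```

A check like this catches the most common mistake, which is a quoting or escaping error that leaves the annotation as something other than JSON.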

Schedule pods to the same deployment set to reduce network latency

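In some scenarios, you may need to perform affinity scheduling at a lower level than a zone-wide topology domain. In ECS, you can create a deployment set to reduce the network latency among the ECS instances in the deployment set. For more information about how to use deployment sets in ACK, see "Best practices for associating deployment sets with node pools".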

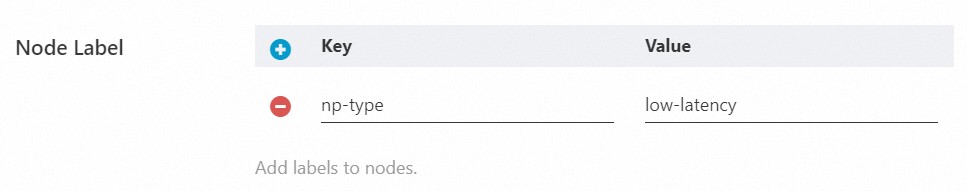

As shown in the figure, when you create a node pool that is associated with a deployment set, you must add a custom node label to distinguish the node pool from other node pools.

After the configuration is complete, add the following labels and annotation to schedule the job to the deployment set.

Add the following gang scheduling labels to the pods. For more information about gang scheduling, see "Work with gang scheduling".

    labels:
      pod-group.scheduling.sigs.k8s.io/name: xxx # Specify the name of the PodGroup.
      pod-group.scheduling.sigs.k8s.io/min-available: "x" # Set the value to the number of pods created by the job.

Add the following topology-aware scheduling constraint to the annotations section of the pods.

Important: In this example, replace the matchLabels value with the custom node label of the node pool that is associated with the deployment set, and replace name with an actual value.

    annotations:
      alibabacloud.com/topology-aware-constraint: "{\"name\":\"test\",\"required\":{\"topologies\":[{\"key\":\"alibabacloud.com/nodepool-id\"}],\"nodeSelectors\":[{\"matchLabels\":{\"np-type\":\"low-latency\"}}]}}"
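If you generate pod templates programmatically, serializing the constraint with a JSON library avoids hand-escaping the quotation marks. The sketch below assembles the labels and the annotation from this section into a metadata dictionary. The helper name `build_pod_metadata` and its parameters are hypothetical, and the example values (`tf-smoke-gpu`, `np-type: low-latency`) are taken from the snippets in this topic.

```python
import json
from typing import Dict

GROUP_PREFIX = "pod-group.scheduling.sigs.k8s.io"
CONSTRAINT_KEY = "alibabacloud.com/topology-aware-constraint"


def build_pod_metadata(
    pod_group: str,
    min_available: int,
    constraint_name: str,
    topology_key: str,
    node_labels: Dict[str, str],
) -> dict:
    """Build the labels and annotations for a gang-scheduled pod."""
    constraint = {
        "name": constraint_name,
        "required": {
            "topologies": [{"key": topology_key}],
            "nodeSelectors": [{"matchLabels": node_labels}],
        },
    }
    return {
        "labels": {
            f"{GROUP_PREFIX}/name": pod_group,
            # Label values must be strings, so the count is converted.
            f"{GROUP_PREFIX}/min-available": str(min_available),
        },
        "annotations": {
            # json.dumps guarantees the annotation value is valid JSON.
            CONSTRAINT_KEY: json.dumps(constraint, separators=(",", ":")),
        },
    }


metadata = build_pod_metadata(
    pod_group="tf-smoke-gpu",
    min_available=3,
    constraint_name="test",
    topology_key="alibabacloud.com/nodepool-id",
    node_labels={"np-type": "low-latency"},
)
print(json.dumps(metadata, indent=2))
```

The resulting dictionary can be merged into the `metadata` field of a pod template before the manifest is serialized to YAML or JSON.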