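Object Storage Service (OSS) volumes are Filesystem in Userspace (FUSE) file systems mounted by using ossfs. OSS volumes are best suited for read operations. OSS is a shared storage service that can be accessed in ReadOnlyMany and ReadWriteMany modes, and ossfs works well for concurrent reads. In concurrent read scenarios, we recommend that you set the access modes of the persistent volume claim (PVC) and persistent volume (PV) to ReadOnlyMany. This topic describes how to use OSS SDKs and ossutil to configure read/write splitting in read-heavy scenarios.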

Prerequisites

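Note

If the OSS bucket and the Elastic Compute Service (ECS) instance that hosts the pod to which the statically provisioned OSS volume is mounted are deployed in the same region, use the internal endpoint to access the OSS bucket from the ECS instance.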
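As a minimal sketch of the endpoint choice described above, the helper below builds an OSS endpoint host name from a region ID. It assumes the common naming patterns `oss-<region-id>.aliyuncs.com` (public) and `oss-<region-id>-internal.aliyuncs.com` (internal). `oss_endpoint` is a hypothetical helper; confirm the endpoint for your region in the OSS documentation before you use it.

```python
def oss_endpoint(region_id: str, same_region: bool) -> str:
    """Return an OSS endpoint host name for a region ID such as 'cn-beijing'.

    Assumed naming patterns (verify for your region):
      public:   oss-<region-id>.aliyuncs.com
      internal: oss-<region-id>-internal.aliyuncs.com
    Use the internal endpoint only when the bucket and the ECS instance
    that hosts the pod are in the same region.
    """
    suffix = "-internal" if same_region else ""
    return f"oss-{region_id}{suffix}.aliyuncs.com"


print(oss_endpoint("cn-beijing", same_region=True))
```

The returned value is the kind of string you would place in the `url` field of the volume configuration or pass as the endpoint to the OSS SDK.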

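Scenarios

OSS is commonly used in read-only and read/write scenarios. In read-heavy scenarios, we recommend that you configure read/write splitting for OSS: configure cache parameters to accelerate read operations, and use OSS SDKs to write data.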

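Read-only scenarios

In big data inference, analysis, and query scenarios, we recommend that you set the access mode of the OSS volume to ReadOnlyMany to prevent data from being accidentally deleted or modified. For more information, see Mount a statically provisioned OSS volume.

You can also configure cache parameters to accelerate read operations.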

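Parameter | Description |

kernel_cache | Uses the kernel cache to accelerate read operations. This feature suits scenarios that do not require real-time access to the latest data. When ossfs reads a file multiple times and the query hits the cache, idle memory in the kernel cache is used to cache the file, which speeds up data retrieval. |

parallel_count | The maximum number of parts that can be downloaded or uploaded concurrently during a multipart download or upload. Default: 20. |

max_multireq | The maximum number of requests that can retrieve file metadata concurrently. The value must be greater than or equal to the value of parallel_count. Default: 20. |

max_stat_cache_size | The maximum number of files whose metadata can be stored in the metadata cache. Default: 1000. To disable the metadata cache, set this parameter to 0. If you do not need real-time access to the latest data and the current directory contains a large number of files, you can increase this value to accelerate LIST operations. |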
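The cache parameters in the table are passed to ossfs as `-o name=value` options. The sketch below assembles such an option string and enforces the documented rule that max_multireq must be greater than or equal to parallel_count. `build_ossfs_opts` and `check_concurrency` are hypothetical helpers; the defaults mirror the table.

```python
def check_concurrency(parallel_count: int, max_multireq: int) -> bool:
    """Documented constraint: max_multireq >= parallel_count."""
    return max_multireq >= parallel_count


def build_ossfs_opts(kernel_cache: bool = True,
                     parallel_count: int = 20,
                     max_multireq: int = 20,
                     max_stat_cache_size: int = 1000) -> str:
    """Return an ossfs option string built from the cache parameters above."""
    if not check_concurrency(parallel_count, max_multireq):
        raise ValueError("max_multireq must be >= parallel_count")
    opts = []
    if kernel_cache:
        opts.append("-o kernel_cache")  # flag option, takes no value
    opts.append(f"-o parallel_count={parallel_count}")
    opts.append(f"-o max_multireq={max_multireq}")
    # 0 disables the metadata cache; larger values speed up LIST operations.
    opts.append(f"-o max_stat_cache_size={max_stat_cache_size}")
    return " ".join(opts)


print(build_ossfs_opts(max_stat_cache_size=10000))
```

Raising an error early keeps an invalid combination out of the volume configuration instead of surfacing it at mount time.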

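By default, ossfs applies 640 permissions to files and directories that are uploaded by using the OSS console, OSS SDKs, or ossutil. If you set -o gid=xxx -o uid=xxx or -o umask=022, ossfs is granted read permissions on the mounted directories and subdirectories that contain objects uploaded by using the OSS console, OSS SDKs, or ossutil. For more information, see How do I manage the permissions related to OSS volume mounting? For more information about ossfs parameters, see ossfs/README-CN.md.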
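The effect of `-o umask=022` can be illustrated with standard POSIX umask arithmetic. This sketch assumes the umask is applied to a 0777 base mode; the exact way ossfs applies it is described in its README. It shows why a process that is neither the owner nor in the group cannot read a 640 file, while a 755 result is readable. The alternative, `-o uid`/`-o gid`, instead makes the process the owner or group member.

```python
import stat


def apply_umask(umask: int, base_mode: int = 0o777) -> int:
    """Clear the permission bits that are set in umask (POSIX umask semantics)."""
    return base_mode & ~umask & 0o777


def others_can_read(mode: int) -> bool:
    """True if the 'other' read bit is set, which a non-owner, non-group process needs."""
    return bool(mode & stat.S_IROTH)


default_mode = 0o640              # default described in this topic
masked_mode = apply_umask(0o022)  # assumed result of -o umask=022 on a 0777 base

print(stat.filemode(stat.S_IFREG | default_mode))  # -rw-r-----
print(stat.filemode(stat.S_IFDIR | masked_mode))   # drwxr-xr-x
print(others_can_read(default_mode), others_can_read(masked_mode))  # False True
```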

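Read/write scenarios

In read/write scenarios, you must set the access mode of the OSS volume to ReadWriteMany. Take note of the following items when you use ossfs:

ossfs cannot guarantee the consistency of data written by concurrent write operations.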

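After the OSS volume is mounted to a pod, if you log on to the pod or its host and delete or modify files in the mount path, the source files in the OSS bucket are also deleted or modified. To prevent important data from being accidentally deleted, you can enable versioning for the OSS bucket. For more information, see Versioning.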
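Versioning can also be enabled from code. The sketch below assumes the Python OSS SDK (`oss2`) and its `BucketVersioningConfig` model with a `status` of `"Enabled"`; `enable_bucket_versioning` is a hypothetical helper, and the `config_cls` parameter exists only so the function can be exercised without network access. Check the oss2 reference for your SDK version before relying on it.

```python
def enable_bucket_versioning(bucket, config_cls=None):
    """Enable versioning on an oss2.Bucket (sketch; requires `pip install oss2`)."""
    if config_cls is None:
        # Imported lazily so the module loads even where oss2 is not installed.
        from oss2.models import BucketVersioningConfig as config_cls
    config = config_cls()
    config.status = "Enabled"  # OSS versioning states: Enabled or Suspended
    return bucket.put_bucket_versioning(config)
```

Typical usage, assuming credentials in environment variables: `enable_bucket_versioning(oss2.Bucket(oss2.ProviderAuth(EnvironmentVariableCredentialsProvider()), endpoint, bucket_name))`.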

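In some read-heavy scenarios, such as big data model training, read requests and write requests are processed separately. In these scenarios, we recommend that you split OSS reads and writes: set the access mode of the OSS volume to ReadOnlyMany, configure cache parameters to accelerate read operations, and use OSS SDKs to write data. For more information, see Example.

Example

The following example uses a handwritten image recognition training application to show how to configure OSS read/write splitting. In this example, a simple deep learning model is trained. The training set is read from the /data-dir directory of a read-only OSS volume, and checkpoints are written to the /log-dir directory by using the OSS SDK.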

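Use ossfs to read and write data

Deploy the handwritten image recognition training application based on the following template. The application is written in Python, and a statically provisioned OSS volume is mounted to it. For more information about how to configure OSS volumes, see Mount a statically provisioned OSS volume.

In the following example, the application mounts the /tf-train subdirectory of the OSS bucket to the /mnt directory of the pod. The MNIST handwritten image training set is stored in the /tf-train/train/data directory, as shown in the following figure.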

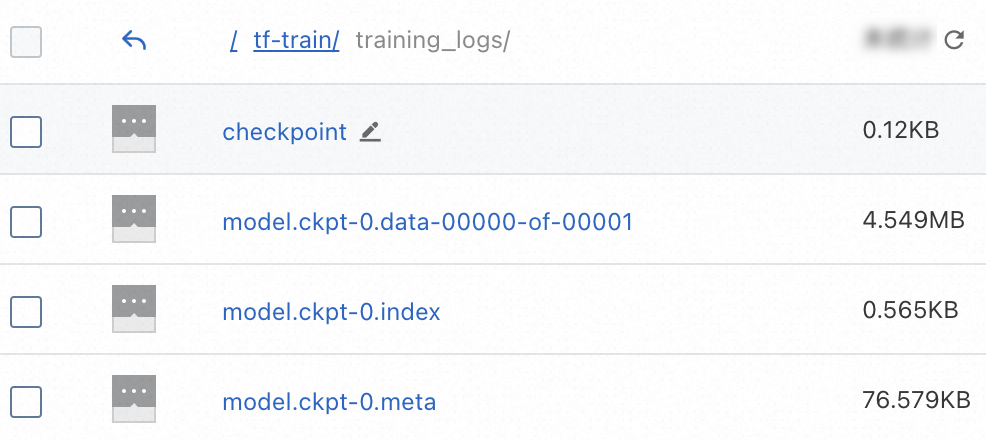

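Before training starts, the training_logs directory is empty. During training, checkpoints are written to the /mnt/training_logs directory of the pod and uploaded by ossfs to the /tf-train/training_logs directory of the OSS bucket.

Verify that data can be read and written as expected.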

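Run the following command to query the status of the pod:

    kubectl get pod tf-mnist

Wait a few minutes until the status of the pod changes from Running to Completed. Expected output:

    NAME       READY   STATUS      RESTARTS   AGE
    tf-mnist   1/1     Completed   0          2m

Run the following command to print the pod logs and check the data loading time. The loading time includes the time required to download files from OSS and load them into TensorFlow.

    kubectl logs tf-mnist | grep dataload

Expected output:

    dataload cost time: 1.54191803932

The actual loading time varies based on the instance performance and network conditions.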
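If you want to script these checks instead of reading the output by eye, the sketch below extracts the STATUS column from `kubectl get pod` output and the number from the `dataload cost time:` log line. `pod_status` and `dataload_seconds` are hypothetical helpers, and the parser assumes the column layout shown in the expected output above.

```python
import re

DATALOAD_RE = re.compile(r"dataload cost time:\s*([0-9.]+)")


def pod_status(kubectl_get_output: str, pod_name: str) -> str:
    """Return the STATUS column for pod_name from `kubectl get pod` output."""
    lines = kubectl_get_output.strip().splitlines()
    status_idx = lines[0].split().index("STATUS")  # locate the column by header
    for line in lines[1:]:
        fields = line.split()
        if fields and fields[0] == pod_name:
            return fields[status_idx]
    raise LookupError(f"pod {pod_name!r} not found")


def dataload_seconds(log_text: str) -> float:
    """Return the first 'dataload cost time' value found in the pod logs."""
    match = DATALOAD_RE.search(log_text)
    if match is None:
        raise ValueError("no 'dataload cost time' line found")
    return float(match.group(1))
```

For example, pass `subprocess.run(["kubectl", "get", "pod", "tf-mnist"], capture_output=True, text=True).stdout` to `pod_status` and poll until it returns `Completed`.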

Log on to the OSS console. You can see that files have been uploaded to the /tf-train/training_logs directory of the OSS bucket. This indicates that data can be written to and read from OSS as expected.
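As a scripted alternative to checking the console, the sketch below lists the object keys under the checkpoint prefix with `oss2.ObjectIterator`. `list_uploaded_keys` is a hypothetical helper, the prefix is assumed from this example, and `iterator_factory` is a parameter only so the function can be tested without network access.

```python
def list_uploaded_keys(bucket, prefix="tf-train/training_logs/", iterator_factory=None):
    """Return the object keys under prefix in an oss2.Bucket (sketch)."""
    if iterator_factory is None:
        import oss2  # requires: pip install oss2
        iterator_factory = oss2.ObjectIterator  # pages through ListObjects results
    return [obj.key for obj in iterator_factory(bucket, prefix=prefix)]
```

A non-empty result after training indicates that checkpoints reached the bucket.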

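Use read/write splitting to improve the read speed of ossfs

The following example uses the handwritten image recognition training application and the OSS SDK to show how to reconfigure an application to support read/write splitting.

Install the OSS SDK in the Container Service for Kubernetes (ACK) environment. Add the following content when you build the image. For more information, see Installation.

    RUN pip install oss2

Modify the source code by referring to the official Python SDK demo.

The following code block shows the source code of the base image of the handwritten image recognition training application described above.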

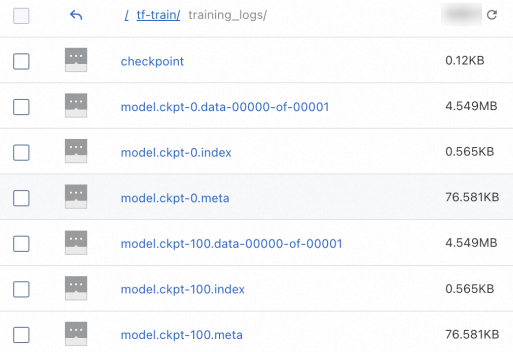

    def train():
        ...
        saver = tf.train.Saver(max_to_keep=0)
        for i in range(FLAGS.max_steps):
            if i % 10 == 0:  # Record summaries and test-set accuracy
                summary, acc = sess.run([merged, accuracy], feed_dict=feed_dict(False))
                print('Accuracy at step %s: %s' % (i, acc))
                if i % 100 == 0:
                    # Save a checkpoint every 100 iterations
                    print('Save checkpoint at step %s: %s' % (i, acc))
                    saver.save(sess, FLAGS.log_dir + '/model.ckpt', global_step=i)

The code block shows that a checkpoint is written to the log_dir directory (the /mnt/training_logs directory of the pod) every 100 iterations. Because the max_to_keep parameter of Saver is set to 0, all checkpoints are retained. After 1,000 iterations, 10 sets of checkpoints are stored in OSS.

Modify the code based on the following requirements to upload checkpoints by using the OSS SDK.

Configure credentials so that the AccessKey pair and bucket information are read from environment variables. For more information, see Configure access credentials.
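Because the credentials and bucket information come from environment variables, a missing variable only shows up when the first upload fails. A minimal sketch of a fail-fast check: it assumes the variable names used in this topic (`OSS_ACCESS_KEY_ID` and `OSS_ACCESS_KEY_SECRET` for the credentials provider, `URL` and `BUCKET` for the modified training code). `missing_vars` is a hypothetical helper.

```python
import os

# AccessKey pair read by EnvironmentVariableCredentialsProvider.
CREDENTIAL_VARS = ("OSS_ACCESS_KEY_ID", "OSS_ACCESS_KEY_SECRET")
# Bucket information read by the modified training code. The code falls back to
# placeholder defaults, so checking these avoids silently using '<default-url>'.
BUCKET_VARS = ("URL", "BUCKET")


def missing_vars(environ=None) -> list:
    """Return the names of expected environment variables that are unset or empty."""
    env = os.environ if environ is None else environ
    return [name for name in CREDENTIAL_VARS + BUCKET_VARS if not env.get(name)]
```

Call `missing_vars()` at startup and stop with a clear error if the list is not empty.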

To reduce the memory usage of the container, set max_to_keep to 1. This way, only the latest set of checkpoints is retained. The put_object_from_file function is used to upload the checkpoints to the specified directory of the OSS bucket.
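To see what the max_to_keep change means for the checkpoint count, the sketch below models tf.train.Saver retention in plain Python for the 1,000-step run described in this topic, without calling TensorFlow. It assumes saves happen when `i % 100 == 0` and that `max_to_keep=0` keeps every checkpoint. In TensorFlow 1.x, each checkpoint "set" is typically several files (`.index`, `.data-*`, `.meta`). The helper names are hypothetical.

```python
def checkpoint_steps(max_steps: int, every: int = 100) -> list:
    """Steps at which the training loop calls saver.save() (i % every == 0)."""
    return [i for i in range(max_steps) if i % every == 0]


def retained_steps(steps: list, max_to_keep: int) -> list:
    """Checkpoint steps left on disk, mimicking tf.train.Saver retention:
    max_to_keep=0 keeps every checkpoint, N > 0 keeps the newest N."""
    if not max_to_keep:
        return list(steps)
    return list(steps[-max_to_keep:])


steps = checkpoint_steps(1000)
print(len(retained_steps(steps, 0)))  # 10 (steps 0, 100, ..., 900)
print(retained_steps(steps, 1))       # [900]
```

Step 0 counts as a save because `0 % 100 == 0`, which is why 1,000 iterations produce 10 sets rather than 9.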

Note: When you use the OSS SDK in read/write splitting scenarios, you can use asynchronous reads and writes to accelerate training.
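One way to make checkpoint uploads asynchronous is a thread pool from the Python standard library, so the training loop does not wait for the network. This is a swapped-in technique, not an OSS SDK feature. `BackgroundUploader` is a hypothetical class, and the upload function is assumed to take `(key, local_path)`, which matches the positional arguments of `bucket.put_object_from_file`.

```python
from concurrent.futures import ThreadPoolExecutor


class BackgroundUploader:
    """Run upload calls on worker threads so the training loop is not blocked."""

    def __init__(self, upload_fn, max_workers: int = 2):
        self._upload_fn = upload_fn
        self._pool = ThreadPoolExecutor(max_workers=max_workers)
        self._futures = []

    def submit(self, key: str, local_path: str) -> None:
        """Queue one upload and return immediately."""
        self._futures.append(self._pool.submit(self._upload_fn, key, local_path))

    def wait(self) -> None:
        """Block until all queued uploads finish; re-raise the first error."""
        for future in self._futures:
            future.result()
        self._futures.clear()

    def close(self) -> None:
        self.wait()
        self._pool.shutdown()


# Demo with a stand-in upload function (no OSS access needed).
uploaded = []
demo = BackgroundUploader(lambda key, path: uploaded.append(key))
demo.submit("tf-train/training_logs/checkpoint", "/tmp/checkpoint")
demo.close()
```

In the training loop you would construct it with `BackgroundUploader(bucket.put_object_from_file)`. With `max_to_keep=1`, Saver deletes the previous checkpoint files on the next save, so call `wait()` before each `saver.save()` to avoid uploading files that are being removed.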

    import os

    import oss2
    from oss2.credentials import EnvironmentVariableCredentialsProvider

    # Read the AccessKey pair from the OSS_ACCESS_KEY_ID and OSS_ACCESS_KEY_SECRET environment variables.
    auth = oss2.ProviderAuth(EnvironmentVariableCredentialsProvider())
    url = os.getenv('URL', '<default-url>')
    bucketname = os.getenv('BUCKET', '<default-bucket-name>')
    bucket = oss2.Bucket(auth, url, bucketname)
    ...
    def train():
        ...
        saver = tf.train.Saver(max_to_keep=1)  # keep only the latest checkpoint set
        for i in range(FLAGS.max_steps):
            if i % 10 == 0:  # Record summaries and test-set accuracy
                summary, acc = sess.run([merged, accuracy], feed_dict=feed_dict(False))
                print('Accuracy at step %s: %s' % (i, acc))
                if i % 100 == 0:
                    print('Save checkpoint at step %s: %s' % (i, acc))
                    saver.save(sess, FLAGS.log_dir + '/model.ckpt', global_step=i)
                    # FLAGS.log_dir = os.path.join(os.getenv('TEST_TMPDIR', '/mnt'), 'training_logs')
                    # Upload every file in log_dir to tf-train/training_logs in the bucket.
                    for path, _, file_list in os.walk(FLAGS.log_dir):
                        for file_name in file_list:
                            bucket.put_object_from_file(os.path.join('tf-train/training_logs', file_name),
                                                        os.path.join(path, file_name))

The modified container image is registry.cn-beijing.aliyuncs.com/tool-sys/tf-train-demo:ro.

Modify the application template so that the application accesses OSS in read-only mode.

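Set the accessModes parameter of the PV and PVC to ReadOnlyMany, and set the mount target in the OSS bucket to /tf-train/train/data.

Add -o kernel_cache -o max_stat_cache_size=10000 -o umask=022 to otherOpts. This allows ossfs to read data from the memory cache and increases the number of metadata cache entries. 10,000 metadata cache entries occupy about 40 MB of memory; you can adjust the number based on the instance specifications and the amount of data. In addition, umask grants read permissions to container processes that run as non-root users. For more information, see Scenarios.

Add the OSS_ACCESS_KEY_ID and OSS_ACCESS_KEY_SECRET environment variables to the pod template. You can obtain their values from oss-secret. Make sure that the information is the same as that used by the OSS volume.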
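The template changes above can be sketched as Kubernetes objects built in Python and printed as JSON, which `kubectl apply -f` accepts. This is a minimal sketch under stated assumptions: the CSI driver name `ossplugin.csi.alibabacloud.com`, the `volumeAttributes` keys, the secret keys `akId` and `akSecret` in `oss-secret`, and all object names are assumptions based on common ACK examples, not values from this topic's template. Compare them with Mount a statically provisioned OSS volume before use. The PVC that binds this PV must also use ReadOnlyMany.

```python
import json


def oss_pv_manifest(name, bucket, url, path, other_opts,
                    secret_name="oss-secret", namespace="default", storage="5Gi"):
    """Build a read-only static OSS PV (field names assumed from ACK examples)."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolume",
        "metadata": {"name": name, "labels": {"alicloud-pvname": name}},
        "spec": {
            "capacity": {"storage": storage},
            "accessModes": ["ReadOnlyMany"],
            "persistentVolumeReclaimPolicy": "Retain",
            "csi": {
                "driver": "ossplugin.csi.alibabacloud.com",
                "volumeHandle": name,
                "nodePublishSecretRef": {"name": secret_name, "namespace": namespace},
                "volumeAttributes": {
                    "bucket": bucket,
                    "url": url,
                    "path": path,              # mount target inside the bucket
                    "otherOpts": other_opts,   # ossfs cache and permission options
                },
            },
        },
    }


def credential_env(secret_name="oss-secret", id_key="akId", secret_key="akSecret"):
    """Container env entries that copy the AccessKey pair from the secret."""
    return [
        {"name": "OSS_ACCESS_KEY_ID",
         "valueFrom": {"secretKeyRef": {"name": secret_name, "key": id_key}}},
        {"name": "OSS_ACCESS_KEY_SECRET",
         "valueFrom": {"secretKeyRef": {"name": secret_name, "key": secret_key}}},
    ]


pv = oss_pv_manifest(
    name="tf-train-pv",                              # hypothetical name
    bucket="<your-bucket>",
    url="oss-cn-beijing-internal.aliyuncs.com",      # hypothetical region
    path="/tf-train/train/data",
    other_opts="-o kernel_cache -o max_stat_cache_size=10000 -o umask=022",
)
print(json.dumps(pv, indent=2))
```

The `credential_env()` list goes under the container's `env` field in the pod template, so the SDK reads the same AccessKey pair that the volume uses.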

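Verify that data can be read and written as expected.

Run the following command to query the status of the pod:

    kubectl get pod tf-mnist

Wait a few minutes until the status of the pod changes from Running to Completed. Expected output:

    NAME       READY   STATUS      RESTARTS   AGE
    tf-mnist   1/1     Completed   0          2m

Run the following command to print the pod logs and check the data loading time. The loading time includes the time required to download files from OSS and load them into TensorFlow.

    kubectl logs tf-mnist | grep dataload

Expected output:

    dataload cost time: 0.843528985977

The output shows that the cache accelerates read operations in read-only mode. This method is well suited to large-scale training and scenarios in which data is loaded continuously.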
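To quantify the comparison, the two loading times reported in this topic can be divided. This is simple arithmetic on the two sample outputs, not a benchmark; a single run per configuration says nothing about variance, so repeat the measurement on your own instances before drawing conclusions.

```python
def speedup(before_s: float, after_s: float) -> float:
    """How many times faster the second measurement is than the first."""
    return before_s / after_s


ratio = speedup(1.54191803932, 0.843528985977)  # ossfs read/write vs. read-only with cache
print(f"{ratio:.2f}x faster, {1 - 1 / ratio:.0%} less load time")  # 1.83x faster, 45% less load time
```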

Log on to the OSS console. You can see that files have been uploaded to the /tf-train/training_logs directory of the OSS bucket. This indicates that data can be written to and read from OSS as expected.

OSS SDK demos provided by Alibaba Cloud

The following table lists the OSS SDK demos provided by Alibaba Cloud. OSS SDKs are also available for PHP, Node.js, Browser.js, .NET, Android, iOS, and Ruby. For more information, see SDK reference.

Programming language | Related documentation |

Java | |

Python | |

Go | |

C++ | |

C | |

Other tools for configuring OSS read/write splitting

Tool | Related documentation |

OpenAPI | |

ossutil | |

ossbrowser | |