After Log Service collects logs, you can ship the logs to a MaxCompute table for data storage and analysis. This topic describes how to create a data shipping job of the new version to ship data to MaxCompute.

Prerequisites

A project and a Logstore are created. For more information, see Create a project and a Logstore.

Logs are collected. For more information, see Data collection overview.

A MaxCompute partitioned table is created in the region where the Log Service project resides. For more information, see Create tables.

Usage notes

You can use the new version of the data shipping feature to ship data to MaxCompute only in the following regions: China (Beijing), China (Zhangjiakou), China (Hangzhou), China (Shanghai), China East 2 Finance, China (Shenzhen), China South 1 Finance, China (Chengdu), China (Hong Kong), Germany (Frankfurt), Malaysia (Kuala Lumpur), US (Virginia), US (Silicon Valley), Japan (Tokyo), Singapore, Indonesia (Jakarta), and UK (London). If you want to use the feature in other regions, submit a ticket.

If a field value of the char type or varchar type exceeds a specified length, the value is truncated after the value is shipped to MaxCompute, and the excess part is discarded.

For example, if the maximum length is set to 3 and a field value is 012345, the value is truncated to 012 after the value is shipped to MaxCompute.

If a field value of the string type, char type, or varchar type is an empty string, the value is converted to Null after the value is shipped to MaxCompute.

A field value of the datetime type must be in the YYYY-MM-DD HH:mm:ss format. Multiple spaces can exist between DD and HH. If a field value of the datetime type is in an invalid format, the value can be shipped to MaxCompute but the value is converted to Null.

If a field value of the date type is in an invalid format, the value can be shipped to MaxCompute but the value is converted to Null.

If the number of digits after the decimal point in a field value of the decimal type exceeds a specified limit, the value is rounded and truncated. If the number of digits before the decimal point exceeds a specified limit, the system discards the entire log as dirty data and increases the number of failed logs.

By default, dirty data is discarded during data shipping.

If a value that does not exist in a log is shipped to MaxCompute, the value is converted to a default value or Null.

If a default value is specified when you create a MaxCompute table, a value that does not exist in a log is converted to the default value after the value is shipped to MaxCompute.

If no default value is specified when you create a MaxCompute table and the value Null is allowed, a value that does not exist in a log is converted to Null after the value is shipped to MaxCompute.

You can run a maximum of 64 data shipping jobs to ship data to MaxCompute at the same time. A maximum of 10 MB of data can be written to each MaxCompute partition per second.
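The conversion rules in these usage notes can be summarized in a small simulation. The following Python sketch is illustrative only: it is not Log Service or MaxCompute code, and the helper names (`convert_char`, `is_valid_datetime_format`, `resolve_missing`) are hypothetical. The datetime check validates only the shape of the string, not whether the date itself exists.

```python
import re
from typing import Optional

# Hypothetical helpers that mimic the documented conversion rules.
# They illustrate the rules; they are not the actual shipping implementation.

# YYYY-MM-DD HH:mm:ss, with one or more spaces allowed between DD and HH.
_DATETIME_RE = re.compile(r"^\d{4}-\d{2}-\d{2} +\d{2}:\d{2}:\d{2}$")


def convert_char(value: str, max_length: int) -> Optional[str]:
    """Truncate a char/varchar value; an empty string becomes None (Null)."""
    if value == "":
        return None
    return value[:max_length]


def is_valid_datetime_format(value: str) -> bool:
    """Check the shape of a datetime value (assumption: shape check only)."""
    return _DATETIME_RE.match(value) is not None


def resolve_missing(log: dict, field: str, default: Optional[str] = None) -> Optional[str]:
    """Return the log value, or the column default (None means Null) if the field is absent."""
    return log.get(field, default)


print(convert_char("012345", 3))                           # 012
print(convert_char("", 3))                                 # None
print(is_valid_datetime_format("2022-01-27   20:50:13"))   # True
print(is_valid_datetime_format("27/Jan/2022 20:50:13"))    # False
```

A value for which `is_valid_datetime_format` returns False would still be shipped, but as Null, according to the notes above.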

Procedure

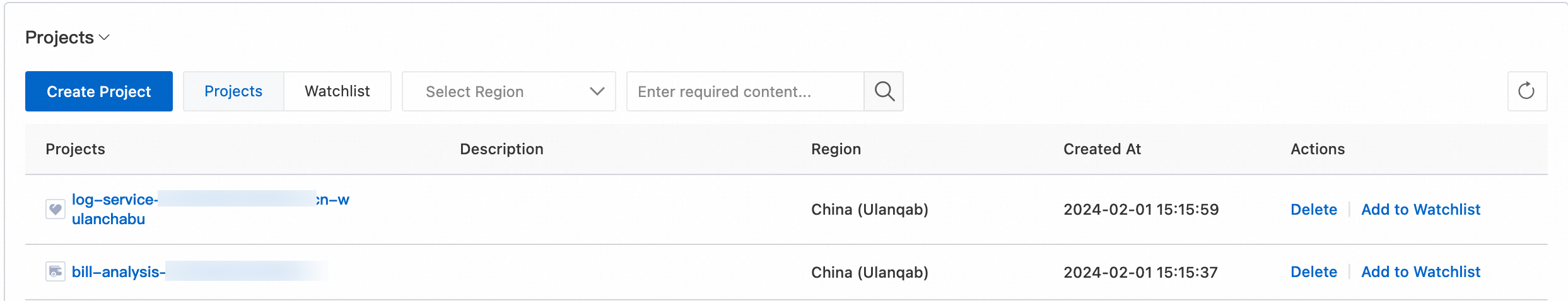

Log on to the Simple Log Service console.

In the Projects section, click the project you want.

Choose . On the Logstores tab, find the Logstore that you want to manage, click >, and then choose .

Move the pointer over MaxCompute and click +.

In the Data Shipping to MaxCompute panel, configure the parameters and click OK.

Set the Shipping Version parameter to New Version(Recommended). The following table describes the parameters.

Parameter

Description

Job Name

The name of the data shipping job.

Display Name

The display name of the data shipping job.

Job Description

The description of the data shipping job.

Destination Region

The region where the project of the MaxCompute table resides.

MaxCompute Endpoint

The endpoint for the region where your MaxCompute project resides. For more information, see Endpoints.

Tunnel Endpoint

The Tunnel endpoint for the region where your MaxCompute project resides. For more information, see Endpoints.

MaxCompute Project Name

The MaxCompute project to which the MaxCompute table belongs.

Table Name

The name of the MaxCompute table.

Read Permissions on Log Service

The method that is used to authorize the MaxCompute data shipping job to read data from the Logstore.

Default Role: The MaxCompute data shipping job assumes the AliyunLogDefaultRole system role to read data from the Logstore. For more information, see Read data from a Logstore by using a default role.

Custom Role: The MaxCompute data shipping job assumes a custom role to read data from the Logstore.

You must grant the custom role the permissions to read data from the Logstore. Then, enter the Alibaba Cloud Resource Name (ARN) of the custom role in the Read Permissions on Log Service field. For more information, see Read data from a Logstore by using a custom role.

Write Permissions on MaxCompute

The method that is used to authorize the MaxCompute data shipping job to write data to the MaxCompute table.

Default Role: The MaxCompute data shipping job assumes the AliyunLogDefaultRole system role to write data to the MaxCompute table. For more information, see Write data to MaxCompute by using a default role.

Custom Role: The MaxCompute data shipping job assumes a custom role to write data to the MaxCompute table.

You must grant the custom role the permissions to write data to the MaxCompute table. Then, enter the ARN of the custom role in the Write Permissions on MaxCompute field.

If the Log Service project and the MaxCompute project belong to the same Alibaba Cloud account, obtain the ARN by following the instructions that are provided in Ship data within an Alibaba Cloud account.

If the Log Service project and the MaxCompute project belong to different Alibaba Cloud accounts, obtain the ARN by following the instructions that are provided in Ship data across Alibaba Cloud accounts.

AccessKey Pair: The MaxCompute data shipping job uses the AccessKey pair of a RAM user to write data to the MaxCompute table. For more information, see Write data to MaxCompute by using a RAM user.

Automatic Authorization

Click Authorize to automatically grant the RAM role the permissions to write data to MaxCompute.

Important: If you use a RAM user to perform the operations, the RAM user must have the permissions to manage the MaxCompute account.

If the automatic authorization fails, the following commands are returned. You can copy the commands to the MaxCompute console to manually complete the authorization. For more information, see the Use the CLI to grant permissions to the RAM role section in "Write data to MaxCompute by using a custom role".

USE xxxxx;
ADD USER RAM$xxxxx:`role/xxxxx`;
GRANT CreateInstance ON PROJECT xxxxx TO USER RAM$xxxxx:`role/xxxxx`;
GRANT Describe, Alter, update ON TABLE xxxxx TO USER RAM$xxxxx:`role/xxxxx`;

MaxCompute Common Column

The mappings between log fields and data columns in the MaxCompute table. In the left field, enter the name of a log field that you want to map to a data column in the MaxCompute table. In the right field, enter the name of the column. For more information, see Data model mapping.

Important: Log Service ships logs to MaxCompute based on the sequence of the specified log fields and MaxCompute table columns. Changing the names of these columns does not affect the data shipping process. If you change the schema of the MaxCompute table, you must reconfigure the mappings between the log fields and MaxCompute table columns.

The name of the log field that you specify in the left field cannot contain double quotation marks ("") or single quotation marks (''). The name cannot be a string that contains spaces.

If a log contains two fields that have the same name, such as request_time, Log Service displays one of the fields as request_time_0. The two fields are still stored as request_time in Log Service. When you configure a shipping rule, you can use only the original field name request_time.

If a log contains fields that have the same name, Log Service randomly ships the value of one of the fields. We recommend that you do not include fields that have the same name in your logs.

MaxCompute Partition Column

The mappings between log fields and partition key columns in the MaxCompute table. In the left field, enter the name of a log field that you want to map to a partition key column in the MaxCompute table. In the right field, enter the name of the column. For more information, see Data model mapping.

Note: You cannot specify _extract_others_, __extract_others__, or __extract_others_all__ as a partition key column.

Partition Format

The time partition format. For more information about the configuration examples and parameters of partition formats, see References.

Note: This parameter takes effect only if you set a left field in MaxCompute Partition Column to __partition_time__.

We recommend that you do not specify a time partition format that is accurate to seconds. If you specify a time partition format that is accurate to seconds, the number of partitions in the MaxCompute table may exceed the limit of 60,000.

Time Zone

The time zone that is used to format time and the time partition. For more information, see Time zones.

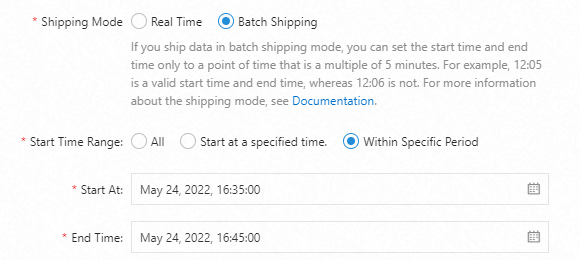

Shipping Mode

The data shipping mode. You can select Real Time or Batch Shipping.

Real Time: reads data from the Logstore in real time and ships the data to MaxCompute.

Batch Shipping: reads the data that is generated 5 to 10 minutes earlier than the current time from the Logstore and ships the data to MaxCompute in a batch.

For more information, see Shipping modes.

Start Time Range

The time range of data that the MaxCompute data shipping job can ship. The time range varies based on the time when logs are received. Valid values:

All: The MaxCompute data shipping job ships data in the Logstore from the first log until the job is manually stopped.

From Specific Time: The MaxCompute data shipping job ships data in the Logstore from the log that is received at the specified start time until the job is manually stopped.

Specific Time Range: The MaxCompute data shipping job ships data in the Logstore from the log that is received at the specified start time to the log that is received at the specified end time.

After a data shipping job is created, log data is shipped to MaxCompute in 1 hour after the data is written to the Logstore. After the log data is shipped, you can view the log data in MaxCompute. For more information, see How do I check the completeness of data that is shipped from Simple Log Service to MaxCompute?

| log_source | log_time | log_topic | time | ip | thread | log_extract_others | log_partition_time | status |
+------------+------------+-----------+-----------+-----------+-----------+------------------+--------------------+-----------+
| 10.10.*.* | 1642942213 | | 24/Jan/2022:20:50:13 +0800 | 10.10.*.* | 414579208 | {"url":"POST /PutData?Category=YunOsAccountOpLog&AccessKeyId=****************&Date=Fri%2C%2028%20Jun%202013%2006%3A53%3A30%20GMT&Topic=raw&Signature=******************************** HTTP/1.1","user-agent":"aliyun-sdk-java"} | 2022_01_23_20_50 | 200 |
+------------+------------+-----------+-----------+-----------+-----------+------------------+--------------------+-----------+

Data model mapping

If a data shipping job ships data from Log Service to MaxCompute, the data model mapping between the two services is enabled. The following list describes the usage notes and provides examples.

A MaxCompute table contains at least one data column and one partition key column.

We recommend that you use the following reserved fields in Log Service: __partition_time__, __source__, and __topic__.

Each MaxCompute table can have a maximum of 60,000 partitions. If the number of partitions exceeds the upper limit, data cannot be written to the table.

The previous name of the system reserved field __extract_others__ is _extract_others_. Both names can be used together.

The values of a partition key column in a MaxCompute table cannot be the reserved words or keywords of MaxCompute. For more information, see Reserved words and keywords.

The values of a partition key column in a MaxCompute table cannot be empty strings. The fields that are mapped to partition key columns must be reserved fields or log content fields. For the old version of data shipping, use the cast function to convert a field of the string type to the type of the mapped partition key column. If the value of a field that is mapped to a partition key column is an empty string due to conversion failure, the log that records the field value is discarded during data shipping.

A log field in Log Service can be mapped to only one data column or partition key column in a MaxCompute table. Field redundancy is not supported.

The following table describes the mapping relationships between MaxCompute data columns, partition key columns, and Log Service fields. For more information about reserved fields in Log Service, see Reserved fields.

| Column type in MaxCompute | Column name in MaxCompute | Data type in MaxCompute | Field name in Log Service | Field type in Log Service | Description |
| --- | --- | --- | --- | --- | --- |
| Data column | log_source | string | __source__ | Reserved field | The source of the log. |
| Data column | log_time | bigint | __time__ | Reserved field | The UNIX timestamp of the log. This field corresponds to the Time field in the data model. |
| Data column | log_topic | string | __topic__ | Reserved field | The topic of the log. |
| Data column | time | string | time | Log content field | This field is parsed from logs and corresponds to the key-value pair in the data model. In most cases, the value of the __time__ field in the data that is collected by using Logtail is the same as the value of the time field. |
| Data column | ip | string | ip | Log content field | This field is parsed from logs. |
| Data column | thread | string | thread | Log content field | This field is parsed from logs. |
| Data column | log_extract_others | string | __extract_others__ | Reserved field | Other log fields that are not mapped in the configuration are serialized into JSON data based on key-value pairs. The JSON data uses a single-level structure, and JSON nesting is not supported for the log fields. |
| Partition key column | log_partition_time | string | __partition_time__ | Reserved field | This field is calculated based on the value of the __time__ field in a log. You can specify a partition granularity for the field. |
| Partition key column | status | string | status | Log content field | This field is parsed from logs. The value of this field supports enumeration to ensure that the number of partitions does not exceed the upper limit. |
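Several of the mapping rules stated in this topic can be checked before a job is configured. The following Python sketch is a hypothetical pre-check, not part of any Log Service SDK: the function name `validate_mappings` and the (log field, column) pair representation are assumptions made for illustration. It also assumes that the restriction on `__extract_others__` and its variants applies to the log field on the left side of a partition mapping.

```python
from typing import List, Tuple

Mapping = Tuple[str, str]  # (log field in Log Service, column in MaxCompute)

# Fields that the topic says cannot be used for partition key columns.
FORBIDDEN_PARTITION_FIELDS = {
    "_extract_others_",
    "__extract_others__",
    "__extract_others_all__",
}


def validate_mappings(common: List[Mapping], partition: List[Mapping]) -> List[str]:
    """Return a list of rule violations; an empty list means no violation was found."""
    errors = []
    if not common:
        errors.append("at least one data column is required")
    if not partition:
        errors.append("at least one partition key column is required")

    seen = set()
    for field, _column in common + partition:
        # Log field names cannot contain quotation marks or spaces.
        if any(ch in field for ch in "\"' "):
            errors.append(f"{field!r}: must not contain quotation marks or spaces")
        # A log field can be mapped to only one data or partition key column.
        if field in seen:
            errors.append(f"{field!r}: mapped to more than one column")
        seen.add(field)

    for field, _column in partition:
        if field in FORBIDDEN_PARTITION_FIELDS:
            errors.append(f"{field!r}: cannot be used for a partition key column")
    return errors


# The mappings from the table above pass the check.
common_columns = [
    ("__source__", "log_source"), ("__time__", "log_time"),
    ("__topic__", "log_topic"), ("time", "time"), ("ip", "ip"),
    ("thread", "thread"), ("__extract_others__", "log_extract_others"),
]
partition_columns = [("__partition_time__", "log_partition_time"), ("status", "status")]
print(validate_mappings(common_columns, partition_columns))  # []
```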

Shipping modes

The new version of the data shipping feature supports the following shipping modes:

Real Time: reads data from a Logstore in real time and ships the data to MaxCompute.

Batch Shipping: reads the data that is generated 5 to 10 minutes earlier than the current time from a Logstore and ships the data to MaxCompute in a batch.

If you set the Shipping Mode parameter to Batch Shipping, you can set the Start At or End Time parameter in the Start Time Range section only to a point in time that is a multiple of 5 minutes. For example, 2022-05-24 16:35:00 is a valid start time or end time, whereas 2022-05-24 16:36:00 is not.
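The 5-minute alignment rule can be checked with a few lines of Python. This is a minimal sketch: the function name `is_valid_batch_boundary` is hypothetical, and the requirement that the seconds component is 0 is an assumption inferred from the example above.

```python
from datetime import datetime


def is_valid_batch_boundary(value: str) -> bool:
    """Return True if the time falls on a 5-minute boundary.

    Assumption: the seconds component must also be 0.
    """
    moment = datetime.strptime(value, "%Y-%m-%d %H:%M:%S")
    return moment.minute % 5 == 0 and moment.second == 0


print(is_valid_batch_boundary("2022-05-24 16:35:00"))  # True
print(is_valid_batch_boundary("2022-05-24 16:36:00"))  # False
```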

You can ship the data of the __receive_time__ field in batch shipping mode. The value of the __receive_time__ field indicates the time when a log is received by Log Service. You can use a time partition format to configure the value format for the __receive_time__ field. The time can be accurate to 30 minutes. For more information about the time partition format, see References.

If you want to ship the data of the __receive_time__ field, you can add this field only in the MaxCompute Partition Column parameter.

References

__partition_time__ field

In most cases, MaxCompute filters data by time or uses the log time field as a partition key column.

Format

The value of the __partition_time__ field is calculated based on the value of the __time__ field in Log Service. The value is a time string that is generated based on the time zone and time partition format. The value of the date partition key column is specified based on an interval of 1,800 seconds. This prevents the number of partitions in a single MaxCompute table from exceeding the limit.

For example, if the timestamp of a log in Log Service is 27/Jan/2022 20:50:13 +0800, Log Service calculates the value of the __time__ field based on the timestamp. The value is a UNIX timestamp of 1643287813. The following table describes the values of the time partition column in different configurations.

| Partition Format | __partition_time__ |
| --- | --- |
| %Y_%m_%d_%H_%M_00 | 2022_01_27_20_30_00 |
| %Y_%m_%d_%H_%M | 2022_01_27_20_30 |
| %Y%m%d | 20220127 |
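The values in the table can be reproduced with a short calculation. The following Python sketch is an approximation of the described behavior, not the service's implementation: it assumes that the timestamp is rounded down to the 1,800-second interval in UNIX time, that the partition format specifiers behave like `strftime` directives, and that the time zone is a fixed UTC offset. The function name `partition_time` is hypothetical.

```python
from datetime import datetime, timedelta, timezone

PARTITION_INTERVAL_SECONDS = 1800  # interval stated in the text above


def partition_time(unix_ts: int, fmt: str, utc_offset_hours: int = 8) -> str:
    """Round the timestamp down to the interval, then format it in the given time zone."""
    floored = unix_ts - unix_ts % PARTITION_INTERVAL_SECONDS
    tz = timezone(timedelta(hours=utc_offset_hours))
    return datetime.fromtimestamp(floored, tz).strftime(fmt)


log_time = 1643287813  # 27/Jan/2022 20:50:13 +0800
print(partition_time(log_time, "%Y_%m_%d_%H_%M_00"))  # 2022_01_27_20_30_00
print(partition_time(log_time, "%Y_%m_%d_%H_%M"))     # 2022_01_27_20_30
print(partition_time(log_time, "%Y%m%d"))             # 20220127
```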

Usage

You can use the __partition_time__ field to filter data to prevent a full-table scan. For example, you can execute the following query statement to query the log data of January 26, 2022:

select * from {ODPS_TABLE_NAME} where log_partition_time >= "2022_01_26" and log_partition_time < "2022_01_27";

__extract_others__ and __extract_others_all__ fields

The value of the __extract_others__ field contains all fields that are not mapped, excluding the __topic__, __tag__:*, and __source__ fields.

The value of the __extract_others_all__ field contains all fields that are not mapped, including the __topic__, __tag__:*, and __source__ fields.
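The difference between the two fields can be illustrated as follows. This Python sketch only models the rules described above: the function name `extract_others`, the sample log, and the sorted key order of the JSON output are assumptions, and the actual serialization that Log Service produces may differ.

```python
import json

# Reserved fields that __extract_others__ leaves out but __extract_others_all__ keeps.
EXCLUDED_FIELDS = {"__topic__", "__source__"}
TAG_PREFIX = "__tag__:"


def extract_others(log: dict, mapped_fields: set, include_all: bool = False) -> str:
    """Serialize unmapped fields into single-level JSON.

    include_all=False models __extract_others__;
    include_all=True models __extract_others_all__.
    """
    remaining = {}
    for key, value in log.items():
        if key in mapped_fields:
            continue  # mapped fields go to their own columns
        is_reserved = key in EXCLUDED_FIELDS or key.startswith(TAG_PREFIX)
        if is_reserved and not include_all:
            continue
        remaining[key] = value
    return json.dumps(remaining, sort_keys=True)


# Made-up sample log for illustration.
sample_log = {
    "__topic__": "raw",
    "__source__": "10.10.0.1",
    "__tag__:__hostname__": "host-1",
    "ip": "10.10.0.1",
    "url": "POST /PutData HTTP/1.1",
    "user-agent": "aliyun-sdk-java",
}
mapped = {"ip"}

print(extract_others(sample_log, mapped))
print(extract_others(sample_log, mapped, include_all=True))
```

In this sketch, the first call returns only the `url` and `user-agent` fields, whereas the second call also returns the `__topic__`, `__source__`, and `__tag__:__hostname__` fields.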