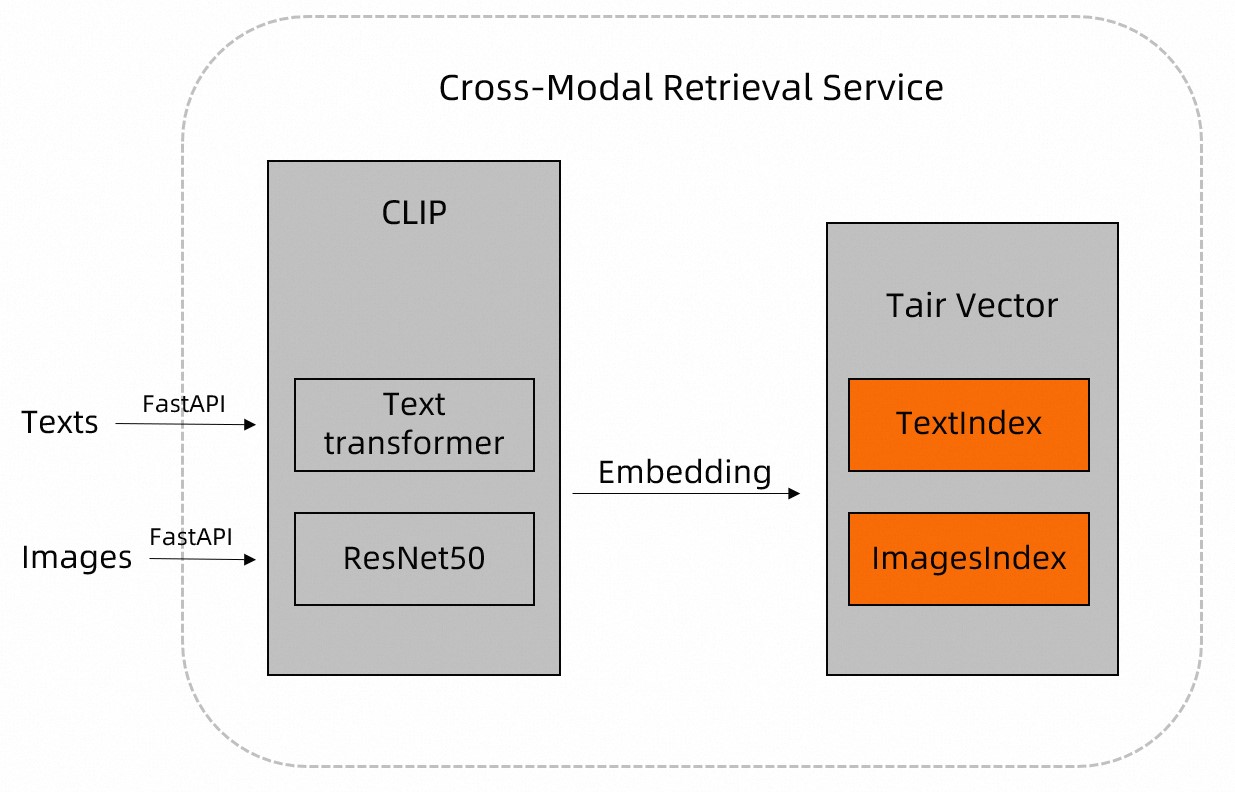

This topic describes how to implement cross-modal image-text retrieval by using TairVector.

Background information

The Internet is flooded with unstructured information. In this context, models such as the Contrastive Language-Image Pre-Training (CLIP) neural network have emerged to meet the need for cross-modal data retrieval. DAMO Academy provides an open source CLIP model that has the Text Transformer and ResNet architectures built in to extract features from unstructured data and parse them into structured data.

When it comes to Tair, you can use CLIP to preprocess unstructured data like images and documents, store the results in your Tair instance, and then use the approximate nearest neighbor (ANN) search algorithm of TairVector to implement cross-modal image-text data retrieval. For more information about TairVector, see Vector.

Solution overview

Download images as test data.

In this example, the following test data is used:

Images: over 7,000 images of animal pets from an open source dataset on Extreme Mart.

Texts: "a dog", "a white dog", and "a running white dog".

Connect to your Tair instance. For the specific code implementation, refer to the get_tair function in the following sample code.

Create two vector indexes in the Tair instance, one for feature vectors of images and one for feature vectors of texts. For the specific code implementation, refer to the create_index function in the following sample code.

Write the preceding images and texts to the Tair instance.

In this case, CLIP is used to preprocess the images and texts. Then, the TVS.HSET command of TairVector is run to write the names and feature information of the images and texts to the Tair instance. For the specific code implementation, refer to the insert_images and upsert_text functions in the following sample code.

Perform cross-modal retrieval on the Tair instance.

Use a text to retrieve images

Use CLIP to preprocess the text and run the TVS.KNNSEARCH command of TairVector to retrieve images that are the most similar to what the text describes from the Tair instance. For the specific code implementation, refer to the query_images_by_text function in the following sample code.

Use an image to retrieve texts

Use CLIP to preprocess the image and run the TVS.KNNSEARCH command of TairVector to retrieve texts that are the most similar to what the image shows from the Tair instance. For the specific code implementation, refer to the query_texts_by_image function in the following sample code.

Note: You do not need to store the text and image that you use to retrieve data in the Tair instance.

TVS.KNNSEARCH allows you to use the topK parameter to specify the number of results to return. The smaller the distance value returned for a result, the higher the similarity between the query text or image and that result.
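The write and search steps above can be pictured with a small in-memory stand-in. The sketch below is not TairVector: it is a brute-force, pure-Python illustration that assumes the distance reported for an inner-product index is 1 minus the inner product of unit-normalized vectors. That definition is an assumption made here for illustration, so check the TairVector documentation for the exact one. The names `normalize`, `hset`, and `knn_search` and the 3-dimensional toy vectors are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple


def normalize(vec: Sequence[float]) -> List[float]:
    """Scale a vector to unit length so inner product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vec]


def hset(index: Dict[str, List[float]], key: str, vec: Sequence[float]) -> None:
    """Store a unit-normalized vector under a key (toy stand-in for a write)."""
    index[key] = normalize(vec)


def knn_search(
    index: Dict[str, List[float]], query: Sequence[float], top_k: int
) -> List[Tuple[str, float]]:
    """Exact top-K search; returns (key, distance) pairs, smallest distance first."""
    q = normalize(query)
    # Assumed distance definition: 1 - inner product of unit vectors.
    scored = [
        (key, 1.0 - sum(a * b for a, b in zip(q, vec)))
        for key, vec in index.items()
    ]
    scored.sort(key=lambda pair: pair[1])
    return scored[:top_k]


# Toy "image" vectors; the CLIP features in this topic have 1024 dimensions.
images: Dict[str, List[float]] = {}
hset(images, "dog.jpg", [0.9, 0.1, 0.0])
hset(images, "cat.jpg", [0.1, 0.9, 0.0])
hset(images, "car.jpg", [0.0, 0.1, 0.9])

# A toy "text" vector that points mostly in the same direction as dog.jpg.
for key, distance in knn_search(images, [1.0, 0.2, 0.0], top_k=2):
    print(f"key: {key}, distance: {distance:.4f}")
```

An ANN index such as HNSW aims to return the same kind of ranked list without comparing the query against every stored vector, at the cost of occasionally missing a true nearest neighbor.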

Sample code

In this example, Python 3.8 is used, and the following dependencies are installed: Tair-py, torch, Pillow (imported as PIL), matplotlib (which provides pyplot and pylab), and Chinese-CLIP (imported as cn_clip). You can run the pip3 install tair command to install Tair-py.
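Before running the sample, it can help to confirm that the modules it imports are available. The following is a minimal sketch that uses only the standard library. The helper name `missing_modules` is hypothetical, and the module names are taken from the import statements in the sample code.

```python
import importlib.util
from typing import Iterable, List


def missing_modules(names: Iterable[str]) -> List[str]:
    """Return the top-level module names that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


# Top-level modules imported by the sample code in this topic.
REQUIRED = ["tair", "torch", "PIL", "matplotlib", "pylab", "cn_clip"]

missing = missing_modules(REQUIRED)
if missing:
    print("Missing modules:", ", ".join(missing))
else:
    print("All required modules are importable.")
```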

#!/usr/bin/env python
# -*- coding: utf-8 -*-

from tair import Tair
from tair.tairvector import DistanceMetric
from tair import ResponseError
from typing import List
import torch
from PIL import Image
import pylab
from matplotlib import pyplot as plt
import os
import cn_clip.clip as clip
from cn_clip.clip import available_models

def get_tair() -> Tair:
    """
    This method is used to connect to a Tair instance.
    * host: the endpoint that is used to connect to the Tair instance.
    * port: the port number that is used to connect to the Tair instance. Default value: 6379.
    * password: the password of the default database account of the Tair instance. If you want to connect to the Tair instance by using a custom database account, you must specify the password in the username:password format.
    """
    tair: Tair = Tair(
        host="r-8vbehg90y9rlk9****pd.redis.rds.aliyuncs.com",
        port=6379,
        db=0,
        password="D******3",
        decode_responses=True
    )
    return tair

def create_index():
    """
    Create two vector indexes in the Tair instance, one for feature vectors of images and one for feature vectors of texts.
    The vector index for feature vectors of images is named index_images. The vector index for feature vectors of texts is named index_texts.
    * The vector dimension is 1024.
    * The inner product (IP) is used as the distance metric.
    * The Hierarchical Navigable Small World (HNSW) indexing algorithm is used.
    """
    # Create each index only if it does not already exist.
    ret = tair.tvs_get_index("index_images")
    if ret is None:
        tair.tvs_create_index("index_images", 1024, distance_type="IP",
                              index_type="HNSW")
    ret = tair.tvs_get_index("index_texts")
    if ret is None:
        tair.tvs_create_index("index_texts", 1024, distance_type="IP",
                              index_type="HNSW")

def insert_images(image_dir):
    """
    Specify the directory of the images that you want to store in the Tair instance. This method traverses all .jpg and .jpeg images in this directory.
    Additionally, this method calls the extract_image_features method to use CLIP to preprocess the images, obtains the feature vectors of these images, and then stores these feature vectors in the Tair instance.
    The feature vector of an image is stored with the following information:
    * Vector index name: index_images.
    * Key: the image path that contains the image name. Example: test/images/boxer_18.jpg.
    * Feature information: The vector dimension is 1024.
    """
    file_names = [f for f in os.listdir(image_dir) if f.endswith(('.jpg', '.jpeg'))]
    for file_name in file_names:
        # The full image path is used as the key so that the image can be opened again at query time.
        image_path = os.path.join(image_dir, file_name)
        image_feature = extract_image_features(image_path)
        tair.tvs_hset("index_images", image_path, image_feature)

def extract_image_features(img_name):
    """
    This method uses CLIP to preprocess an image and returns the feature vector of the image. The vector dimension is 1024.
    """
    image_data = Image.open(img_name).convert("RGB")
    infer_data = preprocess(image_data)
    infer_data = infer_data.unsqueeze(0).to("cuda")
    with torch.no_grad():
        image_features = model.encode_image(infer_data)
        # Normalize to unit length so that the inner product equals the cosine similarity.
        image_features /= image_features.norm(dim=-1, keepdim=True)
    return image_features.cpu().numpy()[0]  # Shape: (1024,)

def upsert_text(text):
    """
    Specify the text that you want to store in the Tair instance. This method calls the extract_text_features method to use CLIP to preprocess the text, obtains the feature vector of the text, and then stores the feature vector in the Tair instance.
    The feature vector of a text is stored with the following information:
    * Vector index name: index_texts.
    * Key: the text content. Example: a running dog.
    * Feature information: The vector dimension is 1024.
    """
    text_features = extract_text_features(text)
    tair.tvs_hset("index_texts", text, text_features)

def extract_text_features(text):
    """
    This method uses CLIP to preprocess a text and returns the feature vector of the text. The vector dimension is 1024.
    """
    text_data = clip.tokenize([text]).to("cuda")
    with torch.no_grad():
        text_features = model.encode_text(text_data)
        # Normalize to unit length so that the inner product equals the cosine similarity.
        text_features /= text_features.norm(dim=-1, keepdim=True)
    return text_features.cpu().numpy()[0]  # Shape: (1024,)

def query_images_by_text(text, topK):
    """
    This method uses a text to retrieve images.
    Specify the text content as the text parameter and the number of results that you want Tair to return as the topK parameter.
    This method uses CLIP to preprocess the text and runs the TVS.KNNSEARCH command of TairVector to retrieve images that are the most similar to what the text describes from the Tair instance.
    This method prints the distances and keys of the images retrieved. The smaller the distance of an image retrieved, the higher the similarity between the specified text and the image.
    """
    text_feature = extract_text_features(text)
    result = tair.tvs_knnsearch("index_images", topK, text_feature)
    for k, s in result:
        print(f'key : {k}, distance : {s}')
        # With decode_responses=True the key is already a str; decode only if bytes are returned.
        image_path = k.decode('utf-8') if isinstance(k, bytes) else k
        img = Image.open(image_path)
        plt.imshow(img)
        pylab.show()

def query_texts_by_image(image_path, topK=3):
    """
    This method uses an image to retrieve texts.
    Specify the image path as the image_path parameter and the number of results that you want Tair to return as the topK parameter.
    This method uses CLIP to preprocess the image and runs the TVS.KNNSEARCH command of TairVector to retrieve texts that are the most similar to what the image shows from the Tair instance.
    This method prints the distances and keys of the texts retrieved. The smaller the distance of a text retrieved, the higher the similarity between the specified image and the text.
    """
    image_feature = extract_image_features(image_path)
    result = tair.tvs_knnsearch("index_texts", topK, image_feature)
    for k, s in result:
        print(f'text : {k}, distance : {s}')

if __name__ == "__main__":
    # Connect to the Tair instance, and then create two vector indexes, one for feature vectors of images and one for feature vectors of texts.
    tair = get_tair()
    create_index()

    # Load CLIP.
    model, preprocess = clip.load_from_name("RN50", device="cuda", download_root="./")
    model.eval()

    # Specify the directory that contains the sample images. Example: /home/CLIP_Demo.
    insert_images("/home/CLIP_Demo")

    # Write the following sample texts: "a dog", "a white dog", "a running white dog".
    upsert_text("a dog")
    upsert_text("a white dog")
    upsert_text("a running white dog")

    # Use the "a running dog" text to retrieve three images that show a running dog.
    query_images_by_text("a running dog", 3)

    # Specify the path of an image to retrieve texts that describe what the image shows.
    query_texts_by_image("/home/CLIP_Demo/boxer_18.jpg", 3)

Results

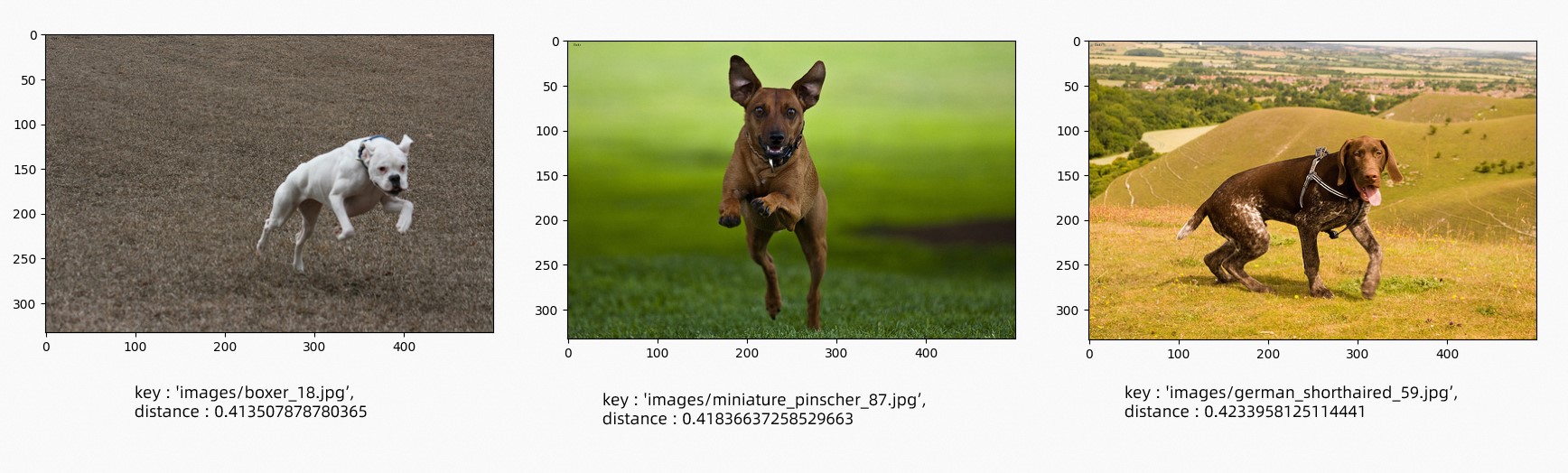

Use the "a running dog" text to retrieve three images that show a running dog.

Use the following image to retrieve texts that describe what the image shows.

The following code shows the results:

{
  "results": [
    { "text": "a running white dog", "distance": "0.4052203893661499" },
    { "text": "a white dog", "distance": "0.44666868448257446" },
    { "text": "a dog", "distance": "0.4553511142730713" }
  ]
}
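If results are captured as JSON in the shape shown above, the distances arrive as strings and need to be converted before they are compared numerically. The following is a minimal sketch that uses the values from this example; the names `RAW` and `rank_texts` are hypothetical.

```python
import json
from typing import List, Tuple

RAW = """
{ "results": [
    { "text": "a running white dog", "distance": "0.4052203893661499" },
    { "text": "a white dog", "distance": "0.44666868448257446" },
    { "text": "a dog", "distance": "0.4553511142730713" }
] }
"""


def rank_texts(raw: str) -> List[Tuple[str, float]]:
    """Parse the result JSON and return (text, distance) pairs, closest first."""
    items = json.loads(raw)["results"]
    # Distances are strings in the JSON, so convert them before sorting.
    pairs = [(item["text"], float(item["distance"])) for item in items]
    return sorted(pairs, key=lambda pair: pair[1])


ranked = rank_texts(RAW)
best_text, best_distance = ranked[0]
print(f"best match: {best_text!r} (distance {best_distance:.4f})")
```

In this example, the most specific description has the smallest distance, which matches the rule that a smaller distance indicates a higher similarity.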

Summary

Tair is an in-memory database service. Its TairVector feature supports indexing algorithms such as HNSW to accelerate vector retrieval, and you can combine it with CLIP to implement cross-modal image-text retrieval.

This combination can be used in scenarios such as commodity recommendations and writing based on images. Additionally, you can replace CLIP with other embedding models to implement cross-modal video-text or audio-text retrieval.
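One way to keep the embedding model replaceable is to have the storage and retrieval code depend only on a function that maps an input to a vector. The sketch below illustrates that idea with a deliberately trivial stand-in encoder. `Encoder`, `toy_text_encoder`, and `build_records` are hypothetical names, and a real setup would plug in a model's encode function and write the records to the Tair instance instead of returning them.

```python
import math
from typing import Callable, Dict, List

# An encoder is any callable that turns an input item into a feature vector.
Encoder = Callable[[str], List[float]]


def toy_text_encoder(text: str) -> List[float]:
    """Stand-in encoder: unit-normalized counts of vowels, consonants, and spaces."""
    vowels = sum(ch in "aeiou" for ch in text.lower())
    consonants = sum(ch.isalpha() for ch in text) - vowels
    spaces = text.count(" ")
    vec = [float(vowels), float(consonants), float(spaces)]
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0
    return [x / norm for x in vec]


def build_records(items: List[str], encode: Encoder) -> Dict[str, List[float]]:
    """Map each item to its feature vector using whichever encoder is supplied."""
    return {item: encode(item) for item in items}


records = build_records(["a dog", "a white dog"], toy_text_encoder)
for key, vector in records.items():
    print(key, [round(x, 3) for x in vector])
```

Because `build_records` only calls `encode`, swapping in a different model changes one argument. The vector dimension of the index must still match the output dimension of whichever encoder is used.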