PolarDB for MySQL can optimize binary log (binlog) writing for large transactions. This optimization helps prevent other transactions from being delayed or blocked by large transactions. This topic describes how to use optimized binlog writing for large transactions and the usage limits.

Background

After binary logging is enabled, the binlog data of a transaction is written into the binlog file when the transaction is committed. The following figure shows the process. When a transaction is executed, the generated binlogs are temporarily stored in the binlog cache of the transaction. If multiple transactions are committed at the same time, the transactions queue up to write their binlog data from the binlog cache to the binlog file. If one of the transactions such as Trx2 has a large binlog size, such as 1 GB, the transaction requires a longer period to write its binlog data to the binlog file. Consequently, other transactions in the queue are forced to wait, resulting in delays in their binlog writing and transaction commit. This latency can temporarily make the system unwritable and lead to issues such as a surge in slow SQL queries. As a result, applications may repeatedly retry failed operations, which causes a surge of new connections and further increases the system load.

To address this issue, PolarDB for MySQL provides the optimized binlog writing feature for large transactions. After you enable the feature, the binlog writing process of a large transaction no longer impedes the commit process of other transactions. This optimization prevents temporary write unavailability in the system, which could otherwise lead to issues such as a surge in slow SQL write requests or a rapid increase in connections.

Supported versions

Your PolarDB for MySQL cluster must be Enterprise Edition or Standard Edition and runs the following database engine versions:

PolarDB for MySQL 8.0.1 whose revision version is 8.0.1.1.42 or later.

PolarDB for MySQL 8.0.2 whose revision version is 8.0.2.2.25 or later.

For information about how to check the version of your cluster, see the "Query an engine version" section of the Engine versions 5.6, 5.7, and 8.0 topic.

Limits

After you enable optimized binlog writing for large transactions, binlogs generated by a large transaction are written into a dedicated binlog file. The following figure shows that the header of the dedicated binlog file contains events such as FORMAT DESCRIPTION EVENT and PREVIOUS GTIDS LOG EVENT. A special placeholder event called IGNORABLE LOG EVENT is also contained in the header. Downstream systems automatically ignore IGNORABLE LOG EVENT.

The following limits apply due to the special structure of the binlog file:

Downstream replication nodes cannot use database concurrency-based multi-threaded replication but can use logical clock concurrency-based multi-threaded replication. You must not specify

slave_parallel_workers>0andslave_parallel_type='DATABASE'in combination. However, you can specifyslave_parallel_workers> 0andslave_parallel_type= 'logical_clock'in combination.You cannot use checksum for binlog files of large transactions.

Usage

Set the loose_enable_large_trx_optimization parameter to ON to enable optimized binlog writing for large transactions. Use the loose_binlog_large_trx_threshold_up parameter to define the threshold binlog size that triggers optimized binlog writing for large transactions.

For information about how to configure parameters, see Configure cluster and node parameters.

The following table describes the parameters.

Parameter | Level | Description |

loose_enable_large_trx_optimization | Global | Specifies whether to enable or disable optimized binlog writing for large transactions. Valid values:

This parameter immediately takes effect without the need to restart the cluster. |

loose_binlog_large_trx_threshold_up | Global | The threshold binlog size that triggers optimized binlog writing for large transactions. After optimized binlog writing for large transactions is enabled, optimized binlog writing is triggered when the size of binlogs generated by a transaction exceeds the threshold.

This parameter immediately takes effect without the need to restart the cluster. |

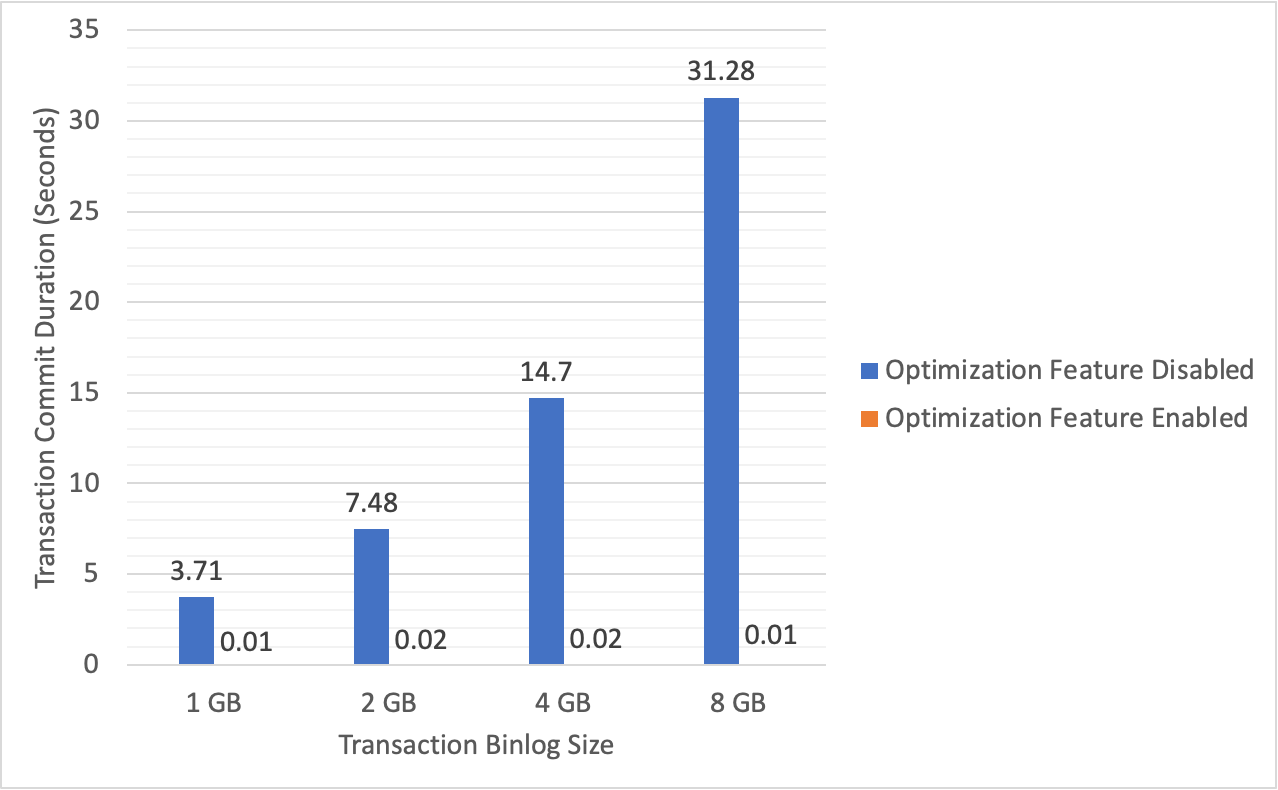

Performance comparison

The following figure shows the differences between the time required for a cluster that uses the PSL5 storage type to commit large transactions before and after optimized binlog writing for large transactions is enabled.

The figure shows a substantial reduction in the time required to commit large transactions after optimized binlog writing for large transactions is enabled. Consequently, issues such as heavy I/O load and prolonged write locks caused by large transaction commits are eliminated.