The Vertex Clustering Coefficient is a metric in network analysis used to quantify the degree of clustering among a node's neighbors. Specifically, it represents the ratio of the actual number of edges present among a node's neighbors to the total possible number of edges that could exist between them. The value of this coefficient ranges from 0 to 1, with a higher value indicating a tighter connection among the neighbors of the node, reflecting the local clustering characteristics within the network.
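The ratio described above can be written compactly. The symbols here are notational assumptions introduced for illustration: $k_v$ is the number of neighbors of node $v$, and $e_v$ is the number of edges that actually exist among those neighbors.

```latex
C(v) \;=\; \frac{e_v}{\binom{k_v}{2}} \;=\; \frac{2\,e_v}{k_v\,(k_v - 1)}, \qquad k_v \ge 2
```

For example, a node with 5 neighbors that share 4 edges among themselves has $C(v) = 2 \cdot 4 / (5 \cdot 4) = 0.4$.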

Algorithm description

In an undirected graph, the clustering coefficient of a node represents the density of connections around that node. The central node of a star network has a density of 0, while every node in a fully connected network has a density of 1.

In network analysis, star networks and fully connected networks are two typical network topologies:

Star network: This structure consists of a central node and multiple peripheral nodes, with all peripheral nodes connected only to the central node. The characteristic of a star network is that the clustering coefficient of the central node is 0 because its neighbors (the peripheral nodes) do not have direct connections with each other.

Fully connected network: In this structure, every node is directly connected to all other nodes. The characteristic of a fully connected network is that all nodes have a clustering coefficient of 1 because all possible connections exist between each node and its neighbors.

These two structures represent the extreme cases in network topology, with the star network having the lowest local clustering and the fully connected network having the highest local clustering.
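The two extreme cases can be checked with a minimal Python sketch. This is an illustration, not the component's implementation: the helper names `build_adjacency` and `clustering_coefficient` are hypothetical, and returning 0 for nodes with fewer than two neighbors is an assumed convention.

```python
from itertools import combinations


def build_adjacency(edges):
    """Build an undirected adjacency map {node: set of neighbors}."""
    adj = {}
    for u, w in edges:
        adj.setdefault(u, set()).add(w)
        adj.setdefault(w, set()).add(u)
    return adj


def clustering_coefficient(adj, v):
    """Fraction of pairs of v's neighbors that are directly connected."""
    neighbors = adj[v]
    k = len(neighbors)
    if k < 2:
        return 0.0  # assumed convention: no neighbor pairs means 0
    links = sum(1 for a, b in combinations(neighbors, 2) if b in adj[a])
    return 2.0 * links / (k * (k - 1))


# Star network: one hub connected to four peripheral nodes.
star = build_adjacency([("hub", leaf) for leaf in ["a", "b", "c", "d"]])

# Fully connected network on four nodes.
complete = build_adjacency(combinations(["a", "b", "c", "d"], 2))

print(clustering_coefficient(star, "hub"))
print([clustering_coefficient(complete, v) for v in sorted(complete)])
```

The hub of the star yields 0 because none of its neighbors are linked to each other, while every node of the fully connected network yields 1.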

Configure the component

Method 1: Configure the component on the pipeline page

Add a Vertex Clustering Coefficient component on the pipeline page and configure the following parameters:

| Category | Parameter | Description |
| -------- | --------- | ----------- |
| Fields Setting | Start Vertex | The start vertex column in the edge table. |
| Fields Setting | End Vertex | The end vertex column in the edge table. |
| Parameters Setting | Largest Vertex Degree | If the vertex degree is larger than the value of this parameter, sampling is required. Default value: 500. |
| Tuning | Workers | The number of vertices for parallel job execution. The degree of parallelism and framework communication costs increase with the value of this parameter. |
| Tuning | Memory Size per Worker (MB) | The maximum size of memory that a single job can use. Unit: MB. Default value: 4096. If the size of used memory exceeds the value of this parameter, the |
| Tuning | Data Split Size (MB) | The data split size. Unit: MB. Default value: 64. |

Method 2: Configure the component by using PAI commands

You can configure the Vertex Clustering Coefficient component by using PAI commands. You can use the SQL Script component to run PAI commands. For more information, see Scenario 4: Execute PAI commands within the SQL script component in the "SQL Script" topic.

    PAI -name NodeDensity
        -project algo_public
        -DinputEdgeTableName=NodeDensity_func_test_edge
        -DfromVertexCol=flow_out_id
        -DtoVertexCol=flow_in_id
        -DoutputTableName=NodeDensity_func_test_result
        -DmaxEdgeCnt=500;

| Parameter | Required | Default value | Description |
| --------- | -------- | ------------- | ----------- |
| inputEdgeTableName | Yes | No default value | The name of the input edge table. |
| inputEdgeTablePartitions | No | Full table | The partitions in the input edge table. |
| fromVertexCol | Yes | No default value | The start vertex column in the input edge table. |
| toVertexCol | Yes | No default value | The end vertex column in the input edge table. |
| outputTableName | Yes | No default value | The name of the output table. |
| outputTablePartitions | No | No default value | The partitions in the output table. |
| lifecycle | No | No default value | The lifecycle of the output table. |
| maxEdgeCnt | No | 500 | If the vertex degree is greater than the value of this parameter, sampling is required. |
| workerNum | No | No default value | The number of vertices for parallel job execution. The degree of parallelism and framework communication costs increase with the value of this parameter. |
| workerMem | No | 4096 | The maximum size of memory that a single job can use. Unit: MB. If the size of used memory exceeds the value of this parameter, the |
| splitSize | No | 64 | The data split size. Unit: MB. |

Example

Add a SQL Script component. Deselect Use Script Mode and Whether the system adds a create table statement. Enter the following SQL statements.

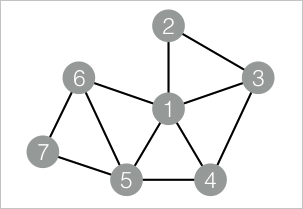

drop table if exists NodeDensity_func_test_edge; create table NodeDensity_func_test_edge as select * from ( select '1' as flow_out_id, '2' as flow_in_id union all select '1' as flow_out_id, '3' as flow_in_id union all select '1' as flow_out_id, '4' as flow_in_id union all select '1' as flow_out_id, '5' as flow_in_id union all select '1' as flow_out_id, '6' as flow_in_id union all select '2' as flow_out_id, '3' as flow_in_id union all select '3' as flow_out_id, '4' as flow_in_id union all select '4' as flow_out_id, '5' as flow_in_id union all select '5' as flow_out_id, '6' as flow_in_id union all select '5' as flow_out_id, '7' as flow_in_id union all select '6' as flow_out_id, '7' as flow_in_id )tmp; drop table if exists NodeDensity_func_test_result; create table NodeDensity_func_test_result ( node string, node_cnt bigint, edge_cnt bigint, density double, log_density double );Data structure

Add a SQL Script component. Deselect Use Script Mode and Whether the system adds a create table statement. Enter the following PAI commands and connect the two SQL Script components.

drop table if exists ${o1}; PAI -name NodeDensity -project algo_public -DinputEdgeTableName=NodeDensity_func_test_edge -DfromVertexCol=flow_out_id -DtoVertexCol=flow_in_id -DoutputTableName=${o1} -DmaxEdgeCnt=500;Click

in the upper left corner to run the pipeline.

in the upper left corner to run the pipeline.Right-click the SQL Script component created in Step 2 and choose View Data > SQL Script Output to view the training results.

| node | node_cnt | edge_cnt | density | log_density |
| ---- | -------- | -------- | ------- | ----------- |
| 1 | 5 | 4 | 0.4 | 1.45657 |
| 2 | 2 | 1 | 1.0 | 1.24696 |
| 3 | 3 | 2 | 0.66667 | 1.35204 |
| 4 | 3 | 2 | 0.66667 | 1.35204 |
| 5 | 4 | 3 | 0.5 | 1.41189 |
| 6 | 3 | 2 | 0.66667 | 1.35204 |
| 7 | 2 | 1 | 1.0 | 1.24696 |
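The `node_cnt`, `edge_cnt`, and `density` columns can be cross-checked locally with a short Python sketch over the same edge list. It assumes that `node_cnt` is the number of neighbors of a node and `edge_cnt` is the number of edges among those neighbors, an interpretation that is consistent with the table but not stated by the component. The helper name `density_row` is hypothetical, and `log_density` is left out because its formula is not given here.

```python
from itertools import combinations

# Edge list from the NodeDensity_func_test_edge example table.
edges = [
    ("1", "2"), ("1", "3"), ("1", "4"), ("1", "5"), ("1", "6"),
    ("2", "3"), ("3", "4"), ("4", "5"), ("5", "6"), ("5", "7"),
    ("6", "7"),
]

# Treat the graph as undirected: record each edge in both directions.
adj = {}
for u, w in edges:
    adj.setdefault(u, set()).add(w)
    adj.setdefault(w, set()).add(u)


def density_row(node):
    """Return (node, node_cnt, edge_cnt, density) under the assumed column meanings."""
    neighbors = sorted(adj[node])
    node_cnt = len(neighbors)
    # Count neighbor pairs that are themselves connected.
    edge_cnt = sum(1 for a, b in combinations(neighbors, 2) if b in adj[a])
    possible = node_cnt * (node_cnt - 1) // 2
    density = edge_cnt / possible if possible else 0.0
    return node, node_cnt, edge_cnt, round(density, 5)


rows = [density_row(n) for n in sorted(adj)]
for row in rows:
    print(row)
```

For node 1, the five neighbors (2, 3, 4, 5, 6) share four edges out of ten possible pairs, which gives the density of 0.4 shown in the first row.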