The object detection training (easycv) component provides mainstream YOLOX and fully convolutional one-stage object detector (FCOS) models for object detection training. You can use the object detection (easycv) component to train object detection models that are used for inference or applied to business scenarios in which you want to identify potentially illicit content in images. This topic describes how to configure the object detection (easycv) component and provides examples on how to use the component in Platform for AI (PAI).

Prerequisites

Object Storage Service (OSS) is activated, and Machine Learning Designer is authorized to access OSS. For more information, see Activate OSS and Grant the permissions that are required to use Machine Learning Designer.

Limits

The object detection (easycv) component is available only in Machine Learning Designer of PAI.

The object detection (easycv) component can run only on the computing resources of Deep Learning Containers (DLC).

Configure the component in the PAI console

Input ports

Input port (from left to right)

Data type

Recommended upstream component

Required

TFRecords for Training

OSS

No.

If you do not want to use a port for this input, you need to configure the oss path to training tfrecord parameter on the Fields Setting tab.

TFRecords for Evaluation

OSS

No.

If you do not want to use a port for this input, you need to configure the oss path to evaluation tfrecord parameter on the Fields Setting tab.

yolov5 class list file

OSS

No.

If you do not want to use a port for this input, you need to configure the oss path of class list file parameter on the Fields Setting tab.

Component parameters

Tab

Parameter

Required

Description

Default value

Fields Setting

model type

Yes

The type of the model that you want to train. Valid values:

FCOS

YOLOX

YOLOv5

YOLOv7

YOLOX

oss dir to save model

No

The OSS path in which you want to store the trained model. Example:

examplebucket.oss-cn-shanghai-internal.aliyuncs.com/test/ckpt/. If you leave this parameter empty, the default path of the workspace is used.

N/A

oss annotation path for training set

No

The OSS path of the annotation file for the training set. If you set the data format to DetSourcePAI, upload the file in the .manifest format.

If you set the data format to COCO, upload the file in the .json format.

If you configured an input port, you can leave this parameter empty. If you configure both the input port and this parameter, the training task uses the values provided by the input port.

N/A

oss annotation path for validation set

No

The OSS path of the annotation file for the validation set. If you set the data format to DetSourcePAI, upload the file in the .manifest format.

If you set the data format to COCO, upload the file in the .json format.

If you configured an input port, you can leave this parameter empty. If you configure both the input port and this parameter, the training task uses the values provided by the input port.

N/A

oss path of class list file

No

The OSS path of the class list file. The file must be in the .txt format and contain the list of class labels.

If you configured an input port, you can leave this parameter empty. If you configure both the input port and this parameter, the training task uses the values provided by the input port.

N/A

oss path to pretrained model

No

The Object Storage Service (OSS) path in which your pre-trained model is stored. If you have a pre-trained model, set this parameter to the OSS path of your pre-trained model. If you leave this parameter empty, the default pre-trained model provided by PAI is used.

N/A

oss path to training tfrecord

Yes

The OSS path of the training data. This parameter is required only if you set the data format parameter to COCO.

N/A

oss path to evaluation tfrecord

Yes

The OSS path of the evaluation data. This parameter is required only if you set the data format parameter to COCO.

N/A

data format

Yes

The format of the dataset. Valid values:

COCO

DetSourcePAI (Model types YOLOv5 and YOLOv7 only support datasets in DetSourcePAI format)

DetSourcePAI

Parameters Setting

YOLOX model Structure

Yes

This parameter is required only if you set the model type to YOLOX. The YOLOX model structure that you want to use. Valid values:

yolox-s

yolox-m

yolox-l

yolox-x

yolox-s

num classes

Yes

The number of categories in the dataset.

20

image scale

Yes

The size of the image after resizing. Separate the height and width with a space. Example: 320 320.

320 320

optimizer

Yes

This parameter is required only if you set the model type parameter to YOLOX.

The optimization method for model training. Valid values:

momentum

adam

momentum

initial learning rate

Yes

The initial learning rate.

0.01

batch size

Yes

The size of a training batch. This parameter specifies the number of samples that are processed in each training iteration.

8

eval batch size

Yes

The size of an evaluation batch. This parameter specifies the number of samples that are processed in each evaluation iteration.

8

num epochs

Yes

The total number of training epochs.

Note: If you set the model type to YOLOX, the total number of epochs must be greater than the sum of the warmup epochs and the epochs that have a steady learning rate. The epochs that have a steady learning rate are specified by the last no augmented lr epochs parameter.

20

loss frequency

No

The print frequency of the loss value. The default value is 200, which indicates that the loss value is printed every 200 batches.

Verification frequency

No

The frequency at which verification is performed. The default value is 2, which indicates that a verification is performed every two epochs.

warmup epochs

No

The number of warmup epochs. This parameter is required only if you set the model type to YOLOX.

5

last no augmented lr epochs

No

The number of epochs that have a steady learning rate. This parameter is required only if you set the model type to YOLOX.

5

type of export model

Yes

The format in which the model is exported. Valid values:

raw

jit (Model types YOLOv5 and YOLOv7 do not support exporting in jit format)

onnx

raw

save checkpoint epoch

No

The frequency at which a checkpoint is saved. A value of 1 indicates that a checkpoint is saved each time the training task completes one pass over all the training data.

1

Tuning

gpu machine type

Yes

You need to specify a GPU specification for this algorithm.

4

evtorch model with fp16

No

Specifies whether to enable FP16 to reduce memory usage during model training.

false

single worker or distributed on MaxCompute or DLC

Yes

Only distribute_on_dlc is available.

distribute_on_dlc
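
The constraints that appear in the parameter descriptions above can be collected into a small check that runs before you submit a training task. The following Python sketch is not part of PAI or EasyCV. The `DetectionConfig` class, its field names, and the `validate` function are hypothetical names introduced only for illustration. The sketch encodes only rules stated in this topic: the valid values of the model type, data format, and type of export model parameters, the DetSourcePAI and jit restrictions for YOLOv5 and YOLOv7, the epoch rule for YOLOX, and the image scale format.

```python
from dataclasses import dataclass
from typing import List

# Valid values copied from the parameter descriptions in this topic.
MODEL_TYPES = {"FCOS", "YOLOX", "YOLOv5", "YOLOv7"}
DATA_FORMATS = {"COCO", "DetSourcePAI"}
EXPORT_TYPES = {"raw", "jit", "onnx"}


@dataclass
class DetectionConfig:
    """Hypothetical container for a few component parameters (not a PAI API)."""
    model_type: str = "YOLOX"
    data_format: str = "DetSourcePAI"
    export_type: str = "raw"
    image_scale: str = "320 320"
    num_epochs: int = 20
    warmup_epochs: int = 5
    last_no_aug_epochs: int = 5


def validate(cfg: DetectionConfig) -> List[str]:
    """Return a list of problems; an empty list means no documented rule is violated."""
    problems = []
    if cfg.model_type not in MODEL_TYPES:
        problems.append(f"unknown model type: {cfg.model_type}")
    if cfg.data_format not in DATA_FORMATS:
        problems.append(f"unknown data format: {cfg.data_format}")
    if cfg.export_type not in EXPORT_TYPES:
        problems.append(f"unknown export type: {cfg.export_type}")

    # YOLOv5 and YOLOv7 support only DetSourcePAI datasets and cannot export jit.
    if cfg.model_type in {"YOLOv5", "YOLOv7"}:
        if cfg.data_format != "DetSourcePAI":
            problems.append(f"{cfg.model_type} supports only the DetSourcePAI data format")
        if cfg.export_type == "jit":
            problems.append(f"{cfg.model_type} does not support the jit export type")

    # For YOLOX, num epochs must exceed warmup epochs + steady-learning-rate epochs.
    if cfg.model_type == "YOLOX":
        if cfg.num_epochs <= cfg.warmup_epochs + cfg.last_no_aug_epochs:
            problems.append("num epochs must be greater than warmup epochs "
                            "plus last no augmented lr epochs")

    # image scale is two integers separated by a space, for example "320 320".
    parts = cfg.image_scale.split()
    if len(parts) != 2 or not all(p.isdigit() for p in parts):
        problems.append("image scale must be two integers separated by a space")
    return problems
```

With the default values listed in the table, `validate(DetectionConfig())` returns an empty list. Setting num epochs to 10 while keeping both YOLOX epoch parameters at 5 produces one problem, because 10 is not greater than 5 + 5.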

Output ports

Output port (from left to right)

Data type

Downstream component

Output model

The OSS path in which the output model is stored. The value is the same as the value that you specified for the oss dir to save model parameter on the Fields Setting tab. The output model in the SavedModel format is stored in this OSS path.

Examples

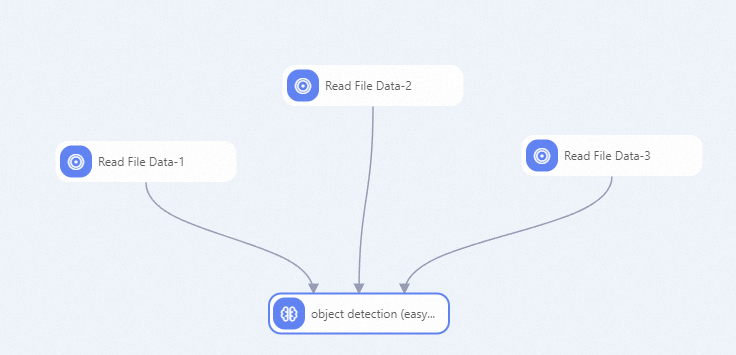

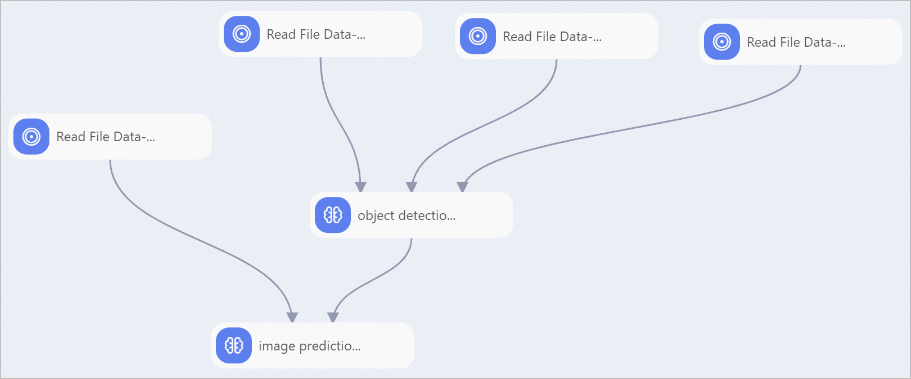

For model types FCOS, YOLOX, YOLOv5 and YOLOv7, the following figure shows a sample pipeline in which the object detection (easycv) component is used.

In this example, the components are configured by performing the following steps:

Label images by using iTAG provided by PAI. For more information, see Process labeling jobs.

Configure the Read File Data-1 component to read the labeling result file xxx.manifest. In this case, you need to set the OSS Data Path parameter of the Read File Data-1 component to the OSS path in which the labeling result file is stored. Example:

oss://examplebucket.oss-cn-shanghai.aliyuncs.com/ev_demo/xxx.manifest.

Import the training data and evaluation data to the object detection (easycv) component and configure the component parameters. For more information, see Configure the component in Machine Learning Designer.

For model types FCOS and YOLOX, you can configure the image prediction-1 component to perform offline inference. For more information, see image prediction.
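
Before you connect an annotation file to the component, it can help to confirm that the file extension matches the selected data format. This topic states that DetSourcePAI expects a .manifest file and COCO expects a .json file. The following Python sketch checks only the extension in the path string and does not access OSS. The function name `annotation_matches_format` is a hypothetical helper introduced for illustration, not a PAI API.

```python
# Extensions stated in the annotation path parameter descriptions of this topic.
EXPECTED_SUFFIX = {"DetSourcePAI": ".manifest", "COCO": ".json"}


def annotation_matches_format(oss_path: str, data_format: str) -> bool:
    """Return True if the annotation path ends with the extension for the data format."""
    expected = EXPECTED_SUFFIX.get(data_format)
    if expected is None:
        raise ValueError(f"unsupported data format: {data_format}")
    # Compare case-insensitively; only the path string is inspected.
    return oss_path.lower().endswith(expected)


# The sample path from the steps above, used with each data format.
sample = "oss://examplebucket.oss-cn-shanghai.aliyuncs.com/ev_demo/xxx.manifest"
print(annotation_matches_format(sample, "DetSourcePAI"))
print(annotation_matches_format(sample, "COCO"))
```

The sample labeling result file passes the check for DetSourcePAI and fails it for COCO, which is consistent with using iTAG labeling results with the DetSourcePAI data format.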

References

After you train an object detection model, you can add a general image prediction component as a downstream component of the object detection (easycv) component to run inference with the trained model. For more information, see image prediction.

For information about Machine Learning Designer components, see Overview of Machine Learning Designer.

Machine Learning Designer provides various preset algorithm components. You can select a component for data processing based on different scenarios. For more information, see Component reference: Overview of all components.