Model Gallery provides a variety of pre-trained models that help you quickly train and deploy models using Platform for AI (PAI). This document describes how to find a model in Model Gallery that suits your business needs, and how to deploy it, run online predictions with it, and fine-tune it.

Prerequisites

Before you fine-tune or incrementally train a model, you must create an Object Storage Service (OSS) bucket. For more information, see Create buckets.

Billing

Model Gallery is free to use. However, you are charged for the Elastic Algorithm Service (EAS) resources used to deploy models and the Deep Learning Containers (DLC) resources used to train them. For more information, see Billing of EAS and Billing of Deep Learning Containers (DLC).

Find a model for your use case

Model Gallery provides a wide range of models to help you solve real-world business problems. Consider the following tips to quickly find the model that best suits your needs:

Find models by domain and task.

Most models are labeled with the dataset they were pre-trained on. The more closely the pre-training dataset matches your use case, the better the model performs, both when deployed out of the box and after fine-tuning. You can find more information about the pre-training dataset on the model details page.

In general, models with more parameters perform better, but they also incur higher runtime costs and require more data for fine-tuning.
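To get a rough sense of how parameter count translates into runtime cost, the following sketch estimates the memory needed just to hold a model's weights at a given numeric precision. It is a back-of-the-envelope estimate under stated assumptions: it counts weights only and ignores the KV cache, activations, and framework overhead at inference time, as well as the gradients and optimizer states that full-parameter fine-tuning adds. The function name and the precision table are illustrative and are not part of PAI.

```python
# Back-of-the-envelope estimate of weight memory. Illustrative only: real
# deployments also need memory for the KV cache, activations, and runtime
# overhead, and training needs gradients and optimizer states on top of that.

# Bytes used to store one parameter at each precision.
BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16/bf16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Return the memory needed to store the weights, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for name, params in (("8B model", 8e9), ("70B model", 70e9)):
        for precision in BYTES_PER_PARAM:
            gb = weight_memory_gb(params, precision)
            print(f"{name} @ {precision}: ~{gb:.0f} GB of weights")
```

For example, the weights of an 8B-parameter model such as Llama-3.1-8B-Instruct take about 16 GB in FP16/BF16, so the GPU memory of the deployment resource specification needs to exceed that with room to spare.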

Follow these steps to find a model:

Go to the Model Gallery page.

Log on to the PAI console.

In the left navigation pane, click the workspace list. On the workspace list page, click the name of the workspace that you want to manage.

In the left navigation pane, click QuickStart > Model Gallery.

Find a model that suits your business.

After you have selected a model, you can deploy it and perform online predictions to verify its inference performance. For more information, see Model deployment and online debugging.

Model deployment and online debugging

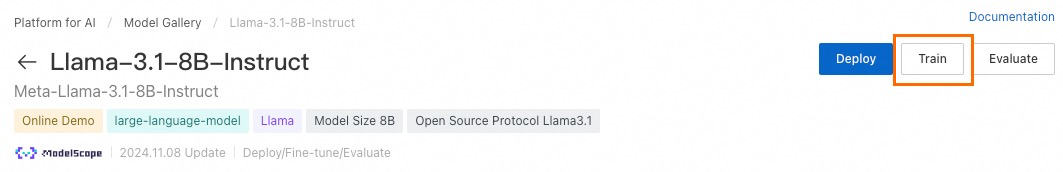

This section uses the Llama-3.1-8B-Instruct model as an example. After you find a suitable model, click the model card to go to the model details page, where you can deploy it and perform online predictions.

Deploy a model service

On the model details page, click Deploy.

(Optional) Configure the model service and resource deployment information.

Model Gallery pre-configures the Basic Information and Resource Information for each model based on its characteristics. You can use the default settings or change the deployment configuration based on your business needs.

Parameter

Description

Service Name

In the Basic Information section, a service name is configured by default. You can change the name as needed. The service name must be unique within a region.

Resource Type

In the Resource Information section, you can select a public resource group, a dedicated resource group, or a resource quota.

Deployment Resources

In the Resource Information section, a resource specification is configured by default. You can use the default configuration or select another resource specification. If you need higher performance, select a specification with more computing power than the default.

At the bottom of the model deployment details page, click Deploy. In the Billing Notification dialog box, click OK.

You are automatically redirected to the Service Details page. On this page, you can view the Basic Information and Resource Information for the service. When the Status changes to Running, the service is successfully deployed.

Debug a model service online

Switch to the Online Debugging tab, enter the request data in the editor under Body, and click Send Request. The inference result of the model service appears in the output area below.

You can construct the request data in the format specified on the model details page. For some models, such as Stable Diffusion V1.5, you can click View Web App in the upper-right corner of the Overview page to launch a web UI, which makes it easier to test the model's inference capabilities.
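If you want to call the service from your own code instead of the Online Debugging tab, the following Python sketch builds a request body and posts it over HTTP using only the standard library. It assumes that the deployed Llama-3.1-8B-Instruct service exposes an OpenAI-compatible chat completions API at the path /v1/chat/completions and accepts the service token in the Authorization header. Whether this holds depends on the deployment method, so confirm the actual path and body format on the model details page. ENDPOINT, TOKEN, the model name in the body, and both function names are hypothetical placeholders.

```python
import json
import urllib.request

# Hypothetical placeholders: copy the real values from the service's
# invocation information in the PAI console.
ENDPOINT = "http://<your-service-endpoint>"
TOKEN = "<your-service-token>"


def build_chat_request(prompt: str, max_tokens: int = 512) -> dict:
    """Build an OpenAI-style chat completions body (assumed request format)."""
    return {
        "model": "Llama-3.1-8B-Instruct",  # assumption: the name the service expects
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": prompt},
        ],
        "max_tokens": max_tokens,
    }


def send_request(endpoint: str, token: str, body: dict, timeout: float = 60.0) -> dict:
    """POST the body to the assumed /v1/chat/completions path and parse the JSON reply."""
    req = urllib.request.Request(
        url=endpoint.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": token},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


if __name__ == "__main__":
    body = build_chat_request("What is the capital of France?")
    print(json.dumps(body, indent=2))  # the same JSON you could paste under Body
    # Uncomment after filling in ENDPOINT and TOKEN:
    # reply = send_request(ENDPOINT, TOKEN, body)
    # print(reply["choices"][0]["message"]["content"])
```

Keeping body construction separate from sending lets you inspect or reuse the exact JSON in the Online Debugging tab before you call the service programmatically.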

If the pre-training dataset does not closely match your business scenario, the model may perform worse in your application than its reported results suggest. If the prediction results are unsatisfactory, fine-tune the model for your specific use case. For more information, see Train a model.

Train a model

You can use your own dataset to fine-tune a pre-trained model from Model Gallery. This section uses the Llama-3.1-8B-Instruct model as an example. Follow these steps:

On the model details page, click Train.

On the fine-tuning page, configure the following parameters.

Note: Supported parameters vary by model. Configure them based on your model's specific requirements.

Parameter Type

Parameter

Description

Training Method

SFT (Supervised Fine-Tuning) or DPO (Direct Preference Optimization)

The supported training methods are:

Supervised fine-tuning (SFT): Fine-tunes the model parameters on paired inputs and outputs.

Direct preference optimization (DPO): Directly optimizes a large language model (LLM) to align with human preferences. DPO shares the same optimization objective as the Reinforcement Learning from Human Feedback (RLHF) algorithm.

Both methods support full-parameter tuning, LoRA, and QLoRA.

Job Configuration

Task name

A task name is configured by default. You can change the name as needed.

Maximum running time

The maximum duration for which the task can run. If the task runs longer than this duration, it is stopped and returns its result.

If you keep the default setting, the runtime of the task is not limited.

Dataset Configuration

Training Dataset

Model Gallery provides a default training dataset. If you do not want to use the default dataset, prepare your training data in the format specified in the model's documentation and upload it in one of the following ways:

OSS file or directory

Click the selection icon and select the OSS path of the dataset. In the Select OSS file dialog box, you can select an existing file or click Upload file to upload a new one.

Custom Dataset

You can use a dataset stored in a cloud storage service such as OSS. Click the selection icon and select an existing dataset. If no dataset is available, you can create one. For more information, see Create and manage datasets.

Validation Dataset

Click Add validation dataset. The validation dataset is configured in the same way as the training dataset.

Output Configuration

Select a cloud storage path to save the trained model and TensorBoard log files.

Note: If you have configured a default OSS storage path for your workspace on the workspace details page, this path is automatically populated and requires no manual entry. For more information about how to configure a workspace storage path, see Manage workspaces.

Computing Resources

Resource Type

Only general computing resources are supported.

Source

Public Resources:

Billing method: Pay-as-you-go.

Use case: Public resources may involve queuing delays, so they are recommended for non-urgent tasks or tasks with low timeliness requirements.

Resource Quota: Includes general computing resources or Lingjun intelligent computing resources.

Billing method: Subscription.

Use case: Suitable for large numbers of tasks that require high reliability.

Preemptible Resources:

Billing method: Pay-as-you-go.

Use case: Preemptible (spot) instances are typically offered at a significant discount, which helps you reduce resource costs.

Limitations: Availability is not guaranteed. You may not be able to acquire an instance immediately, and an acquired instance may be reclaimed. For more information, see Use preemptible jobs.

Hyperparameter Configuration

Supported hyperparameters vary by model. You can use the default values or modify them based on your business needs.

Click Train.

You are automatically redirected to the Task details page, where you can view the basic information, real-time status, task logs, and monitoring information for the training task.

Note: The trained model is automatically registered in AI Assets > Model Management. You can then view or deploy the model. For more information, see Register and manage models.
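If you use your own training data instead of the default dataset, checking the records locally before you upload them to OSS can catch formatting mistakes early. The following sketch validates records and writes them as a JSON array. It assumes that SFT records use instruction and output fields and that DPO records use prompt, chosen, and rejected fields. This is a common convention for instruction and preference data but is not guaranteed for every model, so check the data format on the model details page and adjust SFT_FIELDS and DPO_FIELDS accordingly. The function names and file names are hypothetical.

```python
import json
from pathlib import Path

# Assumed field names; confirm them against the format on the model details page.
SFT_FIELDS = ("instruction", "output")
DPO_FIELDS = ("prompt", "chosen", "rejected")


def validate_records(records: list, required: tuple) -> list:
    """Return a list of problems; an empty list means every record has the required fields."""
    problems = []
    for i, rec in enumerate(records):
        if not isinstance(rec, dict):
            problems.append(f"record {i}: expected an object, got {type(rec).__name__}")
            continue
        for field in required:
            value = rec.get(field)
            # Each required field must be a non-blank string.
            if not isinstance(value, str) or not value.strip():
                problems.append(f"record {i}: missing or empty '{field}'")
    return problems


def write_dataset(records: list, required: tuple, path: Path) -> None:
    """Validate the records, then write them to path as a single JSON array."""
    problems = validate_records(records, required)
    if problems:
        raise ValueError("; ".join(problems))
    path.write_text(json.dumps(records, ensure_ascii=False, indent=2), encoding="utf-8")


if __name__ == "__main__":
    sft = [{"instruction": "Translate 'hello' into French.", "output": "Bonjour."}]
    dpo = [{"prompt": "Greet the user.", "chosen": "Hello! How can I help?", "rejected": "What do you want?"}]
    print(validate_records(sft, SFT_FIELDS))  # []
    print(validate_records(dpo, DPO_FIELDS))  # []
    # To save a file for upload to OSS, call for example:
    # write_dataset(sft, SFT_FIELDS, Path("train.json"))
```

After the file passes validation, upload it to OSS and select it as the Training Dataset. The same check applies to a validation dataset.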