You can create custom algorithm components based on your business requirements in Platform for AI (PAI). You can use custom components together with built-in components in Machine Learning Designer to build pipelines in a flexible manner. This topic describes how to create a custom component.

Background information

Custom components use open source KubeDL of Alibaba Cloud, which is a Kubernetes-based AI framework for managing workloads.

When you create a custom component, you can select the type of jobs in which you want to use the component, such as TensorFlow, PyTorch, XGBoost, or ElasticBatch. You can also create input and output pipelines, and configure hyperparameters. After you create the custom component, you can configure the component in Machine Learning Designer. For more information, see Procedure.

KubeDL assigns environment variables based on job types. You can use the environment variables to obtain the number of instances and topology information. For more information, see Appendix 1: Job types.

For information about how to obtain data of input and output pipelines and hyperparameters by configuring environment variables, see Obtain pipeline hyperparameter data.

You can use environment variables in the code to obtain the input and output pipeline data. You can also use the mount path in the container to access the data. For more information, see Input and output directory structure.

Prerequisites

A workspace is created. The custom components that you created are associated with the workspace. For more information, see Create and manage a workspace.

Procedure

Go to the Custom Components page.

Log on to the PAI console.

In the left-side navigation pane, click Workspaces. On the Workspaces page, click the name of the workspace in which you want to create custom components.

In the left-side navigation pane, choose AI Asset Management > Custom Components.

On the Custom Components page, click Create Component. On the Create Component page, configure the parameters that are described in the following table.

Basic Information

Parameter

Description

Component Name

The name of the custom component. The name must be unique in the Alibaba Cloud account in the same region.

Component Description

The description of the custom component.

Component Version

The version number of the custom component.

NoteWe recommend that you manage component versions in the

x.y.zformat. For example, if the first major version is 1.0.0, you can upgrade the version to 1.0.1 when you fix minor issues, and to 1.1.0 when you upgrade minor features. This helps you manage component versions in a simple and effective manner.Version Description

The description of the current version of the custom component. Example: the initial version.

Execution Configuration

Parameter

Description

Job Type

The type of jobs in which you want to use the custom component. Valid values: Tensorflow for TFJob, PyTorch for PyTorchJob, XGBoost for XGBoostJob, and ElasticBatch for ElasticBatchJob of KubeDL. For more information about job types, see Appendix: Job types.

Image

The image that you want to use. Valid values: Community Image, Alibaba Cloud Image, and Custom Image. You can select an image from the drop-down list, or select Image Address and specify an image address.

NoteTo ensure the stability of the job, we recommend that you use Alibaba Cloud Container Registry (ACR) in the same region.

You can use only Container Registry Personal Edition. Container Registry Enterprise Edition is not supported. Specify the image address in the

registry-vpc.${region}.aliyuncs.comformat.If you use a custom image, we recommend that you do not frequently update the image in the same version of the custom component. If you frequently update the image, the image cache may not be updated at the earliest opportunity, resulting in a delay of job startups.

To ensure that the image runs as expected, the image must contain

sh shellcommands. The image uses thesh -cmethod to run commands.If you use a custom image, make sure that the image contains the required environment and pip command for Python. Otherwise, the job may fail.

Code

Valid values:

Mount OSS Path: When you run the component, all files in the Object Storage Service (OSS) path are downloaded to the

/ml/usercode/path. You can run commands to execute the files in the path.NoteTo prevent potential delays or timeouts during component startup, we recommend that you store only the required algorithm files in this path.

If the requirements.txt file exists in the code directory, the algorithm automatically executes the

pip install -r requirements.txtcommand to install related dependencies.

Code Configuration: Configure the Git code repository.

Command

The command that the image executes. Specify the command in the following format:

python main.py $PAI_USER_ARGS --{CHANNEL_NAME} $PAI_INPUT_{CHANNEL_NAME} --{CHANNEL_NAME} $PAI_OUTPUT_{CHANNEL_NAME} && sleep 150 && echo "job finished"To obtain information about hyperparameters and input and output pipelines, you can configure the following environment variables: PAI_USER_ARGS, PAI_INPUT_{CHANNEL_NAME}, and PAI_OUTPUT_{CHANNEL_NAME}. For more information about how to obtain data, see Obtain pipeline and hyperparameter data.

For example, the names of the input pipelines are test and train, and the names of the output pipelines are model and checkpoints. Sample command:

python main.py $PAI_USER_ARGS --train $PAI_INPUT_TRAIN --test $PAI_INPUT_TEST --model $PAI_OUTPUT_MODEL --checkpoints $PAI_OUTPUT_CHECKPOINTS && sleep 150 && echo "job finished"The main.py file provides an example of the logic for parsing arguments. You can modify the file based on your business requirements. Sample file content:

import os import argparse import json def parse_args(): """Parse the arguments.""" parser = argparse.ArgumentParser(description="PythonV2 component script example.") # input & output channels parser.add_argument("--train", type=str, default=None, help="input channel train.") parser.add_argument("--test", type=str, default=None, help="input channel test.") parser.add_argument("--model", type=str, default=None, help="output channel model.") parser.add_argument("--checkpoints", type=str, default=None, help="output channel checkpoints.") # parameters parser.add_argument("--param1", type=int, default=None, help="param1") parser.add_argument("--param2", type=float, default=None, help="param2") parser.add_argument("--param3", type=str, default=None, help="param3") parser.add_argument("--param4", type=bool, default=None, help="param4") parser.add_argument("--param5", type=int, default=None, help="param5") args, _ = parser.parse_known_args() return args if __name__ == "__main__": args = parse_args() print("Input channel train={}".format(args.train)) print("Input channel test={}".format(args.test)) print("Output channel model={}".format(args.model)) print("Output channel checkpoints={}".format(args.checkpoints)) print("Parameters param1={}".format(args.param1)) print("Parameters param2={}".format(args.param2)) print("Parameters param3={}".format(args.param3)) print("Parameters param4={}".format(args.param4)) print("Parameters param5={}".format(args.param5))You can obtain information about input and output pipelines and parameters in the logs of the command execution. Sample logs:

Input channel train=/ml/input/data/train Input channel test=/ml/input/data/test/easyrec_config.config Output channel model=/ml/output/model/ Output channel checkpoints=/ml/output/checkpoints/ Parameters param1=6 Parameters param2=0.3 Parameters param3=test1 Parameters param4=True Parameters param5=2 job finishedPipeline and Parameter

Click the

icon to configure the input and output pipelines and parameters of the custom component. Specify the names of pipelines and parameters in the following format:

icon to configure the input and output pipelines and parameters of the custom component. Specify the names of pipelines and parameters in the following format: The names must be globally unique.

The name can contain digits, letters, underscores (_), and hyphens (-), but cannot start with an underscore (_).

NoteIf the name contains characters that are not supported, the characters are replaced with underscores (_) when the system generates environment variables. Take note that hyphens (-) are also replaced with underscores (_). Lowercase letters in the name are automatically converted into uppercase letters. For example, if you specify the parameter names as test_model and test-model, the names are converted into PAI_HPS_TEST_MODEL, which may cause conflicts.

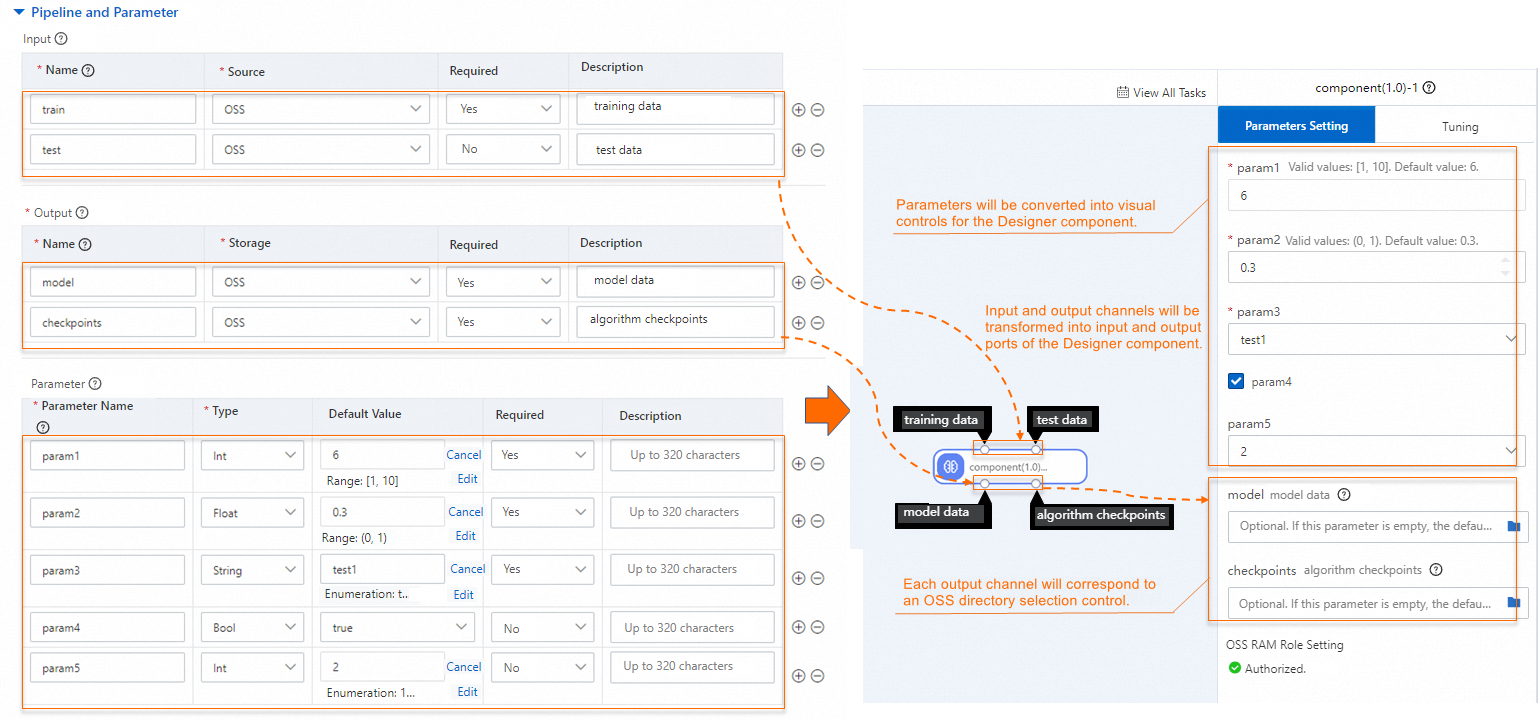

The following figure shows the mapping between the pipeline and parameter configurations and the component parameters in Machine Learning Designer.

The following table describes the parameters.

Parameter

Description

Input

The source from which the custom component obtains input data or the model. Configure the following parameters:

Name: Specify the name of the input pipeline.

Source: Specify a path in OSS, File Storage NAS (NAS), or MaxCompute in which the input pipeline obtains data. The input data is mounted to the

/ml/input/data/{channel_name}/directory of the training container. The component can read data from OSS, NAS, or MaxCompute by reading on-premises files.

Output

The output pipeline is used to save the results such as trained models and checkpoints. Configure the following parameters:

Name: the name of the output pipeline.

Storage: Specify an OSS or MaxCompute directory for each output pipeline. The specified directory is mounted to the

/ml/output/{channel_name}/directory of the training container.

Parameter

The information about hyperparameters. Configure the following parameters:

Parameter Name: the name of the parameter.

Type: the type of the parameter. Valid values: Int, Float, String, and Bool.

Constraint: After selecting a type other than Bool (Int, Float, or String), in the Default Value column, click Constraint to configure parameter constraints. Valid values for Constraint Type:

Range: Specify the maximum and minimum values to specify the value range.

Enumeration: Configure enumerated values for the parameter.

Constraints

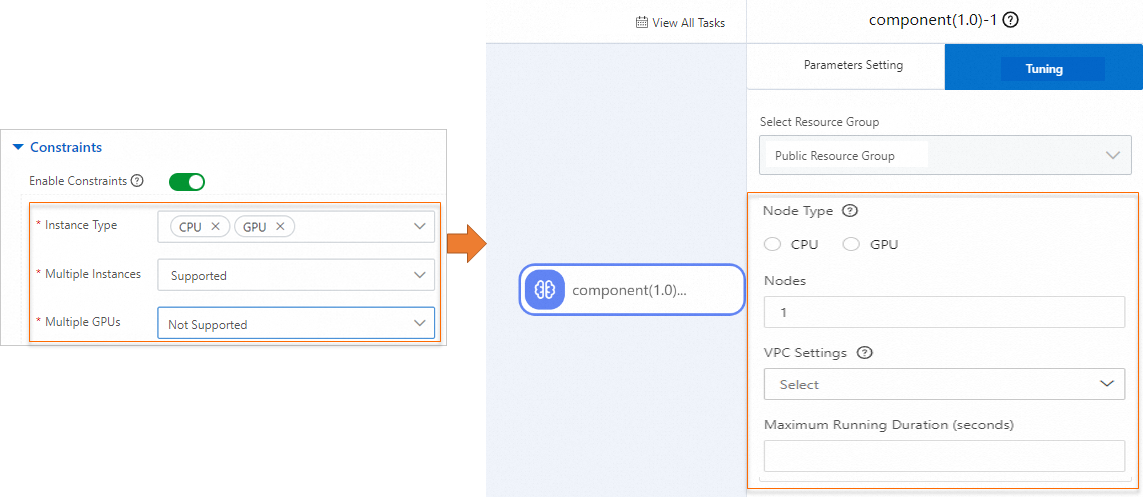

The training constraint that is used to specify the computing resources required by a training job. Turn on Enable Constraints to configure a training constraint.

The following figure shows the mapping between the training constraints and the tuning parameters of the component in Machine Learning Designer.

The following table describes the parameters.

Parameter

Description

Instance Type

Valid values: CPU and GPU.

Multiple Instances

Specifies whether the component supports distributed training on multiple instances. Valid values:

Supported: You can configure the number of instances when the component runs.

Not Supported: The number of instances can only be 1 when the component runs and cannot be changed.

Multiple GPUs

This parameter is available only if you set the Instance Type parameter to GPU.

Specify whether the custom component supports multiple GPUs:

If you set this parameter to Supported, you can select single-GPU or multi-GPU instance types to run the component.

If you set this parameter to Not Supported, you can select only single-GPU instance types to run the component.

Click Submit.

The custom component that you created is displayed on the Custom Components page.

After you create a custom component, you can use the component in Machine Learning Designer. For more information, see Use a custom component.

Appendix 1: Job types

TensorFlow (TFJob)

If you set the Job Type parameter to TensorFlow, the system injects the topology information of the instance on which the job is run by using the TF_CONFIG environment variable. The following example shows the format of the environment variable:

{

"cluster": {

"chief": [

"dlc17****iui3e94-chief-0.t104140334615****.svc:2222"

],

"evaluator": [

"dlc17****iui3e94-evaluator-0.t104140334615****.svc:2222"

],

"ps": [

"dlc17****iui3e94-ps-0.t104140334615****.svc:2222"

],

"worker": [

"dlc17****iui3e94-worker-0.t104140334615****.svc:2222",

"dlc17****iui3e94-worker-1.t104140334615****.svc:2222",

"dlc17****iui3e94-worker-2.t104140334615****.svc:2222",

"dlc17****iui3e94-worker-3.t104140334615****.svc:2222"

]

},

"task": {

"type": "chief",

"index": 0

}

}The following table describes the parameters in the preceding code.

Parameter | Description |

cluster | The description of the TensorFlow cluster.

|

task |

|

PyTorch (PyTorchJob)

If you set the Job Type parameter to PyTorch, the system injects the following environment variables:

RANK: the role of the instance. A value of 0 specifies that the instance is a master node. A value other than 0 specifies that the instance is a worker node.

WORLD_SIZE: the number of instances in the job.

MASTER_ADDR: the address of the master node.

MASTER_PORT: the port of the master node.

XGBoost (XGBoostJob)

If you set the Job Type parameter to XGBoost, the system injects the following environment variables:

RANK: the role of the instance. A value of 0 specifies that the instance is a master node. A value other than 0 specifies that the instance is a worker node.

WORLD_SIZE: the number of instances in the job.

MASTER_ADDR: the address of the master node.

MASTER_PORT: the port of the master node.

WORKER_ADDRS: the addresses of worker nodes, sorted by RANK.

WORKER_PORT: the port of the worker node.

Examples:

Distributed jobs (multiple instances)

WORLD_SIZE=6 WORKER_ADDRS=train1pt84cj****-worker-0,train1pt84cj****-worker-1,train1pt84cj****-worker-2,train1pt84cj****-worker-3,train1pt84cj****-worker-4 MASTER_PORT=9999 MASTER_ADDR=train1pt84cj****-master-0 RANK=0 WORKER_PORT=9999Single-instance jobs

NoteIf a job has only one instance, the instance is a master node, and the WORKER_ADDRS and WORKER_PORT environment variables are unavailable.

WORLD_SIZE=1 MASTER_PORT=9999 MASTER_ADDR=train1pt84cj****-master-0 RANK=0

ElasticBatch (ElasticBatchJob)

ElasticBatch is a type of distributed offline inference job. ElasticBatch jobs have the following benefits:

Provides high parallelism that supports double throughput.

Reduces waiting time for jobs. Worker nodes can run immediately after the nodes are allocated resources.

Supports monitoring of instance startups and automatically replaces instances that undergo delayed startups with backup worker nodes. This prevents long tails or job hangs.

Supports global dynamic distribution of data shards, which uses resources in a more efficient manner.

Supports early stop of jobs. After all data is processed, the system does not start worker nodes that are not started to avoid increasing the job uptime.

Supports fault tolerance. If a single worker node fails, the system automatically restarts the instance.

An ElasticBatch job consists of the following types of nodes: AIMaster and Worker.

An AIMaster node is used for global control of jobs, including dynamic distribution of data shards, monitoring of data throughput performance of each worker node, and fault tolerance.

A worker node obtains a shard from AIMaster, and reads, processes, and then writes back data. The dynamic distribution of data shards allows efficient instances to process more data than slow instances.

After you start an ElasticBatch job, the AIMaster node and worker node are started. The code is run on the worker node. The system injects the ELASTICBATCH_CONFIG environment variable into worker nodes. The following example shows the format of environment variable values:

{

"task": {

"type": "worker",

"index": 0

},

"environment": "cloud"

}Take note of the following parameters:

task.type: the job type of the current instance.

task.index: the index of the instance in the list of network addresses of the role.

Appendix 2: Principles of custom components

Obtain pipeline and hyperparameter data

Obtain input pipeline data

The data of input pipelines is injected into the job container by using the PAI_INPUT_{CHANNEL_NAME} environment variable.

For example, if a custom component has two input pipelines named train and test, and the values are oss://<YourOssBucket>.<OssEndpoint>/path-to-data/ and oss://<YourOssBucket>.<OssEndpoint>/path-to-data/test.csv, the following environment variables are injected:

PAI_INPUT_TRAIN=/ml/input/data/train/

PAI_INPUT_TEST=/ml/input/data/test/test.csvObtain output pipeline data

The system obtains the data by using the PAI_OUTPUT_{CHANNEL_NAME} the environment variable.

For example, if a custom component has two output pipelines named model and checkpoints, the following environment variables are injected:

PAI_OUTPUT_MODEL=/ml/output/model/

PAI_OUTPUT_CHECKPOINTS=/ml/output/checkpoints/Obtain hyperparameter data

The system obtains the hyperparameter data by using the following environment variables.

PAI_USER_ARGS

When a component runs, all hyperparameter information of the job is injected into the job container by using the PAI_USER_ARGS environment variable in the

--{hyperparameter_name} {hyperparameter_value}format.For example, if you specify the following hyperparameters for the job:

{"epochs": 10, "batch-size": 32, "learning-rate": 0.001}, the following section shows the value of the PAI_USER_ARGS environment variable:PAI_USER_ARGS="--epochs 10 --batch-size 32 --learning-rate 0.001"PAI_HPS_{HYPERPARAMETER_NAME}

The value of a single hyperparameter is injected into the job container by using the PAI_HPS_{HYPERPARAMETER_NAME} environment variable. In a hyperparameter name, characters that are not supported are replaced with underscores (_).

For example, if you specify the following hyperparameters for the job:

{"epochs": 10, "batch-size": 32, "train.learning_rate": 0.001}, then the value of the environment variable is:PAI_HPS_EPOCHS=10 PAI_HPS_BATCH_SIZE=32 PAI_HPS_TRAIN_LEARNING_RATE=0.001PAI_HPS

The hyperparameter information of the training job is injected into the container by using the PAI_HPS environment variable in JSON format.

For example, if you specify the following hyperparameters for the job:

{"epochs": 10, "batch-size": 32}, the following section shows the value of the PAI_HPS environment variable:PAI_HPS={"epochs": 10, "batch-size": 32}

Input and output directory structure

You can use environment variables in the code to obtain the input and output pipeline information. You can also use the mount path in the container to access the data. When a job submitted by a component runs in a container, paths are created based on the following rules:

Code path:

/ml/usercode/.Configuration file of hyperparameters:

/ml/input/config/hyperparameters.json.Configuration file of the training job:

/ml/input/config/training_job.json.Directory of input pipelines:

/ml/input/data/{channel_name}/.Directory of output pipelines:

/ml/output/{channel_name}/.

The following code provides an example of the input and output directory structure of a job submitted by a custom component:

/ml

|-- usercode # The user code is loaded into the /ml/usercode directory, which is also the working directory of the user code. You can obtain the value by using the PAI_WORKING_DIR environment variable.

| |-- requirements.txt

| |-- main.py

|-- input # The input data and configuration information of the job.

| |-- config # The config directory contains the configuration information of the job. You can obtain the configuration information by using the PAI_CONFIG_DIR environment variable.

| |-- training_job.json # The configuration of the job.

| |-- hyperparameters.json # The hyperparameters of the job.

| |-- data # The input pipelines of the job. In this example, the directory contains the pipelines named train_data and test_data.

| |-- test_data

| | |-- test.csv

| |-- train_data

| |-- train.csv

|-- output # The output pipelines of the job: In this example, the directory contains the pipelines named the model and checkpoints.

|-- model # You can use the PAI_OUTPUT_{OUTPUT_CHANNEL_NAME} environment variable to obtain the output path.

|-- checkpointsHow do I determine whether the instance is a GPU-accelerated instance and the number of GPUs it contains?

After you start a job, you can use the NVIDIA_VISIBLE_DEVICES environment variable to check the number of GPUs. For example, NVIDIA_VISIBLE_DEVICES=0,1,2,3 indicates that the instance has four GPUs.