The large language model (LLM) data processing algorithms allow you to edit and transform data samples, filter low-quality samples, and remove duplicates. You can combine different algorithms to filter data and generate text that meets your requirements. This provides high-quality data for subsequent LLM training. This topic uses a small amount of data from the open source Alpaca-CoT project as an example. It shows how to use the LLM data processing components in PAI to clean and process supervised fine-tuning (SFT) data.

Dataset description

The LLM Data Processing-Alpaca-Cot (SFT Data) preset template in Machine Learning Designer uses a dataset of 5,000 samples. These samples are extracted from the raw data of the open source Alpaca-CoT project.

Create and run a pipeline

Go to the Machine Learning Designer page.

Log in to the PAI console.

In the upper-left corner, select a region.

In the navigation pane on the left, click Workspaces, and then click the name of the target workspace.

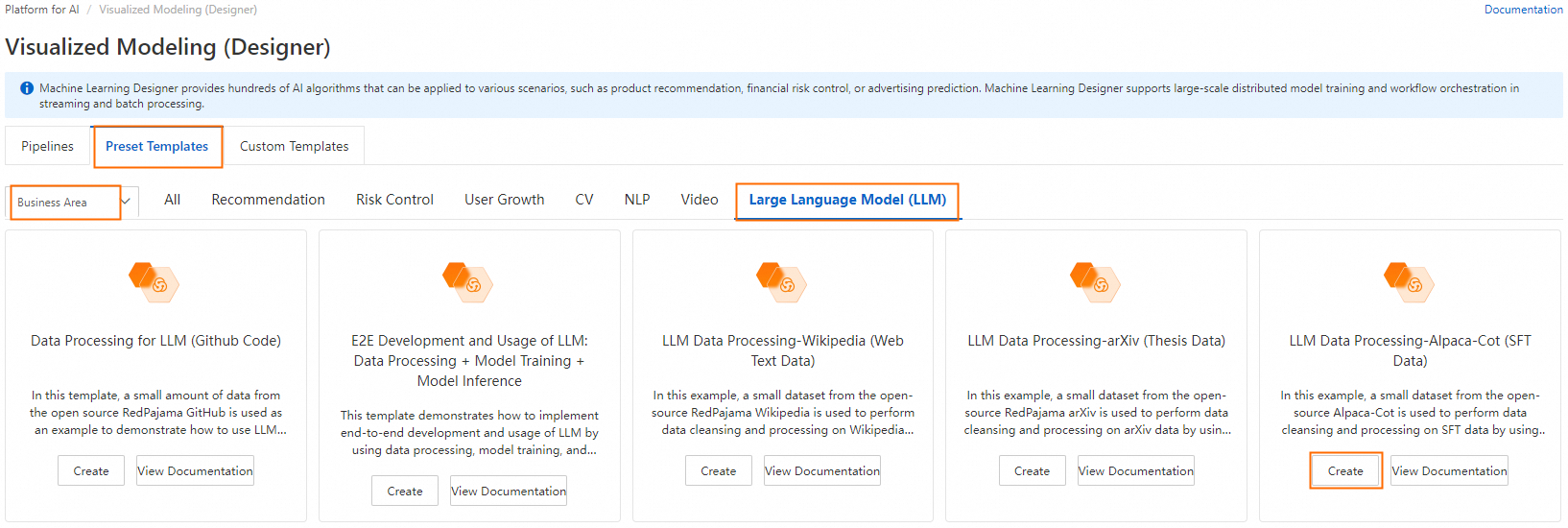

In the navigation pane on the left, choose Model Training > Visualized Modeling (Designer).

Create a pipeline.

On the Preset Templates tab, select Business Area > LLM, and then click Create on the LLM Data Processing-Alpaca-Cot (SFT Data) template card.

Configure the pipeline parameters or keep the default settings, and then click OK.

In the pipeline list, select the pipeline that you created and click Enter Pipeline.

Pipeline description:

The following table describes the key algorithm components in the pipeline:

LLM-MD5 Deduplicator (MaxCompute)-1

Calculates the hash value of the text in the "text" field and removes duplicate text. Only one instance of text with the same hash value is retained.
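The following is a minimal local sketch of the idea behind hash-based deduplication, not the component's actual implementation. It assumes samples are Python dictionaries with a "text" key, and it assumes the first occurrence is the one retained. The function name `md5_dedup` is hypothetical.

```python
import hashlib


def md5_dedup(samples, field="text"):
    """Keep one sample per distinct MD5 hash of `field`.

    Assumption: the first occurrence is kept; the source only states
    that a single instance per hash value is retained.
    """
    seen = set()
    kept = []
    for sample in samples:
        digest = hashlib.md5(sample[field].encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            kept.append(sample)
    return kept


samples = [
    {"text": "Translate 'hello' into French."},
    {"text": "Translate 'hello' into French."},
    {"text": "Summarize the following paragraph."},
]
deduped = md5_dedup(samples)
```

Because the hash is computed on the exact text, samples that differ by even one character are treated as distinct. Near-duplicates are handled later by the MinHash step.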

LLM-Count Filter (MaxCompute)-1

Removes samples whose "text" field does not meet the required number or ratio of alphanumeric characters. Because most characters in an SFT dataset are letters and digits, this component helps remove some dirty data.
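A count-and-ratio check of this kind can be sketched as follows. This is an illustration only: the thresholds `min_count` and `min_ratio` are hypothetical values, not the component's defaults, and treating only ASCII letters and digits as alphanumeric is an assumption.

```python
def alnum_stats(text):
    """Return (count, ratio) of ASCII letters and digits in `text`."""
    count = sum(1 for ch in text if ch.isascii() and ch.isalnum())
    ratio = count / len(text) if text else 0.0
    return count, ratio


def passes_count_filter(text, min_count=5, min_ratio=0.6):
    """Hypothetical thresholds; tune them for your own data."""
    count, ratio = alnum_stats(text)
    return count >= min_count and ratio >= min_ratio


clean = "Explain gradient descent in 2 steps."
dirty = "#### ---- ****"
results = [passes_count_filter(clean), passes_count_filter(dirty)]
```

A sample made up mostly of symbols or markup residue has a low alphanumeric ratio and is dropped, while ordinary instruction text passes.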

LLM-N-Gram Repetition Filter (MaxCompute)-1

Filters samples based on the character-level N-gram repetition rate of the "text" field. The component uses a sliding window of size N to create a sequence of N-character segments from the text. Each segment is called a gram. The component counts the occurrences of all grams. Finally, it calculates the ratio (total frequency of grams that appear more than once) / (total frequency of all grams) and uses this ratio to filter samples.

LLM-Sensitive Word Filter (MaxCompute)-1

Uses the system's preset sensitive word file to filter out samples whose "text" field contains sensitive words.
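As a worked illustration of the repetition ratio used by the LLM-N-Gram Repetition Filter described earlier, the sketch below computes the ratio for a single string. It follows the description in this topic (sliding window of N characters, count every gram, divide the total frequency of grams that occur more than once by the total frequency of all grams). The function name and the choice to return 0.0 for text shorter than N are assumptions.

```python
from collections import Counter


def char_ngram_repetition_ratio(text, n):
    """Ratio of repeated character n-gram occurrences to all occurrences."""
    # Slide a window of n characters across the text.
    grams = [text[i:i + n] for i in range(len(text) - n + 1)]
    if not grams:
        return 0.0  # assumption: text shorter than n has no repetition
    counts = Counter(grams)
    # Sum the frequencies of grams that appear more than once.
    repeated = sum(c for c in counts.values() if c > 1)
    return repeated / len(grams)


ratio_repetitive = char_ngram_repetition_ratio("abababab", 2)
ratio_varied = char_ngram_repetition_ratio("abcdef", 2)
ratio_mixed = char_ngram_repetition_ratio("aaab", 2)
```

For "aaab" with N = 2, the grams are "aa", "aa", and "ab". Only "aa" repeats, with frequency 2, so the ratio is 2/3. A sample would then be kept or dropped by comparing this ratio with a configured threshold.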

LLM-Length Filter (MaxCompute)-1

Filters samples based on the length of the "text" field and the maximum line length. The maximum line length is determined by splitting the sample by the line feed character (\n).

LLM-MinHash Deduplicator (MaxCompute)-1

Removes similar samples based on the MinHash algorithm.
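To give a rough sense of how MinHash-based near-duplicate removal works, the sketch below builds a MinHash signature from character shingles and drops any sample whose estimated Jaccard similarity to an already kept sample reaches a threshold. Everything here is an assumption made for illustration: the shingle size `k`, the number of hash functions `num_perm`, the SHA-1 based hashing, the `threshold` value, and the pairwise comparison loop. The PAI component may differ in all of these, and large-scale implementations typically use locality-sensitive hashing instead of comparing every pair.

```python
import hashlib


def shingles(text, k=5):
    """Set of character k-grams (assumed shingling scheme)."""
    return {text[i:i + k] for i in range(max(len(text) - k + 1, 1))}


def minhash_signature(text, num_perm=64, k=5):
    """For each seeded hash function, keep the minimum hash over all shingles."""
    grams = shingles(text, k)
    signature = []
    for seed in range(num_perm):
        signature.append(min(
            int.from_bytes(
                hashlib.sha1(f"{seed}:{g}".encode("utf-8")).digest()[:8], "big"
            )
            for g in grams
        ))
    return signature


def estimated_jaccard(sig_a, sig_b):
    """Fraction of signature positions that agree."""
    matches = sum(1 for a, b in zip(sig_a, sig_b) if a == b)
    return matches / len(sig_a)


def minhash_dedup(texts, threshold=0.8):
    """Greedy O(n^2) pass: keep a text only if it is not too similar to a kept one."""
    kept, kept_sigs = [], []
    for text in texts:
        sig = minhash_signature(text)
        if all(estimated_jaccard(sig, s) < threshold for s in kept_sigs):
            kept.append(text)
            kept_sigs.append(sig)
    return kept


texts = [
    "Give three tips for staying healthy.",
    "Give three tips for staying healthy.",
    "Describe the structure of an atom.",
]
unique_texts = minhash_dedup(texts)
```

Unlike MD5 deduplication, this approach can also catch samples that differ by a few characters, because their shingle sets overlap heavily and most signature positions agree.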

Run the pipeline.

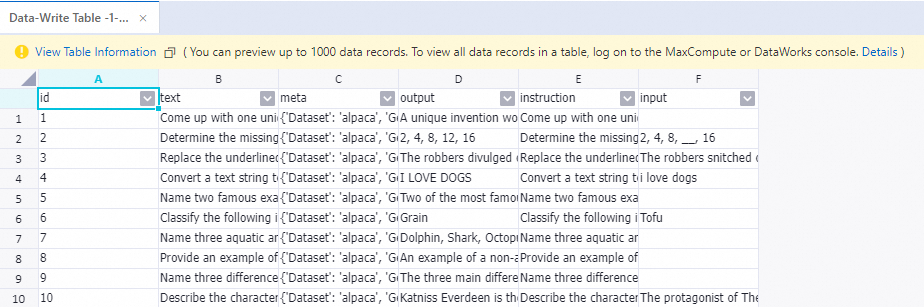

After the run is complete, right-click the Write Table-1 component and choose View Data > Outputs to view the processed samples.

References

For more information about the LLM algorithm components, see LLM data processing (MaxCompute).