NimoShake (also known as DynamoShake) is a data synchronization tool developed by Alibaba Cloud. It allows you to migrate Amazon DynamoDB databases to Alibaba Cloud.

Prerequisites

An ApsaraDB for MongoDB instance is created. For more information, see Create a replica set instance or Create a sharded cluster instance.

Background information

NimoShake is primarily designed for migrations from an Amazon DynamoDB database. The destination must be an ApsaraDB for MongoDB database. For more information, see NimoShake overview.

Important considerations

Resource consumption. A full data migration consumes resources on both the source and destination databases, which may increase the load on your database servers. If your database handles heavy traffic or the server specifications are too low, the migration may overload the database. We strongly recommend that you evaluate the performance impact before you migrate data and perform the migration during off-peak hours.

Storage capacity. Make sure that the storage space of the ApsaraDB for MongoDB instance is larger than that of the Amazon DynamoDB database.

Key terms

Resumable transmission: This feature divides a task into multiple parts for transmission. If transmission is interrupted due to network failures or other reasons, the task can resume from where it left off, rather than restarting from the beginning.

Note: Full migration does not support resumable transmission.

Incremental migration does support resumable transmission. If an incremental synchronization connection is lost and recovered within a short timeframe, the synchronization can continue. However, in certain situations, such as prolonged disconnection or loss of the previous checkpoint, a full synchronization may be triggered again.

Checkpoint: Resumable transmission for incremental synchronization is achieved through checkpoints. By default, checkpoints are written to the destination MongoDB database, specifically to a database named nimo-shake-checkpoint. Each collection records its own checkpoint table, and a status_table records whether the current synchronization is a full or incremental task.
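The resume behavior described above can be illustrated with a minimal Python sketch. It is not NimoShake's implementation: the CheckpointStore class, the sync_incremental function, and the integer positions are hypothetical stand-ins for the checkpoint tables and stream positions. The sketch only shows how a saved position lets a task skip changes that were already applied before an interruption.

```python
from typing import Callable, Dict, Iterable, Optional, Tuple


class CheckpointStore:
    """In-memory stand-in (hypothetical) for the per-collection checkpoint tables."""

    def __init__(self) -> None:
        self._positions: Dict[str, int] = {}

    def load(self, collection: str) -> Optional[int]:
        # None means that no checkpoint exists for this collection.
        return self._positions.get(collection)

    def save(self, collection: str, position: int) -> None:
        self._positions[collection] = position


def sync_incremental(
    collection: str,
    changes: Iterable[Tuple[int, str]],
    store: CheckpointStore,
    apply: Callable[[str], None],
    fail_at: Optional[int] = None,
) -> int:
    """Apply every change after the saved checkpoint and return how many were applied.

    `fail_at` simulates a dropped connection at the given position.
    """
    last = store.load(collection)
    applied = 0
    for position, record in changes:
        if last is not None and position <= last:
            continue  # already applied before the interruption
        if fail_at is not None and position == fail_at:
            raise ConnectionError(f"connection lost at position {position}")
        apply(record)
        store.save(collection, position)  # checkpoint only after a successful write
        applied += 1
    return applied


# Usage: the first run is interrupted, the second run resumes from the checkpoint.
stream = [(0, "a"), (1, "b"), (2, "c"), (3, "d")]
store = CheckpointStore()
destination: list = []
try:
    sync_incremental("orders", stream, store, destination.append, fail_at=2)
except ConnectionError:
    pass
resumed = sync_incremental("orders", stream, store, destination.append)
```

In this sketch the checkpoint is saved only after a change has been applied, so an interruption can never skip a change. If the store had lost its entry for the collection, load would return None and every change would be applied again, which loosely mirrors the fallback to a full synchronization.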

NimoShake features

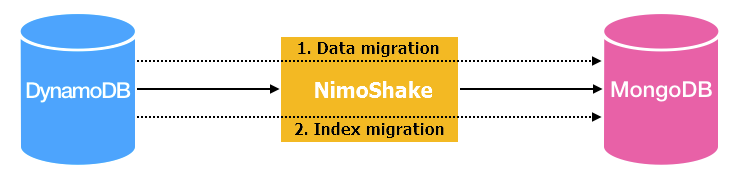

NimoShake currently supports a separated synchronization mechanism, carried out in two steps:

Full data migration.

Incremental data migration.

Full migration

Full migration consists of two parts: data migration and index migration. The following figure shows the basic architecture:

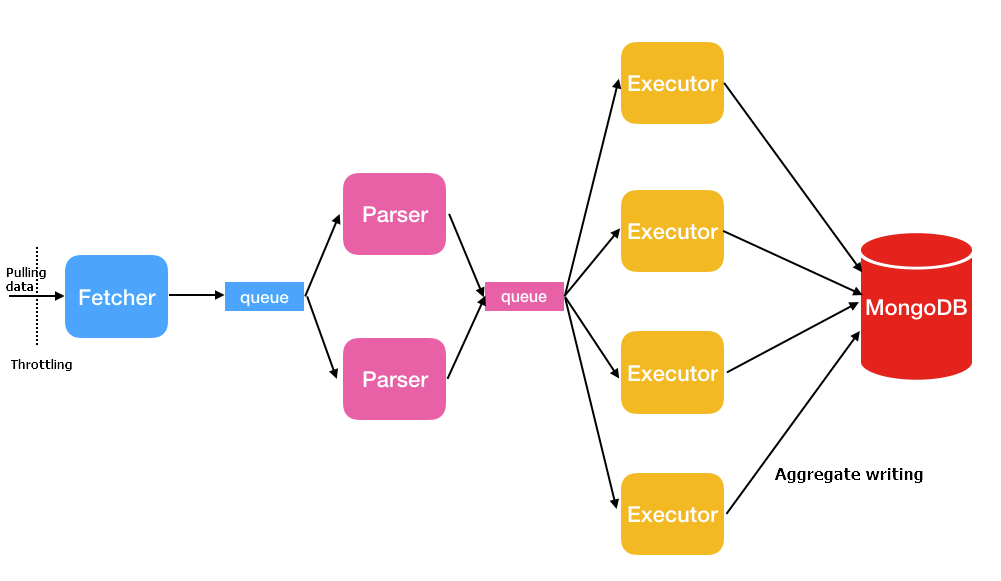

Data migration: NimoShake uses multiple concurrent threads to pull source data, as described in the following table:

| Thread | Description |
| --- | --- |
| Fetcher | Calls the protocol conversion driver provided by Amazon to retrieve data from the source table in batches and place it in queues until all source data is pulled. Note: Only one fetcher thread is provided. |
| Parser | Reads data from the queues and parses it into a BSON structure. After the data is parsed, the parser passes it to the executor threads. Multiple parser threads can be started. The default value is 2. Adjust the number of parser threads by using the FullDocumentParser parameter. |
| Executor | Pulls data from the queues, then aggregates and writes the data to the destination ApsaraDB for MongoDB database. Up to 16 MB of data or 1,024 entries can be aggregated. Multiple executor threads can be started. The default value is 4. Adjust the number of executor threads by using the FullDocumentConcurrency parameter. |

Index migration: NimoShake creates indexes after data migration is complete. Indexes are categorized into auto-generated and user-created indexes:

Auto-generated indexes:

If you have a partition key and a sort key, NimoShake will create a unique composite index and write it to MongoDB.

NimoShake will also create a hash index for the partition key and write it to MongoDB.

If you have only a partition key, NimoShake will create a hash index and a unique index in MongoDB.

User-created indexes: If you have a user-created index, NimoShake creates a hash index based on the primary key and writes the index to the destination ApsaraDB for MongoDB database.
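The auto-generated index rules above can be written down as a small Python sketch. The function name auto_index_specs and the dictionary layout are hypothetical, and the ascending order (1) on the unique index is an assumption. The source only states which kinds of indexes are created, not their sort direction. The string "hashed" is the MongoDB keyword for a hashed index.

```python
from typing import Any, Dict, List, Optional


def auto_index_specs(partition_key: str, sort_key: Optional[str] = None) -> List[Dict[str, Any]]:
    """Return the index specs implied by a DynamoDB key schema (illustrative only)."""
    if sort_key is not None:
        # Partition key + sort key: one unique composite index.
        unique_keys = [(partition_key, 1), (sort_key, 1)]
    else:
        # Partition key only: the unique index covers the partition key alone.
        unique_keys = [(partition_key, 1)]
    return [
        {"keys": unique_keys, "unique": True},
        # In both cases a hash index is created on the partition key.
        {"keys": [(partition_key, "hashed")], "unique": False},
    ]
```

Each spec could then be handed to a MongoDB driver, for example with PyMongo's Collection.create_index(spec["keys"], unique=spec["unique"]).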

Incremental migration

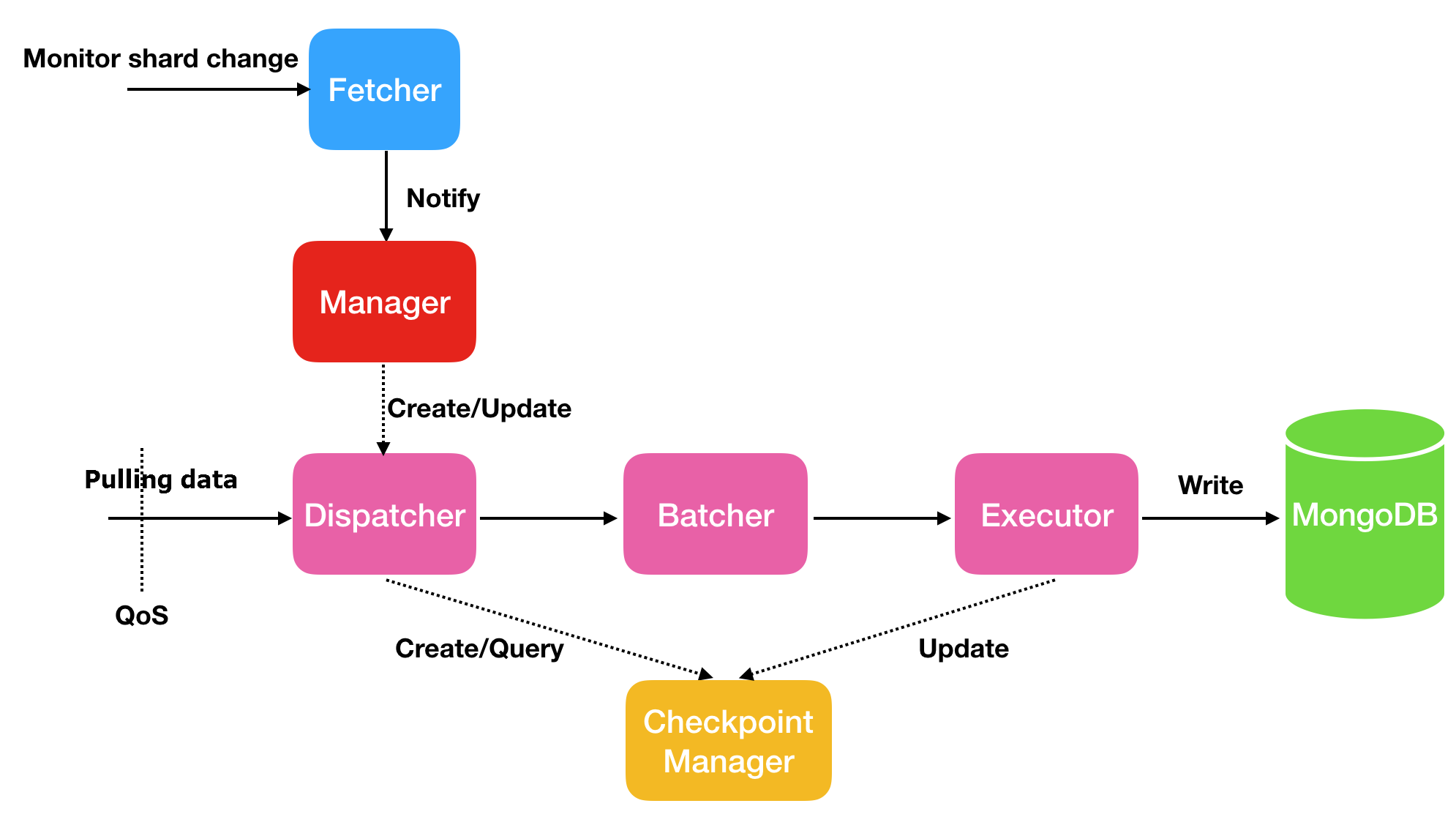

Incremental migration only synchronizes data; it does not synchronize indexes generated during the incremental synchronization process. The basic architecture is as follows:

| Thread | Description |
| --- | --- |
| Fetcher | Monitors shard changes in the stream. |
| Manager | Manages message notification and dispatcher creation. Each shard corresponds to one dispatcher. |
| Dispatcher | Retrieves incremental data from the source. For resumable transmission, data retrieval resumes from the last checkpoint instead of the beginning. |
| Batcher | Parses, packages, and aggregates incremental data retrieved by the dispatcher thread. |
| Executor | Writes the aggregated data to the destination ApsaraDB for MongoDB database and updates the checkpoint. |
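The division of work between the dispatcher, batcher, and executor roles can be sketched in Python as follows. This is a simplified illustration, not NimoShake's code: all function names, the in-memory shard lists, and the batch size are hypothetical. The stages also run one after another here, whereas a real pipeline would stream continuously.

```python
import queue
import threading
from typing import Dict, Iterator, List, Tuple

Change = Tuple[str, int, str]  # (shard_id, sequence_number, payload)


def dispatcher(shard_id: str, records: List[Tuple[int, str]],
               checkpoint: Dict[str, int], out: "queue.Queue[Change]") -> None:
    """Read one shard, skipping records at or before that shard's checkpoint."""
    start = checkpoint.get(shard_id, -1)
    for seq, payload in records:
        if seq > start:
            out.put((shard_id, seq, payload))


def batcher(source: "queue.Queue[Change]", batch_size: int) -> Iterator[List[Change]]:
    """Aggregate queued changes into batches of at most `batch_size` items."""
    batch: List[Change] = []
    while not source.empty():
        batch.append(source.get())
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:
        yield batch


def executor(batch: List[Change], destination: List[str],
             checkpoint: Dict[str, int]) -> None:
    """Write a batch to the destination, then advance each shard's checkpoint."""
    for shard_id, seq, payload in batch:
        destination.append(payload)
        checkpoint[shard_id] = max(seq, checkpoint.get(shard_id, -1))


def run_incremental(shards: Dict[str, List[Tuple[int, str]]],
                    checkpoint: Dict[str, int], batch_size: int = 2) -> List[str]:
    """Start one dispatcher thread per shard, then batch and write the results."""
    changes: "queue.Queue[Change]" = queue.Queue()
    workers = [
        threading.Thread(target=dispatcher, args=(shard_id, records, checkpoint, changes))
        for shard_id, records in shards.items()
    ]
    for worker in workers:
        worker.start()
    for worker in workers:
        worker.join()
    destination: List[str] = []
    for batch in batcher(changes, batch_size):
        executor(batch, destination, checkpoint)
    return destination
```

For example, calling run_incremental({"shard-1": [(0, "a"), (1, "b")], "shard-2": [(0, "c")]}, {"shard-1": 0}) writes only "b" and "c", because the first record of shard-1 is already covered by its checkpoint.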

Steps: Migrate Amazon DynamoDB to Alibaba Cloud (Ubuntu example)

This section demonstrates how to use NimoShake to migrate an Amazon DynamoDB database to ApsaraDB for MongoDB, using an Ubuntu system as an example.

Download NimoShake: Run the following command to download the NimoShake package:

wget https://github.com/alibaba/NimoShake/releases/download/release-v1.0.14-20250704/nimo-shake-v1.0.14.tar.gz

Note: We recommend downloading the latest version of the NimoShake package.

Decompress the package: Run the following command to decompress the NimoShake package:

tar zxvf nimo-shake-v1.0.14.tar.gz

Access the directory: After decompression, run the cd nimo-shake-v1.0.14 command to enter the nimo folder.

Open configuration file: Run the vi nimo-shake.conf command to open the NimoShake configuration file.

Configure NimoShake: Configure the parameters in the nimo-shake.conf file. The following table describes each configuration item:

| Parameter | Description | Example |
| --- | --- | --- |
| id | The ID of the migration task. The value is customizable and is used in the PID file name, the log name, the name of the database that stores checkpoints, and the destination database name. | id = nimo-shake |
| log.file | The path of the log file. If this parameter is not configured, logs are written to stdout. | log.file = nimo-shake.log |
| log.level | The logging level. Valid values: none: No logs; error: Error messages; warn: Warning information; info: System status; debug: Debugging information. Default value: info. | log.level = info |
| log.buffer | Specifies whether to enable log buffering. Valid values: true: Log buffering is enabled. Log buffering ensures high performance but may lose a few of the latest log entries upon exit; false: Log buffering is disabled. Performance may be degraded, but all log entries are displayed upon exit. Default value: true. | log.buffer = true |
| system_profile | The PPROF port, used for debugging and displaying stackful coroutine information. | system_profile = 9330 |
| full_sync.http_port | The RESTful port for the full migration phase. Use curl to view internal monitoring statistics. For more information, see the wiki. | full_sync.http_port = 9341 |
| incr_sync.http_port | The RESTful port for the incremental migration phase. Use curl to view internal monitoring statistics. For more information, see the wiki. | incr_sync.http_port = 9340 |
| sync_mode | The type of data migration. Valid values: all: Full migration and incremental migration; full: Only full migration. Default value: all. Note: Only full is supported when the source is an ApsaraDB for MongoDB instance compatible with the DynamoDB protocol. | sync_mode = all |
| incr_sync_parallel | Specifies whether to perform parallel incremental migration. Valid values: true: Parallel incremental migration is enabled. This consumes more memory; false: Parallel incremental migration is disabled. Default value: false. | incr_sync_parallel = false |
| source.access_key_id | The AccessKey ID for the Amazon DynamoDB database. | source.access_key_id = xxxxxxxxxxx |
| source.secret_access_key | The AccessKey secret for the Amazon DynamoDB database. | source.secret_access_key = xxxxxxxxxx |
| source.session_token | The temporary key for accessing the Amazon DynamoDB database. Optional if no temporary key is used. | source.session_token = xxxxxxxxxx |
| source.region | The region of the Amazon DynamoDB database. Optional if the region is not applicable or is auto-detected. | source.region = us-east-2 |
| source.endpoint_url | Configurable if the source is an endpoint type. Important: Enabling this parameter overrides the preceding source-related parameters. | source.endpoint_url = "http://192.168.0.1:1010" |
| source.session.max_retries | The maximum number of retries after a session failure. | source.session.max_retries = 3 |
| source.session.timeout | The session timeout period. 0 indicates that the session timeout is disabled. Unit: milliseconds. | source.session.timeout = 3000 |
| filter.collection.white | A whitelist of collection names to migrate. For example, filter.collection.white = c1;c2 indicates that the c1 and c2 collections are migrated and other collections are filtered out. | filter.collection.white = c1;c2 |
| filter.collection.black | The names of collections to be filtered out. For example, filter.collection.black = c1;c2 indicates that the c1 and c2 collections are filtered out and other collections are migrated. Important: This parameter cannot be used together with filter.collection.white. If both are specified, all collections are migrated. | filter.collection.black = c1;c2 |
| qps.full | Limits the execution frequency of the Scan command during full migration (maximum calls per second). Default value: 1000. | qps.full = 1000 |
| qps.full.batch_num | The number of data entries to pull per second during full migration. Default value: 128. | qps.full.batch_num = 128 |
| qps.incr | Limits the execution frequency of the GetRecords command during incremental migration (maximum calls per second). Default value: 1000. | qps.incr = 1000 |
| qps.incr.batch_num | The number of data entries to pull per second during incremental migration. Default value: 128. | qps.incr.batch_num = 128 |
| target.type | The type of the destination database. Valid values: mongodb: an ApsaraDB for MongoDB instance; aliyun_dynamo_proxy: a DynamoDB-compatible ApsaraDB for MongoDB instance. | target.type = mongodb |
| target.address | The connection string of the destination database. Supports MongoDB connection strings and DynamoDB-compatible connection addresses. For more MongoDB addresses, see Connect to a replica set instance or Connect to a sharded cluster instance. | target.address = mongodb://username:password@s-*****-pub.mongodb.rds.aliyuncs.com:3717 |
| target.mongodb.type | The type of the destination ApsaraDB for MongoDB instance. Valid values: replica: replica set instance; sharding: sharded cluster instance. | target.mongodb.type = sharding |
| target.db.exist | Specifies how to handle existing collections with the same name at the destination. Valid values: rename: NimoShake renames existing collections by adding a timestamp suffix to the name. For example, NimoShake changes c1 to c1.2019-07-01Z12:10:11. Warning: This may affect your business. Ensure prior preparation; drop: Deletes the existing collection at the destination. If this parameter is not configured, the migration terminates with an error if a collection with the same name already exists in the destination. | target.db.exist = drop |
| sync_schema_only | Specifies whether to migrate only the table schema. Valid values: true: Only the table schema is migrated; false: The migration is not limited to the table schema. Default value: false. | sync_schema_only = false |
| full.concurrency | The maximum number of collections that can be migrated concurrently in full migration. Default value: 4. | full.concurrency = 4 |
| full.read.concurrency | The document-level concurrency within a table during full migration: the maximum number of threads that can concurrently read documents from a single source table. Corresponds to the TotalSegments parameter of the Scan interface. | full.read.concurrency = 1 |
| full.document.concurrency | A parameter for full migration. The number of concurrent threads that write documents from a single table to the destination. Default value: 4. | full.document.concurrency = 4 |
| full.document.write.batch | The number of data entries to be aggregated and written at a time. If the destination is a DynamoDB protocol-compatible database, the maximum value is 25. | full.document.write.batch = 25 |
| full.document.parser | A parameter for full migration. The number of concurrent parser threads that convert DynamoDB protocol data to the corresponding protocol for the destination. Default value: 2. | full.document.parser = 2 |
| full.enable_index.user | A parameter for full migration. Specifies whether to migrate user-defined indexes. Valid values: true: Yes; false: No. Default value: true. | full.enable_index.user = true |
| full.executor.insert_on_dup_update | A parameter for full migration. Specifies whether to change the INSERT operation to the UPDATE operation if a duplicate key is encountered on the destination. Valid values: true: Yes; false: No. Default value: true. | full.executor.insert_on_dup_update = true |
| increase.concurrency | A parameter for incremental migration. The maximum number of shards that can be captured concurrently. Default value: 16. | increase.concurrency = 16 |
| increase.executor.insert_on_dup_update | A parameter for incremental migration. Specifies whether to change the INSERT operation to the UPDATE operation if the same keys exist on the destination. Valid values: true: Yes; false: No. Default value: true. | increase.executor.insert_on_dup_update = true |
| increase.executor.upsert | A parameter for incremental migration. Specifies whether to change the UPDATE operation to the UPSERT operation if no keys are found at the destination. Valid values: true: Yes; false: No. Note: An UPSERT operation checks whether the specified keys exist. If they do, the UPDATE operation is performed. Otherwise, the INSERT operation is performed. | increase.executor.upsert = true |
| checkpoint.type | The storage type for resumable transmission (checkpoint) information. Valid values: mongodb: Checkpoint information is stored in the ApsaraDB for MongoDB database. This value is available only when the target.type parameter is set to mongodb; file: Checkpoint information is stored on your computer. | checkpoint.type = mongodb |
| checkpoint.address | The address for storing checkpoint information. If the checkpoint.type parameter is set to mongodb, enter the connection string of the ApsaraDB for MongoDB database. If not configured, checkpoint information is stored in the destination ApsaraDB for MongoDB database. See Connect to a replica set instance or Connect to a sharded cluster instance for details. If the checkpoint.type parameter is set to file, enter a relative path (such as checkpoint). If not configured, the path defaults to the checkpoint folder relative to the NimoShake executable. | checkpoint.address = mongodb://username:password@s-*****-pub.mongodb.rds.aliyuncs.com:3717 |
| checkpoint.db | The name of the database for checkpoint information. If not configured, the database name is in the <id>-checkpoint format. Example: nimo-shake-checkpoint. | checkpoint.db = nimo-shake-checkpoint |
| convert._id | Adds a prefix to the _id field in DynamoDB to avoid conflicts with the _id field in MongoDB. | convert._id = pre |
| full.read.filter_expression | The DynamoDB expression used for filtering during the full migration. :begin and :end are variables that start with a colon. The actual values are specified in filter_attributevalues. | full.read.filter_expression = create_time > :begin AND create_time < :end |
| full.read.filter_attributevalues | The values corresponding to the variables in filter_expression for full migration filtering. N represents Number and S represents String. | full.read.filter_attributevalues = begin```N```1646724207280~~~end```N```1646724207283 |

Start migration: Run the following command to start data migration by using the configured nimo-shake.conf file:

./nimo-shake.linux -conf=nimo-shake.conf

Note: Upon completion of the full migration, full sync done! is displayed. If the migration is terminated due to an error, the program automatically exits and prints the corresponding error message to help you troubleshoot.
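Before you start a migration, a few of the constraints stated in the parameter table can be checked mechanically. The following Python sketch is one way to do that. It is not part of NimoShake: the functions parse_conf and check_conf are hypothetical, and the parser assumes that the file consists of key = value lines with # comments. Only rules stated in the table are checked.

```python
from typing import Dict, List


def parse_conf(text: str) -> Dict[str, str]:
    """Parse `key = value` lines; blank lines and `#` comments are skipped (assumed syntax)."""
    conf: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if sep:
            conf[key.strip()] = value.strip()
    return conf


def check_conf(conf: Dict[str, str]) -> List[str]:
    """Return a list of problems found; an empty list means no rule was violated."""
    problems: List[str] = []
    if conf.get("sync_mode", "all") not in ("all", "full"):
        problems.append("sync_mode must be all or full")
    # Whitelist and blacklist cannot be combined; with both set, nothing is filtered.
    if conf.get("filter.collection.white") and conf.get("filter.collection.black"):
        problems.append("filter.collection.white and filter.collection.black are both set")
    target = conf.get("target.type")
    # Checkpoints can be stored in MongoDB only when the destination type is mongodb.
    if conf.get("checkpoint.type") == "mongodb" and target and target != "mongodb":
        problems.append("checkpoint.type = mongodb requires target.type = mongodb")
    # A DynamoDB-compatible destination accepts at most 25 entries per write.
    batch = conf.get("full.document.write.batch", "")
    if target == "aliyun_dynamo_proxy" and batch.isdigit() and int(batch) > 25:
        problems.append("full.document.write.batch must not exceed 25 for this destination")
    return problems


# Usage: a deliberately inconsistent configuration that triggers three findings.
sample = """
# hypothetical example file
sync_mode = all
target.type = aliyun_dynamo_proxy
checkpoint.type = mongodb
full.document.write.batch = 100
filter.collection.white = c1;c2
filter.collection.black = c3
"""
findings = check_conf(parse_conf(sample))
```

The check returns a list of messages instead of raising an error, so all findings can be reviewed at once before the nimo-shake.conf file is corrected.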