Large language models (LLMs) lack private knowledge, and their general knowledge can be outdated. A common remedy is retrieval-augmented generation (RAG): relevant information is retrieved from external sources based on the user's input, combined with the user's query, and passed to the LLM so that it can generate more accurate answers. The knowledge base feature is the RAG capability of Model Studio. It supplements the LLM with private knowledge and up-to-date information.
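The retrieve-then-generate flow described above can be sketched in a few lines of Python. This is a toy illustration, not how Model Studio works internally: it assumes a hypothetical in-memory list of segments and scores them with bag-of-words cosine similarity, whereas production RAG systems typically use embedding models and a vector index. The names KNOWLEDGE_BASE, retrieve, and build_prompt are illustrative only.

```python
import math
import re
from collections import Counter

# Hypothetical private knowledge that a general-purpose LLM would not know.
KNOWLEDGE_BASE = [
    "The Bailian X1 phone has a 50 MP main camera and costs 2,999 yuan.",
    "The Bailian Z9 phone has a 108 MP camera and costs 4,599 yuan.",
    "All Bailian phones support fast charging.",
]


def tokenize(text: str) -> Counter:
    """Lowercase bag-of-words representation of a text."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, top_k: int = 2) -> list[str]:
    """Retrieval step: return the top_k segments most similar to the query."""
    q = tokenize(query)
    ranked = sorted(KNOWLEDGE_BASE, key=lambda seg: cosine(q, tokenize(seg)), reverse=True)
    return ranked[:top_k]


def build_prompt(query: str, segments: list[str]) -> str:
    """Augmentation step: combine the retrieved segments with the user's query."""
    context = "\n".join(f"- {seg}" for seg in segments)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


QUESTION = "Which Bailian phone costs less than 3,000 yuan?"
prompt = build_prompt(QUESTION, retrieve(QUESTION))
print(prompt)  # generation step: this prompt would then be sent to the LLM
```

Bag-of-words scoring is used only to keep the sketch dependency-free; it ranks by shared words, not meaning, which is exactly the gap that embedding-based retrieval closes.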

Console restrictions: Only International Edition users who created applications before April 21, 2025 can access the Application Development tab, as shown in the following figure.

This tab contains the following features: Applications (agent application and workflow application), Components (prompt engineering and plug-in), and Data (knowledge base and application data). These are all preview features. Use them with caution in production environments.

API call limits: Only International Edition users who created applications before April 21, 2025 can call the application data, knowledge base, and prompt engineering APIs.

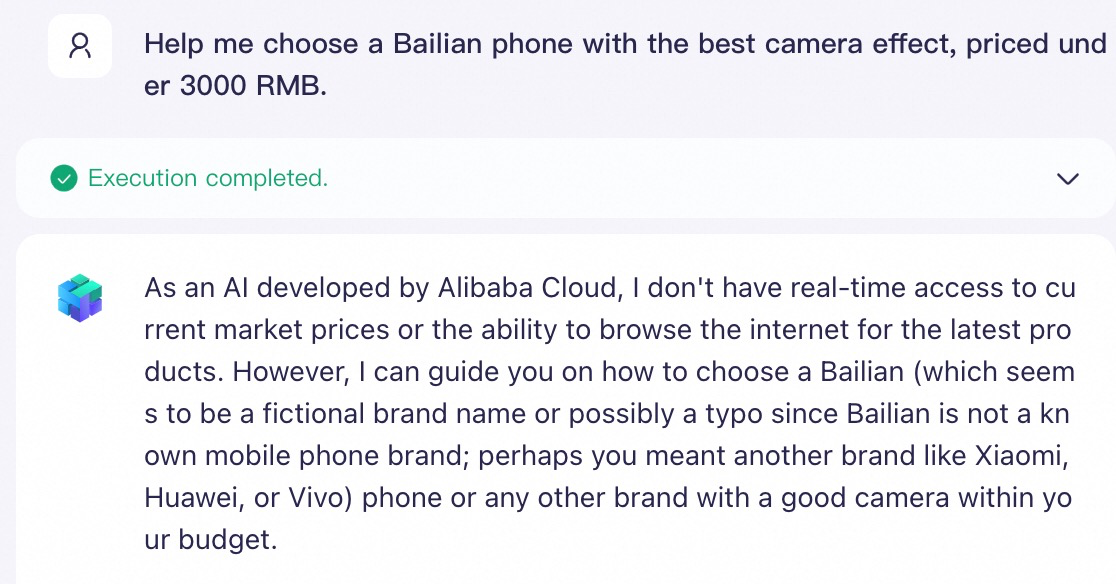

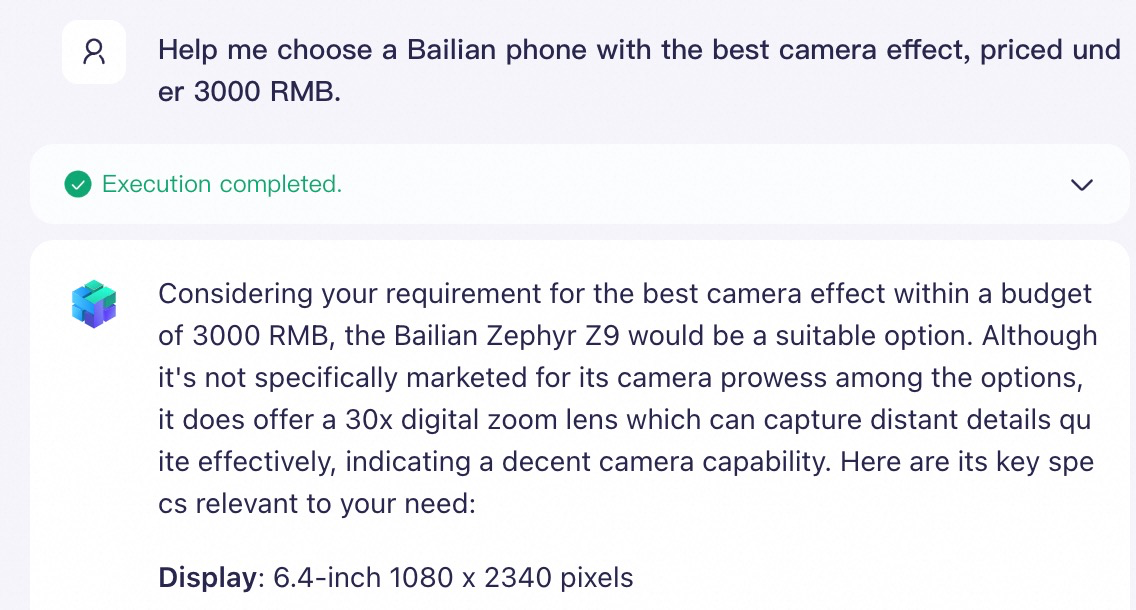

| Application without a dedicated knowledge base | Application with a dedicated knowledge base |
| --- | --- |
| Without a dedicated knowledge base, the LLM cannot accurately answer questions about specific domains. | With a dedicated knowledge base, the LLM can accurately answer questions about specific domains. |

Model availability

You can use a knowledge base with the following models:

Qwen-Max/Plus/Turbo

QwenVL-Max/Plus

Qwen (Qwen2.5, etc.)

This list may be updated at any time. For the most current list, check the models available on the My Applications page when you create an application.

Getting started

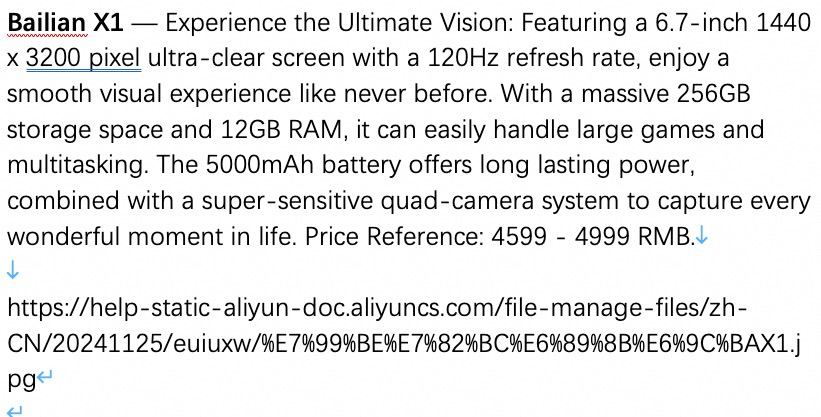

This section describes how to build an LLM Q&A application that can answer questions about a specific domain (in this case, a fictional "Bailian phone") without writing any code.

1. Build a knowledge base

Go to the Knowledge Base page and click Create Knowledge Base. Enter a Name, leave the other settings at their default values, and click Next Step.

Select the Default Category and upload the Bailian Phones Specifications.docx file. Click Next Step, and then click Import.

2. Integrate with a business application

After you create a knowledge base, you can associate it with a specific application (which must be in the same workspace as the knowledge base) or an external application to process retrieval requests.

Integrate with an agent application

Go to the My Applications page, find the target agent application, and click Configure on its card. Then, select a model for the application.

Click the + button to the right of Document to add the knowledge base that you created in the previous step. You can keep the default values for the similarity threshold and weight.

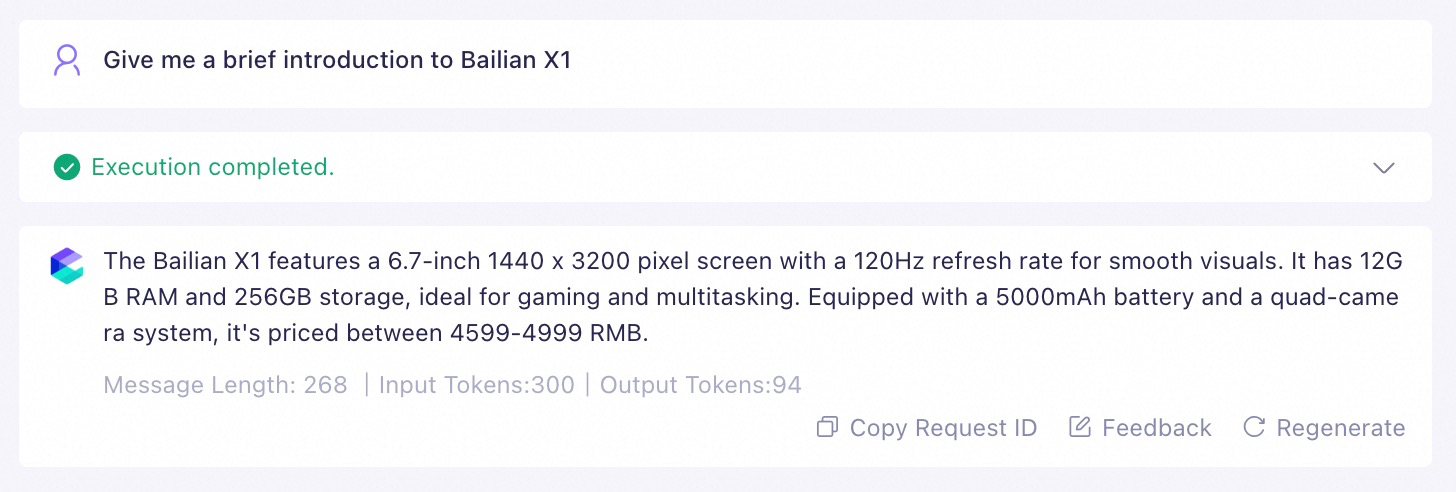

Ask a question in the input box on the right. The LLM will use the knowledge base that you created to generate an answer.

For example: "Please help me choose the best Bailian phone for photography that costs less than 3,000 yuan."

Integrate with a workflow application

Go to the My Applications page, find the target workflow application, and click Configure on its card. Then, drag a Knowledge Base node onto the canvas and connect it after the Start node.

Configure the Knowledge Base node:

Input: In the Value drop-down list to the right of the variable name content, select the variable to use as input.

Select Knowledge Base: Select the knowledge base that you created in the previous step.

Set topK (Optional): This determines the number of knowledge segments returned to downstream nodes (usually LLM nodes).

Increasing this value usually improves the accuracy of the LLM's answers, but it also increases the number of input tokens consumed by the LLM.
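The topK trade-off can be illustrated with a small sketch: the node keeps the topK highest-scoring segments, and every extra segment adds input tokens. The scored segments below are hypothetical, and the 4-characters-per-token estimate is a crude assumption; real tokenizers count differently.

```python
import heapq

# Hypothetical retrieval output for one query: (segment text, similarity score).
SCORED_SEGMENTS = [
    ("X1: 50 MP camera, 2,999 yuan.", 0.82),
    ("Z9: 108 MP camera, 4,599 yuan.", 0.77),
    ("All models support fast charging.", 0.41),
    ("The warranty lasts two years.", 0.12),
]


def select_top_k(scored: list[tuple[str, float]], top_k: int) -> list[str]:
    """Keep the top_k highest-scoring segments, best first."""
    best = heapq.nlargest(top_k, scored, key=lambda item: item[1])
    return [text for text, _score in best]


def rough_token_count(text: str) -> int:
    """Crude estimate (about 4 characters per token); real tokenizers differ."""
    return max(1, len(text) // 4)


for k in (1, 3):
    context = "\n".join(select_top_k(SCORED_SEGMENTS, k))
    print(f"topK={k}: ~{rough_token_count(context)} extra input tokens")
```

A larger topK makes it more likely that the right segment reaches the LLM, at the price of a longer prompt.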

Drag an LLM node onto the canvas and connect it after the Knowledge Base node and before the End node.

Configure the LLM node:

In the Model Configuration list, select a model for the node.

In the Prompt field, enter a prompt that instructs the LLM to use the knowledge base. You must enter / to insert the result variable, which represents the results returned by the knowledge base retrieval.

Configure the End node: Enter /, then select the variable that holds the result returned by the LLM as the output.

Click Test in the upper-right corner of the page. Then, ask a question in the input box on the right. The LLM will use the knowledge base that you created to generate an answer.

For example: "Please help me choose the best Bailian phone for photography that costs less than 3,000 yuan."

Integrate with an external application

In addition to building applications in Model Studio, you can also use the retrieval capability of a knowledge base as an independent RAG service. Using the GenAI Service Platform SDK, you can quickly integrate this service into external AI applications.

For detailed integration steps, see the Knowledge base API guide.
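When the knowledge base serves as an independent RAG service, the external application only needs something that returns scored segments for a query. The sketch below assumes that design: FakeKnowledgeBaseRetriever is a hypothetical stand-in for a client that calls the Retrieve API through the SDK (it does not call any real API), and Segment, Retriever, and build_context are illustrative names.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass
class Segment:
    text: str
    score: float


class Retriever(Protocol):
    """Anything that can return scored segments for a query."""

    def retrieve(self, query: str) -> list[Segment]: ...


class FakeKnowledgeBaseRetriever:
    """Hypothetical stand-in for a client that calls the knowledge base Retrieve API.

    A real implementation would call the SDK described in the Knowledge base API guide.
    """

    def __init__(self, segments: list[Segment]):
        self._segments = segments

    def retrieve(self, query: str) -> list[Segment]:
        return list(self._segments)


def build_context(question: str, retriever: Retriever, min_score: float = 0.5) -> str:
    """Fetch segments, drop weak matches, and assemble the prompt for your own LLM."""
    segments = [s for s in retriever.retrieve(question) if s.score >= min_score]
    if not segments:
        return f"Question: {question}"  # fall back to the bare question
    segments.sort(key=lambda s: s.score, reverse=True)
    context = "\n".join(s.text for s in segments)
    return f"Context:\n{context}\n\nQuestion: {question}"


retriever = FakeKnowledgeBaseRetriever([
    Segment("Bailian X1 costs 2,999 yuan.", 0.83),
    Segment("Warranty lasts two years.", 0.21),
])
print(build_context("How much is the X1?", retriever))
```

Keeping the retrieval client behind a small interface lets the external application swap the fake for a real SDK-backed client without touching the prompt logic.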

3. Optimize the knowledge base (Optional)

If knowledge retrieval is incomplete or the content is inaccurate during the Q&A process, see Optimize knowledge base performance.

User guide

On the Knowledge Base page, you can view and manage all knowledge bases in the current workspace.

Knowledge Base ID: The value of the ID field on each knowledge base card. It is used in API calls and other scenarios.

Create a knowledge base

On the Knowledge Base page, click Create Knowledge Base.

Select the appropriate Knowledge Base Type based on your application scenario. A single knowledge base can only support one type. You cannot change the knowledge base type after creation.

Document Search (for retrieval scenarios)

Scenarios:

This type is suitable for retrieving unstructured data (data that is not organized in a predefined table schema, including text, tables, and images), such as internal company documents and product manuals.

If a file contains images and you need the application to return them in the answer, select Document Search.

Data Source Integration: You can upload local files or import from Alibaba Cloud Object Storage Service (OSS).

Data Query (for Chatbot or NL2SQL scenarios)

Scenarios:

This type is suitable for building Q&A systems based on structured data (data organized in a predefined table schema), such as FAQ, product data, or personnel information query assistants.

If your data consists of complete FAQ Q&A pairs, select Data Query. For example, if you have an Excel file that contains two columns, Question and Answer, a Data Query knowledge base lets you retrieve information only from the Question column and use only the content from the Answer column as a reference for the LLM's response. A Document Search knowledge base cannot easily achieve this effect.
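The Question/Answer split can be pictured with a short sketch: the user's question is compared only with the Question column, and only the matching Answer is returned. This is an illustration of the behavior described above, not Model Studio's retrieval algorithm; the FAQ rows, the difflib string similarity, and the 0.5 threshold are all assumptions.

```python
from difflib import SequenceMatcher

# Rows of a hypothetical FAQ spreadsheet with Question and Answer columns.
FAQ_ROWS = [
    {"Question": "How do I reset my Bailian phone?", "Answer": "Go to Settings > System > Reset."},
    {"Question": "What is the warranty period?", "Answer": "Two years from the date of purchase."},
]


def similarity(a: str, b: str) -> float:
    """Simple string similarity in [0, 1]; a real system would use embeddings."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def lookup_answer(user_question: str, rows: list[dict], threshold: float = 0.5):
    """Match only against the Question column and return only the Answer column."""
    best = max(rows, key=lambda row: similarity(user_question, row["Question"]))
    if similarity(user_question, best["Question"]) < threshold:
        return None  # no sufficiently similar question: nothing is passed to the LLM
    return best["Answer"]


print(lookup_answer("How long is the warranty period?", FAQ_ROWS))
```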

You can import multiple Excel files with completely identical table schemas.

Data Source Integration: You can upload local XLS or XLSX files.

Image Q&A (for search-by-image scenarios)

Scenarios:

This type is suitable for building multimodal retrieval applications that search by image or by image plus text, such as a product shopping guide or an image Q&A assistant.

Data Source Integration: You can upload local XLS or XLSX files.

XLS and XLSX files must contain publicly accessible image URLs to build image indexes. For details, see the creation instructions below.
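Before uploading, it can help to catch rows whose image links are obviously unusable. The sketch below assumes the rows have already been read from the spreadsheet into dictionaries and that the link column is named image_url (a hypothetical name). It checks only that each value is an absolute http or https URL; it does not verify that the URL is publicly reachable.

```python
from urllib.parse import urlparse


def invalid_image_urls(rows: list[dict], column: str = "image_url") -> list[tuple[int, str]]:
    """Return (spreadsheet row number, value) for entries that are not absolute http(s) URLs.

    Syntax check only: a well-formed URL may still be private or unreachable.
    """
    bad = []
    for row_number, row in enumerate(rows, start=2):  # row 1 is the header row
        value = (row.get(column) or "").strip()
        parsed = urlparse(value)
        if parsed.scheme not in ("http", "https") or not parsed.netloc:
            bad.append((row_number, value))
    return bad


rows = [
    {"image_url": "https://example.com/a.jpg"},
    {"image_url": "file:///tmp/b.jpg"},
    {"image_url": ""},
    {"image_url": "example.com/c.jpg"},
]
print(invalid_image_urls(rows))
```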

During peak hours, the entire creation process may take several hours, depending on the data volume. Please wait patiently.

Update a knowledge base

Any changes to the knowledge base content are synchronized in real time with all applications that reference it.

Document Search knowledge bases

Automatic update (Recommended)

You can achieve this by integrating the APIs of OSS, Function Compute (FC), and Model Studio knowledge bases. Follow these steps:

Create a bucket: Go to the OSS console and create an OSS Bucket to store your original files.

Create a knowledge base: Create an unstructured knowledge base to store private knowledge content.

Create a user-defined function: Go to the FC console and create a function for file change events, such as file creation and deletion. For more information, see Create a function. These functions synchronize file changes in OSS to the created knowledge base by calling the relevant APIs from the Knowledge base API guide.

Create an OSS trigger: In FC, associate an OSS trigger with the user-defined function that you created in the previous step. When a file change event is detected (for example, when a new file is uploaded to OSS), the corresponding trigger is activated, which triggers FC to execute the corresponding function.
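The user-defined function in the steps above might look roughly like the following Python sketch. It assumes the OSS event notification payload contains an events list whose entries carry an eventName such as ObjectCreated:PutObject or ObjectRemoved:DeleteObject plus the bucket name and object key; check the OSS trigger documentation for the exact payload. The two knowledge base helpers are hypothetical placeholders for the calls in the Knowledge base API guide.

```python
import json


# Hypothetical placeholders for the knowledge base API calls described in the
# Knowledge base API guide; replace them with real SDK calls.
def add_file_to_knowledge_base(bucket: str, key: str) -> None:
    print(f"import oss://{bucket}/{key}")


def delete_file_from_knowledge_base(key: str) -> None:
    print(f"delete {key} (should be a no-op if the file is not in the knowledge base)")


def handler(event, context):
    """Function Compute entry point invoked by an OSS trigger."""
    payload = json.loads(event)  # the trigger passes the event as JSON bytes or str
    actions = []
    for record in payload.get("events", []):
        name = record["eventName"]
        bucket = record["oss"]["bucket"]["name"]
        key = record["oss"]["object"]["key"]
        if name.startswith("ObjectCreated"):
            # In-place updates are not supported, so remove any old version
            # first and then import the new file.
            delete_file_from_knowledge_base(key)
            add_file_to_knowledge_base(bucket, key)
            actions.append(("upsert", key))
        elif name.startswith("ObjectRemoved"):
            delete_file_from_knowledge_base(key)
            actions.append(("delete", key))
    return json.dumps(actions)
```

Treating every upload as delete-then-import mirrors the manual rule for modifying files, so an overwritten object in OSS does not leave its old version in the knowledge base.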

Manual update

On the Knowledge Base page, find the target knowledge base, and click View Details on its card.

How to add a new file: Click Upload Data and select existing files in Application Data. For more information, see How to upload files to application data.

How to delete a file: Find the target file and click Delete on its right.

This operation only removes the file from the knowledge base and does not delete the source file in Application Data.

How to modify file content: In-place updates and overwriting file uploads are not supported. You must first delete the old version of the file from the knowledge base and then re-import the new, modified version.

Note: If you keep the old version of the file, outdated content may be retrieved and recalled.

Data Query and Image Q&A knowledge bases

Automatic update

Not supported.

Manual update

When the data source of a knowledge base is a data table in Application Data, it can only be updated manually. The process involves two steps.

Step 1: Update the data table

Go to the Application Data tab. In the list on the left, select the target data table, and click Import Data.

How to insert new data: Set Import Type to Incremental Upload. You need to upload an Excel file that only contains the table header and the new data rows.

The file's header must match the current table schema. You can use the Download Template feature on the page to obtain a standard header file and fill in the new data directly.
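A quick local check can catch header mismatches before an incremental upload. This sketch assumes you have already read the header row of the upload file and the current table schema into lists of column names. It treats a different column order as a mismatch, which is the strictest reading of "must match"; whether the console actually requires the same order is not stated here.

```python
def check_incremental_header(current_schema: list[str], upload_header: list[str]) -> list[str]:
    """Return a list of problems; an empty list means the header matches exactly."""
    problems = []
    missing = [col for col in current_schema if col not in upload_header]
    extra = [col for col in upload_header if col not in current_schema]
    if missing:
        problems.append(f"missing columns: {missing}")
    if extra:
        problems.append(f"unexpected columns: {extra}")
    if not missing and not extra and upload_header != current_schema:
        problems.append("same columns but different order")
    return problems


print(check_incremental_header(["Question", "Answer"], ["Question", "Answer"]))
```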

How to delete data: Set Import Type to Upload and Overwrite. You need to upload an Excel file that contains the header and the latest full data (with the records to be deleted removed).

How to obtain the full data: Click the icon on the page to download the data in XLSX format.

How to modify data: Set Import Type to Upload and Overwrite. You need to upload an Excel file that contains the header and the latest full data (including the corresponding modifications).
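Because deletions and modifications both use Upload and Overwrite, the file you upload must be the complete table after the changes. The sketch below shows that transformation on rows already read into dictionaries. It assumes a hypothetical key column (ID here) whose values uniquely identify rows; your table may not have one, in which case you would edit the downloaded file directly.

```python
def build_overwrite_rows(full_rows: list[dict], key: str,
                         delete_keys=(), modifications=None) -> list[dict]:
    """Return the complete table to upload with Upload and Overwrite.

    full_rows: current data (for example, from the downloaded XLSX file).
    key: a column whose values uniquely identify rows (hypothetical).
    delete_keys: key values of rows to drop.
    modifications: {key value: {column: new value}} to apply to kept rows.
    """
    modifications = modifications or {}
    deleted = set(delete_keys)
    result = []
    for row in full_rows:
        if row[key] in deleted:
            continue  # rows to delete are simply left out of the full upload
        updated = dict(row)  # copy so the original rows stay unchanged
        updated.update(modifications.get(row[key], {}))
        result.append(updated)
    return result


rows = [
    {"ID": "1", "Question": "Q1", "Answer": "A1"},
    {"ID": "2", "Question": "Q2", "Answer": "A2"},
    {"ID": "3", "Question": "Q3", "Answer": "A3"},
]
print(build_overwrite_rows(rows, "ID", delete_keys=["2"], modifications={"3": {"Answer": "A3 (updated)"}}))
```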

Step 2: Synchronize changes to the knowledge base

Return to the Knowledge Base list, find the target knowledge base, and click View Details on its card. Click the icon at the top left of the data table. After you confirm the operation, the latest content of the data table is synced to the knowledge base.

You must repeat the above steps manually after each subsequent update. Data changes cannot be synchronized automatically for knowledge bases that use Application Data as the data source.

Edit a knowledge base

After a knowledge base is created, you can only modify the Knowledge Base Name, Knowledge Base Description, and Similarity Threshold. Other configurations cannot be changed. Editing a knowledge base using an API is not supported.

Procedure: On the Knowledge Base page, find the target knowledge base, click the icon on its card, and then click Edit.

Delete a knowledge base

This operation does not delete the source files or data tables in Application Data.

This operation is irreversible. Please proceed with caution.

Before you can delete a knowledge base, you must first disassociate it from all published applications.

Associated unpublished applications will not block the delete operation.

Hit testing

Imagine that you have built a knowledge base, but you find that in actual use, the AI application often provides irrelevant answers or fails to find information that exists in the knowledge base. Hit testing is a key tool that helps you identify and resolve these issues proactively.

With hit testing, you can:

Verify whether the knowledge base can provide effective knowledge input for the AI application.

Fine-tune the similarity threshold to balance recall rate and accuracy.

Discover content gaps or quality issues in the knowledge base.

Scenario examples

Scenario 1: Customer inquires about product price

Test input: "How much is your Bailian phone?" Expected result: Text segments that contain price information are retrieved.

Scenario 2: Troubleshooting a technical issue

Test input: "What should I do if my device can't connect to WiFi?" Expected result: Text segments about WiFi connection troubleshooting are retrieved.

Procedure

On the Knowledge Base page, find the target knowledge base, and click Hit Test on its card.

Enter a question in the test interface (we recommend collecting frequently asked user questions in advance) and observe the retrieval results.

Retrieval result: The hit result for this test keyword (sorted by similarity in descending order). Click any segment to view its specific content.

Image upload icon: For an Image Q&A knowledge base, the system first converts the input image into a vector and retrieves relevant records. Then, it sends these records along with the question to the LLM to generate an answer. For Document Search or Data Query knowledge bases, the uploaded image does not participate in the retrieval.

Confirm whether the relevant text segments are correctly retrieved. If not, you need to adjust the similarity threshold and repeat the previous step.

Click View Historical Retrieval Records to compare the retrieval performance under different past threshold settings.
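Comparing thresholds is easier with numbers. The sketch below assumes you have noted, for one test question, the similarity score of each returned segment and which segments you consider relevant. It computes precision (the accuracy side of the trade-off) and recall at several candidate thresholds. The segment IDs and scores are hypothetical.

```python
def precision_recall(results: list[tuple[str, float]], relevant: set[str],
                     threshold: float) -> tuple[float, float]:
    """Precision and recall of the segments whose score is at or above threshold."""
    retrieved = {seg for seg, score in results if score >= threshold}
    hits = retrieved & relevant
    precision = len(hits) / len(retrieved) if retrieved else 0.0  # nothing returned
    recall = len(hits) / len(relevant) if relevant else 1.0
    return precision, recall


# Hypothetical hit test output for "How much is your Bailian phone?"
results = [("price", 0.81), ("price_table", 0.64), ("wifi", 0.38), ("warranty", 0.22)]
relevant = {"price", "price_table"}

for threshold in (0.2, 0.5, 0.7):
    p, r = precision_recall(results, relevant, threshold)
    print(f"threshold={threshold}: precision={p:.2f}, recall={r:.2f}")
```

In this made-up example, a low threshold returns irrelevant segments and a high one drops a relevant segment, so a middle value balances both.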

Quotas and limits

For information about the data sources and capacity supported by a knowledge base, see Knowledge base quotas and limits.

Each application can be associated with a maximum of 5 Document Search knowledge bases, 5 Data Query knowledge bases, and 1 Image Q&A knowledge base.
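If you manage knowledge base associations in scripts or configuration files, the per-application limits stated above can be checked before you apply them. This is a hypothetical local check, not a Model Studio API; the type labels simply reuse the console names.

```python
from collections import Counter

# Per-application limits from the text above.
MAX_PER_TYPE = {"Document Search": 5, "Data Query": 5, "Image Q&A": 1}


def check_associations(kb_types: list[str]) -> list[str]:
    """kb_types: the type of each knowledge base planned for one application."""
    errors = []
    for kb_type, count in Counter(kb_types).items():
        limit = MAX_PER_TYPE.get(kb_type)
        if limit is None:
            errors.append(f"unknown type: {kb_type}")
        elif count > limit:
            errors.append(f"{kb_type}: {count} > {limit}")
    return errors


print(check_associations(["Document Search"] * 5 + ["Image Q&A"] * 2))
```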

Billing

The knowledge base feature is free of charge, but you may incur fees when you call an application that references a knowledge base.

| Step | Billing information |
| --- | --- |
| | Free of charge. |
| | When you call an application, text segments retrieved from the knowledge base increase the number of input tokens for the LLM. This may lead to an increase in model inference (call) costs. For details about model inference (call) costs, see Billable items. Note: If you only retrieve from a specified knowledge base by calling the Retrieve API and do not use an application for generation, you will not be charged. |
| | Free of charge. |
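The effect of retrieval on input cost is simple arithmetic: input tokens grow by roughly the number of retrieved segments times their average length. The sketch below assumes a fixed average segment length and uses a placeholder unit price; real prices are listed in Billable items, and output tokens are not included.

```python
def estimate_input_cost(query_tokens: int, tokens_per_segment: int, top_k: int,
                        price_per_million_tokens: float) -> tuple[int, float]:
    """Estimate input tokens and input cost for one application call.

    tokens_per_segment is an assumed average; output tokens are not counted.
    """
    input_tokens = query_tokens + tokens_per_segment * top_k
    return input_tokens, input_tokens / 1_000_000 * price_per_million_tokens


HYPOTHETICAL_PRICE = 0.4  # placeholder price per million input tokens, not a real rate

without_kb = estimate_input_cost(50, 300, 0, HYPOTHETICAL_PRICE)
with_kb = estimate_input_cost(50, 300, 5, HYPOTHETICAL_PRICE)
print(without_kb, with_kb)
```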

API reference

For the latest complete list of knowledge base APIs and their input and output parameters, see API references (knowledge base).

For specific usage instructions and code examples for the related APIs, see Knowledge base API guide.