This topic describes the features of Queen SDK and how to download the Queen SDK demos.

Demos

| Platform | Demo | Sample project | Integration guide |
| --- | --- | --- | --- |
| Android | Scan the QR code in DingTalk to download the Queen SDK demo for Android or iOS. | | |
| iOS | | | |
| Web | | | |

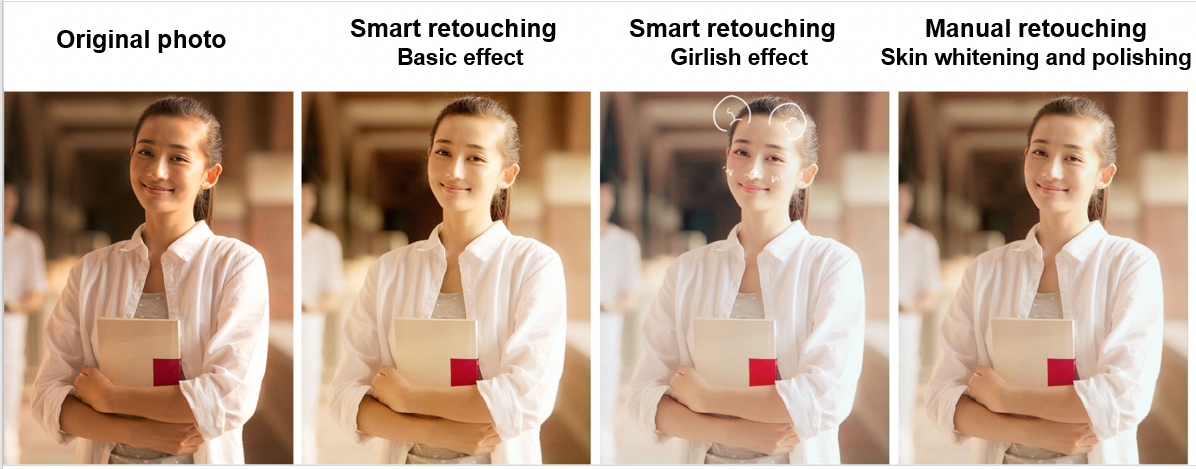

Face retouching

Queen SDK provides various face retouching effects, such as skin whitening, skin smoothing, blemish concealing, and teeth whitening. These effects can be applied either automatically or manually. You can adjust the levels of these effects to achieve a naturally beautiful look.

The face retouching effects automatically adapt to all kinds of lighting conditions and environments.

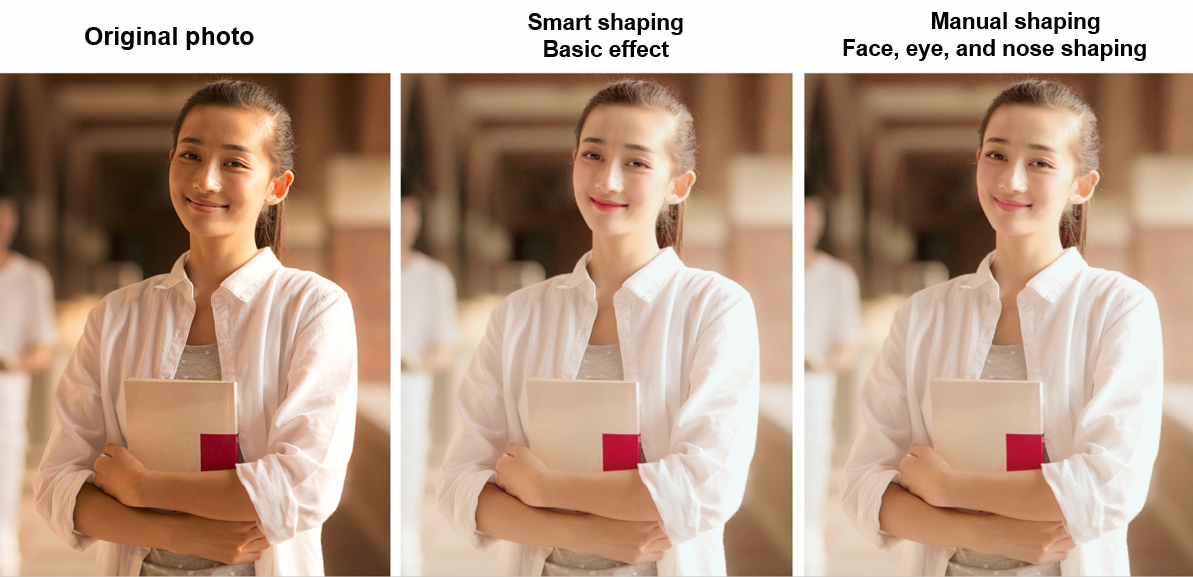

Face shaping

Face shaping is based on highly accurate facial keypoint detection and an advanced intelligent vision algorithm. You can use face shaping effects to modify facial features and facial contours. For example, you can change the size of your eyes, face, and chin. You can also adjust the level of each effect.

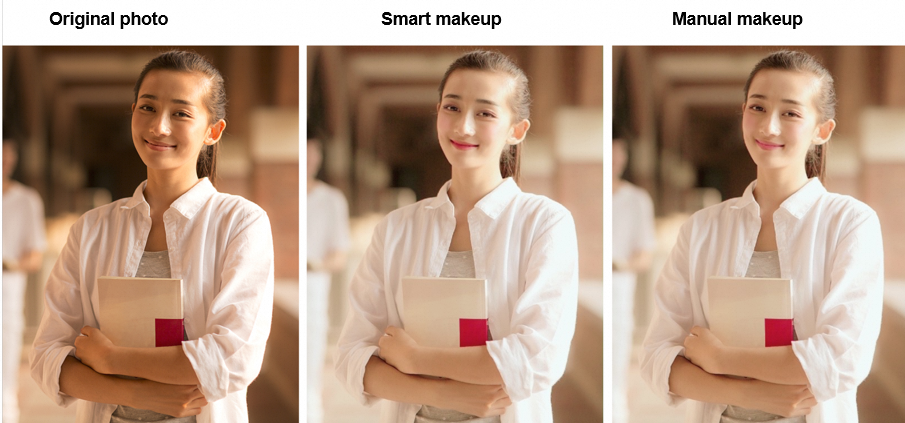

Makeup

Makeup effects adapt to changes in facial expressions and movements. This delivers a consistent makeup effect on videos.

The library of makeup types and materials is continually expanded to fit more use cases.

Face play

Based on proprietary algorithms and technologies, Queen SDK supports fun facial effects such as pixelation and facemasks. More effects will be added in the future.

Filters

Queen SDK provides various filters, which are rendered in real time.

The filter library is continuously expanded, and filter effects are continuously improved. In addition, we're preparing to launch a platform where you can manage your filters.

Stickers

Queen SDK provides stickers that adapt to changes in facial expressions and movements.

The sticker library is continuously expanded to include more animated and static stickers.

Chroma key

Chroma key is based on color gamut detection and an image segmentation algorithm. It supports both blue and green screens of different textures and color gamuts. This helps you better manage background segmentation and color spills.

Chroma key can accurately extract still or moving subjects under all kinds of lighting conditions and angles.
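
To illustrate the general idea of keying against a green or blue screen and limiting color spill, the following is a minimal per-pixel sketch. It is not the algorithm used by Queen SDK and does not call any Queen SDK API. The function names (`key_alpha`, `suppress_spill`, `composite`) and the `threshold` and `softness` values are assumptions made for this example only.

```python
def key_alpha(pixel, key=1, threshold=40, softness=60):
    """Return foreground opacity in [0.0, 1.0] for one (r, g, b) pixel.

    key is the index of the screen color channel: 1 for green, 2 for blue.
    threshold and softness are illustrative values, not SDK parameters.
    """
    others = [c for i, c in enumerate(pixel) if i != key]
    # How strongly the screen color dominates the other two channels.
    dominance = pixel[key] - max(others)
    if dominance <= threshold:
        return 1.0  # clearly foreground
    if dominance >= threshold + softness:
        return 0.0  # clearly screen
    # Soft edge between foreground and screen.
    return 1.0 - (dominance - threshold) / softness


def suppress_spill(pixel, key=1):
    """Clamp the screen channel so it never exceeds the other channels."""
    limit = max(c for i, c in enumerate(pixel) if i != key)
    return tuple(min(c, limit) if i == key else c for i, c in enumerate(pixel))


def composite(foreground, background, key=1):
    """Blend one foreground pixel over one background pixel."""
    alpha = key_alpha(foreground, key=key)
    cleaned = suppress_spill(foreground, key=key)
    return tuple(
        round(alpha * f + (1.0 - alpha) * b) for f, b in zip(cleaned, background)
    )


if __name__ == "__main__":
    print(composite((0, 255, 0), (10, 20, 30)))    # pure green screen pixel
    print(composite((200, 50, 50), (10, 20, 30)))  # reddish subject pixel
```

A production keyer would work on whole frames and combine this kind of color test with segmentation, as the description above indicates. The sketch only shows why a soft threshold and a spill clamp are useful.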

Background replacement

Background replacement can accurately extract still or moving subjects under all kinds of lighting conditions and angles, even if the real background is complex.

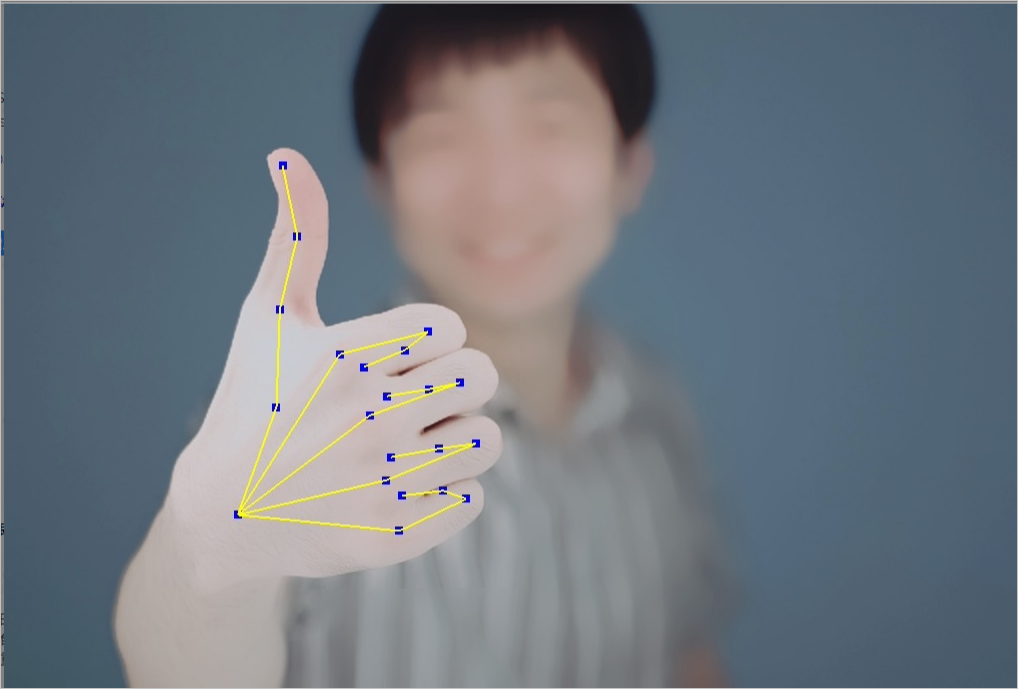

Gesture recognition

Gesture recognition can accurately detect 21 key points on hands in real time and identify 25 common gestures by using proprietary algorithms. Up to 8 hands can be recognized within an image.

Movement detection

Based on proprietary algorithms, movement detection accurately detects 18 key points on the human body in real time. It identifies 13 static postures, such as standing upright, raising hands, hand heart, arms akimbo, and superman pose, and 9 movements, such as rope jumping, jumping jacks, squats, push-ups, and sit-ups. The number of repetitions of each movement is reported in real time.
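
As a rough illustration of how repetitions could be counted from body key points, the sketch below computes a joint angle from three 2D points and counts a repetition each time the angle drops below one threshold and then rises above another. This is a generic technique, not the method Queen SDK uses. The function names and the threshold values (`down_below`, `up_above`) are hypothetical, and the key points are assumed to be plain `(x, y)` tuples.

```python
import math


def joint_angle(a, b, c):
    """Return the angle at point b, in degrees, formed by the points a-b-c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        return 0.0  # degenerate input: two points coincide
    cosine = (v1[0] * v2[0] + v1[1] * v2[1]) / norm
    # Clamp to guard against floating-point drift outside [-1, 1].
    return math.degrees(math.acos(max(-1.0, min(1.0, cosine))))


def count_reps(angles, down_below=100.0, up_above=160.0):
    """Count down-then-up cycles in a sequence of per-frame joint angles.

    Two separate thresholds (hysteresis) keep small jitter around a single
    threshold from being counted as extra repetitions.
    """
    reps = 0
    is_down = False
    for angle in angles:
        if not is_down and angle < down_below:
            is_down = True
        elif is_down and angle > up_above:
            is_down = False
            reps += 1
    return reps


if __name__ == "__main__":
    # Hypothetical knee angles over time for two squats.
    knee_angles = [170, 120, 90, 130, 170, 95, 99, 101, 165]
    print(count_reps(knee_angles))
```

For a squat, `joint_angle` would be fed the hip, knee, and ankle key points of each frame, and the resulting angle sequence passed to `count_reps`.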

Body shaping

Body shaping is performed by using proprietary algorithms. You can slim bodies, legs, arms, necks, and waists, lengthen legs, resize heads, and enlarge breasts based on different human body shapes. The effects look natural and keep bodies well-proportioned. Body shaping is suitable for various scenarios such as live streaming and panoramic photography.

Hairdressing

Queen SDK uses proprietary algorithms to accurately recognize hair in real time and to change hair color with a dye effect. Hair recognition works across different hairstyles, postures, and background environments. You can specify the hair color based on your business requirements.

AR writing

Queen SDK can recognize the trajectory of finger key points by using gesture recognition algorithms. The content that you write is rendered in the video based on whether your hand starts or stops writing. This lets you achieve an AR writing effect for videos. AR writing can be used in various scenarios such as live streaming, teaching, and online interaction.
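
The start and stop behavior described above can be sketched as grouping fingertip positions into strokes. This is an illustrative sketch only, not Queen SDK code. It assumes each frame yields a hypothetical `(x, y, writing)` sample, where `writing` is a flag that some gesture recognizer has already set.

```python
def build_strokes(samples):
    """Group fingertip samples into strokes.

    samples is an iterable of (x, y, writing) tuples, one per frame.
    A new stroke starts when writing becomes True and ends when it
    becomes False. Returns a list of strokes, each a list of (x, y).
    """
    strokes = []
    current = None
    for x, y, writing in samples:
        if writing:
            if current is None:
                current = []  # the hand has just started writing
                strokes.append(current)
            current.append((x, y))
        else:
            current = None  # the hand stopped writing; close the stroke
    return strokes


if __name__ == "__main__":
    frames = [
        (0, 0, False),
        (1, 1, True),
        (2, 2, True),
        (3, 3, False),
        (4, 4, True),
    ]
    print(build_strokes(frames))
```

Each resulting stroke can then be drawn as a polyline over the video frame by the renderer of your choice.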

Animoji

Based on proprietary face recognition and expression recognition algorithms, Queen SDK captures changes in face angle and expression and drives the selected Animoji to mirror them. This creates a playful, entertaining effect. Animoji supports 51 different expressions, including winking, blinking, and mouth, eye, and eyebrow movements.

High-fidelity background

High-fidelity background reduces the impact on the background tone and texture during retouching in common scenarios. By default, high-fidelity background is enabled for skin whitening, rosy cheeks, skin smoothing, image sharpening, and whole face retouching. You do not need to configure related parameters.