DataWorks is an important platform as a service (PaaS) provided by Alibaba Cloud. DataWorks supports multiple computing engines and storage engines. This topic describes how to use DataWorks to migrate offline data from ApsaraDB for MongoDB to LindormTable.

Background information

For information about DataWorks, see What is DataWorks?

Considerations

To migrate offline data from ApsaraDB for MongoDB to LindormTable, unnest the nested JSON fields in the offline data. No other data conversion is required.
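To make "unnesting" concrete, the following minimal Python sketch flattens a nested document into top-level fields with dot-separated names. It only illustrates the idea and is not part of the DataWorks workflow: in the synchronization task itself, the reader configuration later in this topic addresses nested fields by dotted names such as map.a. The function name `flatten` and the sample document are assumptions made for illustration.

```python
from typing import Any, Dict


def flatten(doc: Dict[str, Any], prefix: str = "") -> Dict[str, Any]:
    """Flatten nested dicts into one level, joining keys with dots."""
    flat: Dict[str, Any] = {}
    for key, value in doc.items():
        path = f"{prefix}.{key}" if prefix else key
        if isinstance(value, dict):
            # Recurse into the sub-document and keep the dotted path.
            flat.update(flatten(value, path))
        else:
            flat[path] = value
    return flat


# Hypothetical document with one nested field, similar in shape to the
# sample data used in this topic.
sample = {
    "title": "ApsaraDB for MongoDB tutorial",
    "map": {"a": "mapa", "b": "mapb"},
    "likes": 100,
}
flat_sample = flatten(sample)
print(flat_sample)
```

Each flattened key can then be mapped to one column of the destination table.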

If you want to process data during the migration, for example to perform MD5 hashing on the primary key, perform the following steps:

Use DataWorks to migrate the data from ApsaraDB for MongoDB to MaxCompute. MaxCompute is also known as Open Data Processing Service (ODPS).

Execute SQL statements to process data in MaxCompute.

Use DataWorks to migrate data from MaxCompute to LindormTable.
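As an illustration of the kind of processing mentioned above, the following Python sketch shows what MD5 hashing of a primary key produces. In the three-step flow the transformation would be written as SQL in MaxCompute; this sketch only demonstrates the result of the transformation, and the function name `md5_key` is a hypothetical helper.

```python
import hashlib


def md5_key(primary_key: str) -> str:
    """Return the 32-character hexadecimal MD5 digest of a primary key."""
    return hashlib.md5(primary_key.encode("utf-8")).hexdigest()


# The same input always yields the same fixed-length key.
hashed = md5_key("ApsaraDB for MongoDB tutorial")
print(len(hashed), hashed)
```

Hashing the primary key in this way yields fixed-length keys. Note that the original key value cannot be recovered from the hash, so keep it in a separate column if you still need it.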

Preparations

Before you migrate offline data from ApsaraDB for MongoDB to LindormTable, complete the following preparations:

Prepare the data that you want to migrate in ApsaraDB for MongoDB:

{
  "id" : ObjectId("624573dd7c0e2eea4cc8****"),
  "title" : "ApsaraDB for MongoDB tutorial",
  "description" : "ApsaraDB for MongoDB is a NoSQL database",
  "by" : "beginner tutorial",
  "url" : "http://www.runoob.com",
  "map" : {
    "a" : "mapa",
    "b" : "mapb"
  },
  "likes" : 100
}

Prepare schema data in LindormTable:

CREATE TABLE t1(title varchar, desc varchar, by1 varchar, url varchar, a varchar, b varchar, likes int, primary key(title));

Use the Data Integration service of DataWorks to configure a DataX task. For more information, see Use DataWorks to configure synchronization tasks in DataX.

Procedure

Configure a MongoDB data source in the DataWorks console. For more information, see Add a MongoDB data source.

Configure a batch synchronization task by using the code editor. For more information, see Configure a batch synchronization task by using the code editor.

Create a workflow.

Log on to the DataWorks console.

In the left-side navigation pane, click Workspace.

In the top navigation bar, select the region in which the desired workspace resides. On the Workspaces page, find the workspace that you want to manage and open DataStudio from the Actions column.

On the DataStudio page, move the pointer over the icon and select Create Workflow.

In the Create Workflow dialog box, specify Workflow Name and Description.

Note: The name must be 1 to 128 characters in length and can contain letters, digits, underscores (_), and periods (.).

Click Create.

Create a batch synchronization node.

Click the new workflow and right-click Data Integration.

Choose the menu option for creating a batch synchronization node.

In the Create Node dialog box, specify the Name parameter.

Note: The node name must be 1 to 128 characters in length and can contain letters, digits, underscores (_), and periods (.).

Click Submit.
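The naming rule stated in the notes for workflow names and node names can be checked before you enter a name in the console. The following Python sketch is one possible reading of that rule. It assumes that "letters" means ASCII letters only, which the notes do not state explicitly, and the helper name `is_valid_name` is hypothetical.

```python
import re

# 1 to 128 characters: letters, digits, underscores (_), and periods (.).
# Assumption: only ASCII letters are counted as letters.
NAME_PATTERN = re.compile(r"[A-Za-z0-9_.]{1,128}")


def is_valid_name(name: str) -> bool:
    """Return True if the whole name satisfies the documented rule."""
    return NAME_PATTERN.fullmatch(name) is not None


print(is_valid_name("mongo_to_lindorm.v1"))
print(is_valid_name("bad-name"))
```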

Configure the reader and writer of the batch synchronization node.

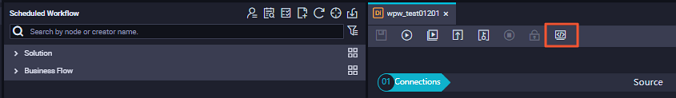

On the node configuration tab that appears, click the Conversion script icon in the top toolbar.

In the Tips message, click OK to open the code editor.

The code editor has generated basic reader and writer settings. You can manually configure the data sources of the reader and writer for the batch synchronization node and specify the information about the tables to be synchronized. The following sample code provides an example.

Note: For information about the parameters of MongoDB Reader, see MongoDB Reader.

For information about the parameters of Lindorm Writer, see Lindorm Writer.

{
  "type": "job",
  "version": "2.0",
  "steps": [
    {
      "stepType": "mongodb",
      "parameter": {
        "datasource": "test_mongo", // The name of the ApsaraDB for MongoDB data source.
        "column": [
          { "name": "title", "type": "string" },
          { "name": "description", "type": "string" },
          { "name": "by", "type": "string" },
          { "name": "url", "type": "string" },
          { "name": "map.a", "type": "document.string" },
          { "name": "map.b", "type": "document.string" },
          { "name": "likes", "type": "int" }
        ],
        "collectionName": "testdatax"
      },
      "name": "Reader",
      "category": "reader"
    },
    {
      "stepType": "lindorm",
      "parameter": {
        "configuration": {
          "lindorm.client.seedserver": "ld-xxxx-proxy-lindorm.lindorm.rds.aliyuncs.com:30020",
          "lindorm.client.username": "root",
          "lindorm.client.namespace": "test",
          "lindorm.client.password": "root"
        },
        "nullMode": "skip",
        "datasource": "",
        "writeMode": "api",
        "envType": 1,
        "columns": [
          "title",
          "desc",
          "by1",
          "url",
          "a",
          "b",
          "likes"
        ],
        "dynamicColumn": "false",
        "table": "t1",
        "encoding": "utf8"
      },
      "name": "Writer",
      "category": "writer"
    }
  ],
  "setting": {
    "executeMode": null,
    "errorLimit": {
      "record": ""
    },
    "speed": {
      "concurrent": 2,
      "throttle": false
    }
  },
  "order": {
    "hops": [
      {
        "from": "Reader",
        "to": "Writer"
      }
    ]
  }
}

After you configure the batch synchronization node, save the node configurations and click the icon in the upper-left part of the code editor. On the Runtime Log tab, you can view the progress of the migration task.
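Before you run the node, it can help to check that the reader and writer list the same number of columns. The following Python sketch pairs the columns of a trimmed-down job configuration. It assumes that reader columns are mapped to writer columns by position, which this topic does not state explicitly, and the function name `column_pairs` is hypothetical. The sketch runs locally and does not call any DataWorks API.

```python
import json
from typing import Any, Dict, List, Tuple

# Trimmed-down job configuration with only the fields the check needs.
# Remove any // comments before parsing: standard JSON does not allow them.
JOB_TEXT = """
{
  "steps": [
    {"category": "reader", "parameter": {"column": [
      {"name": "title", "type": "string"},
      {"name": "map.a", "type": "document.string"},
      {"name": "likes", "type": "int"}
    ]}},
    {"category": "writer", "parameter": {"columns": ["title", "a", "likes"]}}
  ]
}
"""


def column_pairs(job: Dict[str, Any]) -> List[Tuple[str, str]]:
    """Pair reader and writer columns by position (assumed mapping)."""
    params = {step["category"]: step["parameter"] for step in job["steps"]}
    reader_cols = [col["name"] for col in params["reader"]["column"]]
    writer_cols = list(params["writer"]["columns"])
    if len(reader_cols) != len(writer_cols):
        raise ValueError(
            f"reader has {len(reader_cols)} columns, "
            f"writer has {len(writer_cols)}"
        )
    return list(zip(reader_cols, writer_cols))


job = json.loads(JOB_TEXT)
for source, target in column_pairs(job):
    print(f"{source} -> {target}")
```

A mismatch in the column counts raises a ValueError, which is easier to diagnose locally than a failed synchronization run.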