The Lindorm compute engine includes the Spark UI, which you can use to view detailed information about Spark jobs, such as their running status, submission time, and resource usage.

Access the Spark UI

To obtain the Spark UI address, see View the Spark UI address. Then copy the address into the address bar of a browser to open the Spark UI.

For more information about the open source Spark UI, see Open source Spark UI.

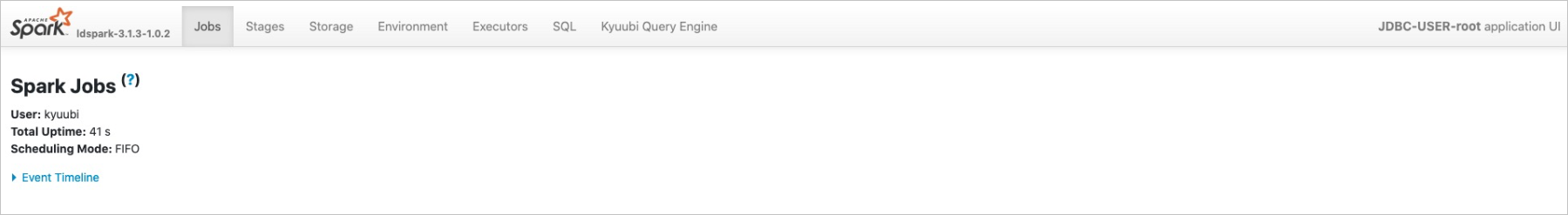

Table 1. Spark UI tabs

| Tab | Description |
| --- | --- |
| Jobs | Details about all running jobs. |
| Stages | Status information for all stages of a Spark job. |
| Storage | Storage information for persisted RDDs and DataFrames. |
| Environment | The environment in which the Spark job runs. It is built when the job starts, from the runtime environment, configuration files, and user-specified parameters. |
| Executors | Status information for the executors of the Spark job. |
| SQL | Detailed information about the SQL statements that the compute engine executes. |
| Kyuubi Query Engine | JDBC session information. |
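Most of the tabs in Table 1 correspond to endpoints of the open source Spark monitoring REST API, which the open source Spark UI serves under `/api/v1`. The following Python sketch assumes, without confirmation here, that the Lindorm Spark UI address also exposes these standard endpoints. It builds the URL that holds the data for each tab and fetches it as JSON. The names `TAB_ENDPOINTS`, `applications_url`, `tab_url`, and `fetch_json` are hypothetical helpers, and authentication, if your address requires it, is not handled.

```python
import json
import urllib.request
from typing import Any, Dict
from urllib.parse import quote

# Hypothetical mapping from Spark UI tabs to open source Spark monitoring
# REST API paths. ASSUMPTION: the Lindorm Spark UI address proxies /api/v1.
# The Kyuubi Query Engine tab has no counterpart there, so it is omitted.
TAB_ENDPOINTS: Dict[str, str] = {
    "Jobs": "jobs",
    "Stages": "stages",
    "Storage": "storage/rdd",
    "Environment": "environment",
    "Executors": "executors",  # active executors only
    "SQL": "sql",  # available in recent Spark versions
}


def applications_url(ui_address: str) -> str:
    """Return the URL that lists the applications served by this UI."""
    return ui_address.rstrip("/") + "/api/v1/applications"


def tab_url(ui_address: str, app_id: str, tab: str) -> str:
    """Return the REST URL holding the data shown on the given UI tab."""
    if tab not in TAB_ENDPOINTS:
        raise ValueError(f"no REST endpoint mapped for tab {tab!r}")
    app = quote(app_id, safe="")  # escape the application ID for the path
    return f"{applications_url(ui_address)}/{app}/{TAB_ENDPOINTS[tab]}"


def fetch_json(url: str, timeout: float = 10.0) -> Any:
    """Send a GET request and decode the JSON body (no authentication)."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)
```

For example, `fetch_json(applications_url(address))` returns a list of applications in open source Spark; the `id` field of an entry can then be passed to `tab_url` together with a tab name such as `"Jobs"`.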