This topic describes how to use Lindorm streams to implement data subscription in Push mode. After you create a Lindorm stream for a table in your Lindorm database, the change tracking feature is enabled for the table. The change tracking feature records each insert, update, and delete operation that is performed on the table, and the incremental data from these operations is pushed to the Message Queue for Apache Kafka topic that you specify when you create the Lindorm stream. You can then consume the incremental data to build or implement business services that meet your requirements.
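As a rough illustration of what consuming the incremental data can look like, the following Python sketch keeps an in-memory copy of a table in sync by replaying insert, update, and delete records in the order they arrive. The `ChangeRecord` and `InMemoryReplica` types are hypothetical; how records are actually decoded from the Kafka messages depends on the message format configured for the stream.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Optional


@dataclass
class ChangeRecord:
    """Hypothetical decoded change record; not a Lindorm or Kafka API type."""
    op: str                                    # "insert", "update", or "delete"
    key: Any                                   # primary key value of the affected row
    new_row: Optional[Dict[str, Any]] = None   # row values after the operation


@dataclass
class InMemoryReplica:
    """Applies change records to a dict keyed by primary key."""
    rows: Dict[Any, Dict[str, Any]] = field(default_factory=dict)

    def apply(self, record: ChangeRecord) -> None:
        if record.op in ("insert", "update"):
            if record.new_row is None:
                raise ValueError(f"{record.op} record for key {record.key!r} has no row data")
            # Upsert: an update for a key not seen yet is treated like an insert.
            self.rows[record.key] = dict(record.new_row)
        elif record.op == "delete":
            # Deleting a key that was never seen is a no-op.
            self.rows.pop(record.key, None)
        else:
            raise ValueError(f"unknown operation: {record.op!r}")


if __name__ == "__main__":
    replica = InMemoryReplica()
    for rec in [
        ChangeRecord("insert", "k1", {"name": "a"}),
        ChangeRecord("insert", "k2", {"name": "b"}),
        ChangeRecord("update", "k1", {"name": "a2"}),
        ChangeRecord("delete", "k2"),
    ]:
        replica.apply(rec)
    print(replica.rows)  # {'k1': {'name': 'a2'}}
```

Because each record overwrites or removes the row for its key, applying records for the same key out of order could leave the copy in a wrong state, so a consumer like this relies on receiving them in operation order.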

Process

The following figure shows how the messages of incremental data in a Lindorm table are pushed to Message Queue for Apache Kafka.

Prerequisites

The IP addresses of your Lindorm client and Message Queue for Apache Kafka client are added to the whitelist of your Lindorm instance. For more information, see Configure whitelists.

The source Lindorm instance and the destination Message Queue for Apache Kafka instance are connected to Lindorm Tunnel Service (LTS). For more information, see Network connection.

A LindormTable data source is created. For more information, see Add a LindormTable data source.

A Kafka data source is created. For more information, see Add a Kafka data source.

The change tracking feature is enabled. For more information, see Enable change tracking.

Create a Lindorm stream

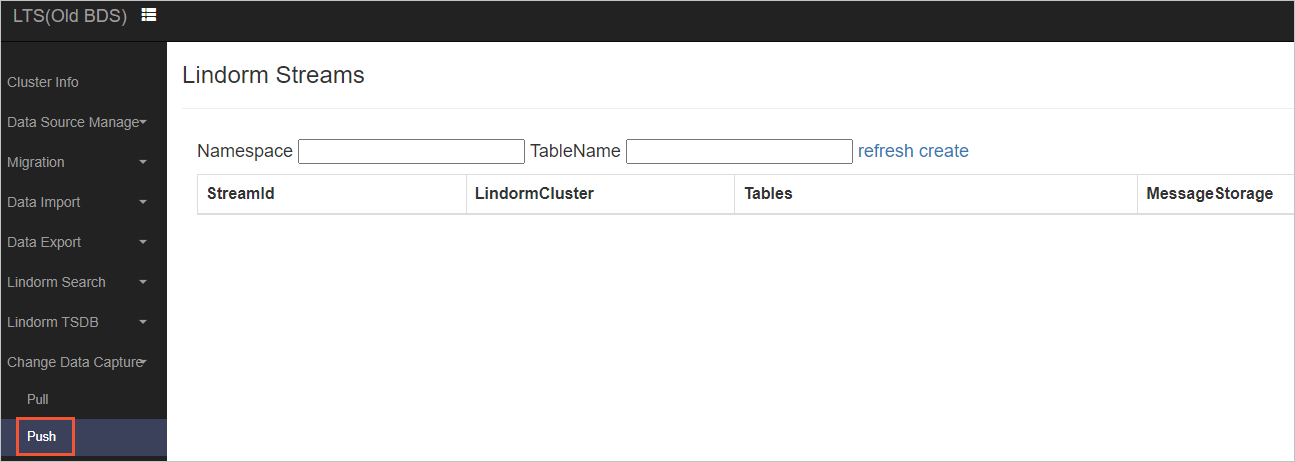

Log on to the LTS web user interface (UI) of your Lindorm instance. In the left-side navigation pane, choose Change Data Capture > Push.

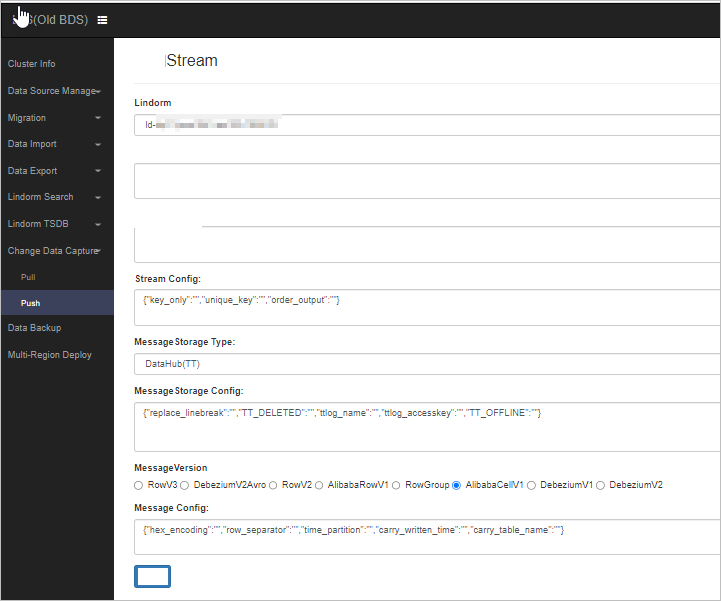

On the page that appears, click Create. Then, configure the parameters described in the following table.

| Parameter | Description |
| --- | --- |
| Lindorm Cluster | Select the created LindormTable data source. |
| Table Name | Enter the name of the table whose incremental data you want to subscribe to, in the `Namespace.Table name` format. Examples: `ns1.table1` specifies the table1 table in the ns1 namespace; `ns2.*` specifies all tables in the ns2 namespace. |
| Stream Config | `key_only`: specifies whether to export only the values of the primary key. Default value: false. `order_output`: specifies whether to record insert, update, and delete operations in order based on the values of the primary key. Default value: false. |
| MessageStorage Type | Select KAFKA. |
| Storage Datasource | Select the created Kafka data source. |
| MessageStorage Config | `kafka_topic`: specifies the name of the Message Queue for Apache Kafka topic to which the change tracking data is sent. |
| MessageVersion | The data format. Default value: DebeziumV1. |
| Message Config | `old_image`: specifies whether the stream record that Lindorm generates for an insert, update, or delete operation includes the row data as it was before the operation. Default value: true. `new_image`: specifies whether the stream record that Lindorm generates for an insert, update, or delete operation includes the row data as it is after the operation. Default value: true. |
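The `Namespace.Table name` format of the table name parameter can be illustrated with a small matcher. This Python sketch is hypothetical and only encodes the two forms described in the table: `namespace.table` selects one table, and `namespace.*` selects all tables in a namespace. It is not the matching logic that LTS uses internally.

```python
def matches(pattern: str, namespace: str, table: str) -> bool:
    """Return True if namespace.table is selected by a Namespace.Table name pattern.

    Hypothetical helper: supports only "ns.table" and "ns.*".
    """
    # Split on the first "." into the namespace part and the table part.
    ns_part, sep, table_part = pattern.partition(".")
    if not sep or not ns_part or not table_part:
        raise ValueError(f"expected Namespace.Table name format, got {pattern!r}")
    if ns_part != namespace:
        return False
    # "*" selects every table in the namespace.
    return table_part == "*" or table_part == table


if __name__ == "__main__":
    print(matches("ns1.table1", "ns1", "table1"))  # True
    print(matches("ns1.table1", "ns1", "table2"))  # False
    print(matches("ns2.*", "ns2", "orders"))       # True
    print(matches("ns2.*", "ns1", "orders"))       # False
```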

Click Commit.
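After the stream is committed, you may want to inspect the records that arrive in the topic specified by `kafka_topic`. The following Python sketch decodes the value of one such message. It assumes that the DebeziumV1 format follows the standard Debezium change-event envelope (`op`, `before`, and `after` fields, optionally wrapped in `payload`); this layout is an assumption not confirmed by this topic, so compare it with real messages from your topic. The function name `decode_change` is hypothetical.

```python
import json
from typing import Any, Dict, Optional, Tuple

# Standard Debezium operation codes; assumed, not confirmed, for Lindorm's DebeziumV1 output.
OP_NAMES = {"c": "insert", "u": "update", "d": "delete", "r": "read"}


def decode_change(
    message_value: bytes,
) -> Tuple[str, Optional[Dict[str, Any]], Optional[Dict[str, Any]]]:
    """Decode a Debezium-style change event into (operation, before, after)."""
    event = json.loads(message_value)
    # Debezium events can be wrapped as {"schema": ..., "payload": ...}.
    if isinstance(event, dict) and isinstance(event.get("payload"), dict):
        event = event["payload"]
    if not isinstance(event, dict):
        raise ValueError("message value is not a JSON object change event")
    op = OP_NAMES.get(event.get("op"), "unknown")
    # "before" or "after" may be null or missing, for example when
    # old_image or new_image is set to false for the stream.
    return op, event.get("before"), event.get("after")


if __name__ == "__main__":
    sample = b'{"op": "u", "before": {"id": 1, "v": "a"}, "after": {"id": 1, "v": "b"}}'
    print(decode_change(sample))  # ('update', {'id': 1, 'v': 'a'}, {'id': 1, 'v': 'b'})
```

The message values can be read with any Kafka consumer client; the sketch deliberately leaves the consumer out so that the decoding step can be checked on its own.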