You can use the rules engine to forward data from IoT Platform to Message Queue for Apache Kafka for storage. This ensures high-reliability data transmission from devices to IoT Platform, Message Queue for Apache Kafka, and application servers. This topic describes the data forwarding process. In this example, a Thing Specification Language (TSL) communication topic is used as a source topic.

Prerequisites

A data source named DataSource is created and a TSL communication topic is added to the data source. For more information, see Create a data source.

An ApsaraMQ for Kafka instance and a topic that can be used to receive data are created. For more information about how to use ApsaraMQ for Kafka, see Overview.

Important: The ApsaraMQ for Kafka instance must reside in the same region as the current IoT Platform instance.

The current Alibaba Cloud account is added to the whitelist that allows data forwarding to ApsaraMQ for Kafka. To apply for whitelist permissions, submit a ticket.

Background information

After you configure the data destination, the following configurations are automatically completed. Then, you can forward device data to ApsaraMQ for Kafka by using the rules engine of IoT Platform.

Two IP addresses of the vSwitch to which the ApsaraMQ for Kafka instance is connected are assigned to IoT Platform.

A managed security group is created in the virtual private cloud (VPC) in which the ApsaraMQ for Kafka instance resides. By default, the name of the security group starts with sg-nsm-.

Create a data destination

- Log on to the IoT Platform console.

On the Overview page, click All environment. On the All environment tab, find the instance that you want to manage and click the instance ID or instance name.

- In the left-side navigation pane, choose Message Forwarding > Data Forwarding.

In the upper-right corner of the Data Forwarding page, click Go to New Version to go to the new version.

Note: If you have already performed this step, the Data Forwarding page of the new version appears after you choose Message Forwarding > Data Forwarding.

- Click the Data Destination tab. On this tab, click Create Data Destination.

In the Create Data Destination dialog box, enter a data destination name. In this example, DataPurpose is used. Configure the parameters and click OK.

| Parameter | Description |
| --- | --- |
| Operation | Select Send Data to Message Queue for Apache Kafka. |
| Role | Authorize IoT Platform to write data to ApsaraMQ for Kafka. If you do not have Resource Access Management (RAM) roles, click Create RAM Role to go to the RAM console, create a RAM role, and then grant permissions to the role. For more information, see Create a RAM role. |
| Region | Select the region where your IoT Platform instance resides. |
| Instance | Select the ApsaraMQ for Kafka instance. You can click Create Instance to go to the ApsaraMQ for Kafka console and create an ApsaraMQ for Kafka instance. For more information, see Create an instance. |
| Topic | Select the ApsaraMQ for Kafka topic that you want to use to receive data from IoT Platform. You can click Create Topic to go to the ApsaraMQ for Kafka console and create an ApsaraMQ for Kafka topic. For more information, see Create a topic. |

Configure and start a parser

Create a parser named DataParser. For more information, see Create a parser.

On the Parser Details page, associate the parser with the created data source.

In the Data Source step of the wizard, click Associate Data Source.

In the dialog box that appears, select DataSource from the Data Source drop-down list, and then click OK.

On the Parser Details page, associate the parser with the created data destination.

Click Data Destination in the wizard. In the Data Destination section, click Associate Data Destination.

In the dialog box that appears, select DataPurpose from the Data Destination drop-down list, and then click OK.

In the Data Destination section, view and save the data destination ID. In this example, the ID is 1000.

When you write the parser script, you must use the data destination ID.

- On the Parser Details page, click Parser.

In the code editor, enter a script. For more information about how to edit a script, see Script syntax.

For more information about function parameters, see Functions.

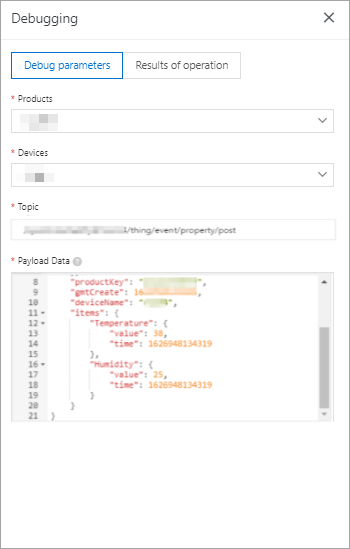

    // Use the payload() function to obtain the data that is submitted by devices and convert the data to JSON-formatted data.
    var data = payload("json");
    // Forward submitted TSL data.
    writeKafka(1000, data, "debug");

Click Debugging. In the panel that appears, select a product and a device, specify a topic, and then enter payload data to check whether the script runs as expected.

The following figure shows the parameters.

The following result indicates that the script runs as expected.

    action: transmit to kafka[destinationId=1000], data:{"deviceType":"CustomCategory","iotId":"JCp9u***","requestId":"1626948228247","checkFailedData":{},"productKey":"a1o***","gmtCreate":1626948134445,"deviceName":"Device1","items":{"Temperature":{"time":1626948134319,"value":38},"Humidity":{"time":1626948134319,"value":25}}}
    variables:
    data : {"deviceType":"CustomCategory","iotId":"JCp9u***","requestId":"1626948228247","checkFailedData":{},"productKey":"a1o***","gmtCreate":1626948134445,"deviceName":"Device1","items":{"Temperature":{"time":1626948134319,"value":38},"Humidity":{"time":1626948134319,"value":25}}}

Click Publish.
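To reason about what the example script forwards before you debug it in the console, you can run the same two statements locally. The following is a minimal sketch for Node.js, not the IoT Platform runtime: the payload() and writeKafka() functions defined here are hypothetical stand-ins for the built-in functions, the sample message is a shortened copy of the debugging data, and the third argument of writeKafka() is assumed to be the Kafka message key. See Functions for the actual parameter definitions.

```javascript
// Shortened sample of a TSL property message, taken from the debugging result.
const rawMessage = JSON.stringify({
  deviceName: "Device1",
  items: {
    Temperature: { time: 1626948134319, value: 38 },
    Humidity: { time: 1626948134319, value: 25 }
  }
});

// Records every simulated writeKafka() call so that it can be inspected.
const forwarded = [];

// Hypothetical stand-in for the built-in payload() function.
// Only the "json" format used by the example script is simulated.
function payload(format) {
  if (format !== "json") {
    throw new Error("unsupported format in this stub: " + format);
  }
  return JSON.parse(rawMessage);
}

// Hypothetical stand-in for the built-in writeKafka() function.
// Assumption: the third argument is the Kafka message key.
function writeKafka(destinationId, value, key) {
  forwarded.push({ destinationId: destinationId, value: value, key: key });
}

// Script body, unchanged from the example in this topic.
var data = payload("json");
writeKafka(1000, data, "debug");

console.log(JSON.stringify(forwarded[0]));
```

The stubs only record calls. They do not contact Kafka, so the sketch verifies the script logic and not the data destination configuration.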

Go to the Parser tab of the Data Forwarding page. Find the DataParser parser and click Start in the Actions column to start the parser.
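After the parser is started, an application that consumes the Kafka topic must decode the forwarded messages. The following sketch shows one way to do this, assuming that each message value arrives as the JSON text shown in the debugging result. The extractReadings() helper and its output shape are hypothetical and are not part of IoT Platform or ApsaraMQ for Kafka. The Kafka consumer that supplies the message value is omitted.

```javascript
// Sample message value, copied from the debugging result in this topic.
const sampleValue =
  '{"deviceType":"CustomCategory","iotId":"JCp9u***","requestId":"1626948228247",' +
  '"checkFailedData":{},"productKey":"a1o***","gmtCreate":1626948134445,' +
  '"deviceName":"Device1","items":{"Temperature":{"time":1626948134319,"value":38},' +
  '"Humidity":{"time":1626948134319,"value":25}}}';

// Hypothetical helper: flattens the "items" object of a forwarded TSL
// property message into one record per property.
function extractReadings(messageValue) {
  const message = JSON.parse(messageValue);
  const items = message.items || {};
  return Object.keys(items).map(function (name) {
    return {
      deviceName: message.deviceName,
      property: name,
      value: items[name].value,
      time: items[name].time
    };
  });
}

const readings = extractReadings(sampleValue);
console.log(readings);
```

Each record carries the device name, so readings from different devices can be stored in the same table or collection.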