Hologres works with Data Lake Formation (DLF) and Object Storage Service (OSS) to provide the data lake acceleration service. The service supports flexible data access and analysis and delivers efficient data processing capabilities. This significantly accelerates queries and analysis of data in OSS data lakes.

Background information

As the digital transformation of enterprises develops, the amount of data increases exponentially. Traditional data analysis faces great challenges in terms of costs, data amount, and data diversity. Hologres works with DLF and OSS to provide the data lake acceleration service based on the data lakehouse architecture. The service helps enterprises implement cost-effective storage of a large amount of data, centralized metadata management, and efficient data analysis and data insights.

Hologres is seamlessly integrated with DLF and OSS to directly accelerate the reads and writes of data in OSS by using foreign tables. Foreign tables are used to map fields rather than store data. The following data formats are supported: Apache Hudi, Delta Lake, Paimon, ORC, Parquet, CSV, and SequenceFile. This eliminates the need for data migration, reduces development and O&M costs, breaks down data silos, and achieves business insights. Two types of Hologres instances are available: Hologres exclusive instances and pay-as-you-go Hologres Shared Cluster instances. For more information, see Purchase a Hologres instance.

The following table describes the Alibaba Cloud services involved in the real-time data lake solution.

Service | Description | Reference |

DLF | Alibaba Cloud DLF is a fully managed service that helps you build data lakes and data lakehouses in the cloud. DLF provides centralized metadata management, centralized permission and security management, and convenient data ingestion and exploration capabilities for data lakes in the cloud. | |

OSS | DLF uses OSS as the unified storage of cloud data lakes. OSS is a secure, cost-effective, and highly reliable service that can store large amounts of data and all types of files. OSS can provide 99.9999999999% of data durability and has become the de facto standard for data lake storage. | |

OSS-HDFS (JindoFS) is a cloud-native data lake storage service. OSS-HDFS is seamlessly integrated with compute engines in the Hadoop ecosystem and provides better performance in offline extract, transform, and load (ETL) of big data based on Hive and Spark than native OSS. OSS-HDFS is fully compatible with Hadoop Distributed File System (HDFS) APIs and supports Portable Operating System Interface (POSIX). You can use OSS-HDFS to manage data in data lake-based computing scenarios in the big data and AI fields. |

Usage notes

Hologres Shared Cluster instances do not store data. If you use a Hologres Shared Cluster instance, you can read data from but cannot write data to OSS data lakes by using foreign tables.

Preparations

In this example, OSS, DLF, and Hologres are activated in the China (Shanghai) region.

Activate OSS and prepare the test data.

On the OSS activation page, follow the on-screen instructions to activate OSS.

NoteAfter OSS is activated, the pay-as-you-go billing method is used by default. If you want to reduce OSS fees, we recommend that you purchase OSS resource plans.

Log on to the OSS console and create an OSS bucket. For more information, see Get started by using the OSS console.

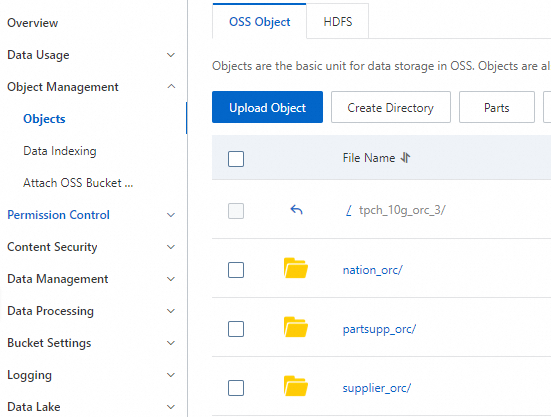

Download the test data file tpch_10g_orc_3.zip and upload the file to the created OSS bucket.

Note

NoteAfter the test data file is uploaded, if files such as

.DS_Storeexist, manually delete them.The test data includes only the nation_orc, supplier_orc, and partsupp_orc files to ensure a short download duration.

Activate DLF and import the test data from OSS to DLF.

Go to the product homepage to activate DLF.

Log on to the DLF console. In the left-side navigation pane, choose Metadata > Metadata. On the Database tab of the page that appears, click Create Database. Then, configure the parameters and click OK. For more information, see Create a metadatabase in the "Metadata management" topic.

In this example, the

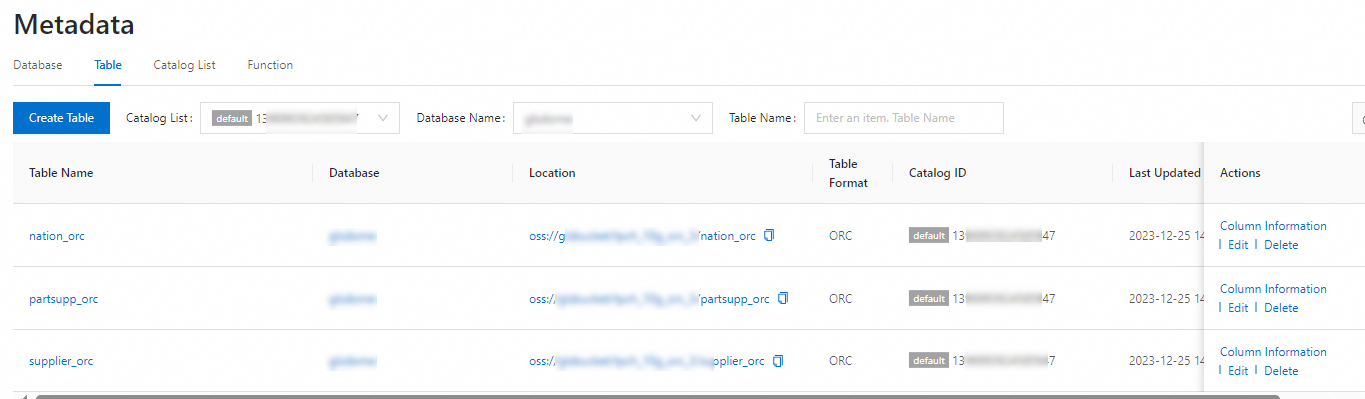

mydatabasedatabase is created.On the Metadata Discovery page, create a metadata extraction task to import the test data from OSS to DLF. For more information, see Metadata discovery.

After the metadata is extracted, you can view the metadata on the Table tab of the Metadata page.

Activate Hologres and purchase a Hologres instance. For more information, see Purchase a Hologres instance.

NoteIf you are a new user, you can go to the Alibaba Cloud Free Trial page to apply for a free trial of Hologres.

Step 1. Configure the environment

Enable data lake acceleration for the Hologres instance.

Go to the Instances page in the Hologres console. Find the desired instance and click Data Lake Acceleration in the Actions column. In the message that appears, click Enable. After data lake acceleration is enabled, the Hologres instance is restarted.

Log on to the Hologres instance and create a database. For more information, see Connect to HoloWeb and perform queries.

Optional. Install an extension. In this example, the extension

dlf_fdwis installed.NoteExtensions are automatically installed for Hologres instances of V2.1 and later. To check the version of your Hologres instance, go to the Instances page and click your instance to navigate to the Instance Details page.

CREATE EXTENSION IF NOT EXISTS dlf_fdw;NoteOn the SQL Editor tab of the HoloWeb console, execute the preceding statement as a superuser to install the extension. An extension is installed at the database level. For each database, you need to install an extension only once. For more information about account authorization in Hologres, see Grant permissions to a service account.

Execute the following statement to create a foreign server named

dlf_serverand configure endpoints of DLF and OSS to ensure that Hologres, DLF, and OSS can access each other. For more information about how to create a foreign server, see Use DLF to read data from and write data to OSS.-- Create a foreign server in the China (Shanghai) region. CREATE SERVER IF NOT EXISTS dlf_server FOREIGN data wrapper dlf_fdw options ( dlf_region 'cn-shanghai', dlf_endpoint 'dlf-share.cn-shanghai.aliyuncs.com', oss_endpoint 'oss-cn-shanghai-internal.aliyuncs.com');

Step 2: Query data in an OSS data lake by using Hologres foreign tables

Hologres foreign tables only store mappings of data between Hologres and OSS data lakes. Data is stored in OSS data lakes, and no storage space in Hologres is occupied. This way, queries can be responded in seconds or minutes.

Create Hologres foreign tables and map data from the OSS data lake to the Hologres foreign tables.

IMPORT FOREIGN SCHEMA mydatabase LIMIT TO ----Replace mydatabase with the name of the database that you create on the Database tab of the Metadata page. ( nation_orc, supplier_orc, partsupp_orc ) FROM SERVER dlf_server INTO public options (if_table_exist 'update');Query data.

After the foreign tables are created, you can query data in OSS by using the foreign tables. Sample statement:

--TPC Benchmark-H (TPC-H) Q11 statement select ps_partkey, sum(ps_supplycost * ps_availqty) as value from partsupp_orc, supplier_orc, nation_orc where ps_suppkey = s_suppkey and s_nationkey = n_nationkey and RTRIM(n_name) = 'EGYPT' group by ps_partkey having sum(ps_supplycost * ps_availqty) > ( select sum(ps_supplycost * ps_availqty) * 0.000001 from partsupp_orc, supplier_orc, nation_orc where ps_suppkey = s_suppkey and s_nationkey = n_nationkey and RTRIM(n_name) = 'EGYPT' ) order by value desc;

Step 3 (Optional): Query data in an OSS data lake by using Hologres internal tables

In this step, data is imported from the OSS data lake to Hologres internal tables. Data is stored in Hologres. This achieves better query performance and higher data processing capabilities. For more information about storage fees, see Billing overview.

Create internal tables in Hologres that have the same schemas as the foreign tables. Sample statements:

-- Create a table named NATION. DROP TABLE IF EXISTS NATION; BEGIN; CREATE TABLE NATION ( N_NATIONKEY int NOT NULL PRIMARY KEY, N_NAME text NOT NULL, N_REGIONKEY int NOT NULL, N_COMMENT text NOT NULL ); CALL set_table_property ('NATION', 'distribution_key', 'N_NATIONKEY'); CALL set_table_property ('NATION', 'bitmap_columns', ''); CALL set_table_property ('NATION', 'dictionary_encoding_columns', ''); COMMIT; -- Create a table named SUPPLIER. DROP TABLE IF EXISTS SUPPLIER; BEGIN; CREATE TABLE SUPPLIER ( S_SUPPKEY int NOT NULL PRIMARY KEY, S_NAME text NOT NULL, S_ADDRESS text NOT NULL, S_NATIONKEY int NOT NULL, S_PHONE text NOT NULL, S_ACCTBAL DECIMAL(15, 2) NOT NULL, S_COMMENT text NOT NULL ); CALL set_table_property ('SUPPLIER', 'distribution_key', 'S_SUPPKEY'); CALL set_table_property ('SUPPLIER', 'bitmap_columns', 'S_NATIONKEY'); CALL set_table_property ('SUPPLIER', 'dictionary_encoding_columns', ''); COMMIT; -- Create a table named PARTSUPP. DROP TABLE IF EXISTS PARTSUPP; BEGIN; CREATE TABLE PARTSUPP ( PS_PARTKEY int NOT NULL, PS_SUPPKEY int NOT NULL, PS_AVAILQTY int NOT NULL, PS_SUPPLYCOST DECIMAL(15, 2) NOT NULL, PS_COMMENT text NOT NULL, PRIMARY KEY (PS_PARTKEY, PS_SUPPKEY) ); CALL set_table_property ('PARTSUPP', 'distribution_key', 'PS_PARTKEY'); CALL set_table_property ('PARTSUPP', 'bitmap_columns', 'ps_availqty'); CALL set_table_property ('PARTSUPP', 'dictionary_encoding_columns', ''); COMMIT;Synchronize data from the Hologres foreign tables to the Hologres internal tables.

--- Import data from the Hologres foreign tables to the Hologres internal tables. INSERT INTO nation SELECT * FROM nation_orc; INSERT INTO supplier SELECT * FROM supplier_orc; INSERT INTO partsupp SELECT * FROM partsupp_orc;Query data from the Hologres internal tables.

--TPC-H Q11 statement select ps_partkey, sum(ps_supplycost * ps_availqty) as value from partsupp, supplier, nation where ps_suppkey = s_suppkey and s_nationkey = n_nationkey and RTRIM(n_name) = 'EGYPT' group by ps_partkey having sum(ps_supplycost * ps_availqty) > ( select sum(ps_supplycost * ps_availqty) * 0.000001 from partsupp, supplier, nation where ps_suppkey = s_suppkey and s_nationkey = n_nationkey and RTRIM(n_name) = 'EGYPT' ) order by value desc;

FAQ

What do I do if the error message ERROR: babysitter not ready,req:name:"HiveAccess" is reported when I create a Hologres foreign table?

Cause: Data lake acceleration is not enabled.

Solution: Go to the Instances page, find the desired Hologres instance, and then click Data Lake Acceleration in the Actions column. In the message that appears, click Enable.

References

This topic describes how to accelerate queries on data in data lakes. For more information about the data lake feature, see Use DLF to read data from and write data to OSS.