Serverless Application Center lets you customize the execution steps of a pipeline. By configuring pipelines and orchestrating task flows, you can publish code to Function Compute efficiently. This topic describes how to manage pipelines in the Function Compute console, including how to configure pipelines, how to configure pipeline details, how to view pipeline execution records, and how to update the runtime of a pipeline.

Background

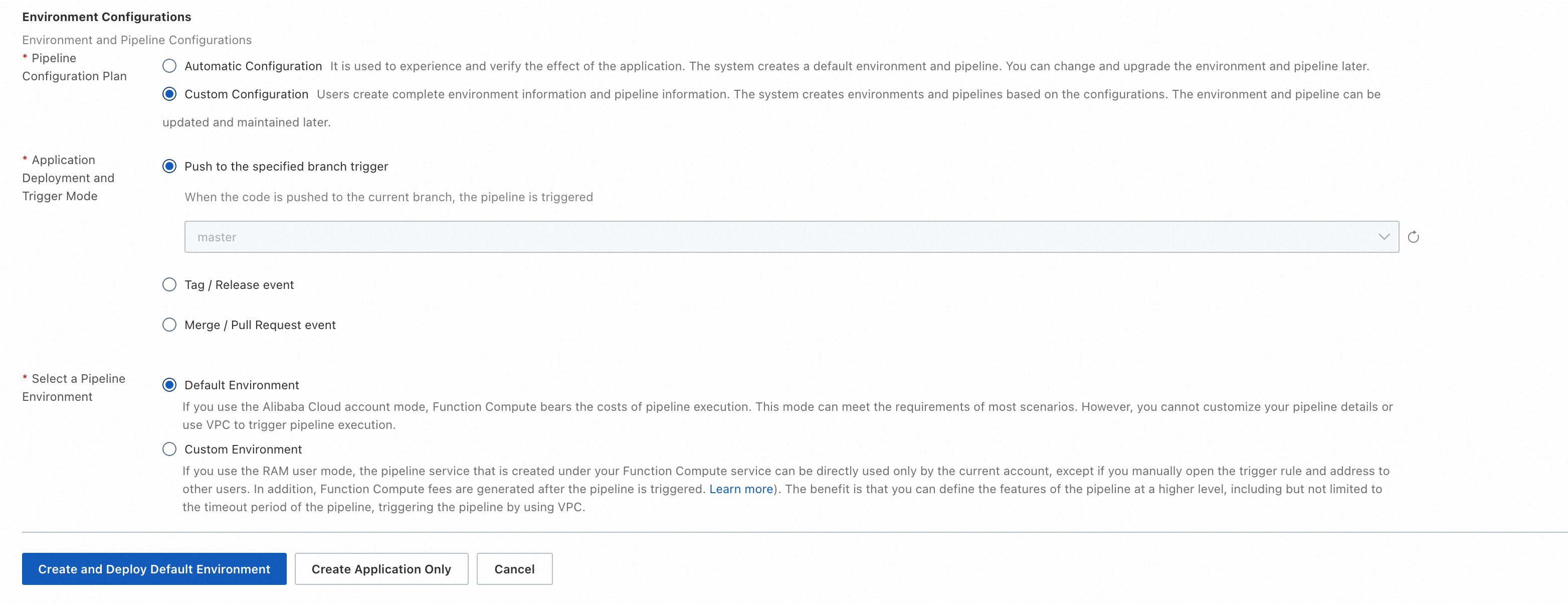

When you create an application, Serverless Application Center creates a default environment for it. When you configure the pipeline of that environment, you can select Automatic Configuration or Custom Configuration. If you select Automatic Configuration, Serverless Application Center creates the pipeline based on default parameter settings. If you select Custom Configuration, you can specify which Git events trigger the pipeline and select the environment in which the pipeline runs. Information such as Git information and application information is passed to the pipeline as its execution context.

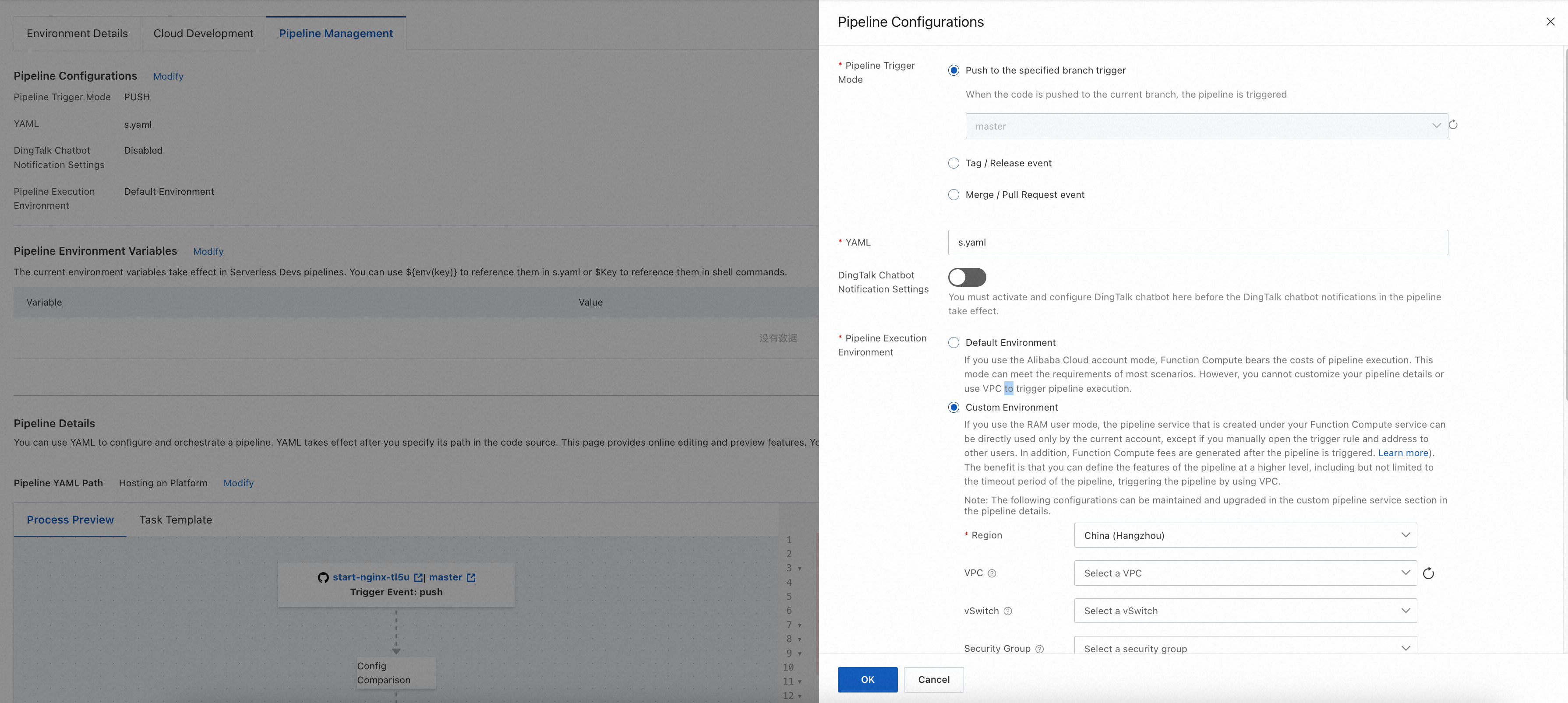

When you later modify the pipeline configurations, you can change the trigger method and the execution environment. You can also configure DingTalk notifications and the YAML file.

Configure a pipeline when you create an application or an environment

When you create an application or an environment, you can specify the Git trigger method and execution environment of the pipeline.

Modify the pipeline of an existing environment

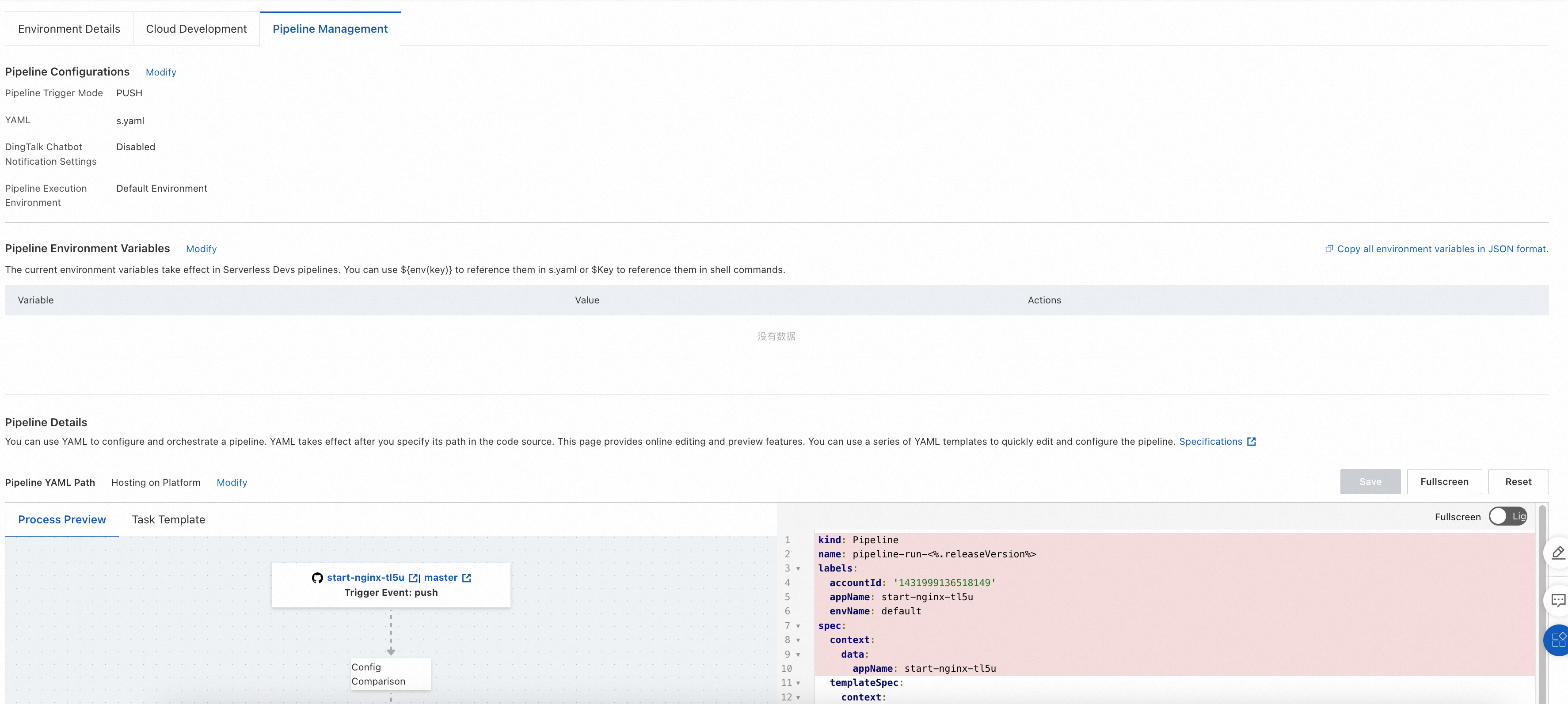

You can change the Git event trigger method, execution environment, DingTalk notifications, and YAML files for a pipeline of an existing environment on the Pipeline Management tab.

Configure a pipeline

A pipeline configuration consists of four parameters: Pipeline Trigger Mode, YAML, DingTalk Chatbot Notification Settings, and Pipeline Execution Environment. When you create an application or an environment, you can configure only Pipeline Trigger Mode and Pipeline Execution Environment. After the application and environment are created, you can modify all four parameters by clicking Modify to the right of Pipeline Configurations on the Pipeline Management tab.

Pipeline Trigger Mode

Serverless Application Center lets you specify which Git events trigger the pipeline. It receives Git events through webhooks. When it receives an event that meets the trigger condition, it creates and executes a pipeline run based on the YAML file that you specify for the pipeline. The following trigger modes are supported:

Triggered by branch: The environment is associated with a specific branch, and every push event to that branch is matched.

Triggered by tag: Every tag creation event whose tag name matches a specific tag expression is matched.

Triggered by branch merging: Merge request and pull request events from a source branch into the destination branch associated with the environment are matched.
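The three trigger modes boil down to three matching rules. The following Python sketch shows them. It is not the platform's implementation. The GitEvent and TriggerRule structures, their field names, and the use of glob syntax for tag expressions are all assumptions made for illustration.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase
from typing import Optional


@dataclass
class GitEvent:
    # Hypothetical, simplified view of a webhook payload.
    kind: str                            # "push", "tag", or "merge_request"
    branch: Optional[str] = None         # pushed branch, or source branch of a merge request
    target_branch: Optional[str] = None  # destination branch of a merge request
    tag: Optional[str] = None            # name of a newly created tag


@dataclass
class TriggerRule:
    # Hypothetical trigger settings of one environment.
    mode: str                            # "branch", "tag", or "merge"
    branch: Optional[str] = None         # associated branch (push) or destination branch (merge)
    source_branch: Optional[str] = None  # source branch for merge triggers
    tag_pattern: Optional[str] = None    # tag expression, assumed to use glob syntax


def matches(rule: TriggerRule, event: GitEvent) -> bool:
    """Return True if the event should start a pipeline run under this rule."""
    if rule.mode == "branch":
        # Every push to the associated branch matches.
        return event.kind == "push" and event.branch == rule.branch
    if rule.mode == "tag":
        # Every tag creation whose name matches the tag expression.
        return (event.kind == "tag" and event.tag is not None
                and rule.tag_pattern is not None
                and fnmatchcase(event.tag, rule.tag_pattern))
    if rule.mode == "merge":
        # Merge/pull requests from the source branch into the associated branch.
        return (event.kind == "merge_request"
                and event.branch == rule.source_branch
                and event.target_branch == rule.branch)
    return False
```

For example, in this sketch a tag rule with the expression release-* matches a new tag release-1.0 but not v1.0.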

Pipeline Execution Environment

You can set this parameter to Default Environment or Custom Environment.

Default Environment

If you set the Pipeline Execution Environment parameter to Default Environment, Serverless Application Center fully manages the pipeline resources, and Function Compute covers the fees incurred during pipeline execution. Each task of the pipeline runs in an independent, sandboxed virtual machine, and Serverless Application Center ensures that the pipeline execution environment is isolated. The default environment has the following limits:

Instance specification: 4 vCPUs and 8 GB of memory.

Temporary disk space: 10 GB.

Task execution timeout period: 15 minutes.

Region: If the environment is created from a template or from source code hosted on GitHub, the pipeline runs in the Singapore region. If the environment is created from Gitee, GitLab, or Codeup, the pipeline runs in the China (Hangzhou) region.

Network: The pipeline has no fixed IP address or CIDR block, so you cannot add it to an IP address whitelist to access specific websites. The pipeline also cannot access resources in a virtual private cloud (VPC).
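The region and timeout limits above can serve as a quick pre-flight check before you choose the default environment. The following is a minimal Python sketch. The function names, the code-source keys, and the idea of estimating task duration up front are hypothetical. ap-southeast-1 and cn-hangzhou are the standard Alibaba Cloud region IDs for Singapore and China (Hangzhou).

```python
from dataclasses import dataclass

# Standard Alibaba Cloud region IDs for Singapore and China (Hangzhou).
SINGAPORE = "ap-southeast-1"
HANGZHOU = "cn-hangzhou"

# Region of the default environment by code source, per the limits above.
DEFAULT_REGION_BY_SOURCE = {
    "template": SINGAPORE,
    "github": SINGAPORE,
    "gitee": HANGZHOU,
    "gitlab": HANGZHOU,
    "codeup": HANGZHOU,
}


@dataclass(frozen=True)
class DefaultEnvLimits:
    vcpus: int = 4
    memory_gb: int = 8
    temp_disk_gb: int = 10
    task_timeout_s: int = 15 * 60  # 15 minutes per task


def default_env_region(code_source: str) -> str:
    """Look up the region for a code source such as 'GitHub' or 'Codeup'."""
    key = code_source.strip().lower()
    if key not in DEFAULT_REGION_BY_SOURCE:
        raise ValueError(f"unknown code source: {code_source!r}")
    return DEFAULT_REGION_BY_SOURCE[key]


def task_fits(expected_seconds: int, limits: DefaultEnvLimits = DefaultEnvLimits()) -> bool:
    """True if a task is expected to finish within the per-task timeout."""
    return expected_seconds <= limits.task_timeout_s
```

A task that needs a VPC, a fixed egress IP, or more than 15 minutes fails this check. Such a task is a candidate for a custom environment.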

Custom Environment

If you set the Pipeline Execution Environment parameter to Custom Environment, the pipeline is executed in your Alibaba Cloud account, and you get more customization options than the default environment provides. Serverless Application Center fully manages tasks in the custom environment and, based on your authorization, schedules Function Compute instances in real time to run the pipeline. Like the default environment, a custom environment is serverless, so you do not need to maintain infrastructure.

Custom environments provide the following custom capabilities:

Region and network: You can specify the region and the VPC for the execution environment to access internal code repositories, artifact repositories, image repositories, and Maven internal repositories. For information about the supported regions, see Supported regions.

Instance specification: You can specify the CPU and memory for the execution environment. For example, you can choose a higher specification to speed up builds.

Note: The ratio of vCPUs to memory (in GB) must be between 1:1 and 1:4.

Persistent storage: You can mount File Storage NAS (NAS) or Object Storage Service (OSS). For example, you can use NAS to cache files to speed up builds.

Log: You can specify a Simple Log Service project and Logstore to persist the execution logs of a pipeline.

Timeout period: You can specify custom timeout periods for pipeline tasks. Default value: 600. Maximum value: 86400. Unit: seconds.
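The vCPU-to-memory ratio and the timeout range are easy to get wrong. You can check a planned specification against the documented limits before you save it. The following is a minimal Python sketch. CustomEnvSpec and validate are hypothetical names. The 1-second lower bound on the timeout is an assumption, because only the default and maximum values are documented. The console may impose further restrictions, such as a fixed set of allowed sizes.

```python
from dataclasses import dataclass
from typing import List

DEFAULT_TIMEOUT_S = 600    # default task timeout
MAX_TIMEOUT_S = 86400      # maximum task timeout


@dataclass
class CustomEnvSpec:
    vcpu: float
    memory_gb: float
    timeout_s: int = DEFAULT_TIMEOUT_S


def validate(spec: CustomEnvSpec) -> List[str]:
    """Return a list of problems; an empty list means the spec looks valid."""
    errors: List[str] = []
    if spec.vcpu <= 0 or spec.memory_gb <= 0:
        errors.append("vCPU and memory must be positive")
    else:
        gb_per_vcpu = spec.memory_gb / spec.vcpu
        # vCPU:memory(GB) must be between 1:1 and 1:4.
        if not 1 <= gb_per_vcpu <= 4:
            errors.append(f"vCPU:memory ratio 1:{gb_per_vcpu:g} is outside 1:1 to 1:4")
    # The lower bound of 1 second is an assumption; only the maximum is documented.
    if not 1 <= spec.timeout_s <= MAX_TIMEOUT_S:
        errors.append(f"timeout must be between 1 and {MAX_TIMEOUT_S} seconds")
    return errors
```

For example, 4 vCPUs with 16 GB of memory (1:4) passes, but 2 vCPUs with 16 GB (1:8) does not.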

In a custom environment, pipeline tasks run on Function Compute in your account, so you are charged for the resources they consume. For more information, see Billing overview.

YAML

Serverless Application Center is integrated with Serverless Devs. You can use the YAML files of Serverless Devs to declare the resource configurations of your applications. For information about the specifications of YAML files, see YAML specifications. The default YAML file is named s.yaml. You can also specify another YAML file. After you specify the YAML file, you can use it in the pipeline by using the following methods:

If you use the @serverless-cd/s-deploy plug-in, the plug-in deploys resources by using the specified YAML file. To do so, it adds -t/--template to the corresponding Serverless Devs command. In the following example, the YAML file is named demo.yaml, so the plug-in runs the s deploy -t demo.yaml command.

```yaml
- name: deploy
  context:
    data:
      deployFile: demo.yaml
      steps:
        - plugin: '@serverless-cd/s-setup'
        - plugin: '@serverless-cd/checkout'
        - plugin: '@serverless-cd/s-deploy'
  taskTemplate: serverless-runner-task
```

If you execute the pipeline by using scripts, you can use ${{ ctx.data.deployFile }} to reference the specified YAML file. In the following example, the s plan command uses the specified YAML file if one is specified. Otherwise, it uses the default s.yaml file.

```yaml
- name: pre-check
  context:
    data:
      steps:
        - run: s plan -t ${{ ctx.data.deployFile || s.yaml }}
        - run: echo "s plan finished."
  taskTemplate: serverless-runner-task
```

DingTalk Chatbot Notification Settings

After you enable this feature, configure the following parameters: Webhook address, Signing key, Notify only on failure, and Custom message. These settings let you manage notifications for tasks in a centralized manner. After you configure them, you can enable notifications for individual tasks in the YAML file of the pipeline in the Pipeline Details section.
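The Signing key corresponds to the signing secret of a DingTalk custom robot. DingTalk signs each request by computing an HMAC-SHA256 over "timestamp\nsecret", keyed with the secret. It then Base64-encodes the result, URL-encodes it, and appends it to the webhook URL together with the millisecond timestamp. The following Python sketch shows that scheme, assuming the platform uses it unchanged. The helper names are hypothetical, and should_notify reflects only our reading of the Notify only on failure switch. Use the sketch when you test a robot by hand, not as a description of the platform's internals.

```python
import base64
import hashlib
import hmac
import time
import urllib.parse
from typing import Optional


def signed_webhook_url(webhook: str, secret: str, timestamp_ms: Optional[int] = None) -> str:
    """Append DingTalk's timestamp and HMAC-SHA256 signature to a robot webhook URL."""
    if timestamp_ms is None:
        timestamp_ms = int(time.time() * 1000)  # milliseconds since the epoch
    string_to_sign = f"{timestamp_ms}\n{secret}"
    digest = hmac.new(secret.encode("utf-8"), string_to_sign.encode("utf-8"),
                      hashlib.sha256).digest()
    sign = urllib.parse.quote_plus(base64.b64encode(digest))
    # The webhook already carries ?access_token=..., so extra parameters use '&'.
    return f"{webhook}&timestamp={timestamp_ms}&sign={sign}"


def should_notify(task_succeeded: bool, notify_only_on_failure: bool) -> bool:
    """Send a message for failures always, and for successes only if the switch is off."""
    return not task_succeeded or not notify_only_on_failure
```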

Pipeline Details section

In the Pipeline Details section, you can configure the tasks in the pipeline and the relationships between them. The platform automatically generates a default pipeline process, which you can then modify.

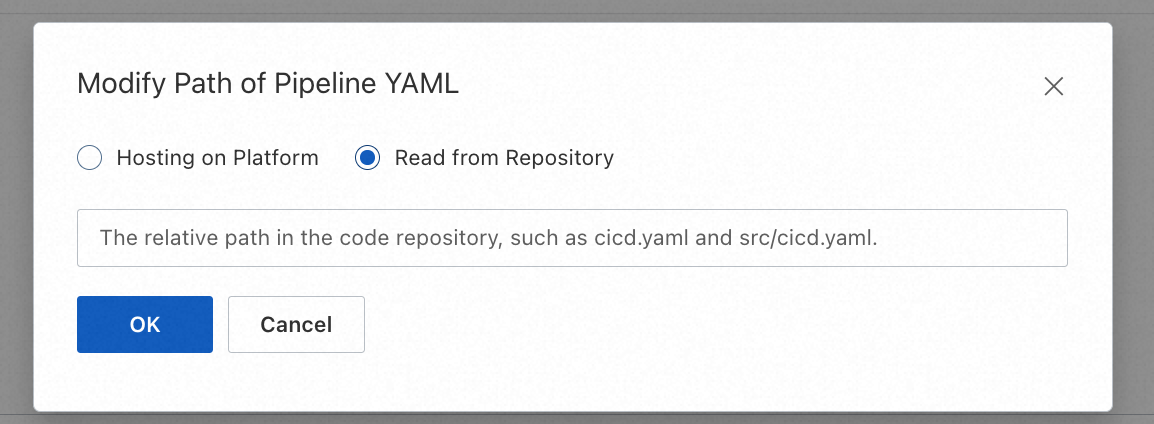

Pipelines are managed by using YAML files. For the pipeline YAML file, you can select Hosting on Platform or Read from Repository.

If you select Hosting on Platform, predefined variables in the YAML file are not supported. For more information, see Use a .yaml file to describe a pipeline.

If you select Read from Repository, predefined variables in the YAML file are supported.

Hosting on Platform

By default, pipeline YAML files are stored and managed centrally by Serverless Application Center. After you update the YAML file of a pipeline, the new configuration takes effect in the next deployment.

Read from Repository

The YAML file of the pipeline is stored in a Git repository. After you modify and save the configurations in the Function Compute console, Serverless Application Center commits the modifications to the Git repository in real time. This does not trigger the execution of the pipeline. When an event in your code repository triggers the execution of the pipeline, Serverless Application Center creates and executes the pipeline by using the specified YAML file in the Git repository.

You can select Read from Repository in the upper part of the Pipeline Details section and enter the name of the YAML file. The following figure shows the configurations.

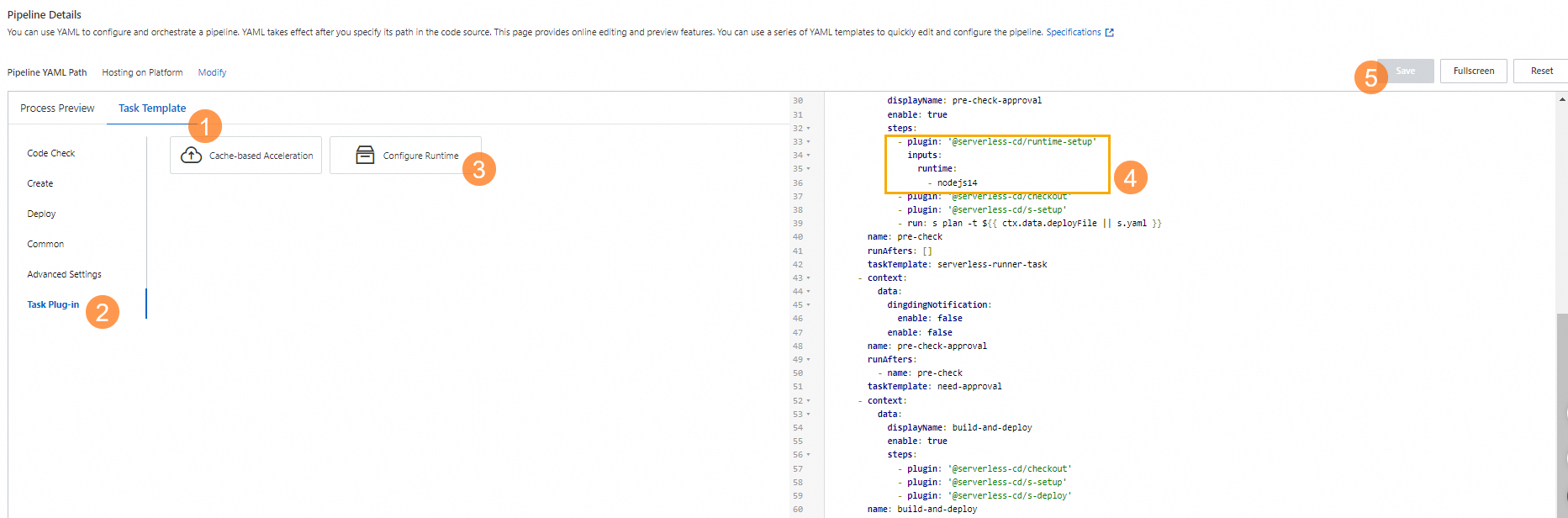

The Pipeline Details section consists of a tool subsection on the left and a YAML editing subsection on the right.

In the YAML editor, you can modify the YAML file of the pipeline to modify the pipeline process. For more information, see Use a .yaml file to describe a pipeline.

The tool subsection provides auxiliary tools for editing YAML files. The following tabs are included in the subsection:

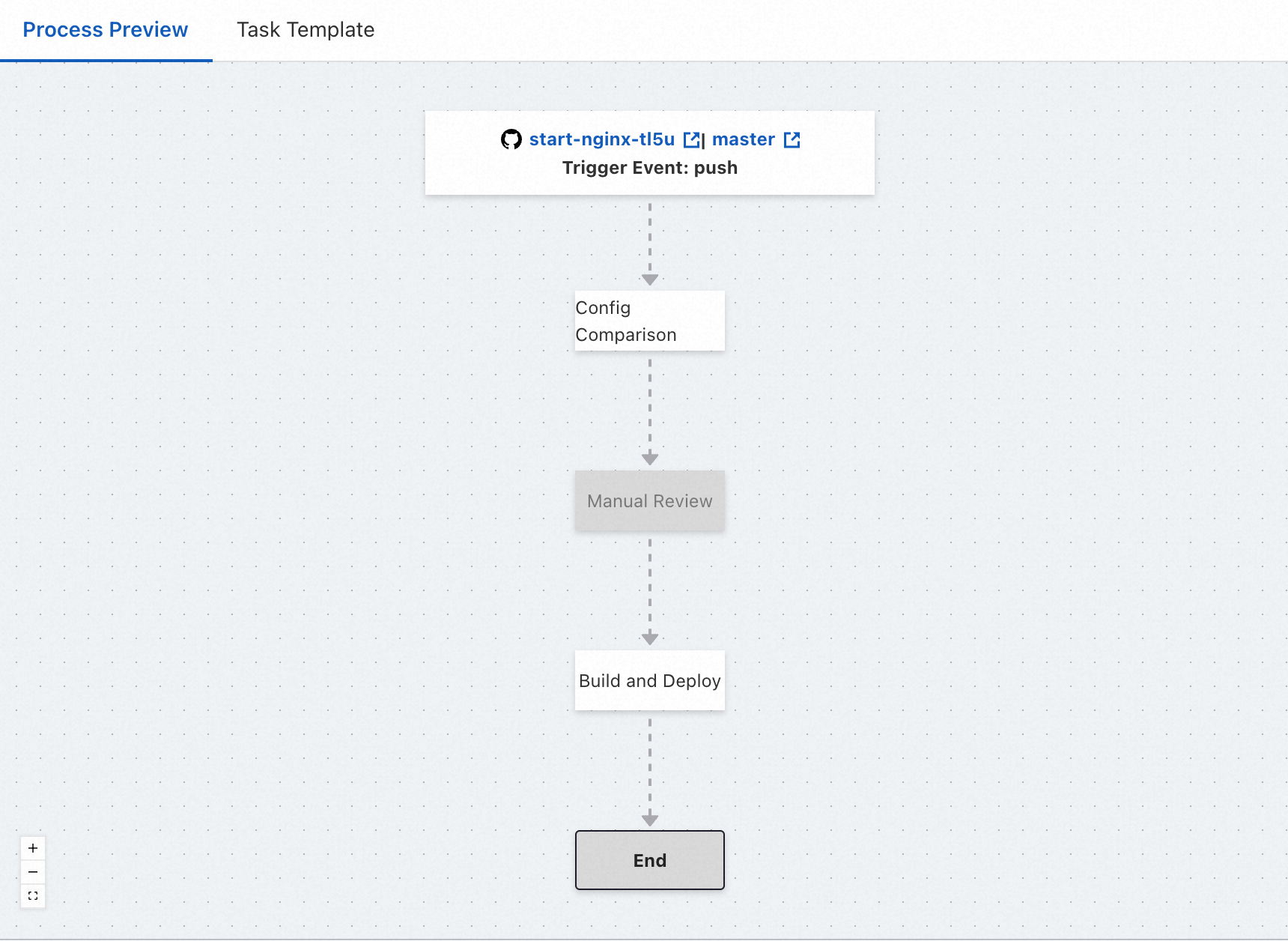

Process Preview tab: You can preview and modify the pipeline process.

Task Template tab: You can view the common YAML templates that are provided.

In the upper-right corner of the subsection, the Save, Fullscreen, and Reset buttons are provided.

You can click Save to save all modifications in the YAML file. Then, all saved data is synchronized to the YAML file of the pipeline.

You can click Fullscreen to fully display the editing subsection.

You can click Reset to undo the most recent modifications to the YAML file.

If you click Reset, the most recent modifications are lost. Before you proceed, we recommend that you back up your data.

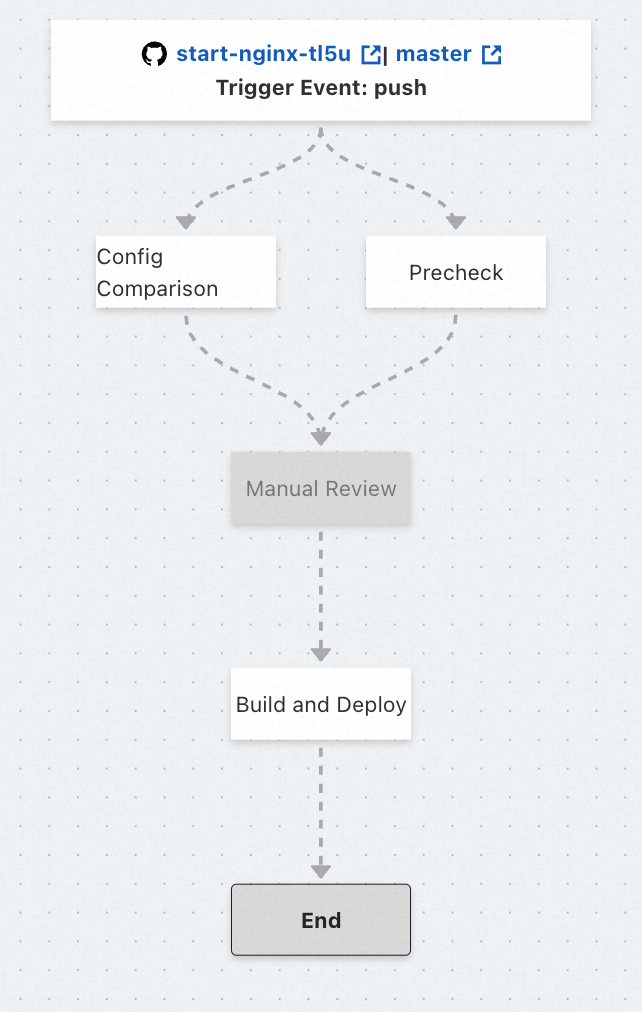

Process Preview tab

On the Process Preview tab, you can preview the pipeline process and modify the basic content of tasks and the relationships between them. You can also create tasks from templates. The pipeline process consists of the following nodes: the start node (code source and trigger method), task nodes, and the end node. The nodes are connected by dotted arrows that indicate the dependencies between them. When you move the pointer over a task node, the buttons for adding and deleting tasks are displayed.

Start node (code source and trigger method)

This node contains the information about the code source and trigger method of a pipeline, and you cannot modify the information. If you want to change the trigger method, go to the Pipeline Configurations section.

Important: If you want to change the code repository, go to the application details page. For more information, see Manage applications. After you change the code repository, all pipelines that use code from the repository become invalid. Proceed with caution.

End node

The last node in the pipeline process. You cannot edit the node.

Task node

This node contains basic information about a task. By default, a task node shows the task name. When you click the node, a pop-up appears in which you can view and modify parameters such as Task Name, Run After, and Enable. If you disable a task, it is dimmed and skipped when the pipeline runs.

Dependency

Dependencies between nodes are indicated by one-way dotted arrows. An arrow from Task A to Task B indicates that Task B runs after, and depends on, Task A. A task can depend on multiple tasks, and multiple tasks can depend on it.

You can change dependencies by modifying the Run After parameter. For example, to remove the dependency of Task B on Task A, click the Task B node and remove Task A from its Run After parameter.
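Because each arrow means "runs after", the Run After settings form a directed acyclic graph. Any valid execution order is a topological order of that graph. The following Python sketch uses Kahn's algorithm to group tasks into stages. It assumes that tasks whose dependencies have all finished may run in parallel. The dictionary format and the execution_stages name are hypothetical and do not reflect the pipeline YAML schema.

```python
from typing import Dict, List, Set


def execution_stages(run_after: Dict[str, Set[str]]) -> List[List[str]]:
    """Group tasks into stages; each task runs after every task in its Run After set."""
    indegree = {task: len(deps) for task, deps in run_after.items()}
    dependents: Dict[str, List[str]] = {task: [] for task in run_after}
    for task, deps in run_after.items():
        for dep in deps:
            if dep not in run_after:
                raise ValueError(f"{task} runs after unknown task {dep}")
            dependents[dep].append(task)

    stages: List[List[str]] = []
    ready = sorted(t for t, d in indegree.items() if d == 0)
    done = 0
    while ready:
        stages.append(ready)
        done += len(ready)
        next_ready = []
        for task in ready:
            # A finished task releases the tasks that run after it.
            for child in dependents[task]:
                indegree[child] -= 1
                if indegree[child] == 0:
                    next_ready.append(child)
        ready = sorted(next_ready)
    if done != len(run_after):
        raise ValueError("Run After settings contain a cycle")
    return stages
```

For example, if Task B and Task C both run after Task A and Task D runs after both of them, the stages are [A], [B, C], [D]. In this sketch, removing Task A from the Run After list of Task B moves Task B into the first stage.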

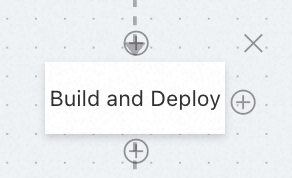

Add a task

To add a task, click one of the plus icons on the top, bottom, or right side of a task node. For example, to add Task B to run before Task A, click the plus icon on the top of the Task A node. Task A then runs after, and depends on, Task B. To add Task C to run after Task A, click the plus icon at the bottom of the Task A node. Task C then runs after, and depends on, Task A. To add Task D with the same dependencies as Task A, click the plus icon on the right side of the Task A node. Task D then depends on the same upstream tasks as Task A.
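The three plus icons can be modeled as three edits to each task's Run After set. The following is a minimal Python sketch over a hypothetical in-memory model, not the console's implementation. The function names are illustrative. For the top icon, the sketch assumes that the new task starts with no dependencies of its own and that Task A keeps its existing upstream tasks. The text does not specify either behavior.

```python
from typing import Dict, Set

# Hypothetical in-memory model: task name -> names in its Run After list.
RunAfter = Dict[str, Set[str]]


def _check_new(graph: RunAfter, anchor: str, new_task: str) -> None:
    if anchor not in graph:
        raise KeyError(anchor)
    if new_task in graph:
        raise ValueError(f"task {new_task!r} already exists")


def add_above(graph: RunAfter, anchor: str, new_task: str) -> None:
    """Top plus icon: the anchor task now also runs after the new task."""
    _check_new(graph, anchor, new_task)
    graph[new_task] = set()  # assumption: the new task starts with no dependencies
    graph[anchor].add(new_task)


def add_below(graph: RunAfter, anchor: str, new_task: str) -> None:
    """Bottom plus icon: the new task runs after the anchor task."""
    _check_new(graph, anchor, new_task)
    graph[new_task] = {anchor}


def add_beside(graph: RunAfter, anchor: str, new_task: str) -> None:
    """Right plus icon: the new task gets the same Run After list as the anchor."""
    _check_new(graph, anchor, new_task)
    graph[new_task] = set(graph[anchor])
```

Starting from a single Task A, adding B above, C below, and D beside A yields A after B, C after A, and D after B. This matches the example above.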

Delete a task

To delete a task, click the cross icon (x) in the upper-right corner of the task node. The system then asks you to confirm the deletion, which prevents accidental deletion.

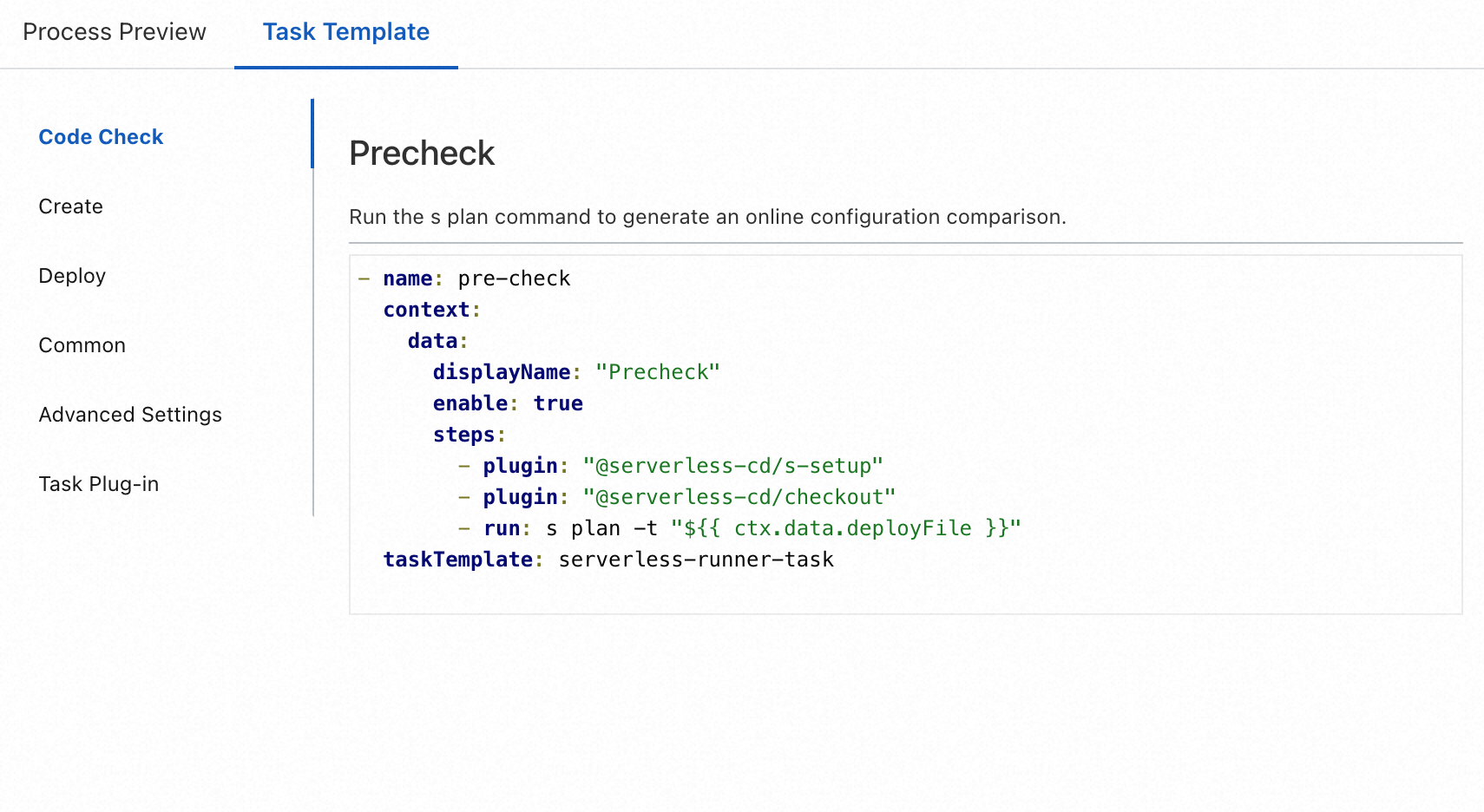

Task Template tab

On the Task Template tab, the following YAML templates for pipeline tasks are provided: Code Check, Create, Deploy, and Common. In addition, the following YAML templates for internal settings of tasks are provided: Advanced Settings and Task Plug-in.

Click a template in the template list to view its description and YAML content. Then, copy the YAML content and paste it at the appropriate position in the YAML file of the pipeline.

Default pipeline process

By default, the pipeline process consists of three tasks: Config Comparison, Manual Review, and Build and Deploy. The three tasks are executed in sequence. By default, the Manual Review task is disabled. You must manually enable it.

Config Comparison

This task checks whether the YAML file of the pipeline is consistent with the online configurations. This helps you detect unexpected configuration changes in advance.

Manual Review

To ensure secure releases and application stability, you can enable manual review. When manual review is enabled, the pipeline is blocked at this stage until the review is approved. Subsequent tasks run only after the changes are approved. Otherwise, the pipeline is terminated. Manual review is disabled by default.

Build and Deploy

This task builds an application and deploys the application to the cloud. By default, full deployment is performed.
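The control flow of the default process, in particular how the Manual Review task behaves when it is disabled, approved, or rejected, is summarized in the following Python simulation. It is illustrative only. The function name, the approve callback, and the log strings are hypothetical. The callback stands in for the period during which the pipeline is blocked waiting for a reviewer.

```python
from typing import Callable, List


def run_default_pipeline(manual_review_enabled: bool = False,
                         approve: Callable[[], bool] = lambda: False) -> List[str]:
    """Simulate Config Comparison -> Manual Review -> Build and Deploy."""
    log = ["Config Comparison: done"]
    if not manual_review_enabled:
        # A disabled task is skipped, and the pipeline continues.
        log.append("Manual Review: skipped (disabled)")
    elif approve():
        # The pipeline was blocked until the reviewer approved.
        log.append("Manual Review: approved")
    else:
        log.append("Manual Review: rejected, pipeline terminated")
        return log
    log.append("Build and Deploy: done")
    return log
```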

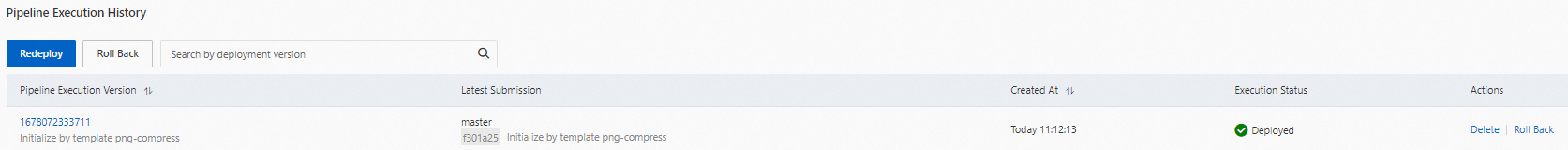

View the execution records of a pipeline

On the details page of an environment, click the Pipeline Management tab. In the Pipeline Execution History section, you can view the execution records of a specific pipeline.

Click the execution version of a pipeline run to view its details, such as the execution logs and status, which you can use to troubleshoot issues.

Update the runtime of a pipeline

The following table describes the default build environments of pipelines. Built-in package managers of the default pipelines include Maven, pip, and npm. Only the Debian 10 OS is supported as the runtime environment.

| Runtime | Supported version |
| --- | --- |
| Node.js | |
| Java | |
| Python | |
| Golang | |
| PHP | |
| .NET | |

You can specify the runtime version of a pipeline by using the runtime-setup plug-in or modifying the variables in the YAML file.

The runtime-setup plug-in (recommended)

Log on to the Function Compute console and find the application that you want to manage. On the Pipeline Management tab, go to the Pipeline Details section and use a task template to update the pipeline YAML file on the right. As shown in the following figure, click Task Template, select Task Plug-in, and then click Configure Runtime. Then, update the YAML file and click Save.

We recommend that you place the runtime-setup plug-in in the first step so that the runtime it configures applies to all subsequent steps.

For information about the parameters of the runtime-setup plug-in, see Use the runtime-setup plug-in to initialize a runtime.

Variables in the YAML file

You can also change the Node.js or Python version by prepending the corresponding directory to PATH in an action hook in the YAML file. The available directories are as follows:

Node.js

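```shell
# Use the line for the Node.js version that you need.
# Node.js 12
export PATH=/usr/local/versions/node/v12.22.12/bin:$PATH
# Node.js 14
export PATH=/usr/local/versions/node/v14.19.2/bin:$PATH
# Node.js 16
export PATH=/usr/local/versions/node/v16.15.0/bin:$PATH
# Node.js 18
export PATH=/usr/local/versions/node/v18.14.2/bin:$PATH
```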

Sample code:

```yaml
services:
  upgrade_runtime:
    component: 'fc'
    actions:
      pre-deploy:
        - run: export PATH=/usr/local/versions/node/v18.14.2/bin:$PATH && npm run build
    props: ...
```

Python

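```shell
# Use the line for the Python version that you need.
# Python 2.7
export PATH=/usr/local/envs/py27/bin:$PATH
# Python 3.6
export PATH=/usr/local/envs/py36/bin:$PATH
# Python 3.7
export PATH=/usr/local/envs/py37/bin:$PATH
# Python 3.9
export PATH=/usr/local/envs/py39/bin:$PATH
# Python 3.10
export PATH=/usr/local/envs/py310/bin:$PATH
```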

Sample code:

```yaml
services:
  upgrade_runtime:
    component: 'fc'
    actions:
      pre-deploy:
        - run: export PATH=/usr/local/envs/py310/bin:$PATH && pip3 install -r requirements.txt -t .
    props: ...
```
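If you switch versions often, you can generate the run command for an action hook from the directories listed above instead of typing the path by hand. The following small Python sketch does this. pre_deploy_run and the version keys ("18", "3.10", and so on) are hypothetical conveniences and are not part of Serverless Devs. Only the directory paths come from this topic.

```python
from typing import Dict

# Install directories listed above for the default build environment.
NODE_BIN = {
    "12": "/usr/local/versions/node/v12.22.12/bin",
    "14": "/usr/local/versions/node/v14.19.2/bin",
    "16": "/usr/local/versions/node/v16.15.0/bin",
    "18": "/usr/local/versions/node/v18.14.2/bin",
}
PYTHON_BIN = {
    "2.7": "/usr/local/envs/py27/bin",
    "3.6": "/usr/local/envs/py36/bin",
    "3.7": "/usr/local/envs/py37/bin",
    "3.9": "/usr/local/envs/py39/bin",
    "3.10": "/usr/local/envs/py310/bin",
}


def pre_deploy_run(runtime: str, version: str, command: str) -> str:
    """Build a run command that puts the chosen runtime first on PATH."""
    table: Dict[str, Dict[str, str]] = {"nodejs": NODE_BIN, "python": PYTHON_BIN}
    try:
        bin_dir = table[runtime][version]
    except KeyError:
        raise ValueError(f"{runtime} {version} is not listed for the default environment") from None
    return f"export PATH={bin_dir}:$PATH && {command}"
```

For example, pre_deploy_run("nodejs", "18", "npm run build") produces the same run command as the Node.js sample above.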