Alibaba Cloud Elasticsearch is integrated with Terraform, so you can use Terraform to manage Alibaba Cloud Elasticsearch clusters. This topic describes how to use Terraform to create an Alibaba Cloud Elasticsearch cluster.

You can run the sample code in this topic with a few clicks. For more information, visit Terraform Explorer.

Prerequisites

We recommend that you use a RAM user that has the minimum required permissions to perform the operations in this topic. This minimizes the risk of leaking the AccessKey pair of your Alibaba Cloud account. For information about how to attach the policy with the minimum required permissions to the RAM user, see Create a RAM user and Grant permissions to a RAM user. The following policy is used in this example:

    {
      "Version": "1",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": [
            "vpc:CreateVpc",
            "vpc:DeleteVpc",
            "vpc:CreateVSwitch",
            "vpc:DeleteVSwitch",
            "ecs:CreateSecurityGroup",
            "ecs:ModifySecurityGroupPolicy",
            "ecs:DescribeSecurityGroups",
            "ecs:ListTagResources",
            "ecs:DeleteSecurityGroup",
            "ecs:DescribeSecurityGroupAttribute"
          ],
          "Resource": "*"
        },
        {
          "Effect": "Allow",
          "Action": [
            "vpc:DescribeVpcAttribute",
            "vpc:DescribeRouteTableList",
            "vpc:DescribeVSwitchAttributes"
          ],
          "Resource": "*"
        },
        {
          "Effect": "Allow",
          "Action": [
            "elasticsearch:CreateInstance",
            "elasticsearch:DescribeInstance",
            "elasticsearch:ListAckClusters",
            "elasticsearch:UpdateDescription",
            "elasticsearch:ListInstance",
            "elasticsearch:ListAvailableEsInstanceIds"
          ],
          "Resource": "*"
        }
      ]
    }

The runtime environment for Terraform is prepared by using one of the following methods:

Terraform Explorer: Alibaba Cloud provides Terraform Explorer, an online runtime environment for Terraform. After you log on to Terraform Explorer, you can use Terraform without installing it. This method is suitable for scenarios in which you want to use and debug Terraform quickly and conveniently at no additional cost.

Cloud Shell: Terraform is preinstalled in Cloud Shell and identity credentials are configured. You can directly run Terraform commands in Cloud Shell. This method is suitable for scenarios in which you want to use and debug Terraform quickly and conveniently at low cost.

Install and configure Terraform on your on-premises machine: This method is suitable for scenarios in which network conditions are poor or a custom development environment is required.
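For the on-premises option, the following is a minimal sketch of a provider requirements file, not the official setup procedure. The file name versions.tf is a hypothetical choice. The sketch assumes that credentials are supplied through the ALICLOUD_ACCESS_KEY and ALICLOUD_SECRET_KEY environment variables, which the alicloud provider reads, so that no AccessKey pair is written to a configuration file. It declares only the two providers that the example in this topic uses.

```hcl
# versions.tf (hypothetical file name; any .tf file in the working directory works)
terraform {
  required_providers {
    # Provider for the VPC, security group, vSwitch, and Elasticsearch resources.
    alicloud = {
      source = "aliyun/alicloud"
    }
    # Provider for the random_integer resource that generates name suffixes.
    random = {
      source = "hashicorp/random"
    }
  }
}

# Assumption: the AccessKey pair of the RAM user is exported in the shell as
# ALICLOUD_ACCESS_KEY and ALICLOUD_SECRET_KEY before Terraform commands are run.
# No provider block is declared here because main.tf already configures the region.
```

Version constraints are intentionally omitted from the sketch. Add them if you need reproducible provider versions across machines.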

In this example, fees may be generated for specific resources. Release or unsubscribe from the resources when you no longer require them.

Required resources

alicloud_vpc: virtual private cloud (VPC).

alicloud_security_group: security group.

alicloud_vswitch: vSwitch.

alicloud_elasticsearch_instance: Alibaba Cloud Elasticsearch cluster. You are charged for the cluster. For information about the billing of Elasticsearch clusters, see Billing overview.

Create an Elasticsearch cluster

Create a working directory and a configuration file named main.tf in the directory. The following code creates an Elasticsearch cluster and the related VPC, security group, and vSwitch. Copy the following code to the main.tf configuration file:

    variable "region" {
      default = "cn-qingdao"
    }

    # Query the zones in which vSwitches and SSD cloud disks can be created.
    data "alicloud_zones" "default" {
      available_resource_creation = "VSwitch"
      available_disk_category     = "cloud_ssd"
    }

    variable "vpc_cidr_block" {
      default = "172.16.0.0/16"
    }

    variable "vsw_cidr_block" {
      default = "172.16.0.0/24"
    }

    # Node specification shared by the data, master, and Kibana nodes.
    variable "node_spec" {
      default = "elasticsearch.sn2ne.large"
    }

    provider "alicloud" {
      region = var.region
    }

    # Random suffix that keeps resource names unique across runs.
    resource "random_integer" "default" {
      min = 10000
      max = 99999
    }

    resource "alicloud_vpc" "vpc" {
      vpc_name   = "vpc-test_${random_integer.default.result}"
      cidr_block = var.vpc_cidr_block
    }

    resource "alicloud_security_group" "group" {
      name   = "test_${random_integer.default.result}"
      vpc_id = alicloud_vpc.vpc.id
    }

    resource "alicloud_vswitch" "vswitch" {
      vpc_id       = alicloud_vpc.vpc.id
      cidr_block   = var.vsw_cidr_block
      zone_id      = data.alicloud_zones.default.zones[0].id
      vswitch_name = "vswitch-test-${random_integer.default.result}"
    }

    resource "alicloud_elasticsearch_instance" "instance" {
      description                      = "test_Instance"
      instance_charge_type             = "PostPaid"
      data_node_amount                 = "2"
      data_node_spec                   = var.node_spec
      data_node_disk_size              = "20"
      data_node_disk_type              = "cloud_ssd"
      vswitch_id                       = alicloud_vswitch.vswitch.id
      # Sample password for demonstration only. Replace it with your own password.
      password                         = "es_password_01"
      version                          = "6.7_with_X-Pack"
      master_node_spec                 = var.node_spec
      zone_count                       = "1"
      master_node_disk_type            = "cloud_ssd"
      kibana_node_spec                 = var.node_spec
      data_node_disk_performance_level = "PL1"
      tags = {
        Created = "TF",
        For     = "example",
      }
    }

Run the following command to initialize the Terraform runtime environment:

    terraform init

If the following information is returned, Terraform is initialized:

    Terraform has been successfully initialized!

    You may now begin working with Terraform. Try running "terraform plan" to see
    any changes that are required for your infrastructure. All Terraform commands
    should now work.

    If you ever set or change modules or backend configuration for Terraform,
    rerun this command to reinitialize your working directory. If you forget, other
    commands will detect it and remind you to do so if necessary.

Run the following command to create an Elasticsearch cluster:

    terraform apply

During the execution, enter yes as prompted and press the Enter key. Wait until the command is complete. If the following information is returned, the cluster is created.

    You can apply this plan to save these new output values to the Terraform state, without changing any real infrastructure.

    Do you want to perform these actions?
      Terraform will perform the actions described above.
      Only 'yes' will be accepted to approve.

      Enter a value: yes

    Apply complete! Resources: 5 added, 0 changed, 0 destroyed.

Verify the result.

Run the terraform show command

Run the following command in the working directory to query the details of the cluster that is created by using Terraform:

    terraform show

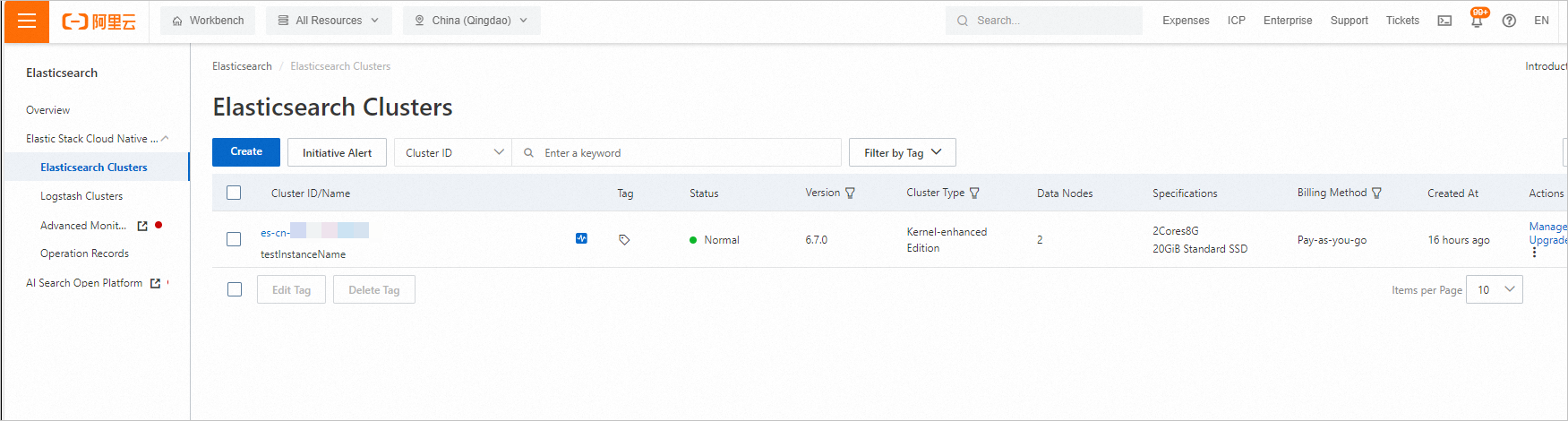
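In addition to terraform show, output values can surface a few key attributes of the cluster after each apply. The following is a minimal sketch, not part of the original example. The output names are hypothetical, and the domain and kibana_domain attributes are assumed to be exported by the alicloud_elasticsearch_instance resource as described in the provider documentation. Check the documentation for your provider version before you rely on them.

```hcl
# Hypothetical output names. The resource address matches the
# alicloud_elasticsearch_instance.instance resource declared in main.tf.

output "es_instance_id" {
  description = "ID of the Elasticsearch cluster created by this configuration."
  value       = alicloud_elasticsearch_instance.instance.id
}

# Assumption: the provider exports the connection endpoint as "domain".
output "es_domain" {
  description = "Endpoint of the Elasticsearch cluster."
  value       = alicloud_elasticsearch_instance.instance.domain
}

# Assumption: the provider exports the Kibana endpoint as "kibana_domain".
output "es_kibana_domain" {
  description = "Endpoint of the Kibana console for the cluster."
  value       = alicloud_elasticsearch_instance.instance.kibana_domain
}
```

After these blocks are added to the configuration and terraform apply is run again, the terraform output command prints the values without the rest of the state.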

Log on to the Elasticsearch console

Log on to the Elasticsearch console and view the created cluster.

Release resources

If you no longer require the preceding resources that are created or managed by using Terraform, run the following command to release the resources. For more information about the terraform destroy command, see Common commands.

    terraform destroy

Example

You can run the sample code with a few clicks. For more information, visit Terraform Explorer.

Sample code

If you want to view more complete examples, visit the directory of the corresponding service on the More Complete Examples page.
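As one possible variation on the main.tf file in this topic, the cluster password can be passed in through a sensitive input variable so that it is not stored as a literal in the configuration file. The following is a sketch under that assumption. The variable name es_password is hypothetical and does not appear in the original example.

```hcl
# Hypothetical variable. It has no default, so Terraform prompts for the value
# or reads it from the TF_VAR_es_password environment variable.
variable "es_password" {
  description = "Password for the Elasticsearch cluster."
  type        = string
  sensitive   = true # Redacts the value in plan and apply output.
}

# In the alicloud_elasticsearch_instance resource in main.tf, replace the
# literal password with a reference to the variable:
#
#   password = var.es_password
```

Marking the variable as sensitive hides the value in command output only. The value is still written to the Terraform state, so access to the state file must be restricted.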