Spark Thrift Server is a service provided by Apache Spark that supports SQL query execution through JDBC or ODBC connections, making it convenient to integrate Spark environments with existing Business Intelligence (BI) tools, data visualization tools, and other data analysis tools. This topic describes how to create and connect to a Spark Thrift Server.

Prerequisites

A workspace is created. For more information, see Manage workspaces.

Create a Spark Thrift Server session

After you create a Spark Thrift Server session, you can select it when you create a Spark SQL task.

Go to the Sessions page.

Log on to the EMR console.

In the left-side navigation pane, choose .

On the Spark page, click the name of the workspace that you want to manage.

In the left-side navigation pane of the EMR Serverless Spark page, choose Operation Center > Sessions.

On the Session Manager page, click the Spark Thrift Server Sessions tab.

Click Create Spark Thrift Server Session.

On the Create Spark Thrift Server Session page, configure the parameters and click Create.

Parameter

Description

Name

The name of the new Spark Thrift Server.

The name must be 1 to 64 characters in length and can contain letters, digits, hyphens (-), underscores (_), and spaces.

Deployment Queue

Select an appropriate development queue to deploy the session. You can select only a development queue or a queue that is shared by development and production environments.

For more information about queues, see Resource Queue Management.

Engine Version

The engine version used by the current session. For more information about engine versions, see Engine version introduction.

Use Fusion Acceleration

Fusion can accelerate the running of Spark workloads and reduce the total cost of tasks. For billing information, see Billing. For more information about the Fusion engine, see Fusion engine.

Auto Stop

This feature is enabled by default. The Spark Thrift Server session automatically stops after 45 minutes of inactivity.

Network Connectivity

The network connection that is used to access the data sources or external services in a virtual private cloud (VPC). For information about how to create a network connection, see Configure network connectivity between EMR Serverless Spark and a data source across VPCs.

Spark Thrift Server Port

The port number is 443 when you access the server by using a public endpoint, and 80 when you access the server by using an internal same-region endpoint.

Access Credential

Only the Token method is supported.

spark.driver.cores

The number of CPU cores used by the Driver process in a Spark application. The default value is 1 CPU.

spark.driver.memory

The amount of memory that the Driver process in a Spark application can use. The default value is 3.5 GB.

spark.executor.cores

The number of CPU cores that each Executor process can use. The default value is 1 CPU.

spark.executor.memory

The amount of memory that each Executor process can use. The default value is 3.5 GB.

spark.executor.instances

The number of executors allocated by Spark. The default value is 2.

Dynamic Resource Allocation

By default, this feature is disabled. After you enable this feature, you must configure the following parameters:

Minimum Number of Executors: Default value: 2.

Maximum Number of Executors: If you do not configure the spark.executor.instances parameter, the default value 10 is used.

More Memory Configurations

spark.driver.memoryOverhead: the size of non-heap memory that is available to each driver. If you leave this parameter empty, Spark automatically assigns a value to this parameter based on the following formula: max(384 MB, 10% × spark.driver.memory).

spark.executor.memoryOverhead: the size of non-heap memory that is available to each executor. If you leave this parameter empty, Spark automatically assigns a value to this parameter based on the following formula: max(384 MB, 10% × spark.executor.memory).

spark.memory.offHeap.size: the size of off-heap memory that is available to the Spark application. Default value: 1 GB.

This parameter is valid only if you set the spark.memory.offHeap.enabled parameter to true. By default, if you use the Fusion engine, the spark.memory.offHeap.enabled parameter is set to true and the spark.memory.offHeap.size parameter is set to 1 GB.

Spark Configuration

Enter Spark configuration information. By default, parameters are separated by spaces, for example, spark.sql.catalog.paimon.metastore dlf.

Obtain the Endpoint information.

On the Spark Thrift Server Sessions tab, click the name of the new Spark Thrift Server.

On the Overview tab, copy the Endpoint information.

Based on your network environment, you can choose one of the following Endpoints:

Public Endpoint: This is suitable for scenarios where you need to access EMR Serverless Spark over the Internet, such as from a local development machine, external network, or cross-cloud environment. This method may incur traffic fees. Make sure that you take necessary security measures.

Internal Same-region Endpoint: This is suitable for scenarios where an Alibaba Cloud ECS instance in the same region accesses EMR Serverless Spark over an internal network. Internal network access is free and more secure, but is limited to Alibaba Cloud internal network environments in the same region.
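Two rules from the parameter table above can be checked locally before you fill in the form: the naming rule for the session and the default memory overhead formula. The following is a minimal sketch, not part of the product. The function names are hypothetical, and the sketch assumes that "letters" means ASCII letters and that the 10% value is truncated to whole megabytes.

```python
import re

# Assumption: "letters" means ASCII letters; the console may accept more.
_NAME_PATTERN = re.compile(r"[A-Za-z0-9_\- ]{1,64}")


def is_valid_session_name(name: str) -> bool:
    """Return True if name has 1 to 64 letters, digits, '-', '_', or spaces."""
    return _NAME_PATTERN.fullmatch(name) is not None


def default_memory_overhead_mb(memory_mb: int) -> int:
    """Apply max(384 MB, 10% x memory), truncating the 10% to whole MB."""
    return max(384, memory_mb // 10)
```

With the default 3.5 GB (3584 MB) of driver or executor memory, 10% is below the 384 MB floor, so the sketch returns 384.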

Create a Token

On the Spark Thrift Server Sessions tab, click the name of the new Spark Thrift Server session.

Click the Token Management tab.

Click Create Token.

In the Create Token dialog box, configure the parameters and click OK.

Parameter

Description

Name

The name of the new token.

Expiration Time

The expiration time of the token. The number of days must be greater than or equal to 1. This feature is enabled by default, and the token expires after 365 days.

Copy the token information.

Important: After a token is created, you must immediately copy the information of the new token. You cannot view the token information later. If your token expires or is lost, you can create a new token or reset the token.
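Because an expired token cannot be recovered, it can help to record when each token was created and work out when it needs to be replaced. The following is a rough sketch based on the Expiration Time rules above (at least 1 day, 365 days by default). The function names are hypothetical, and the calculation works on calendar days because the exact expiry instant that the service uses is not stated here.

```python
from datetime import date, timedelta

DEFAULT_EXPIRATION_DAYS = 365  # default stated in the Expiration Time row


def token_expiry_date(created_on: date, days: int = DEFAULT_EXPIRATION_DAYS) -> date:
    """Return the calendar date on which a token created on created_on expires."""
    if days < 1:
        raise ValueError("the expiration time must be at least 1 day")
    return created_on + timedelta(days=days)


def days_until_expiry(created_on: date, today: date,
                      days: int = DEFAULT_EXPIRATION_DAYS) -> int:
    """Return the remaining days; zero or a negative value means expired."""
    return (token_expiry_date(created_on, days) - today).days
```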

Connect to Spark Thrift Server

When you connect to Spark Thrift Server, replace the following information based on your actual situation:

<endpoint>: The Endpoint(Public) or Endpoint(Internal) information that you obtained on the Overview tab. If you use an internal same-region endpoint, access to Spark Thrift Server is limited to resources within the same VPC.

<port>: The port number. The port number is 443 when you access the server by using a public endpoint, and 80 when you access the server by using an internal same-region endpoint.

<username>: The name of the token that you created on the Token Management tab.

<token>: The token information that you copied on the Token Management tab.
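The JDBC-based clients in this topic (Java, Beeline, and DataGrip) all use the same URL pattern, in which only the endpoint, port, and token change. The following sketch assembles that URL from the placeholders described above. The function names are hypothetical, and the URL layout is copied from the examples in this topic rather than from a formal specification.

```python
def endpoint_port(public: bool) -> int:
    """Return 443 for a public endpoint and 80 for an internal same-region endpoint."""
    return 443 if public else 80


def build_jdbc_url(endpoint: str, token: str, public: bool = True) -> str:
    """Assemble the Hive JDBC URL used by the Java, Beeline, and DataGrip examples."""
    port = endpoint_port(public)
    return (
        f"jdbc:hive2://{endpoint}:{port}/;transportMode=http;"
        f"httpPath=cliservice/token/{token}"
    )
```

For example, build_jdbc_url("<endpoint>", "<token>", public=False) yields the internal same-region form of the URL with port 80.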

Connect to Spark Thrift Server by using Python

Run the following command to install the PyHive and Thrift packages:

    pip install pyhive thrift

Write a Python script to connect to Spark Thrift Server.

The following example Python script connects to Spark Thrift Server through PyHive and displays a list of databases. Based on your network environment (public or internal), you can choose the appropriate connection method.

Connect by using a public endpoint

    from pyhive import hive

    if __name__ == '__main__':
        # Replace <endpoint>, <username>, and <token> with your actual information.
        cursor = hive.connect('<endpoint>',
                              port=443,
                              scheme='https',
                              username='<username>',
                              password='<token>').cursor()
        cursor.execute('show databases')
        print(cursor.fetchall())
        cursor.close()

Connect by using an internal same-region endpoint

    from pyhive import hive

    if __name__ == '__main__':
        # Replace <endpoint>, <username>, and <token> with your actual information.
        cursor = hive.connect('<endpoint>',
                              port=80,
                              scheme='http',
                              username='<username>',
                              password='<token>').cursor()
        cursor.execute('show databases')
        print(cursor.fetchall())
        cursor.close()
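The two scripts above differ only in port and scheme, and both hard-code the token. One possible variation, sketched below, derives both values from a single flag and reads the credentials from environment variables. The function names and the variable names STS_ENDPOINT, STS_USERNAME, STS_TOKEN, and STS_PUBLIC are hypothetical and chosen for illustration only. The hive.connect arguments are the same ones used in the scripts above.

```python
import os
from contextlib import closing


def connection_kwargs(endpoint: str, username: str, token: str,
                      public: bool = True) -> dict:
    """Build the keyword arguments for hive.connect from the endpoint type."""
    return {
        "host": endpoint,
        "port": 443 if public else 80,
        "scheme": "https" if public else "http",
        "username": username,
        "password": token,
    }


def kwargs_from_env(env=os.environ) -> dict:
    """Read the connection settings from (hypothetical) environment variables."""
    public = env.get("STS_PUBLIC", "true").lower() == "true"
    return connection_kwargs(env["STS_ENDPOINT"], env["STS_USERNAME"],
                             env["STS_TOKEN"], public)


def list_databases(kwargs: dict) -> list:
    """Connect, run 'show databases', and close the cursor and connection."""
    from pyhive import hive  # imported here so the helpers above work without PyHive

    with closing(hive.connect(**kwargs)) as conn, closing(conn.cursor()) as cursor:
        cursor.execute("show databases")
        return cursor.fetchall()
```

Calling list_databases(kwargs_from_env()) then behaves like the scripts above, and closing the connection as well as the cursor releases the HTTP session when the query finishes.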

Connect to Spark Thrift Server by using Java

Add the following Maven dependencies to your pom.xml file:

    <dependencies>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-common</artifactId>
            <version>3.0.0</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hive</groupId>
            <artifactId>hive-jdbc</artifactId>
            <version>2.1.0</version>
        </dependency>
    </dependencies>

Note: The built-in Hive version in Serverless Spark is 2.x. Therefore, only hive-jdbc 2.x versions are supported.

Write Java code to connect to Spark Thrift Server.

The following sample Java code connects to Spark Thrift Server and queries the database list.

Connect by using a public endpoint

    import java.sql.Connection;
    import java.sql.DriverManager;
    import java.sql.ResultSet;
    import java.sql.ResultSetMetaData;
    import org.apache.hive.jdbc.HiveStatement;

    public class Main {
        public static void main(String[] args) throws Exception {
            // Replace <endpoint> and <token> with your actual information.
            String url = "jdbc:hive2://<endpoint>:443/;transportMode=http;httpPath=cliservice/token/<token>";
            Class.forName("org.apache.hive.jdbc.HiveDriver");
            Connection conn = DriverManager.getConnection(url);
            HiveStatement stmt = (HiveStatement) conn.createStatement();

            String sql = "show databases";
            System.out.println("Running " + sql);
            ResultSet res = stmt.executeQuery(sql);

            // Collect the column names so that each row prints as name='value' pairs.
            ResultSetMetaData md = res.getMetaData();
            String[] columns = new String[md.getColumnCount()];
            for (int i = 0; i < columns.length; i++) {
                columns[i] = md.getColumnName(i + 1);
            }
            while (res.next()) {
                System.out.print("Row " + res.getRow() + "=[");
                for (int i = 0; i < columns.length; i++) {
                    if (i != 0) {
                        System.out.print(", ");
                    }
                    System.out.print(columns[i] + "='" + res.getObject(i + 1) + "'");
                }
                System.out.println("]");
            }

            conn.close();
        }
    }

Connect by using an internal same-region endpoint

    import java.sql.Connection;
    import java.sql.DriverManager;
    import java.sql.ResultSet;
    import java.sql.ResultSetMetaData;
    import org.apache.hive.jdbc.HiveStatement;

    public class Main {
        public static void main(String[] args) throws Exception {
            // Replace <endpoint> and <token> with your actual information.
            String url = "jdbc:hive2://<endpoint>:80/;transportMode=http;httpPath=cliservice/token/<token>";
            Class.forName("org.apache.hive.jdbc.HiveDriver");
            Connection conn = DriverManager.getConnection(url);
            HiveStatement stmt = (HiveStatement) conn.createStatement();

            String sql = "show databases";
            System.out.println("Running " + sql);
            ResultSet res = stmt.executeQuery(sql);

            // Collect the column names so that each row prints as name='value' pairs.
            ResultSetMetaData md = res.getMetaData();
            String[] columns = new String[md.getColumnCount()];
            for (int i = 0; i < columns.length; i++) {
                columns[i] = md.getColumnName(i + 1);
            }
            while (res.next()) {
                System.out.print("Row " + res.getRow() + "=[");
                for (int i = 0; i < columns.length; i++) {
                    if (i != 0) {
                        System.out.print(", ");
                    }
                    System.out.print(columns[i] + "='" + res.getObject(i + 1) + "'");
                }
                System.out.println("]");
            }

            conn.close();
        }
    }

Connect to Spark Thrift Server by using Spark Beeline

If you are using a self-built cluster, you need to first go to the bin directory of Spark, and then use beeline to connect to Spark Thrift Server.

Connect by using a public endpoint

    cd /opt/apps/SPARK3/spark-3.4.2-hadoop3.2-1.0.3/bin/
    ./beeline -u "jdbc:hive2://<endpoint>:443/;transportMode=http;httpPath=cliservice/token/<token>"

Connect by using an internal same-region endpoint

    cd /opt/apps/SPARK3/spark-3.4.2-hadoop3.2-1.0.3/bin/
    ./beeline -u "jdbc:hive2://<endpoint>:80/;transportMode=http;httpPath=cliservice/token/<token>"

Note: The code uses /opt/apps/SPARK3/spark-3.4.2-hadoop3.2-1.0.3 as an example of the Spark installation path in an EMR on ECS cluster. You need to adjust the path based on the actual Spark installation path on your client. If you are not sure about the Spark installation path, you can use the env | grep SPARK_HOME command to find it.

If you are using an EMR on ECS cluster, you can directly use the Spark Beeline client to connect to Spark Thrift Server.

Connect by using a public endpoint

    spark-beeline -u "jdbc:hive2://<endpoint>:443/;transportMode=http;httpPath=cliservice/token/<token>"

Connect by using an internal same-region endpoint

spark-beeline -u "jdbc:hive2://<endpoint>:80/;transportMode=http;httpPath=cliservice/token/<token>"
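The Beeline commands above carry the token inside the JDBC URL, so the token can end up in shell history, wrapper scripts, or logs. If you print or log such commands from your own tooling, you might mask the token first. The following is a small sketch of one way to do that. The function name is hypothetical, and the pattern only covers the cliservice/token/<token> layout used in this topic.

```python
import re

# Matches the token segment that follows "cliservice/token/" in the JDBC URL.
_TOKEN_IN_PATH = re.compile(r"(cliservice/token/)[^;/\s\"']+")


def redact_token(text: str) -> str:
    """Replace the token in a JDBC URL or Beeline command line with '***'."""
    return _TOKEN_IN_PATH.sub(r"\1***", text)
```

Text that does not contain the cliservice/token/ segment is returned unchanged.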

When you use Hive Beeline to connect to Serverless Spark Thrift Server, if the following error occurs, it is usually because the version of Hive Beeline is incompatible with Spark Thrift Server. Therefore, we recommend that you use Hive Beeline 2.x.

24/08/22 15:09:11 [main]: ERROR jdbc.HiveConnection: Error opening session

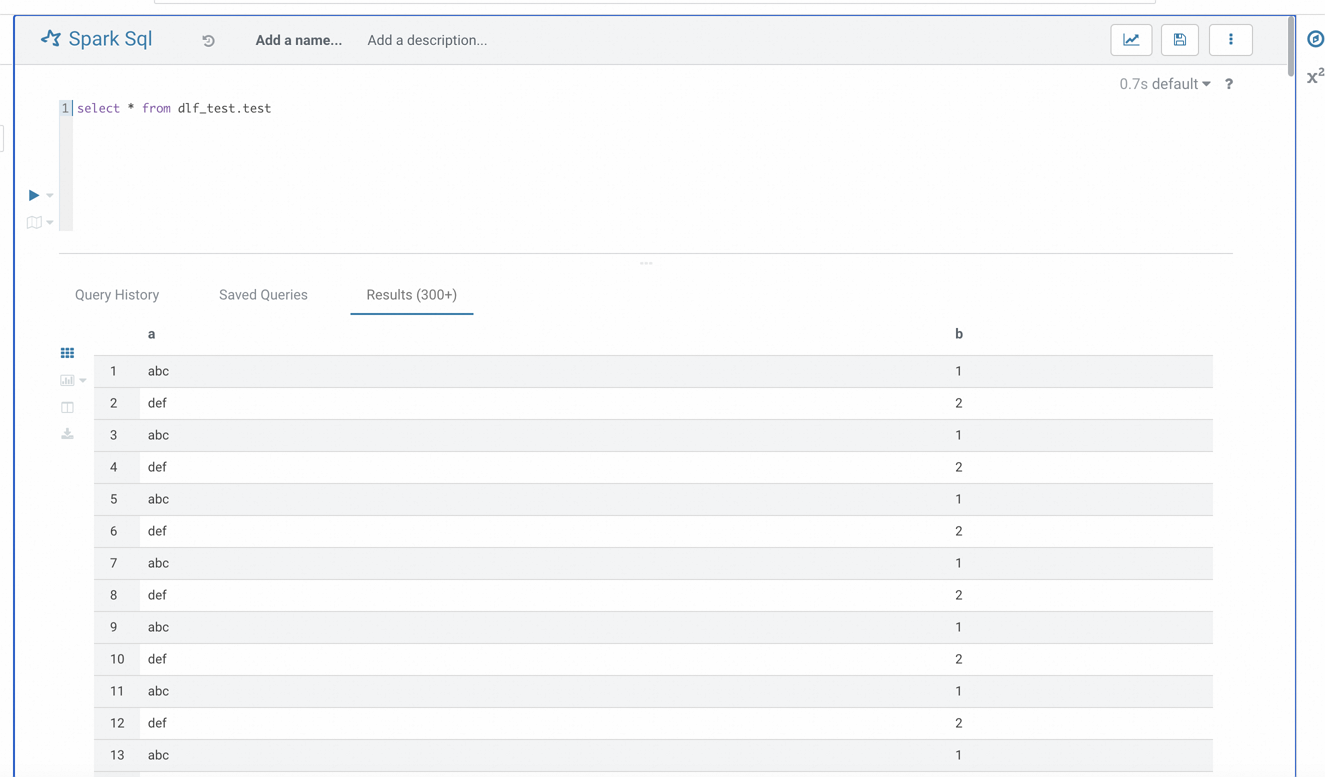

org.apache.thrift.transport.TTransportException: HTTP Response code: 404Connect to Spark Thrift Server by configuring Apache Superset

Apache Superset is a modern data exploration and visualization platform with a rich set of chart forms, from simple line charts to highly detailed geospatial charts. For more information about Superset, see Superset.

Install dependencies.

Make sure that you have installed the thrift package version 0.20.0. If not, you can install it by using the following command:

    pip install thrift==0.20.0

Start Superset and go to the Superset interface.

For more information about how to start Superset, see Superset documentation.

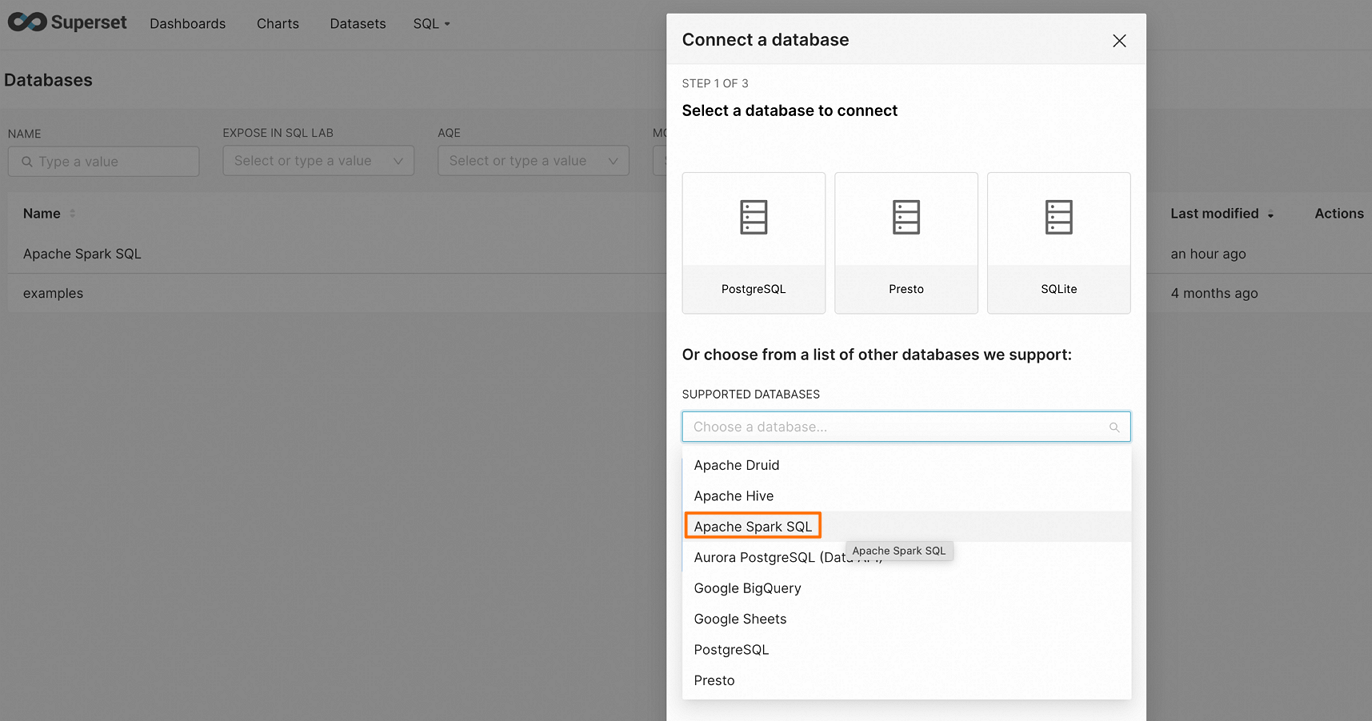

Click DATABASE in the upper-right corner of the page to go to the Connect a database page.

On the Connect a database page, select Apache Spark SQL.

Enter the connection string and configure the related data source parameters.

Connect by using a public endpoint

    hive+https://<username>:<token>@<endpoint>:443/<db_name>

Connect by using an internal same-region endpoint

    hive+http://<username>:<token>@<endpoint>:80/<db_name>

Click FINISH to confirm successful connection and verification.
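The connection string above is a SQLAlchemy-style URL, in which reserved characters such as spaces, "/", or "@" in the user name or password must be percent-encoded. The following sketch builds the string with that encoding applied. The function name is hypothetical, and whether your token name or token actually contains such characters is an assumption to check against your own values.

```python
from urllib.parse import quote


def build_sqlalchemy_url(endpoint: str, username: str, token: str,
                         db_name: str = "", public: bool = True) -> str:
    """Build the hive+https or hive+http connection string with encoded credentials."""
    scheme = "hive+https" if public else "hive+http"
    port = 443 if public else 80
    user = quote(username, safe="")   # token name, percent-encoded
    secret = quote(token, safe="")    # token value, percent-encoded
    return f"{scheme}://{user}:{secret}@{endpoint}:{port}/{db_name}"
```

Credentials that contain only unreserved characters pass through unchanged, so the result then matches the hand-written strings above.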

Connect to Spark Thrift Server by configuring Hue

Hue is a popular open-source web interface for interacting with the Hadoop ecosystem. For more information about Hue, see Hue official documentation.

Install dependencies.

Make sure that you have installed the thrift package version 0.20.0. If not, you can install it by using the following command:

    pip install thrift==0.20.0

Add a Spark SQL connection string to the Hue configuration file.

Find the Hue configuration file (usually located at /etc/hue/hue.conf) and add the following content to the file:

Connect by using a public endpoint

    [[[sparksql]]]
    name = Spark Sql
    interface=sqlalchemy
    options='{"url": "hive+https://<username>:<token>@<endpoint>:443/"}'

Connect by using an internal same-region endpoint

    [[[sparksql]]]
    name = Spark Sql
    interface=sqlalchemy
    options='{"url": "hive+http://<username>:<token>@<endpoint>:80/"}'

Restart Hue.

After you modify the configuration, you need to run the following command to restart the Hue service for the changes to take effect:

    sudo service hue restart

Verify the connection.

After Hue is successfully restarted, access the Hue interface and find the Spark SQL option. If the configuration is correct, you should be able to successfully connect to Spark Thrift Server and execute SQL queries.

Connect to Spark Thrift Server by using DataGrip

DataGrip is a database management environment for developers, designed to facilitate the querying, creation, and management of databases. Databases can run locally, on a server, or in the cloud. For more information about DataGrip, see DataGrip.

Install DataGrip. For more information, see Install DataGrip.

The DataGrip version used in this example is 2025.1.2.

Open the DataGrip client and go to the DataGrip interface.

Create a project.

Click

and select .


In the New Project dialog box, enter a project name, such as Spark, and click OK.

Click the icon in the Database Explorer menu bar. Select .

In the Data Sources and Drivers dialog box, configure the following parameters:

Tab

Parameter

Description

General

Name

The custom connection name. For example, spark_thrift_server.

Authentication

The authentication method. In this example, No auth is selected.

In a production environment, select User & Password to ensure that only authorized users can submit SQL tasks, which improves system security.

Driver

Click Apache Spark, and then click Go to Driver to confirm that the Driver version is ver. 1.2.2.

Note: Because the current Serverless Spark engine version is 3.x, the Driver version must be 1.2.2 to ensure system stability and feature compatibility.

URL

The URL for connecting to Spark Thrift Server. Based on your network environment (public or internal), you can choose the appropriate connection method.

Connect by using a public endpoint

    jdbc:hive2://<endpoint>:443/;transportMode=http;httpPath=cliservice/token/<token>

Connect by using an internal same-region endpoint

jdbc:hive2://<endpoint>:80/;transportMode=http;httpPath=cliservice/token/<token>

Options

Run keep-alive query

This parameter is optional. Select this parameter to prevent automatic disconnection due to timeout.

Click Test Connection to confirm that the data source is configured successfully.

Click OK to complete the configuration.

Use DataGrip to manage Spark Thrift Server.

After DataGrip successfully connects to Spark Thrift Server, you can perform data development. For more information, see DataGrip Help.

For example, under the created connection, you can right-click a target table and select . Then, you can write and run SQL scripts in the SQL editor on the right to view table data information.

Connect Redash to Spark Thrift Server

Redash is an open-source BI tool that provides web-based database querying and data visualization capabilities.

Install Redash. For installation instructions, see Setting up a Redash Instance.

Install dependencies. Make sure the thrift package of version 0.20.0 is installed. If it is not installed, run the following command:

    pip install thrift==0.20.0

Log on to Redash.

In the left navigation pane, click Settings. On the Data Sources tab, click +New Data Source.

In the dialog box, configure the following parameters, and then click Create.

Parameter | Description | |

Type Selection | Data source type. Select Hive (HTTP). | |

Configuration | Name | Enter a custom name for the data source. |

Host | Enter the endpoint of your Spark Thrift Server. You can find the public or internal endpoint on the Overview tab. | |

Port | Use 443 for access via a public domain name, or 80 for an internal domain name. | |

HTTP Path | Set to | |

Username | Enter any username such as | |

Password | Enter the Token value you created. | |

HTTP Scheme | Enter | |

At the top of the page, choose . You can write SQL statements in the editor.

View the jobs run by using a specific session

You can view the jobs that are run by using a specific session on the Sessions page. Procedure:

On the Sessions page, click the name of the desired session.

On the page that appears, click the Execution Records tab.

On the Execution Records tab, you can view the details of a job, such as the run ID and start time, and click the link in the Spark UI column to access the Spark UI.