Stream processing is central to real-time data analytics. EMR Serverless Spark is a scalable platform that simplifies real-time data processing by removing the need to manage servers. This topic describes how to submit a PySpark streaming job by using EMR Serverless Spark and shows how the platform is used and maintained for stream processing.

Prerequisites

A workspace has been created. For more information, see Create a workspace.

Procedure

Step 1: Create a real-time Dataflow cluster and generate messages

On the EMR on ECS page, create a Dataflow cluster that includes the Kafka service. For more information, see Create a cluster.

Log on to the master node of the EMR cluster. For more information, see Log on to a cluster.

Run the following command to switch the directory.

    cd /var/log/emr/taihao_exporter

Run the following command to create a topic.

    # Create a topic named taihaometrics with 10 partitions and a replication factor of 2.
    kafka-topics.sh --partitions 10 --replication-factor 2 --bootstrap-server core-1-1:9092 --topic taihaometrics --create

Run the following command to send messages.

    # Use kafka-console-producer to send messages to the taihaometrics topic.
    tail -f metrics.log | kafka-console-producer.sh --broker-list core-1-1:9092 --topic taihaometrics

Step 2: Create a network connection

Go to the Network Connection page.

In the navigation pane on the left of the EMR console, go to the Spark page.

On the Spark page, click the name of the target workspace.

On the EMR Serverless Spark page, click Network Connection in the navigation pane on the left.

On the Network Connection page, click Create Network Connection.

In the Create Network Connection dialog box, configure the following parameters and click OK.

Parameter

Description

Name

Enter a name for the new connection. For example, connection_to_emr_kafka.

VPC

Select the same VPC as the EMR cluster.

If no VPC is available, click Create VPC to go to the VPC console and create one. For more information, see Create and manage a VPC.

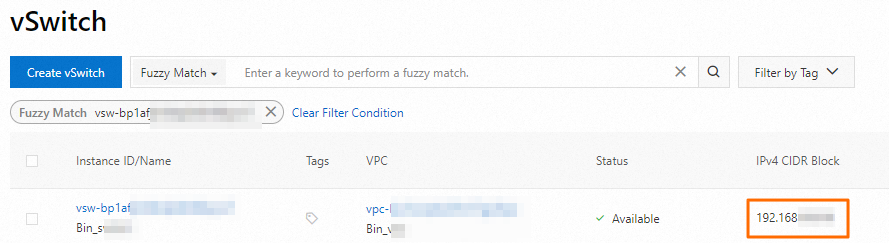

vSwitch

Select the same vSwitch that is in the same VPC as the EMR cluster.

If no vSwitch is available in the current zone, go to the VPC console and create one. For more information, see Create and manage a vSwitch.

When the Status changes to Succeeded, the network connection is created.

Step 3: Add a security group rule for the EMR cluster

Obtain the CIDR block of the vSwitch for the cluster node.

On the Nodes page, click a node group name to view the associated vSwitch information. Then, log on to the VPC console and obtain the CIDR block of the vSwitch on the vSwitch page.

Add a security group rule.

On the EMR on ECS page, click the ID of the target cluster.

On the Basic Information page, click the link next to Cluster Security Group.

On the Security Group Details page, in the Rules section, click Add Rule. Configure the following parameters and click OK.

| Parameter | Description |
| --- | --- |
| Source | Enter the CIDR block of the vSwitch that you obtained in the previous step. Important: To prevent security risks from external attacks, do not set the authorization object to 0.0.0.0/0. |
| Destination (Current Instance) | Enter port 9092. |
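Before you enter the Source value, it can help to check it locally. The following is a minimal sketch that uses only the Python standard library. The function names and the sample CIDR block and addresses are hypothetical and chosen for illustration; replace them with the CIDR block of your own vSwitch.

```python
import ipaddress


def check_source_cidr(cidr):
    """Parse a security group source CIDR and reject the open range 0.0.0.0/0."""
    network = ipaddress.ip_network(cidr, strict=True)
    if network.prefixlen == 0:
        raise ValueError("0.0.0.0/0 exposes port 9092 to every address; use the vSwitch CIDR block")
    return network


def covers(cidr, ip):
    """Return True if the address falls inside the validated CIDR block."""
    return ipaddress.ip_address(ip) in check_source_cidr(cidr)


# Hypothetical values for illustration only.
vswitch_cidr = "192.168.0.0/24"
print(covers(vswitch_cidr, "192.168.0.15"))
print(covers(vswitch_cidr, "192.168.1.15"))
```

The check only validates the value that you plan to enter. It does not query or modify the security group.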

Step 4: Upload JAR packages to OSS

Decompress the kafka.zip file and upload all the JAR packages to Object Storage Service (OSS). For more information, see Simple upload.

Step 5: Upload the resource file

On the EMR Serverless Spark page, click Artifacts in the navigation pane on the left.

On the Artifacts page, click Upload File.

In the Upload File dialog box, click the upload area and select the pyspark_ss_demo.py file.
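The content of pyspark_ss_demo.py is not shown in this topic. As a rough guide, a script of this kind might look like the following sketch. It assumes that the job receives the internal IP address of the Kafka broker as its only argument (the Execution Parameters value in Step 6), that it reads the taihaometrics topic on port 9092, and that it prints each message to the console sink. The function names, the application name, and the choice of sink are assumptions made for illustration, not the contents of the actual file.

```python
import ipaddress
import sys

TOPIC = "taihaometrics"  # topic created in Step 1
KAFKA_PORT = 9092        # port opened in Step 3


def kafka_source_options(broker_ip, topic=TOPIC, port=KAFKA_PORT):
    """Build the options for Spark's Kafka source from the broker IP address."""
    ipaddress.ip_address(broker_ip)  # raises ValueError on a malformed address
    return {
        "kafka.bootstrap.servers": f"{broker_ip}:{port}",
        "subscribe": topic,
        "startingOffsets": "latest",
    }


def run(broker_ip):
    """Read the topic as a stream and print each message (assumed console sink)."""
    # Imported here so that the helper above works without PySpark installed.
    from pyspark.sql import SparkSession

    spark = SparkSession.builder.appName("pyspark_ss_demo_sketch").getOrCreate()
    messages = (
        spark.readStream.format("kafka")
        .options(**kafka_source_options(broker_ip))
        .load()
        .selectExpr("CAST(value AS STRING) AS line")
    )
    query = (
        messages.writeStream.format("console")
        .outputMode("append")
        .option("truncate", "false")
        .start()
    )
    query.awaitTermination()


def main(argv):
    """Expect one argument: the internal IP address of the Kafka broker."""
    try:
        kafka_source_options(argv[1])  # validate the argument before starting Spark
    except (IndexError, ValueError):
        print("usage: pyspark_ss_demo.py <kafka-broker-ip>", file=sys.stderr)
        return 2
    run(argv[1])
    return 0


if __name__ == "__main__":
    main(sys.argv)
```

The Kafka source is not bundled with Spark by default, which is why the JAR packages from Step 4 are passed to the job through spark.jars.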

Step 6: Create and start a streaming job

On the EMR Serverless Spark page, click Development in the navigation pane on the left.

On the Development tab, click the icon for creating a job.

Enter a name, select the job type for a PySpark streaming job, and then click OK.

In the new development tab, configure the following parameters, leave the other parameters at their default values, and then click Save.

| Parameter | Description |
| --- | --- |
| Main Python Resource | Select the pyspark_ss_demo.py file that you uploaded on the Artifacts page in the previous step. |
| Engine Version | The Spark version. For more information, see Engine versions. |
| Execution Parameters | The internal IP address of the core-1-1 node of the EMR cluster. You can view the address under the Core node group on the Nodes page of the EMR cluster. |
| Spark Configurations | The Spark configuration information. The following code provides an example. |

    spark.jars oss://path/to/commons-pool2-2.11.1.jar,oss://path/to/kafka-clients-2.8.1.jar,oss://path/to/spark-sql-kafka-0-10_2.12-3.3.1.jar,oss://path/to/spark-token-provider-kafka-0-10_2.12-3.3.1.jar
    spark.emr.serverless.network.service.name connection_to_emr_kafka

Note:

spark.jars: Specifies the paths of external JAR packages to load at runtime. Replace the paths with the actual paths of all JAR packages that you uploaded in Step 4.

spark.emr.serverless.network.service.name: Specifies the name of the network connection. Replace the name with the name of the network connection that you created in Step 2.

Click Publish.

In the Publish dialog box, click OK.

Start the streaming job.

Click Go To O&M.

Click Start.
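The Spark Configurations value in this step is a comma-separated list of JAR paths plus the network connection name, which is easy to mistype by hand. The following sketch shows one way to assemble it from a list. The helper name build_spark_conf is hypothetical, and the oss://path/to/ prefix is the same placeholder used in the example configuration, so replace it with your actual OSS paths.

```python
def build_spark_conf(jar_paths, network_connection):
    """Return Spark configuration text in 'key value' form, one setting per line."""
    if not jar_paths:
        raise ValueError("at least one JAR path is required")
    for path in jar_paths:
        if not path.startswith("oss://") or not path.endswith(".jar"):
            raise ValueError(f"unexpected JAR path: {path}")
    return "\n".join([
        "spark.jars " + ",".join(jar_paths),
        "spark.emr.serverless.network.service.name " + network_connection,
    ])


# Placeholder paths taken from the example configuration in this topic.
jars = [
    "oss://path/to/commons-pool2-2.11.1.jar",
    "oss://path/to/kafka-clients-2.8.1.jar",
    "oss://path/to/spark-sql-kafka-0-10_2.12-3.3.1.jar",
    "oss://path/to/spark-token-provider-kafka-0-10_2.12-3.3.1.jar",
]
print(build_spark_conf(jars, "connection_to_emr_kafka"))
```

The printed text can be pasted into the Spark Configurations field. The path check is a simple guard against typos and does not verify that the objects exist in OSS.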

Step 7: View logs

Click the Log Exploration tab.

On the Log Exploration tab, you can view information about the application's execution and the returned results.

References

For more information about the PySpark development process, see PySpark development quick start.