JindoTable provides a native engine that accelerates Spark, Hive, and Presto queries on files in the ORC or Parquet format. This topic describes how to enable query acceleration based on the native engine for each of these engines.

Prerequisites

An E-MapReduce (EMR) cluster is created, and a file in the ORC or Parquet format is stored in JindoFS or Object Storage Service (OSS). For more information about how to create a cluster, see Create a cluster.

Limits

Binary files are not supported.

Partitioned tables whose values of partition key columns are stored in files are not supported.

EMR clusters of V5.X.X or a later version are not supported.

spark.read.schema(userDefinedSchema) is not supported.

Data of the DATE type must be in the YYYY-MM-DD format and range from 1400-01-01 to 9999-12-31.

If a table contains two columns that have the same column name with different letter cases, such as NAME and name, queries on the columns cannot be accelerated.
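
Two of the limits above can be checked before a query is submitted. The following is a minimal sketch in Python, assuming that the column names and DATE values are already available as plain strings. The helper names find_case_conflicts and is_supported_date are hypothetical and are not part of JindoTable.

```python
import re
from collections import defaultdict
from datetime import date

# Bounds taken from the limit on DATE values stated above.
MIN_DATE = date(1400, 1, 1)
MAX_DATE = date(9999, 12, 31)
DATE_PATTERN = re.compile(r"[0-9]{4}-[0-9]{2}-[0-9]{2}")


def find_case_conflicts(column_names):
    """Return groups of column names that differ only by letter case."""
    groups = defaultdict(list)
    for name in column_names:
        groups[name.lower()].append(name)
    return [names for names in groups.values() if len(set(names)) > 1]


def is_supported_date(value):
    """Return True if value is a valid YYYY-MM-DD date within the supported range."""
    if not DATE_PATTERN.fullmatch(value):
        return False
    try:
        year, month, day = (int(part) for part in value.split("-"))
        parsed = date(year, month, day)
    except ValueError:
        # Not a real calendar date, for example 2021-02-30.
        return False
    return MIN_DATE <= parsed <= MAX_DATE
```

A table with the columns NAME and name would be reported by find_case_conflicts, and a value such as 1399-12-31 would be rejected by is_supported_date.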

The following table lists the supported Spark, Hive, and Presto engines and the file formats supported by each engine.

| Engine | ORC | Parquet |
| --- | --- | --- |
| Spark2 | Supported | Supported |
| Spark3 | Supported | Supported |
| Presto | Supported | Supported |
| Hive2 | Not supported | Supported |
| Hive3 | Not supported | Supported |

The following table lists the supported Spark, Hive, and Presto engines and the file systems supported by each engine.

| Engine | OSS | JindoFS | HDFS |
| --- | --- | --- | --- |
| Spark2 | Supported | Supported | Supported |
| Presto | Supported | Supported | Supported |
| Hive2 | Supported | Supported | Not supported |
| Hive3 | Supported | Supported | Not supported |
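
The two tables above can be combined into a single lookup. The following is a minimal sketch in Python that transcribes the tables as they appear in this topic. The function name can_accelerate is hypothetical. Combinations that the tables do not list, such as file systems for Spark3, are reported as unknown (None) rather than guessed.

```python
# Support matrices transcribed from the two tables above.
FORMAT_SUPPORT = {
    "Spark2": {"ORC": True, "Parquet": True},
    "Spark3": {"ORC": True, "Parquet": True},
    "Presto": {"ORC": True, "Parquet": True},
    "Hive2": {"ORC": False, "Parquet": True},
    "Hive3": {"ORC": False, "Parquet": True},
}
FILE_SYSTEM_SUPPORT = {
    "Spark2": {"OSS": True, "JindoFS": True, "HDFS": True},
    "Presto": {"OSS": True, "JindoFS": True, "HDFS": True},
    "Hive2": {"OSS": True, "JindoFS": True, "HDFS": False},
    "Hive3": {"OSS": True, "JindoFS": True, "HDFS": False},
}


def can_accelerate(engine, file_format, file_system):
    """Return True or False per the tables, or None if an entry is not listed."""
    format_ok = FORMAT_SUPPORT.get(engine, {}).get(file_format)
    fs_ok = FILE_SYSTEM_SUPPORT.get(engine, {}).get(file_system)
    if format_ok is False or fs_ok is False:
        return False  # at least one table explicitly says "Not supported"
    if format_ok is None or fs_ok is None:
        return None  # the combination is not listed in the tables
    return True
```

For example, can_accelerate("Hive2", "ORC", "OSS") is False because ORC is not supported for Hive2, even though OSS is.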

Improve the performance of Spark

Enable query acceleration for ORC or Parquet files in JindoTable.

Note: Query acceleration consumes off-heap memory. We recommend that you add --conf spark.executor.memoryOverhead=4g to a Spark task to request additional resources for query acceleration. If you use Spark to read data from an ORC or Parquet file, you must use the DataFrame API or Spark SQL.

Global configuration

Go to the Cluster Overview page of your cluster.

Log on to the Alibaba Cloud EMR console.

In the top navigation bar, select the region where your cluster resides. The region of a cluster cannot be changed after the cluster is created.

Click the Cluster Management tab.

On the Cluster Management page, find your cluster and click Details in the Actions column.

Modify the related parameter.

In the left-side navigation pane of the page that appears, choose .

On the Spark service page, click the Configure tab.

Search for the spark.sql.extensions parameter and change its value to io.delta.sql.DeltaSparkSessionExtension,com.aliyun.emr.sql.JindoTableExtension.

Save the configuration.

Click Save in the upper-right corner.

In the Confirm Changes dialog box, specify Description and click OK.

Restart ThriftServer.

Choose in the upper-right corner.

In the Cluster Activities dialog box, specify Description and click OK.

In the Confirm message, click OK.

Job-level configuration

When you configure a Spark Shell or Spark SQL job, add the following configuration to the code:

--conf spark.sql.extensions=io.delta.sql.DeltaSparkSessionExtension,com.aliyun.emr.sql.JindoTableExtension

For more information about how to configure a Spark job, see Configure a Spark Shell job or Configure a Spark SQL job.
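
When jobs are submitted from a script, the job-level flags can be assembled in one place. The following is a minimal sketch in Python that combines the spark.sql.extensions setting with the spark.executor.memoryOverhead recommendation from the note earlier in this section. The function name spark_acceleration_args is hypothetical, and spark-sql is used only as an example launcher. The sketch prints the command and does not run it.

```python
import shlex

# Extension classes taken from this topic.
JINDO_EXTENSIONS = (
    "io.delta.sql.DeltaSparkSessionExtension,"
    "com.aliyun.emr.sql.JindoTableExtension"
)


def spark_acceleration_args(memory_overhead="4g"):
    """Return the --conf flags that enable query acceleration for one Spark job."""
    return [
        "--conf", f"spark.sql.extensions={JINDO_EXTENSIONS}",
        "--conf", f"spark.executor.memoryOverhead={memory_overhead}",
    ]


# Prepend the launcher and print the resulting command line for review.
command = ["spark-sql", *spark_acceleration_args()]
print(shlex.join(command))
```

Keeping the flags in a list avoids quoting mistakes when the command is later passed to a process launcher.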

Check whether query acceleration is enabled.

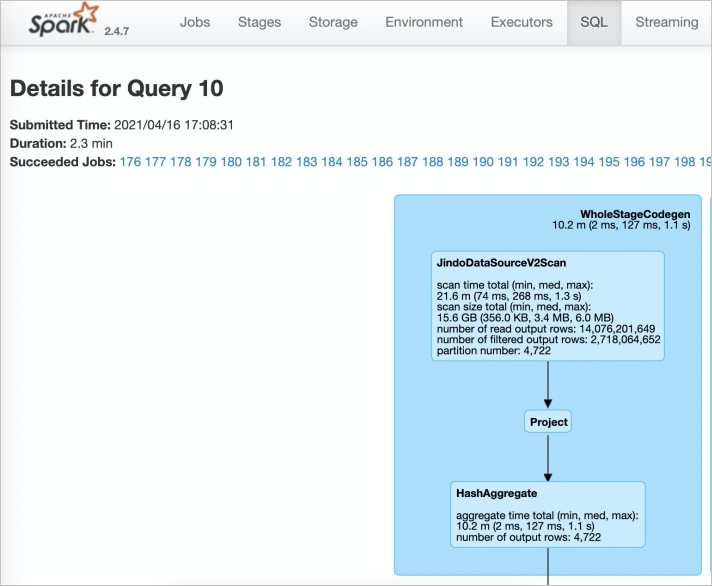

Access the web UI of Spark History Server.

On the SQL tab of Spark, view information about the related task.

If JindoDataSourceV2Scan appears, query acceleration is enabled. Otherwise, check the configurations in Step 1.
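
The same check can be scripted when the plan text is available outside the web UI. The following is a minimal sketch in Python, assuming that the query plan has been captured as a string and that the scan node appears in it under the same name as in the web UI. This topic does not confirm that assumption, and uses_native_scan is a hypothetical helper.

```python
# Node name that this topic says to look for on the SQL tab.
ACCELERATED_SCAN_NODE = "JindoDataSourceV2Scan"


def uses_native_scan(plan_text):
    """Return True if the captured plan text mentions the JindoTable scan node."""
    return ACCELERATED_SCAN_NODE in plan_text
```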

Improve the performance of Presto

Presto has high query concurrency and uses off-heap memory for query acceleration. To use the query acceleration feature, make sure that the memory is greater than 10 GB.

By default, the Presto service has a built-in catalog named hive-acc that uses the native engine. To enable query acceleration, specify hive-acc as the catalog.

Example:

presto --server emr-header-1:9090 --catalog hive-acc --schema default

When you use this feature in Presto, complex data types such as MAP, STRUCT, and ARRAY are not supported.

Improve the performance of Hive

If you have high requirements for job stability, we recommend that you do not enable query acceleration.

You can use one of the following methods to improve the performance of Hive:

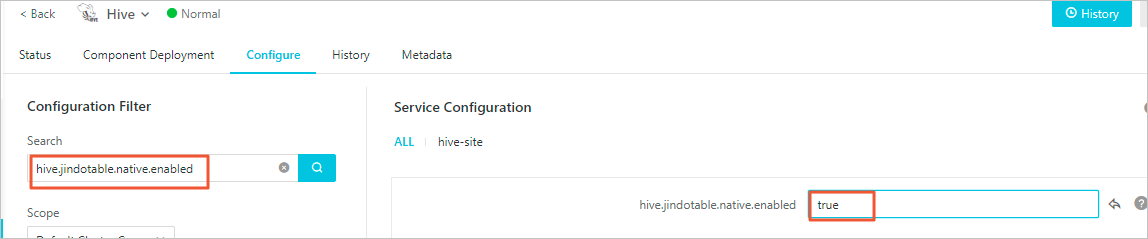

Use the EMR console

On the Configure tab of the Hive service page, search for the hive.jindotable.native.enabled parameter and change its value to true. Then, save the configuration and restart the Hive service. This method is suitable for Hive on MapReduce and Hive on Tez jobs.

Use the CLI

Set hive.jindotable.native.enabled to true in the CLI to enable query acceleration. In EMR V3.35.0 and later, the query acceleration plug-in for Parquet files is deployed in JindoTable by default, so you can configure this parameter directly.

set hive.jindotable.native.enabled=true;

When you use this feature in Hive, complex data types such as MAP, STRUCT, and ARRAY are not supported.
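
Because complex data types are not supported in Presto or Hive, it can help to scan a table schema for them before you enable the feature. The following is a minimal sketch in Python, assuming that the schema is available as a mapping of column names to type strings such as array<string>. The input shape and the helper names is_complex_type and unsupported_columns are hypothetical, and matching on the type name prefix is a simplification.

```python
# Complex data types named in this topic, matched case-insensitively.
COMPLEX_TYPE_PREFIXES = ("map", "struct", "array")


def is_complex_type(type_name):
    """Return True for MAP, STRUCT, or ARRAY type strings such as 'array<int>'."""
    return type_name.strip().lower().startswith(COMPLEX_TYPE_PREFIXES)


def unsupported_columns(schema):
    """Return the names of columns whose declared type is a complex type.

    schema: mapping of column name to type string (assumed input shape).
    """
    return [name for name, type_name in schema.items() if is_complex_type(type_name)]
```

For a schema with the columns id (bigint) and tags (array<string>), unsupported_columns returns only tags.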