If a local disk in an E-MapReduce (EMR) cluster is damaged, you will receive a notification about the damage when you use the EMR cluster. Local disks are used by instances of the d-series and i-series instance families. This topic describes how to replace a damaged local disk in an EMR cluster.

Precautions

We recommend that you remove the abnormal node and add a normal node instead of replacing the disk in place. This prevents your business from being affected for a long period of time.

After a disk is replaced, data on the disk is lost. You must make sure that the data has replicas and is backed up before you replace the disk.

To replace a damaged local disk, you need to stop services, unmount the damaged disk, mount a new disk, and restart services. In most cases, the entire replacement process can be completed within five business days. Before you perform the operations described in this topic, evaluate the disk usage of the related services and the cluster load, and determine whether the cluster can handle the business traffic after the services are stopped.

Procedure

You can log on to the Elastic Compute Service (ECS) console to view the event details, including the instance ID, instance status, damaged disk ID, event progress, and information about related operations.

Step 1: Obtain information about the damaged disk

Log on over SSH to the node on which the damaged disk is deployed. For more information, see Log on to a cluster.

Run the following command to view the block device information:

    lsblk

Information similar to the following output is returned:

    NAME   MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
    vdd    254:48   0  5.4T  0 disk /mnt/disk3
    vdb    254:16   0  5.4T  0 disk /mnt/disk1
    vde    254:64   0  5.4T  0 disk /mnt/disk4
    vdc    254:32   0  5.4T  0 disk /mnt/disk2
    vda    254:0    0  120G  0 disk
    └─vda1 254:1    0  120G  0 part /

Run the following command to view the disk information:

    sudo fdisk -l

Information similar to the following output is returned:

    Disk /dev/vdd: 5905.6 GB, 5905580032000 bytes, 11534336000 sectors
    Units = sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes

Record the device name and mount point of the damaged disk from the output of the preceding two commands. In subsequent commands, replace $device_name and $mount_path with these values, or set them as shell variables. In this example, the device name is /dev/vdd and the mount point is /mnt/disk3, so you can run device_name=/dev/vdd and mount_path=/mnt/disk3.
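If you want to script this lookup, the following minimal sketch maps a known mount point to its device name. The helper name device_for_mount is hypothetical and is not an EMR tool. The sketch assumes that you already know the mount point of the damaged disk and that the disk is still mounted.

```shell
#!/bin/sh
# Hypothetical helper (not an EMR tool): print the /dev path of the block
# device that is mounted at the given mount point.
# Expects lsblk output on stdin in raw, headerless form, as produced by:
#   lsblk -rno NAME,MOUNTPOINT
# Raw mode (-r) prints one device per line without tree characters, so
# column 1 is the kernel device name and column 2 is the mount point
# (empty if the device is not mounted).
# Returns a non-zero status if no device is mounted at that path.
device_for_mount() {
  awk -v mp="$1" '
    $2 == mp { print "/dev/" $1; found = 1; exit }
    END { exit !found }
  '
}

# Example usage on the node:
#   mount_path=/mnt/disk3
#   device_name=$(lsblk -rno NAME,MOUNTPOINT | device_for_mount "$mount_path")
```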

Step 2: Isolate the damaged local disk

Stop the services that read data from or write data to the damaged disk.

Find the desired cluster in the EMR console and go to the Services tab of the cluster. On the Services tab, find the services that read data from or write data to the damaged local disk and stop them. In most cases, you need to stop storage services such as Hadoop Distributed File System (HDFS), HBase, and Kudu. To stop a service, perform the following operations: On the Services tab, find the desired service, move the pointer over the icon, and select Stop. In the dialog box that appears, configure the Execution Reason parameter and click OK. In the Confirm message, click OK.

To view the processes that occupy the disk, run the following command on the node on which the damaged local disk is deployed. Then, stop the related services in the EMR console.

    sudo fuser -mv "$device_name"

Run the following command to deny read and write operations on the damaged local disk at the application layer:

    sudo chmod 000 "$mount_path"

Run the following commands to unmount the damaged local disk. The chmod command is run again because, after the disk is unmounted, it applies to the now-empty mount directory:

    sudo umount "$device_name"
    sudo chmod 000 "$mount_path"

Important: The device name of the local disk changes after the disk is repaired. If you do not unmount the local disk in this step, services may read data from or write data to the wrong disk after the repaired disk is used as a new disk.

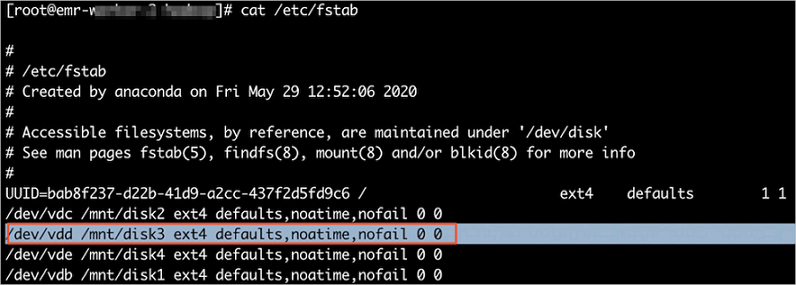
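The isolation commands in this step can be chained in a small script. The following is a sketch under stated assumptions. The function names run and isolate_disk and the DRY_RUN variable are hypothetical. The script refuses to continue while fuser still reports processes on the disk, so that you stop the related services in the EMR console first.

```shell
#!/bin/sh
# Hypothetical helpers that chain the isolation commands from this step.
# Set DRY_RUN=1 to print each command instead of running it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

isolate_disk() {
  local device=$1 mount=$2
  # fuser -m exits with status 0 if at least one process accesses the
  # file system on the device; -v prints a verbose list of those processes.
  if [ "${DRY_RUN:-0}" != 1 ] && sudo fuser -mv "$device"; then
    echo "Processes still use $device. Stop the related services in the EMR console first." >&2
    return 1
  fi
  run sudo chmod 000 "$mount"      # deny reads and writes at the application layer
  run sudo umount "$device" || return 1
  run sudo chmod 000 "$mount"      # now applies to the empty mount directory
}

# Example usage (preview only): DRY_RUN=1 isolate_disk /dev/vdd /mnt/disk3
```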

Update the fstab file.

Back up the fstab file in the /etc directory.

Delete records related to the damaged local disk from the fstab file in the /etc directory.

In this example, the records related to the /dev/vdd disk are deleted.
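The fstab update can also be scripted. This is a minimal sketch. The function name remove_fstab_entry and the timestamped backup file name are hypothetical. The sketch matches entries by device name or by mount point, because an entry may refer to the disk by UUID= or LABEL= instead of /dev/vdd.

```shell
#!/bin/sh
# Hypothetical helper: back up an fstab file, then remove the entries that
# belong to the damaged disk. An entry matches if its device field equals the
# device name or its mount-point field equals the mount path.
# Run it as root when you pass /etc/fstab (the default).
remove_fstab_entry() {
  local device=$1 mount=$2 fstab=${3:-/etc/fstab}
  local backup
  backup="$fstab.bak.$(date +%Y%m%d%H%M%S)"
  cp -p "$fstab" "$backup" || return 1
  # Keep comments and blank lines unchanged; drop matching entries.
  # If awk fails, restore the original file from the backup.
  awk -v dev="$device" -v mp="$mount" '
    NF == 0 || $1 ~ /^#/ { print; next }
    $1 == dev || $2 == mp { next }
    { print }
  ' "$backup" > "$fstab" || { cp -p "$backup" "$fstab"; return 1; }
  echo "Backup saved to $backup"
}

# Example usage (as root): remove_fstab_entry /dev/vdd /mnt/disk3
```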

Start the services that you stopped.

On the Services tab of the desired cluster, find the services that you stopped earlier in this step and start them. To start a service, perform the following operations: On the Services tab, find the desired service, move the pointer over the icon, and select Start. In the dialog box that appears, configure the Execution Reason parameter and click OK. In the Confirm message, click OK.


Step 3: Replace the disk

Repair the disk in the ECS console. For more information, see Isolate damaged local disks in the ECS console.

Step 4: Mount the new disk

After the disk is repaired, you need to mount the disk as a new disk.

Run the following command to normalize device names:

    device_name=$(echo "$device_name" | sed 's/x//1')

The preceding command normalizes device names by removing the first letter x from the name. For example, /dev/xvdk is changed to /dev/vdk.
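The sed command above removes the first x wherever it appears in the name. For example, a name such as /dev/vdx would become /dev/vd. The following stricter variant is a sketch that assumes the only names that need normalizing start with /dev/xvd. The function name normalize_device is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: strip the "x" only when the name starts with /dev/xvd
# (for example /dev/xvdk -> /dev/vdk) and leave every other name unchanged.
normalize_device() {
  case $1 in
    /dev/xvd*) printf '%s\n' "/dev/${1#/dev/x}" ;;
    *)         printf '%s\n' "$1" ;;
  esac
}

# Example usage: device_name=$(normalize_device "$device_name")
```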

Run the following command to create a mount directory:

    sudo mkdir -p "$mount_path"

Run the following command to mount the disk to the mount directory:

    sudo mount "$device_name" "$mount_path" && sudo chmod 755 "$mount_path"

If the disk fails to be mounted, perform the following steps:

Run the following fdisk command to initialize the disk. The two empty lines accept the default first and last sectors:

    sudo fdisk "$device_name" << EOF
    n
    p
    1


    wq
    EOF

Run the following command to remount the disk:

    sudo mount "$device_name" "$mount_path" && sudo chmod 755 "$mount_path"
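The mount steps above, including the fallback, can be chained as follows. This is a sketch. The function names run and mount_new_disk and the DRY_RUN variable are hypothetical. The fdisk input reuses the answers n, p, 1, and wq from the step above. It also adds two empty lines, on the assumption that fdisk prompts for the first and last sectors and that the defaults are acceptable.

```shell
#!/bin/sh
# Hypothetical helpers that chain the mount steps from this section.
# Set DRY_RUN=1 to print each command instead of running it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

mount_new_disk() {
  local device=$1 mount=$2
  run sudo mkdir -p "$mount" || return 1
  # First attempt: mount the repaired disk directly.
  if run sudo mount "$device" "$mount"; then
    run sudo chmod 755 "$mount"
    return
  fi
  echo "Mounting $device failed. Running fdisk and retrying." >&2
  # Answers: n (new partition), p (primary), 1 (partition number),
  # two empty lines (assumed: accept default first and last sectors), wq.
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "+ sudo fdisk $device"
  else
    printf 'n\np\n1\n\n\nwq\n' | sudo fdisk "$device"
  fi
  run sudo mount "$device" "$mount" && run sudo chmod 755 "$mount"
}

# Example usage (preview only): DRY_RUN=1 mount_new_disk /dev/vdd /mnt/disk3
```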

Run the following command to modify the fstab file:

    echo "$device_name $mount_path $fstype defaults,noatime,nofail 0 0" | sudo tee -a /etc/fstab

Note: You can run the which mkfs.ext4 command to check whether ext4 is available. If ext4 is available, replace $fstype with ext4. Otherwise, replace $fstype with ext3.

Create a script file and select code based on the cluster type.

Hadoop cluster in the original data lake scenario:

Other clusters:

Run the following commands to run the script file, create the service directories, and then delete the script file. $file_path specifies the path in which the script file is stored.

    chmod +x "$file_path"
    sudo "$file_path" -p "$mount_path"
    rm "$file_path"

Use the new disk.

Restart the services that are running on the node in the EMR console and check whether the disk works properly.
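To check whether the disk works properly, you can run a quick check on the node. The following sketch is hypothetical: the function name verify_disk and the probe file name are illustrative, and the sketch assumes that findmnt from util-linux is available. It checks three things: that the mount path is mounted from the expected device, that fstab contains an entry for the mount path, and that a probe file can be written and read back.

```shell
#!/bin/sh
# Hypothetical post-replacement check for one data disk. It verifies that
# (1) the mount path is an active mount of the expected device,
# (2) an fstab entry exists for the mount path, and
# (3) a small probe file can be written, read back, and removed.
verify_disk() {
  local device=$1 mount=$2 fstab=${3:-/etc/fstab}
  local src probe
  # --mountpoint matches only if the path itself is a mount point.
  src=$(findmnt -no SOURCE --mountpoint "$mount" 2>/dev/null)
  if [ "$src" != "$device" ]; then
    echo "FAIL: $mount is not mounted from $device (found: ${src:-nothing})" >&2
    return 1
  fi
  if ! awk -v mp="$mount" 'NF && $1 !~ /^#/ && $2 == mp { found = 1 } END { exit !found }' "$fstab"; then
    echo "FAIL: no fstab entry for $mount" >&2
    return 1
  fi
  probe="$mount/.disk_probe.$$"
  if ! { echo ok | sudo tee "$probe" >/dev/null && [ "$(sudo cat "$probe")" = ok ]; }; then
    echo "FAIL: cannot write to $mount" >&2
    sudo rm -f "$probe"
    return 1
  fi
  sudo rm -f "$probe"
  echo "OK: $device is mounted at $mount and writable"
}

# Example usage: verify_disk /dev/vdd /mnt/disk3
```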