Network Accelerator (NetACC) is a user-mode network acceleration library that leverages the low latency and high throughput of elastic Remote Direct Memory Access (eRDMA) and provides compatible socket interfaces to accelerate existing TCP applications. For TCP applications that require high communication performance, low latency, and high throughput, NetACC lets the applications run over eRDMA to accelerate their network communication without any changes to application code.

NetACC is in public preview.

Scenarios

NetACC is suitable for high-network-overhead scenarios.

High packet rate scenarios: scenarios in which the packets per second (PPS) rate is high, especially when a large number of small packets are sent and received. In these scenarios, for example when Redis processes requests, NetACC can reduce CPU overhead and improve system throughput.

Network latency-sensitive scenarios: eRDMA provides lower network latency than TCP to accelerate network responses.

Repeated creation of short-lived connections: NetACC can accelerate the process of establishing secondary connections to reduce the connection creation time and improve system performance.

Install NetACC

Installation methods

Use the eRDMA driver to install NetACC

When you install the eRDMA driver, NetACC is automatically installed. For information about how to install the eRDMA driver, see the Install the eRDMA driver on an ECS instance section of the "Use eRDMA" topic.

Separately install NetACC

Run the following command to separately install a specific version of NetACC or temporarily use NetACC on an Elastic Compute Service (ECS) instance:

sudo curl -fsSL https://netacc-release.oss-cn-hangzhou.aliyuncs.com/release/netacc_download_install.sh | sudo sh

Configuration file and optimized parameters

After you install NetACC, the /etc/netacc.conf configuration file is automatically generated. To optimize NetACC performance, you can configure the following parameters in the configuration file based on your business requirements:

NACC_SOR_MSG_SIZE: the size of a buffer.

NACC_RDMA_MR_MIN_INC_SIZE: the size of the first memory region (MR) registered by RDMA.

NACC_RDMA_MR_MAX_INC_SIZE: the maximum size of an MR registered by RDMA.

NACC_SOR_CONN_PER_QP: the number of connections per queue pair (QP).

NACC_SOR_IO_THREADS: the number of NetACC I/O threads.

The following sample code provides an example of how to configure the parameters in the configuration file:

Use NetACC

To use NetACC in applications, run the netacc_run command or configure the LD_PRELOAD environment variable. Before you use NetACC, read the Considerations section of this topic.

Run the netacc_run command

netacc_run is a tool that loads NetACC when an application starts. Add netacc_run before <COMMAND>, the command that starts your application, to start the application and load NetACC at the same time: netacc_run <COMMAND>.

netacc_run provides multiple parameters for tuning NetACC performance. For example, -t specifies the number of I/O threads and -p specifies the number of connections per QP. Parameters that you specify in the netacc_run command override the corresponding parameters in the configuration file.
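Because netacc_run parameters take precedence, it can help to see what /etc/netacc.conf currently sets before you choose values. The following POSIX shell function is a minimal sketch for that. Its name, netacc_conf_get, is hypothetical, and it assumes that the file stores each parameter as a KEY=VALUE line; confirm this against the file generated on your instance before relying on it.

```shell
# Hypothetical helper: print the value that /etc/netacc.conf sets for a
# parameter, for example NACC_SOR_IO_THREADS.
# ASSUMPTION: parameters are stored as KEY=VALUE lines; "#" lines are
# comments, and any other line (such as a section header) is ignored.
# Returns 1 if the parameter is not set in the file.
netacc_conf_get() {
    local key conf
    key=$1
    conf=${2:-/etc/netacc.conf}
    awk -v k="$key" '
        /^[ \t]*#/ { next }                      # skip comments
        {
            line = $0
            sub(/^[ \t]+/, "", line)             # drop leading blanks
            if (index(line, k) != 1) next        # line must start with KEY
            rest = substr(line, length(k) + 1)
            if (rest !~ /^[ \t]*=/) next         # ...immediately followed by "="
            sub(/^[ \t]*=[ \t]*/, "", rest)      # strip "=" and blanks
            sub(/[ \t]+$/, "", rest)             # strip trailing blanks
            val = rest; found = 1                # the last match wins
        }
        END { if (found) print val; exit (found ? 0 : 1) }
    ' "$conf"
}

# Example:
#   netacc_conf_get NACC_SOR_CONN_PER_QP
```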

Examples:

In the following examples, Redis applications are used. Add netacc_run before a Redis command to start a Redis application and load NetACC at the same time.

Run the following command to start Redis and load NetACC at the same time:

netacc_run redis-server

Run the following command to start the redis-benchmark utility and load NetACC at the same time:

netacc_run redis-benchmark

Configure the LD_PRELOAD environment variable

The LD_PRELOAD environment variable specifies the shared libraries that are preloaded when a program starts. To automate the loading of NetACC, specify NetACC in the value of the LD_PRELOAD environment variable in the relevant script.

Run the following command to query the location of the NetACC dynamic library:

ldconfig -p | grep netacc

The following command output is returned.

Run the following command to set the LD_PRELOAD environment variable to the NetACC library and start your application:

LD_PRELOAD=/lib64/libnetacc-preload.so your_application

Replace your_application with the command that starts the application that you want to accelerate. If the ldconfig query returns a different path, use that path instead of /lib64/libnetacc-preload.so.

Examples: In the following examples, Redis applications are used.

Run the following command to start Redis and load NetACC at the same time:

LD_PRELOAD=/lib64/libnetacc-preload.so redis-server

Run the following command to start the redis-benchmark utility and load NetACC at the same time:

LD_PRELOAD=/lib64/libnetacc-preload.so redis-benchmark
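Setting LD_PRELOAD as shown above replaces any value that is already set, which drops other libraries that the application may rely on being preloaded. The following sketch, in which all function names are hypothetical, finds the library path in ldconfig -p output and prepends it to an existing LD_PRELOAD value instead of overwriting it. Placing NetACC first is a choice made for this sketch; adjust the order if another preloaded library must take precedence.

```shell
# Hypothetical helpers for loading NetACC through LD_PRELOAD.

# Print the path of libnetacc-preload.so from "ldconfig -p" output on stdin.
# Returns 1 if the library is not listed.
netacc_lib_path() {
    awk '$1 ~ /^libnetacc-preload\.so/ && $NF ~ /^\// { print $NF; found = 1; exit }
         END { exit (found ? 0 : 1) }'
}

# Print an LD_PRELOAD value that puts the NetACC library in front of any
# colon-separated entries that are already set, instead of replacing them.
netacc_preload_value() {
    local lib existing
    lib=$1
    existing=$2
    case ":$existing:" in
        *":$lib:"*) printf '%s\n' "$existing" ;;          # already present
        *) printf '%s\n' "$lib${existing:+:$existing}" ;;
    esac
}

# Run a command with NetACC preloaded, for example:
#   run_with_netacc redis-server
run_with_netacc() {
    local lib
    lib=$(ldconfig -p | netacc_lib_path) || { echo "NetACC library not found" >&2; return 1; }
    LD_PRELOAD=$(netacc_preload_value "$lib" "${LD_PRELOAD:-}") "$@"
}
```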

Monitor NetACC

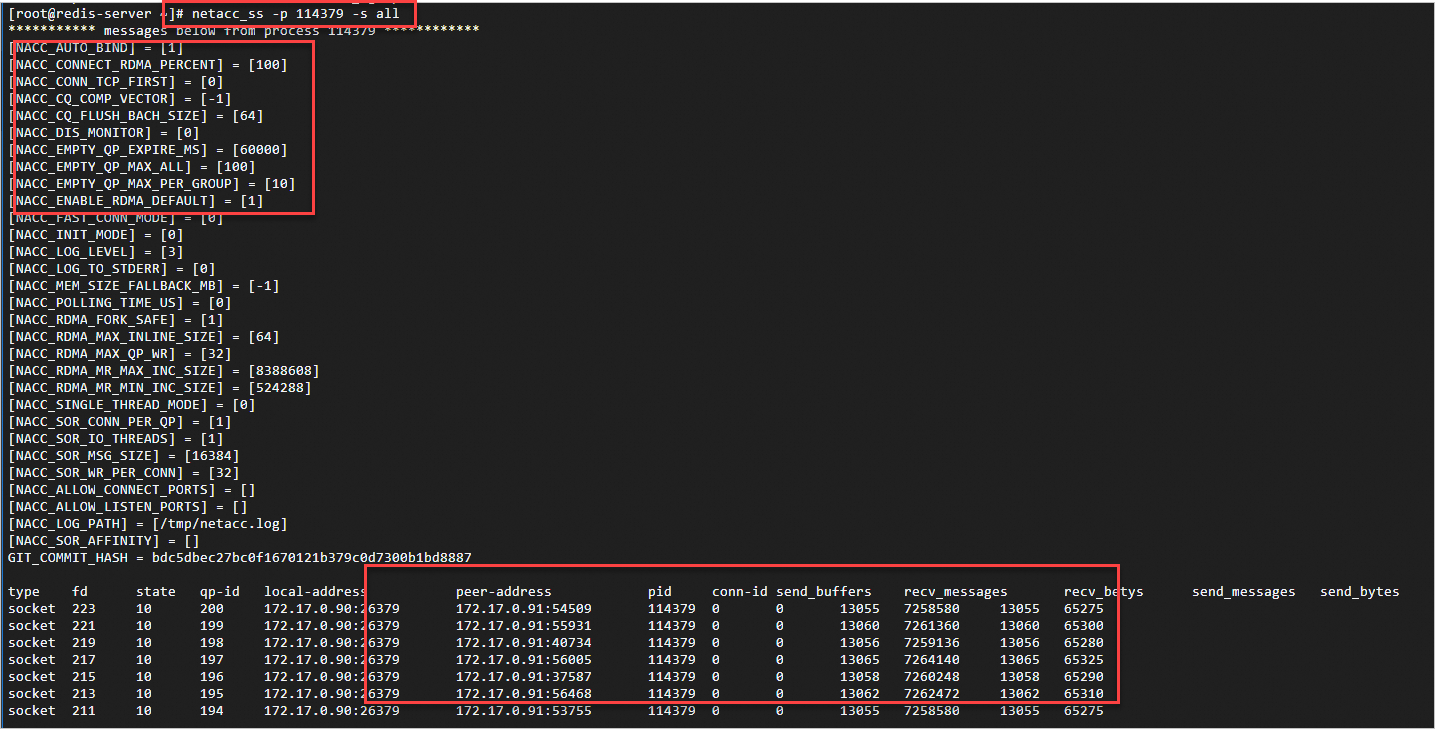

netacc_ss is the NetACC monitoring tool. Run the netacc_ss command to monitor the status of data sent and received by the processes of NetACC-accelerated TCP applications. You can run the command on both the server and the client.

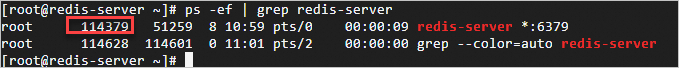

Run the following command to query the status of data sent and received by the processes of NetACC-accelerated TCP applications:

netacc_ss -s all -p <Process ID>

To query the ID of a process, run the ps -ef | grep <Process name> command.

Considerations

When you use NetACC, only TCP connections that are established over elastic network interfaces (ENIs) for which the eRDMA Interface (ERI) feature is enabled are converted into RDMA connections. Other connections remain TCP connections.

Note: If either end of the communication does not use an ERI-enabled ENI, NetACC cannot establish an RDMA connection and falls back to TCP.

When you use NetACC, processes cannot pass RDMA socket file descriptors to each other through kernel inter-process communication (IPC) mechanisms, such as SCM_RIGHTS messages over UNIX domain sockets.

Note: RDMA connections are established based on specific QPs, and QPs cannot be directly shared among processes. Therefore, RDMA connections cannot be shared among processes.

NetACC does not support IPv6. To prevent IPv6-related conflicts or errors when you use NetACC, we recommend that you run the sysctl net.ipv6.conf.all.disable_ipv6=1 command to disable IPv6.

NetACC does not support hot updates. Updating NetACC while it is in use may cause unexpected errors. Before you update NetACC, stop the processes of NetACC-accelerated applications.
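The sysctl command recommended above for disabling IPv6 changes only the running kernel, so the setting is lost after a restart. A minimal sketch for persisting it, assuming a distribution that applies files under /etc/sysctl.d/ at boot (as systemd-based images such as Alibaba Cloud Linux 3 do), prints a drop-in file that can be written with tee. The function name and the file name are illustrative.

```shell
# Hypothetical helper: print a sysctl drop-in that keeps IPv6 disabled
# after a reboot. "sysctl net.ipv6.conf.all.disable_ipv6=1" changes only
# the running kernel; files under /etc/sysctl.d/ are applied at boot.
netacc_ipv6_sysctl_conf() {
    printf '%s\n' \
        '# Disable IPv6 because NetACC does not support it' \
        'net.ipv6.conf.all.disable_ipv6 = 1'
}

# Example (the file name is illustrative):
#   netacc_ipv6_sysctl_conf | sudo tee /etc/sysctl.d/99-netacc-ipv6.conf
#   sudo sysctl --system    # reload all sysctl configuration files now
```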

NetACC does not support specific TCP socket options, such as SO_REUSEPORT, SO_ZEROCOPY, and TCP_INQ.

NetACC depends on the GNU C Library (glibc) and cannot run in a non-glibc environment, such as a Go (Golang) program, whose runtime typically issues system calls directly instead of through glibc.

Before you use NetACC, we recommend that you run the ulimit -l unlimited command to remove the limit on the amount of memory that a process can lock in physical memory.

Note: If the ulimit -l value is too small, RDMA may fail to register MRs because the size of the MRs exceeds the maximum amount of memory that can be locked.

When a NetACC-accelerated application listens on a TCP port for communication, NetACC also listens on an RDMA port (TCP port plus 20000) to achieve efficient data transfers in an RDMA network environment.

Note: If the RDMA port is occupied or falls outside the valid port range (the highest port number is 65535, so this happens for TCP ports above 45535), the connection cannot be established. Plan port allocation to prevent port conflicts.

In NetACC, a child process that a parent process creates by using the fork() system call does not inherit the socket connections that the parent process has already established.

Note: This may cause communication failures. In this case, the child process must establish a new socket connection.

By default, the QP reuse feature is disabled in NetACC.

To enable the QP reuse feature and allow multiple connections to share a QP, set the number of connections per QP to a value greater than 1, either with the NACC_SOR_CONN_PER_QP parameter in the NetACC configuration file or with the -p parameter of the netacc_run command. QP reuse reduces the number of QPs, the management overhead, and resource consumption, which improves overall communication efficiency, especially when a large number of concurrent connections exist.

After you enable the QP reuse feature, multiple RDMA connections may share a local port number. In RDMA, port numbers identify QPs, but not connections. If multiple connections share a QP, the connections also share a local port number.

Note: If applications require different local port numbers, for example to provide different services or listen on different ports, disable the QP reuse feature. When QP reuse is enabled, connections cannot be distinguished by local port number, which may cause port conflicts.

Use NetACC in Redis applications

Benefits of NetACC for Redis applications

Improved system throughput

NetACC is suitable for scenarios in which Redis processes a large number of requests per second. NetACC reduces CPU overheads and improves system throughput.

Accelerated network responses

NetACC leverages the low latency benefit of eRDMA to significantly accelerate network responses to Redis applications.

NetACC used in Redis performance benchmarks

redis-benchmark is the benchmark utility that is built into Redis. It measures the performance of a Redis server under various workloads by simulating multiple clients that concurrently send requests to the server.

Test scenario

Use the redis-benchmark utility, with and without NetACC, to send 5 million SET requests with 512-byte values from 100 concurrent clients on 4 threads.

Preparations

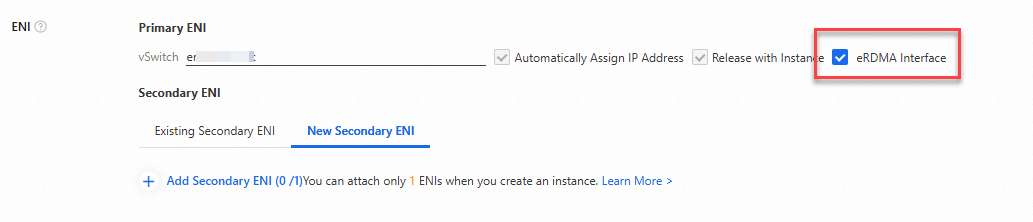

Create two eRDMA-capable ECS instances on the instance buy page in the ECS console. Select Auto-install eRDMA Driver and then select eRDMA Interface to enable the ERI feature for the primary ENI. Use one ECS instance as the Redis server and the other ECS instance as a Redis client.

The ECS instances have the following configurations:

Image: Alibaba Cloud Linux 3

Instance type: ecs.g8ae.4xlarge

Private IP address of the primary ENI: 172.17.0.90 for the server and 172.17.0.91 for the client. In the following benchmark, replace the IP addresses with the actual values for your instances.

Note: In this topic, the ERI feature is enabled for the primary ENIs of the ECS instances to perform the benchmark. 172.17.0.90 is the private IP address of the primary ENI of the ECS instance that serves as the Redis server.

If you enable the ERI feature for the secondary ENIs of the ECS instances, replace the preceding IP addresses with the private IP addresses of the secondary ENIs. For more information, see the Bind ERIs to an ECS instance section of the "Use eRDMA" topic.

Procedure

Connect to the ECS instance that serves as the Redis server and the ECS instance that serves as a Redis client.

For more information, see Use Workbench to log on to a Linux instance over SSH.

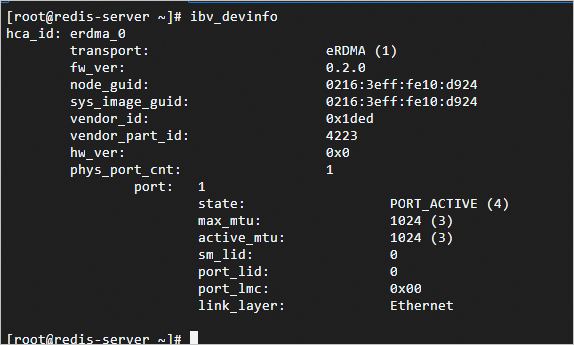

Check whether the eRDMA driver is installed on the ECS instances.

After the ECS instances start, run the ibv_devinfo command to check whether the eRDMA driver is installed.

The following command output indicates that the eRDMA driver is installed.

The following command output indicates that the eRDMA driver is being installed. Wait for a few minutes until the eRDMA driver is installed, and try again later.

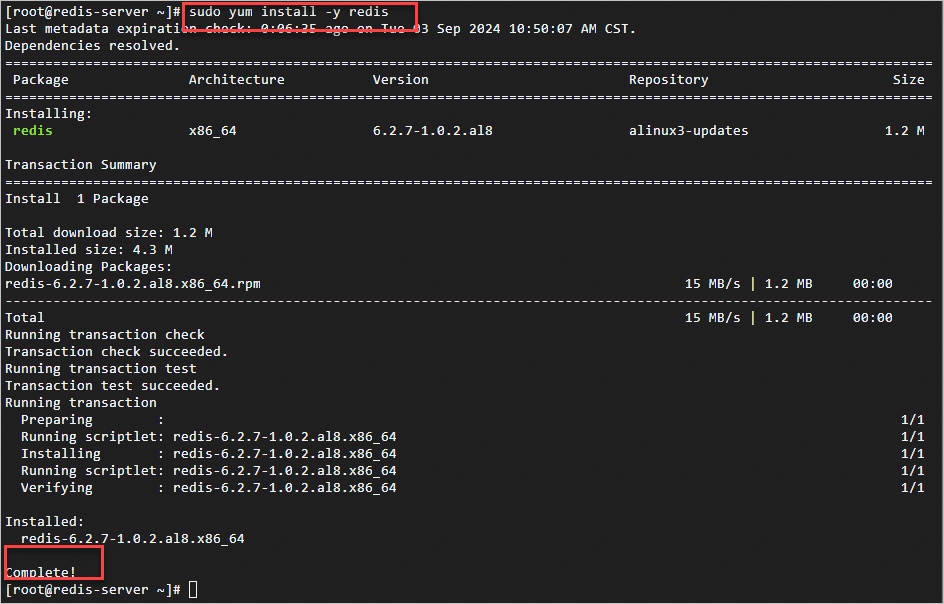

Run the following command on the ECS instances to install Redis:

sudo yum install -y redis

The following command output indicates that Redis is installed.

Use the redis-benchmark utility to benchmark the performance of Redis.

Perform a benchmark by using NetACC

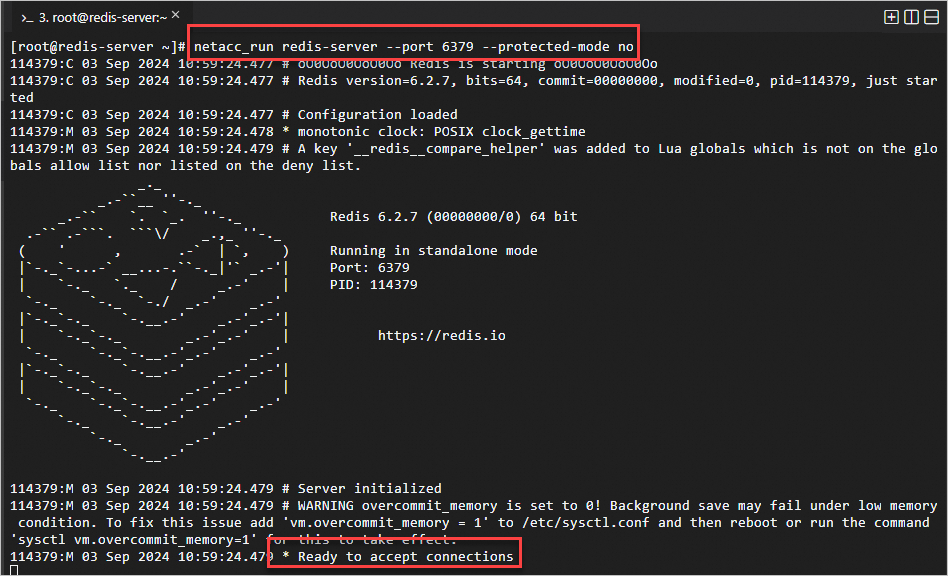

Run the following command on the ECS instance that serves as the Redis server to start Redis and accelerate Redis by using NetACC:

netacc_run redis-server --port 6379 --protected-mode no

Note: Replace 6379 with the number of the actual port on which you want to start Redis. For more information, see the Common parameters used together with the redis-server command section of this topic.

In this example, the netacc_run command is used to load NetACC. For other methods of using NetACC, see the Use NetACC section of this topic.

The following command output indicates that Redis is started as expected.

Run the following command on the ECS instance that serves as a Redis client to start redis-benchmark and accelerate redis-benchmark by using NetACC:

netacc_run redis-benchmark -h 172.17.0.90 -p 6379 -c 100 -n 5000000 -r 10000 --threads 4 -d 512 -t set

Note: Replace 172.17.0.90 with the actual IP address of the Redis server and 6379 with the number of the actual port on which Redis is started. For more information, see the Common command parameters used with redis-benchmark section of this topic.

The benchmark results may vary based on the network conditions. The benchmark data provided in this topic is only for reference.

The Summary section at the end of the preceding benchmark result indicates that approximately 770,000 requests can be processed per second. For information about the metrics in Redis benchmark results, see the Common metrics in redis-benchmark benchmark results section of this topic.

Perform a benchmark without NetACC

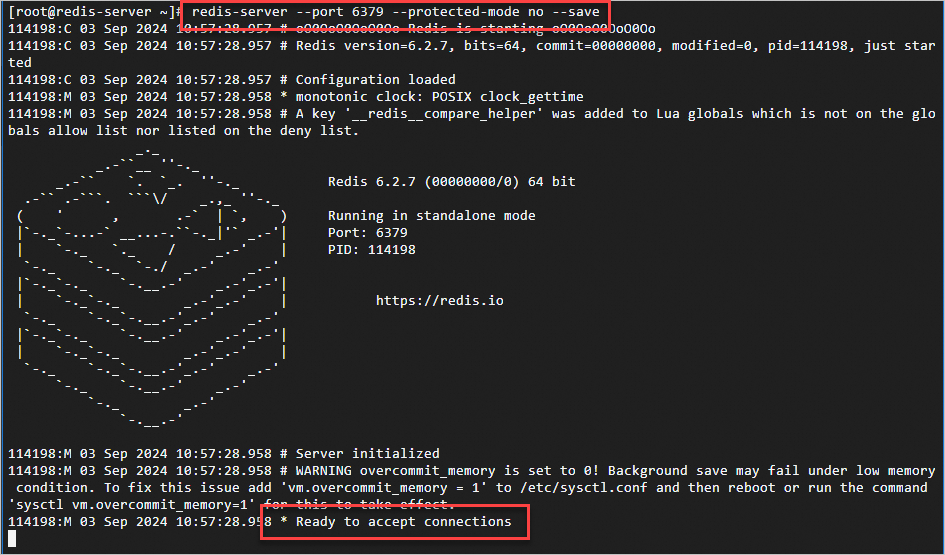

Run the following command on the ECS instance that serves as the Redis server to start Redis:

redis-server --port 6379 --protected-mode no --save

Note: Replace 6379 with the number of the actual port on which you want to start Redis. For more information, see the Common parameters used together with the redis-server command section of this topic.

The following command output indicates that Redis is started as expected.

Run the following command on the ECS instance that serves as a Redis client to start redis-benchmark:

redis-benchmark -h 172.17.0.90 -p 6379 -c 100 -n 5000000 -r 10000 --threads 4 -d 512 -t set

Note: Replace 172.17.0.90 with the actual IP address of the Redis server and 6379 with the number of the actual port on which Redis is started. For more information, see the Common command parameters used with redis-benchmark section of this topic.

The benchmark results may vary based on the network conditions. The benchmark data provided in this topic is only for reference.

The Summary section at the end of the preceding benchmark result indicates that approximately 330,000 requests can be processed per second. For information about the metrics in Redis benchmark results, see the Common metrics in redis-benchmark benchmark results section of this topic.