DeepSpeed is an open source deep learning optimization software suite that provides distributed training and model optimization to accelerate model training. This topic describes how to use Arena to quickly submit DeepSpeed distributed training jobs and how to use TensorBoard to visualize training jobs.


Prerequisites

A Container Service for Kubernetes (ACK) cluster that contains GPU-accelerated nodes is created. For more information, see Create an ACK cluster with GPU-accelerated nodes.

The cloud-native AI suite is installed, and the version of its ack-arena component (the Arena CLI for machine learning) is 0.9.10 or later. For more information, see Deploy the cloud-native AI suite.

The Arena client is installed and the Arena version is 0.9.10 or later. For more information, see Configure the Arena client.

Persistent volume claims (PVCs) are created in the cluster. For more information, see Configure a shared NAS volume.
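Both prerequisites require Arena 0.9.10 or later. Versions must be compared numerically: as plain strings, "0.9.9" sorts after "0.9.10". The following Python sketch compares a version string against that minimum. How you obtain the string is an assumption. For example, you might copy it from the output of `arena version`. Adapt the input to what your client actually prints.

```python
from __future__ import annotations

import re

MIN_ARENA_VERSION = (0, 9, 10)  # Minimum version stated in the prerequisites.


def parse_version(text: str) -> tuple[int, int, int]:
    """Extract the first MAJOR.MINOR.PATCH triple from a version string.

    Accepts forms such as "0.9.10", "v0.9.10", or "Version: 0.9.10".
    """
    match = re.search(r"(\d+)\.(\d+)\.(\d+)", text)
    if match is None:
        raise ValueError(f"no version number found in {text!r}")
    return tuple(int(part) for part in match.groups())


def meets_minimum(text: str, minimum: tuple[int, int, int] = MIN_ARENA_VERSION) -> bool:
    """Return True if the parsed version is at least `minimum` (tuple comparison)."""
    return parse_version(text) >= minimum


print(meets_minimum("v0.9.10"))          # True
print(meets_minimum("0.9.9"))            # False
print(meets_minimum("Version: 0.10.1"))  # True
```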

Usage notes

In this example, DeepSpeed is used to train a masked language model. The sample code and dataset have been downloaded and packaged into the sample image registry.cn-beijing.aliyuncs.com/acs/deepspeed:hello-deepspeed. If you do not want to use the sample image, you can download the source code and dataset from GitHub. Then store the dataset on a shared NAS volume that is mounted through a persistent volume (PV) and a PVC. In this example, a PVC named training-data claims a shared NAS volume, which stores the training results.

If you want to use a custom image, use one of the following methods:

Refer to the Dockerfile and install OpenSSH in your base image.

Note: Training jobs are accessed only through password-free SSH. Therefore, you must keep the related Secrets confidential in production environments.

Use the DeepSpeed base image provided by ACK.

registry.cn-beijing.aliyuncs.com/acs/deepspeed:v072_base

Procedure

Run the following command to query available GPU resources in the cluster:

```shell
arena top node
```

Expected output:

```
NAME                     IPADDRESS     ROLE    STATUS  GPU(Total)  GPU(Allocated)
cn-beijing.192.1xx.x.xx  192.1xx.x.xx  <none>  Ready   0           0
cn-beijing.192.1xx.x.xx  192.1xx.x.xx  <none>  Ready   0           0
cn-beijing.192.1xx.x.xx  192.1xx.x.xx  <none>  Ready   1           0
cn-beijing.192.1xx.x.xx  192.1xx.x.xx  <none>  Ready   1           0
cn-beijing.192.1xx.x.xx  192.1xx.x.xx  <none>  Ready   1           0
---------------------------------------------------------------------------------------------------
Allocated/Total GPUs In Cluster:
0/3 (0.0%)
```

The output indicates that three GPU-accelerated nodes, each with one GPU, can be used to run training jobs.
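If you want to check GPU capacity from a script, you can parse the table that `arena top node` prints. The following Python sketch assumes the six-column layout of the expected output: NAME, IPADDRESS, ROLE, STATUS, GPU(Total), GPU(Allocated). It also assumes that the last two columns are integers. The layout may differ between Arena versions. The node names and IP addresses in the sample are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class NodeGPU:
    name: str
    status: str
    total: int
    allocated: int


def parse_top_node(output: str) -> list[NodeGPU]:
    """Parse node rows from `arena top node` output.

    Assumes each node row has six whitespace-separated columns:
    NAME IPADDRESS ROLE STATUS GPU(Total) GPU(Allocated).
    The header, separator, and summary lines are skipped.
    """
    nodes = []
    for line in output.splitlines():
        fields = line.split()
        if len(fields) != 6 or fields[0] == "NAME":
            continue
        name, _ip, _role, status, total, allocated = fields
        # The summary line also has six fields, but its GPU column is "x/y".
        if not (total.isdigit() and allocated.isdigit()):
            continue
        nodes.append(NodeGPU(name, status, int(total), int(allocated)))
    return nodes


def free_gpu_nodes(nodes: list[NodeGPU]) -> list[str]:
    """Names of Ready nodes that still have at least one unallocated GPU."""
    return [n.name for n in nodes if n.status == "Ready" and n.total > n.allocated]


# Hypothetical sample in the same layout as the expected output.
sample = """NAME IPADDRESS ROLE STATUS GPU(Total) GPU(Allocated)
node-a 10.0.0.1 <none> Ready 0 0
node-b 10.0.0.2 <none> Ready 1 0
node-c 10.0.0.3 <none> Ready 1 1
-----------------------------------
Allocated/Total GPUs In Cluster: 1/2 (50.0%)"""

nodes = parse_top_node(sample)
print(sum(n.total for n in nodes))  # 2
print(free_gpu_nodes(nodes))        # ['node-b']
```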

Submit a DeepSpeed job by running `arena submit deepspeedjob [--flag] command`, where command is the training command of the job. The following example uses the sample image to submit a DeepSpeed job that consists of one launcher and three workers:

```shell
arena submit deepspeedjob \
    --name=deepspeed-helloworld \
    --gpus=1 \
    --workers=3 \
    --image=registry.cn-beijing.aliyuncs.com/acs/deepspeed:hello-deepspeed \
    --data=training-data:/data \
    --tensorboard \
    --logdir=/data/deepspeed_data \
    "deepspeed /workspace/DeepSpeedExamples/HelloDeepSpeed/train_bert_ds.py --checkpoint_dir /data/deepspeed_data"
```

| Parameter | Required | Description | Default value |
| --- | --- | --- | --- |
| --name | Yes | The name of the job. The name must be globally unique. | None |
| --gpus | No | The number of GPUs used by each worker node. | 0 |
| --workers | No | The number of worker nodes. | 1 |
| --image | Yes | The address of the image used to deploy the runtime. | None |
| --data | No | Mounts a PVC to the runtime so that the training job can access the data stored in the PVC. The value consists of two parts separated by a colon (:). Before the colon, specify the name of the PVC. Run the `arena data list` command to view the PVCs available in the cluster. After the colon, specify the path in the runtime to which the PVC is mounted. The training job reads data from this path. If no PVC is available, create one. For more information, see Configure a shared NAS volume. | None |
| --tensorboard | No | Enables a TensorBoard service to visualize the training job. Specify this parameter together with --logdir, which sets the path from which TensorBoard reads event files. If you do not specify this parameter, TensorBoard is not used. | None |
| --logdir | No | The path from which TensorBoard reads event files. Specify this parameter together with --tensorboard. | /training_logs |
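If you submit jobs from a script, build the command as an argument list. This avoids shell-quoting problems with the training command, which must reach Arena as a single argument. The following Python sketch uses only the parameters in the table. It enforces two rules from the table: `--data` takes the form `<PVC name>:<mount path>`, and `--tensorboard` and `--logdir` are specified together. The helper name `build_submit_args` is hypothetical. The sketch also requires an absolute mount path, which is an assumption based on `/data` in the example. The helper only assembles the arguments and does not run Arena.

```python
from __future__ import annotations

import shlex


def build_submit_args(
    name: str,
    image: str,
    command: str,
    gpus: int = 0,
    workers: int = 1,
    data: list[str] | None = None,
    tensorboard: bool = False,
    logdir: str | None = None,
) -> list[str]:
    """Build an `arena submit deepspeedjob` argument list (hypothetical helper)."""
    # The table requires --tensorboard and --logdir to be specified together.
    if tensorboard != (logdir is not None):
        raise ValueError("--tensorboard and --logdir must be specified together")
    args = [
        "arena", "submit", "deepspeedjob",
        f"--name={name}",
        f"--gpus={gpus}",          # GPUs per worker node.
        f"--workers={workers}",
        f"--image={image}",
    ]
    for mapping in data or []:
        # Format: <PVC name>:<mount path>. Requiring "/" is an assumption of this sketch.
        pvc, sep, mount_path = mapping.partition(":")
        if not sep or not pvc or not mount_path.startswith("/"):
            raise ValueError(f"--data must be <PVC name>:<mount path>, got {mapping!r}")
        args.append(f"--data={mapping}")
    if tensorboard:
        args += ["--tensorboard", f"--logdir={logdir}"]
    # The training command is one argument, like the quoted string in the shell example.
    args.append(command)
    return args


args = build_submit_args(
    name="deepspeed-helloworld",
    image="registry.cn-beijing.aliyuncs.com/acs/deepspeed:hello-deepspeed",
    command=(
        "deepspeed /workspace/DeepSpeedExamples/HelloDeepSpeed/train_bert_ds.py "
        "--checkpoint_dir /data/deepspeed_data"
    ),
    gpus=1,
    workers=3,
    data=["training-data:/data"],
    tensorboard=True,
    logdir="/data/deepspeed_data",
)
print(shlex.join(args))  # Shell-quoted command line, for review before submitting.
# To submit the job, pass the list to subprocess.run(args, check=True).
```

Passing a list to `subprocess.run` bypasses the shell. As a result, the training command is delivered exactly as written.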

If your code is stored in a private Git repository, you can run the following command to submit a DeepSpeed job:

```shell
arena submit deepspeedjob \
    ... \
    --sync-mode=git \
    --sync-source=<Address of the private Git repository> \
    --env=GIT_SYNC_USERNAME=yourname \
    --env=GIT_SYNC_PASSWORD=yourpwd \
    "deepspeed /workspace/DeepSpeedExamples/HelloDeepSpeed/train_bert_ds.py --checkpoint_dir /data/deepspeed_data"
```

The --sync-mode parameter specifies the code synchronization mode, which can be git or rsync. The --sync-source parameter specifies the address of the repository and must be specified together with --sync-mode. If you set --sync-mode to git, you can set --sync-source to the address of any GitHub project.

In the preceding command, the Arena client synchronizes the source code in git-sync mode. You can use --env to set any environment variable defined by the git-sync project, as this example does for GIT_SYNC_USERNAME and GIT_SYNC_PASSWORD.
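If you assemble these options in a script, you can validate them before submission. The following Python sketch builds the code-synchronization flags and checks the two rules described above: the mode is git or rsync, and --sync-source is set whenever --sync-mode is set. The helper names and the repository address are hypothetical. `git_credentials_from_environment` assumes that you export GIT_SYNC_USERNAME and GIT_SYNC_PASSWORD in your local shell, so the password is not written into the script.

```python
from __future__ import annotations

import os

SYNC_MODES = {"git", "rsync"}  # Modes listed in the procedure.


def sync_args(sync_mode: str, sync_source: str, env: dict[str, str] | None = None) -> list[str]:
    """Build --sync-mode/--sync-source/--env flags (hypothetical helper)."""
    if sync_mode not in SYNC_MODES:
        raise ValueError(f"unsupported --sync-mode {sync_mode!r}; expected one of {sorted(SYNC_MODES)}")
    if not sync_source:
        raise ValueError("--sync-source is required when --sync-mode is set")
    args = [f"--sync-mode={sync_mode}", f"--sync-source={sync_source}"]
    for key, value in (env or {}).items():
        args.append(f"--env={key}={value}")
    return args


def git_credentials_from_environment() -> dict[str, str]:
    """Read git-sync credentials from the local environment instead of hardcoding them."""
    return {
        key: os.environ[key]
        for key in ("GIT_SYNC_USERNAME", "GIT_SYNC_PASSWORD")
        if key in os.environ
    }


flags = sync_args(
    "git",
    "https://example.com/your-org/your-repo.git",  # Hypothetical repository address.
    env={"GIT_SYNC_USERNAME": "yourname", "GIT_SYNC_PASSWORD": "yourpwd"},  # Placeholders.
)
print(flags)
```

In practice, pass `env=git_credentials_from_environment()` and insert the returned flags before the training command. The credentials still reach the job as environment variables, so treat the job specification as sensitive.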

Expected output:

```
trainingjob.kai.alibabacloud.com/deepspeed-helloworld created
INFO[0007] The Job deepspeed-helloworld has been submitted successfully
INFO[0007] You can run `arena get deepspeed-helloworld --type deepspeedjob` to check the job status
```

Run the following command to query all training jobs submitted by using Arena:

```shell
arena list
```

Expected output:

```
NAME                  STATUS   TRAINER       DURATION  GPU(Requested)  GPU(Allocated)  NODE
deepspeed-helloworld  RUNNING  DEEPSPEEDJOB  3m        3               3               192.168.9.69
```

Run the following command to query the GPU resources that are used by the jobs:

```shell
arena top job
```

Expected output:

```
NAME                  STATUS   TRAINER       AGE  GPU(Requested)  GPU(Allocated)  NODE
deepspeed-helloworld  RUNNING  DEEPSPEEDJOB  4m   3               3               192.168.9.69

Total Allocated/Requested GPUs of Training Jobs: 3/3
```

Run the following command to query the GPU resources that are used in the cluster:

```shell
arena top node
```

Expected output:

```
NAME                     IPADDRESS     ROLE    STATUS  GPU(Total)  GPU(Allocated)
cn-beijing.192.1xx.x.xx  192.1xx.x.xx  <none>  Ready   0           0
cn-beijing.192.1xx.x.xx  192.1xx.x.xx  <none>  Ready   0           0
cn-beijing.192.1xx.x.xx  192.1xx.x.xx  <none>  Ready   1           1
cn-beijing.192.1xx.x.xx  192.1xx.x.xx  <none>  Ready   1           1
cn-beijing.192.1xx.x.xx  192.1xx.x.xx  <none>  Ready   1           1
---------------------------------------------------------------------------------------------------
Allocated/Total GPUs In Cluster:
3/3 (100%)
```

Run the following command to query the details of the job and the address of the TensorBoard web service:

```shell
arena get deepspeed-helloworld
```

Expected output:

```
Name:        deepspeed-helloworld
Status:      RUNNING
Namespace:   default
Priority:    N/A
Trainer:     DEEPSPEEDJOB
Duration:    6m

Instances:
  NAME                           STATUS   AGE  IS_CHIEF  GPU(Requested)  NODE
  ----                           ------   ---  --------  --------------  ----
  deepspeed-helloworld-launcher  Running  6m   true      0               cn-beijing.192.1xx.x.x
  deepspeed-helloworld-worker-0  Running  6m   false     1               cn-beijing.192.1xx.x.x
  deepspeed-helloworld-worker-1  Running  6m   false     1               cn-beijing.192.1xx.x.x
  deepspeed-helloworld-worker-2  Running  6m   false     1               cn-beijing.192.1xx.x.x

Your tensorboard will be available on:
http://192.1xx.x.xx:31870
```

Because TensorBoard is enabled in this example, the last two rows of the job information show the TensorBoard URL. If TensorBoard is not used, these rows are not returned.
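To use these details in a script, you can extract the instance statuses and the TensorBoard URL from the `arena get` output. The following Python sketch makes three assumptions based on the example output above. Instance rows start with the job name followed by a hyphen. The status is the second column. The URL follows a line that starts with `Your tensorboard will be available on:`, either on the same line or on the next one. The sample IP address is hypothetical.

```python
from __future__ import annotations

import re

TENSORBOARD_PREFIX = "Your tensorboard will be available on:"


def tensorboard_url(output: str) -> str | None:
    """Return the TensorBoard URL, or None if the job does not use TensorBoard."""
    lines = [line.strip() for line in output.splitlines()]
    for i, line in enumerate(lines):
        if line.startswith(TENSORBOARD_PREFIX):
            rest = line[len(TENSORBOARD_PREFIX):].strip()
            if rest:
                return rest
            # The URL may be printed on the following non-empty line.
            for following in lines[i + 1:]:
                if following:
                    return following
    return None


def instance_statuses(output: str, job_name: str) -> dict[str, str]:
    """Map each instance (pod) name to its STATUS column."""
    pattern = re.compile(rf"^\s*({re.escape(job_name)}-\S+)\s+(\S+)")
    statuses = {}
    for line in output.splitlines():
        match = pattern.match(line)
        if match:
            statuses[match.group(1)] = match.group(2)
    return statuses


def all_running(statuses: dict[str, str]) -> bool:
    """True if at least one instance exists and every instance is Running."""
    return bool(statuses) and all(s == "Running" for s in statuses.values())


# Shortened sample in the layout of the expected output (hypothetical address).
sample = """Name:        deepspeed-helloworld
Status:      RUNNING
Instances:
  NAME                           STATUS   AGE  IS_CHIEF  GPU(Requested)  NODE
  ----                           ------   ---  --------  --------------  ----
  deepspeed-helloworld-launcher  Running  6m   true      0               node-a
  deepspeed-helloworld-worker-0  Running  6m   false     1               node-b

Your tensorboard will be available on:
http://192.0.2.10:31870"""

statuses = instance_statuses(sample, "deepspeed-helloworld")
print(statuses)
print(all_running(statuses), tensorboard_url(sample))
```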

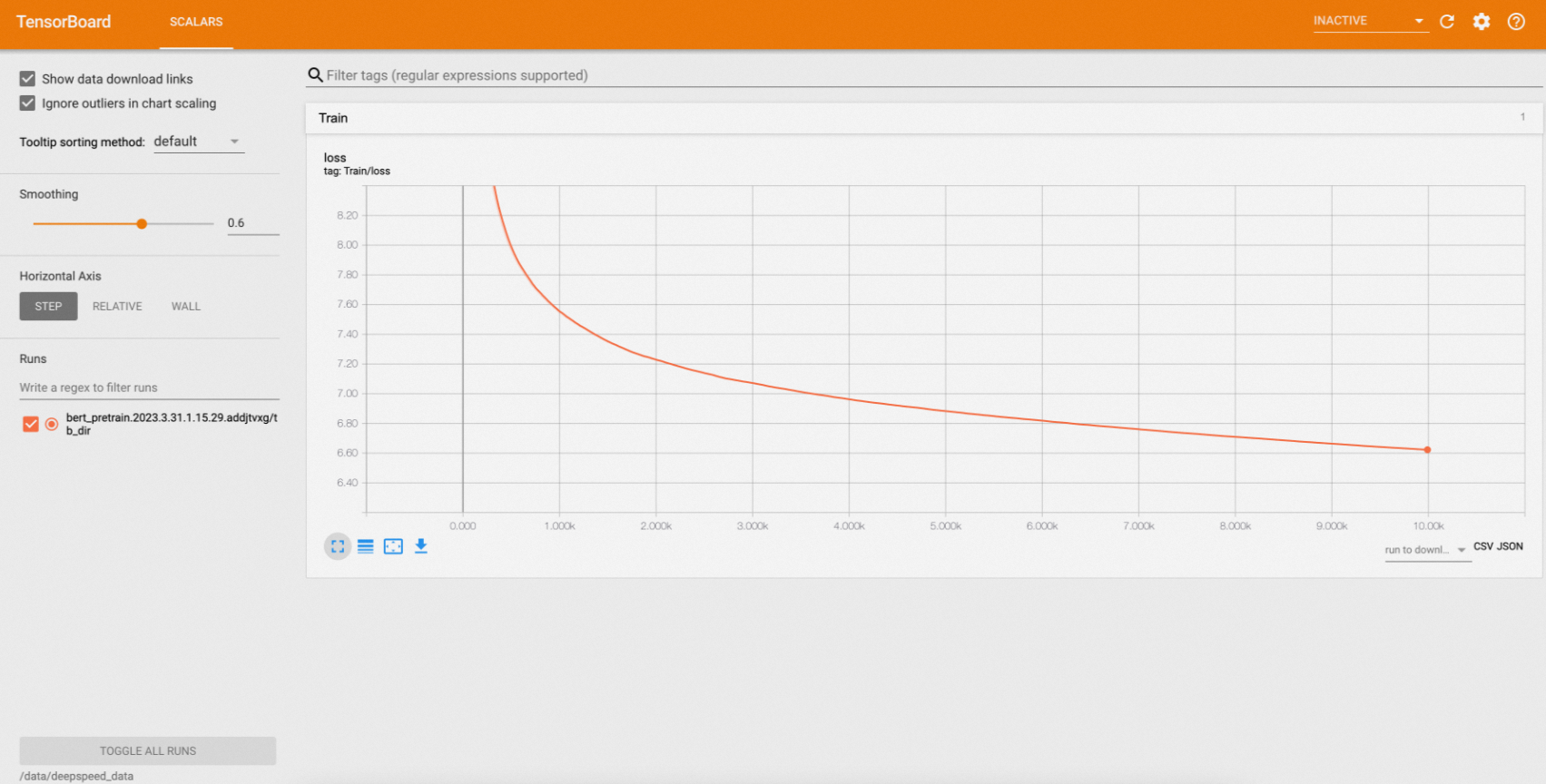

Use a browser to view the training results in TensorBoard.

Run the following command to map TensorBoard to the local port 9090:

kubectl port-forward svc/deepspeed-helloworld-tensorboard 9090:6006Enter

localhost:9090into the address bar of the web browser to access TensorBoard. The following figure shows an example.
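If you open TensorBoard from a script, you can wrap the same port-forward command. The following Python sketch makes two assumptions based on this example. The TensorBoard Service is named `<job name>-tensorboard` and listens on port 6006. The job runs in the `default` namespace, as reported by `arena get`. It runs `kubectl port-forward` as a background process and opens the local address in the default browser.

```python
from __future__ import annotations

import subprocess
import time
import webbrowser


def port_forward_args(job_name: str, local_port: int = 9090, service_port: int = 6006,
                      namespace: str = "default") -> list[str]:
    """Build the kubectl command that forwards a local port to the TensorBoard Service.

    Assumes the Service is named "<job name>-tensorboard", as in this example.
    """
    return [
        "kubectl", "port-forward",
        "-n", namespace,
        f"svc/{job_name}-tensorboard",
        f"{local_port}:{service_port}",
    ]


def open_tensorboard(job_name: str, local_port: int = 9090) -> subprocess.Popen:
    """Start the port forward in the background and open TensorBoard in a browser."""
    proc = subprocess.Popen(port_forward_args(job_name, local_port))
    time.sleep(2)  # Crude wait for kubectl to establish the tunnel.
    webbrowser.open(f"http://localhost:{local_port}")
    return proc  # Call proc.terminate() to stop forwarding.


print(" ".join(port_forward_args("deepspeed-helloworld")))
```

The fixed two-second wait is a simplification. A more robust script would retry a connection to the local port before it opens the browser.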

Print the log of a job.

Run the following command to print the log of the job:

```shell
arena logs deepspeed-helloworld
```

Expected output:

```
deepspeed-helloworld-worker-0: [2023-03-31 08:38:11,201] [INFO] [logging.py:68:log_dist] [Rank 0] step=7050, skipped=24, lr=[0.0001], mom=[(0.9, 0.999)]
deepspeed-helloworld-worker-0: [2023-03-31 08:38:11,254] [INFO] [timer.py:198:stop] 0/7050, RunningAvgSamplesPerSec=142.69733028759384, CurrSamplesPerSec=136.08094834473613, MemAllocated=0.06GB, MaxMemAllocated=1.68GB
deepspeed-helloworld-worker-0: 2023-03-31 08:38:11.255 | INFO | __main__:log_dist:53 - [Rank 0] Loss: 6.7574
deepspeed-helloworld-worker-0: [2023-03-31 08:38:13,103] [INFO] [logging.py:68:log_dist] [Rank 0] step=7060, skipped=24, lr=[0.0001], mom=[(0.9, 0.999)]
deepspeed-helloworld-worker-0: [2023-03-31 08:38:13,134] [INFO] [timer.py:198:stop] 0/7060, RunningAvgSamplesPerSec=142.69095076844823, CurrSamplesPerSec=151.8552037291255, MemAllocated=0.06GB, MaxMemAllocated=1.68GB
deepspeed-helloworld-worker-0: 2023-03-31 08:38:13.136 | INFO | __main__:log_dist:53 - [Rank 0] Loss: 6.7570
deepspeed-helloworld-worker-0: [2023-03-31 08:38:14,924] [INFO] [logging.py:68:log_dist] [Rank 0] step=7070, skipped=24, lr=[0.0001], mom=[(0.9, 0.999)]
deepspeed-helloworld-worker-0: [2023-03-31 08:38:14,962] [INFO] [timer.py:198:stop] 0/7070, RunningAvgSamplesPerSec=142.69048436022115, CurrSamplesPerSec=152.91029839772997, MemAllocated=0.06GB, MaxMemAllocated=1.68GB
deepspeed-helloworld-worker-0: 2023-03-31 08:38:14.963 | INFO | __main__:log_dist:53 - [Rank 0] Loss: 6.7565
```

Run `arena logs $job_name -f` to stream the job log in real time, or `arena logs $job_name -t N` to print the last N lines of the log. To view all log options, run `arena logs --help`.

For example, run the following command to print the last five lines of the log:

```shell
arena logs deepspeed-helloworld -t 5
```

Expected output:

```
deepspeed-helloworld-worker-0: [2023-03-31 08:47:08,694] [INFO] [launch.py:318:main] Process 80 exits successfully.
deepspeed-helloworld-worker-2: [2023-03-31 08:47:08,731] [INFO] [launch.py:318:main] Process 44 exits successfully.
deepspeed-helloworld-worker-1: [2023-03-31 08:47:08,946] [INFO] [launch.py:318:main] Process 44 exits successfully.
/opt/conda/lib/python3.8/site-packages/apex/pyprof/__init__.py:5: FutureWarning: pyprof will be removed by the end of June, 2022
  warnings.warn("pyprof will be removed by the end of June, 2022", FutureWarning)
```
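To track progress outside TensorBoard, you can extract step and loss values from the job log. The following Python sketch assumes the line formats in the example output. DeepSpeed prints `[Rank 0] step=<N>,` lines. The sample script prints `[Rank 0] Loss: <value>` lines. Each line is prefixed with the pod name. Each loss value is paired with the most recent step number before it. The sketch also collects the pods that print `Process <pid> exits successfully`. These formats come from this example and may change with other DeepSpeed versions or training scripts.

```python
from __future__ import annotations

import re

# Line formats observed in the example output (assumptions for other versions).
STEP_RE = re.compile(r"^(?P<pod>\S+): .*\[Rank 0\] step=(?P<step>\d+),")
LOSS_RE = re.compile(r"^(?P<pod>\S+): .*\[Rank 0\] Loss: (?P<loss>[0-9.]+)")
EXIT_RE = re.compile(r"^(?P<pod>\S+): .*Process \d+ exits successfully")


def loss_by_step(log: str) -> list[tuple[int, float]]:
    """Pair each Loss line with the most recent step number seen before it."""
    pairs: list[tuple[int, float]] = []
    current_step: int | None = None
    for line in log.splitlines():
        step_match = STEP_RE.match(line)
        if step_match:
            current_step = int(step_match.group("step"))
            continue
        loss_match = LOSS_RE.match(line)
        if loss_match and current_step is not None:
            pairs.append((current_step, float(loss_match.group("loss"))))
    return pairs


def finished_pods(log: str) -> set[str]:
    """Pods whose DeepSpeed launcher reported a successful process exit."""
    return {m.group("pod") for m in map(EXIT_RE.match, log.splitlines()) if m}


# Lines copied from the example output above.
sample = """\
deepspeed-helloworld-worker-0: [2023-03-31 08:38:11,201] [INFO] [logging.py:68:log_dist] [Rank 0] step=7050, skipped=24, lr=[0.0001], mom=[(0.9, 0.999)]
deepspeed-helloworld-worker-0: 2023-03-31 08:38:11.255 | INFO | __main__:log_dist:53 - [Rank 0] Loss: 6.7574
deepspeed-helloworld-worker-0: [2023-03-31 08:38:13,103] [INFO] [logging.py:68:log_dist] [Rank 0] step=7060, skipped=24, lr=[0.0001], mom=[(0.9, 0.999)]
deepspeed-helloworld-worker-0: 2023-03-31 08:38:13.136 | INFO | __main__:log_dist:53 - [Rank 0] Loss: 6.7570
deepspeed-helloworld-worker-1: [2023-03-31 08:47:08,946] [INFO] [launch.py:318:main] Process 44 exits successfully.
"""

print(loss_by_step(sample))   # [(7050, 6.7574), (7060, 6.757)]
print(finished_pods(sample))  # {'deepspeed-helloworld-worker-1'}
```

You could feed the output of `arena logs deepspeed-helloworld` into `loss_by_step` for a quick convergence check. When `finished_pods` covers every worker, all worker processes have exited successfully.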