Data Quality allows you to configure monitoring rules for a single table to monitor whether the quality of table data meets specific conditions. If data quality issues are detected, tasks on which the issues occur are blocked to prevent dirty data from spreading downstream. This ensures that the generated table data meets your business requirements. This topic describes how to configure monitoring rules for a single table, run a monitor, and perform other related operations.

Prerequisites

The metadata of a compute engine is collected. Monitoring rules are configured based on the data tables of a compute engine and are used to monitor the data quality of the tables. Therefore, before you create and configure monitoring rules, you must collect the metadata of the desired compute engine first. For more information, see Metadata collection.

Configuration process

You need to perform the following steps to configure monitoring rules for a table:

Select the table whose data quality you want to check based on monitoring rules.

Create a quality monitor for the table.

When you create and configure a monitor for the table, you can specify the range of table data whose quality you want to monitor, such as a partition, and select the monitoring rules that you want to associate with the monitor. This way, the monitor can be used to monitor the quality of the specified table data based on the rules.

You can create different monitors for different partitions of the same table and associate different monitoring rules with the monitors. This way, the partitions can be monitored based on different data quality check logic.

You can specify a trigger method for the monitor. The monitor can be triggered when the scheduling node that generates the table is run or when you manually run the monitor.

You can specify the conditions under which alerts are sent. You can determine whether to send alerts based on the severity of data quality check results. You can determine whether to block the task that generates the monitored table based on the severity of data quality issues when data changes in the table are automatically checked.

Configure data quality rules to add to quality monitoring.

You can select the monitoring rules based on which you want to monitor the data quality of the table. The specific check methods that are defined in the rules are implemented to determine whether the table data meets your business requirements.

Data Quality allows you to create monitoring rules based on built-in rule templates or create custom template rules based on custom SQL statement logic.

Built-in template rules: You can create monitoring rules based on built-in rule templates provided by DataWorks.

Custom template rules: You can create custom template rules if the built-in rule templates cannot meet your requirements for monitoring the quality of data specified by partition filter expressions. You can save frequently used custom rules as rule templates for future use.

Test and subscribe to the monitor.

Limits

You can configure monitoring rules only for specific types of data sources, including MaxCompute, E-MapReduce (EMR), Hologres, CDH Hive, AnalyticDB for PostgreSQL, AnalyticDB for MySQL, StarRocks, and MySQL. After you configure a monitoring rule for a data source, the rule can be triggered to check the data quality of tables in the data source only if the scheduling node that generates the table data is run on a resource group that is connected to the data source.

To perform data quality checks by using a dynamic threshold rule, you must make sure that sampling records of 21 days are available. If sampling records are collected for less than 21 days, exceptions may occur when you use a dynamic threshold rule. If sampling records of 21 days are not available when you configure a dynamic threshold rule, you can use the data backfill feature to supplement the required data after you associate the rule with scheduling nodes.

Procedure

Step 1: Go to the Table Quality Details page

Go to the Data Quality page.

Log on to the DataWorks console. In the top navigation bar, select the desired region. In the left-side navigation pane, choose . On the page that appears, select the desired workspace from the drop-down list and click Go to Data Quality.

Go to the Configure by Table page.

In the left-side navigation pane, choose .

In the Connection section, select the database where the table for which you want to configure rules resides.

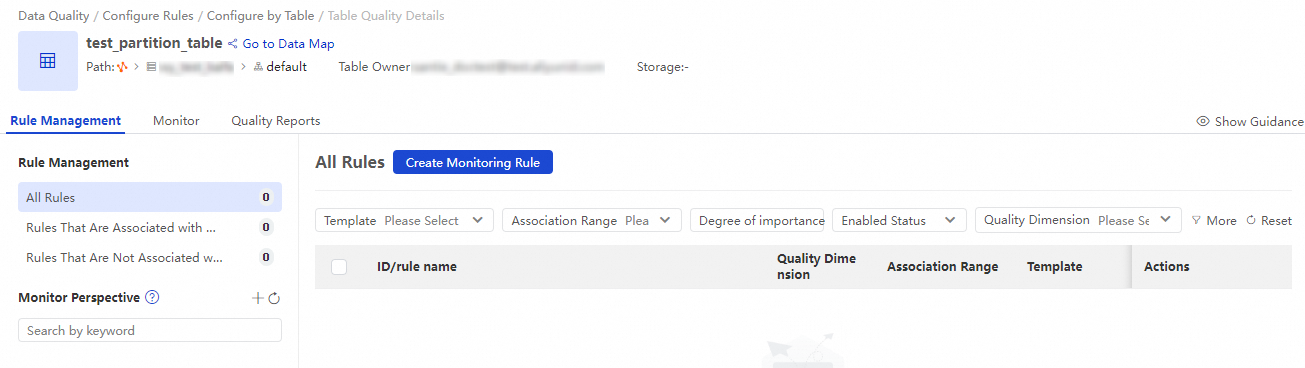

Search for the desired table based on conditions, such as the database type, database, and table name. Then, click the name of the table or click Rule Management in the Actions column of the table to go to the Table Quality Details page.

This page displays all the monitors and rules configured for the table. You can quickly filter rules based on whether the rules are associated with a monitor. For rules that are not associated with a monitor, you can specify the running mode for the rules.

Step 2: Create a monitor

Create a monitor.

You can use one of the following methods to create a monitor:

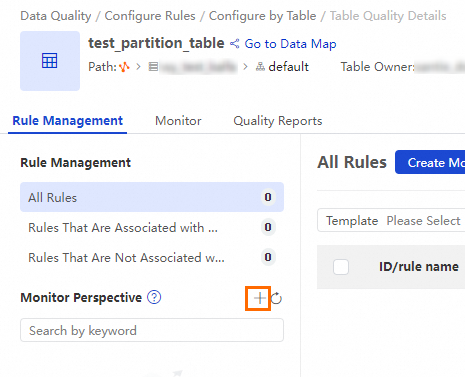

Rule Management tab

On the Table Quality Details page, click the Rule Management tab. Click the icon next to Monitor Perspective

.

.

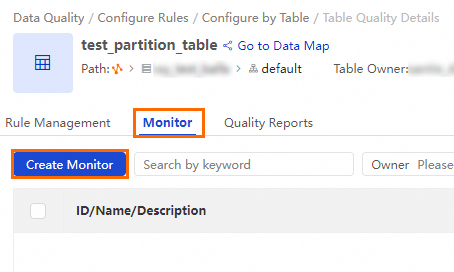

Monitor tab

On the Table Quality Details page, click the Monitor tab. Click Create Monitor.

Configure the parameters for quality monitoring.

Configuration item

Parameter

Description

Basic Configurations

Monitor Name

The name of the monitor.

Quality Monitoring Owner

You can specify the owner of the monitor as needed. When you configure alert subscriptions, you can specify the monitor owner as the alert recipient by using Email, Email and SMS, or Telephone.

Monitored Object

The object for which you want to check the data quality. The default value is the current table.

Data Range

The range of table data whose quality you want to monitor. You can use a partition filter expression to define the partition that needs to be checked.

For a non-partitioned table, you do not need to configure this parameter. All data in the table is checked by default.

For a partitioned table, you must set this parameter to a value in the

Partition key=Partition valueformat. The partition value can be a constant or a built-in partition filter expression.

NoteIf you configure a monitoring rule based on a custom template or a custom SQL statement, this parameter does not take effect. Instead, the partition checked by the rule is determined by the custom SQL statement that is specified in the rule.

Monitoring Rule

Monitoring Rule

The monitoring rules that you want to associate with the monitor. The quality of data in the specified range is monitored based on the rules.

NoteYou can create different monitors for different partitions of the same table and associate different monitoring rules with the monitors. This way, the partitions can be monitored based on different data quality check logic.

If you have not created monitoring rules, you can skip the configuration of this parameter and complete the creation of the monitor first. When you create and configure a monitoring rule, you can add the monitoring rule to a monitor. For information about how to create and configure a monitoring rule, see Step 3: Configure a monitoring rule.

Running Settings

Trigger Method

The trigger method for the monitor.

Triggered by Node Scheduling in Production Environment: After the scheduling node that you associate with the monitor finishes running in Operation Center, the rules that are associated with the monitor are automatically triggered. Note that dry-run nodes do not trigger monitoring rules to run.

Triggered Manually: The monitoring rules that are associated with the monitor are manually triggered.

ImportantIf the table whose data quality you want to check is a non-MaxCompute table and Triggered By Node Scheduling In Production Environment is selected for Trigger Method, you cannot associate scheduling nodes that are run on the shared resource group for scheduling with the monitor. Otherwise, an error may be reported when the monitor is run.

Associated Scheduling Node

If you set the Trigger Method parameter to Triggered By Node Scheduling In Production Environment, you can configure this parameter to select the scheduling nodes that you want to associate with the monitor. After the scheduling nodes finish running, the rules that are associated with the monitor are automatically triggered.

Running Resources

The resources that are required to run the rules. By default, the data source to which the monitored table in the current workspace belongs is selected. If you select another data source, make sure that the related resources can access the monitored table.

Handling Policies

Quality Issue Handling Policies

The blocking or alerting policy that is used to process detected data quality issues.

Blocks: If a data quality issue is detected in the table, the scheduling node in the production environment that generates the table is identified, and the system sets the running status of the node to Failed. In this case, the descendant nodes of the node cannot be run, which blocks the production link to prevent the spread of dirty data.

Default value:

Strong rules · Red anomaly.Alert: If a data quality issue is detected in the table, the system sends alert notifications to the alert recipient by using the configured notification method.

Default values:

Strong rules · Red anomaly,Strong rules · Orange exception,Strong rules · Check Failed,Weak rules · Red anomaly,Weak rules · Orange exception, andWeak rules · Check Failed.

Alert Method Configuration

You can send alert notifications by using Email, Email and SMS, DingTalk Chatbot, DingTalk Chatbot @ALL, Lark Group Chatbot, Enterprise Wecha Robot, Custom WebHook, or Telephone.

NoteYou can add a DingTalk chatbot, Lark chatbot, or WeChat chatbot and obtain a webhook URL. Then, copy the webhook URL to the Recipient field in the Manage Subscriptions dialog box.

The Custom Webhook notification method is supported only in DataWorks Enterprise Edition. For information about the message format of an alert notification sent by using a Custom Webhook, see Appendix: Message format of alert notifications sent by using a custom webhook URL.

When you select Email, Email and SMS, or Telephone as the notification method, you can specify Recipient as Monitor Owner, Shift Schedule, or Node Owner.

Data Quality Monitoring Owner: Alert information will be sent to the Quality Monitoring Owner set in the Basic Configurations section of the current quality monitor.

Shift Schedule: When the monitoring rule associated with the monitor is triggered and an alert is generated, the system sends alert notifications to the person on duty for the current day in the shift schedule.

Scheduling Task Owner: Alert notifications are sent to the owner of the scheduling node associated with the monitor.

Click Save to complete the creation of the monitor.

Step 3: Configure a monitoring rule

You can configure a monitoring rule based on the built-in table-level or field-level rule templates. For more information about the built-in rule templates, see View built-in rule templates.

On the Table Quality Details page, click the Rule Management tab, select the created monitor, and then click Create Rule. The Create Rule panel appears.

Create a monitoring rule.

You can use one of the following methods provided by Data Quality to configure monitoring rules based on your business requirements.

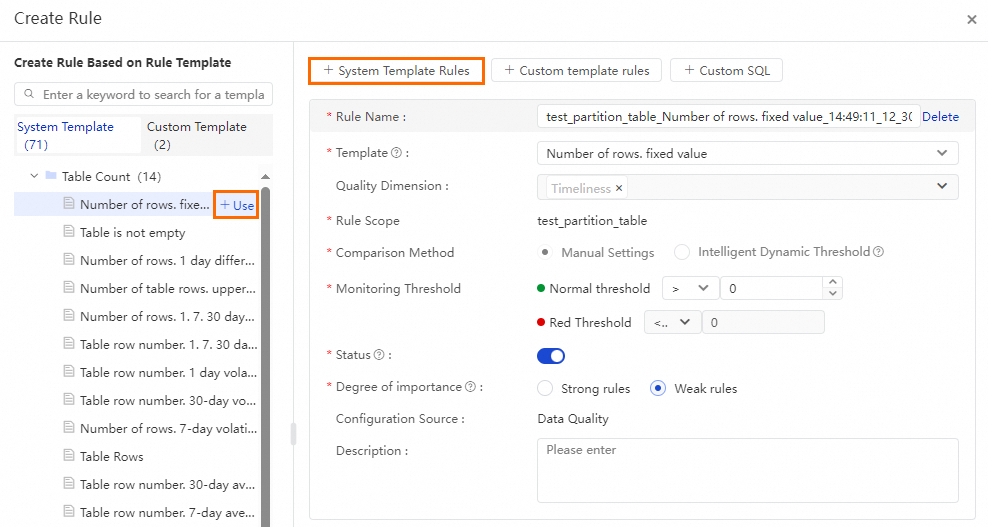

Method 1: Configure a rule based on a built-in rule template

Data Quality provides dozens of built-in rule templates. You can quickly create a monitoring rule based on a built-in rule template.

NoteIn the upper part of the Create Rule panel, you can click + System Template Rules. Then, you can configure the Template parameter to select the desired rule template.

Alternatively, you can find the desired built-in rule template in the left-side navigation pane of the Create Rule panel and click + Use.

You can add multiple rules at the same time.

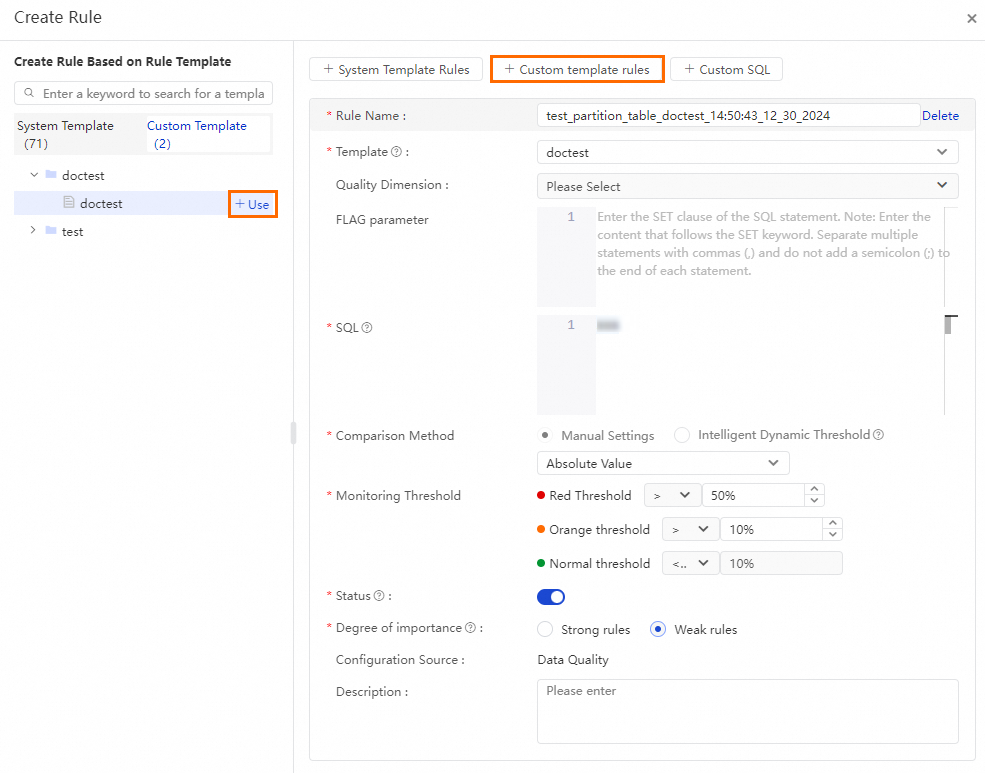

Method 2: Configure a rule based on a custom rule template

NoteBefore you use this method to create a rule, you must go to to create a custom rule template. For more information, see Create and manage custom rule templates.

When you reference a custom rule template, the basic configurations of the template, such as FLAG parameter and SQL, are automatically displayed. You can configure the Rule Name parameter based on your business requirements, and the Monitoring Threshold parameter based on the rule type. For example, you must define a normal threshold and a critical threshold for a numeric rule, and you must define a warning threshold in addition to a normal threshold and a critical threshold for a fluctuation-type rule.

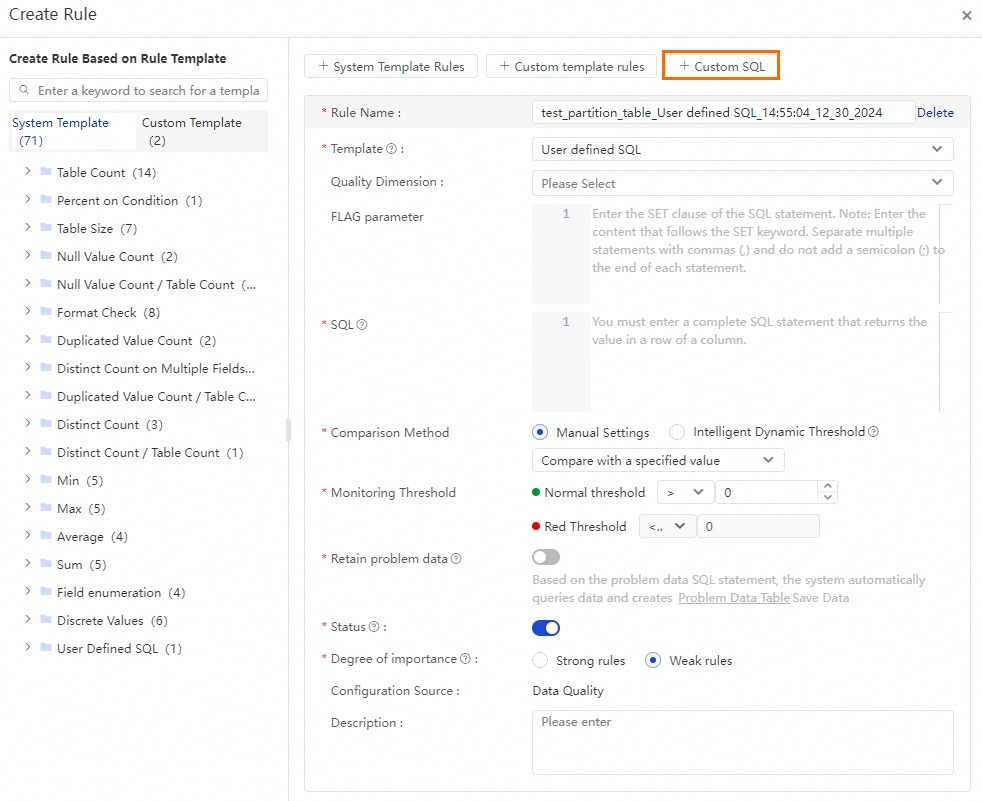

Method 3: Configure a rule based on a custom SQL statement

This method allows you to configure the data quality check logic for the table based on your business requirements.

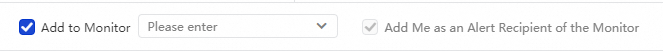

Optional. Add the configured rule to a monitor. For more information about a monitor, see Step 2: Create a monitor.

NoteThe configured monitoring rule can be triggered only if you add the rule to a monitor. To associate a rule with a monitor, you can select an existing monitor here, or select the rule in the Monitoring Rule section when you configure a monitor.

Click Determine.

Step 4: Test the rule

You can use one of the following methods to test how the rule that you associate with the monitor is triggered:

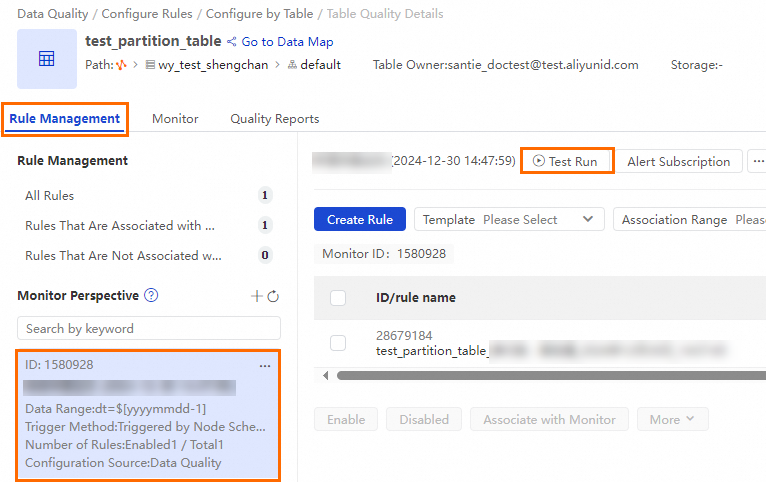

Perform a test run on the Rule Management tab

In the Rule Management tab, find the created monitor in the Monitor Perspective section, and then click Test Run.

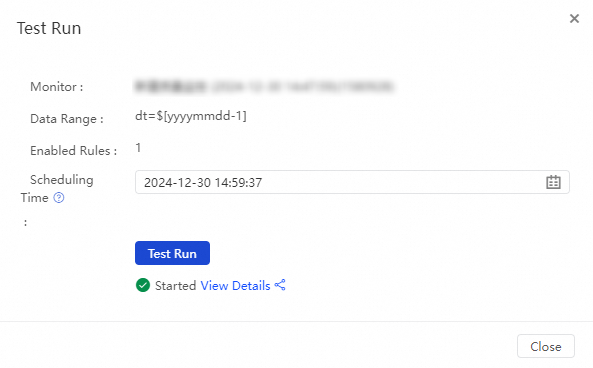

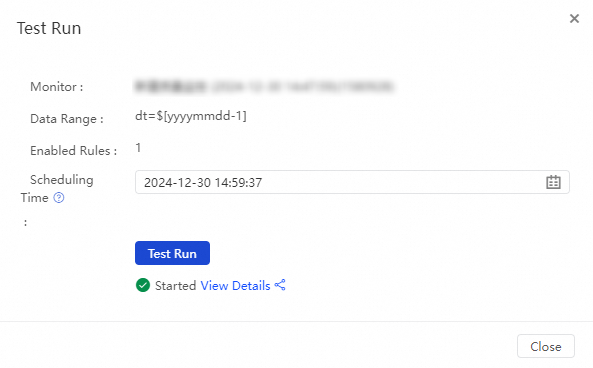

In the Test Run dialog box, check the configurations of parameters, such as Data Range and Scheduling Time, and click Test Run. If Started is displayed, you can click View Details to view results of the test run.

Perform a test run on the Monitor tab

On the Quality monitoring tab, find the created monitor and click Test in the Actions column.

In the Test Run dialog box, check the configurations of parameters, such as Data Range and Scheduling Time, and click Test Run. If Started is displayed, you can click View Details to view results of the test run.

Step 5: Modify alert subscriptions for the monitor

When you create a monitor in Step 2, you have already configured alert subscriptions. When the rule is triggered, the system sends notifications to the specified alert recipients. If you want to modify alert subscriptions to notify other users, you can use one of the following methods:

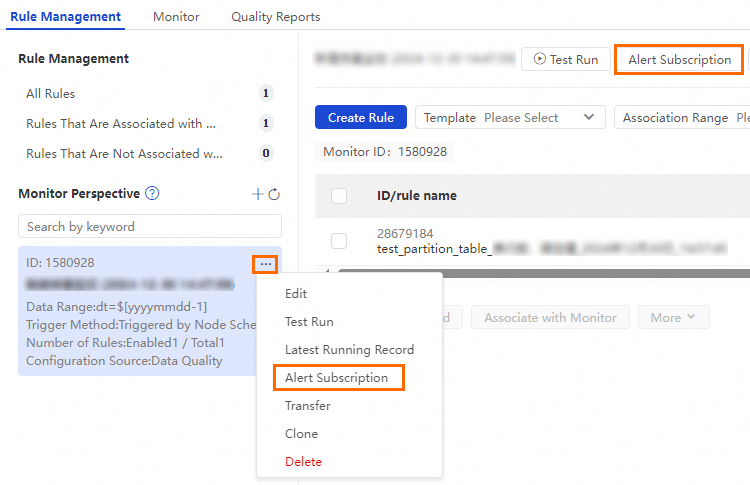

Subscribe to the monitor on the Rule Management tab

In the Rule Management tab, find the created monitor in the Monitor Perspective section and perform the steps shown in the following figure to open the alert subscription page.

In the Alert Subscription dialog box, add a Notification Method and a Recipient, and then click Save in the Actions column. After you save the configurations, you can configure another subscription with a different notification method and alert recipient.

Data Quality supports the following notification methods: Email, Email and SMS, DingTalk Chatbot, DingTalk Chatbot @ALL, Lark Group Chatbot, Enterprise Wechat Robot, Custom Webhook, and Telephone.

NoteYou can add a DingTalk chatbot, Lark chatbot, or WeChat chatbot and obtain a webhook URL. Then, copy the webhook URL to the Recipient field in the Manage Subscriptions dialog box.

The Custom Webhook notification method is supported only in DataWorks Enterprise Edition. For information about the message format of an alert notification sent by using a Custom Webhook, see Appendix: Message format of alert notifications sent by using a custom webhook URL.

When you select Email, Email and SMS, or Telephone as the notification method, you can specify Recipient as Monitor Owner, Shift Schedule, or Node Owner.

Data Quality Monitoring Owner: Alert information will be sent to the Quality Monitoring Owner set in the Basic Configurations section of the current quality monitor.

Shift Schedule: When the monitoring rule associated with the monitor is triggered and an alert is generated, the system sends alert notifications to the person on duty for the current day in the shift schedule.

Scheduling Task Owner: Alert notifications are sent to the owner of the scheduling node associated with the monitor.

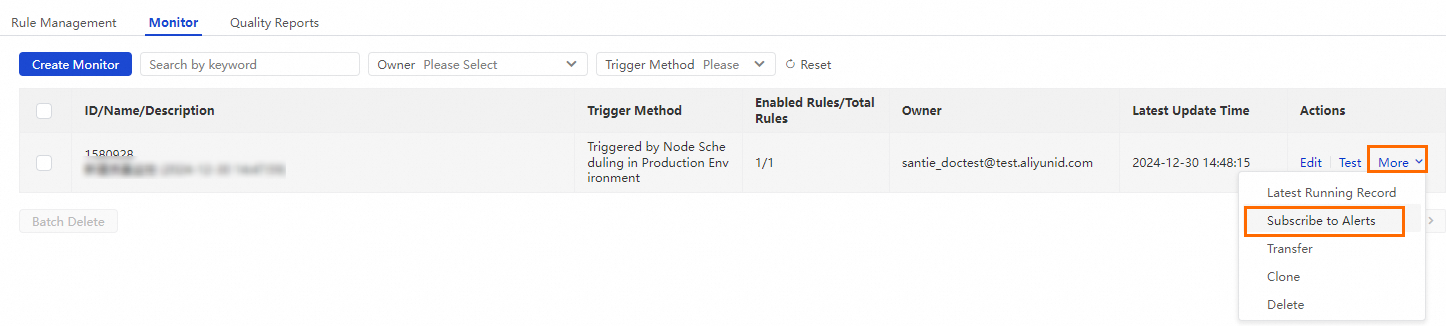

Subscribe to the monitor on the Monitor tab

On the Monitor tab, find the created monitor and choose in the Actions column.

In the Alert Subscription dialog box, add a Notification Method and a Recipient, and then click Save in the Actions column. After you save the configurations, you can configure another subscription with a different notification method and alert recipient.

Data Quality supports the following notification methods: Email, Email and SMS, DingTalk Chatbot, DingTalk Chatbot @ALL, Lark Group Chatbot, Enterprise Wechat Robot, Custom Webhook, and Telephone.

NoteYou can add a DingTalk chatbot, Lark chatbot, or WeChat chatbot and obtain a webhook URL. Then, copy the webhook URL to the Recipient field in the Manage Subscriptions dialog box.

The Custom Webhook notification method is supported only in DataWorks Enterprise Edition. For information about the message format of an alert notification sent by using a Custom Webhook, see Appendix: Message format of alert notifications sent by using a custom webhook URL.

When you select Email, Email and SMS, or Telephone as the notification method, you can specify Recipient as Monitor Owner, Shift Schedule, or Node Owner.

Data Quality Monitoring Owner: Alert information will be sent to the Quality Monitoring Owner set in the Basic Configurations section of the current quality monitor.

Shift Schedule: When the monitoring rule associated with the monitor is triggered and an alert is generated, the system sends alert notifications to the person on duty for the current day in the shift schedule.

Scheduling Task Owner: Alert notifications are sent to the owner of the scheduling node associated with the monitor.

Next steps

After the monitor is run, you can choose Quality O&M in the left-side navigation pane and click Monitor and Running Records to view the quality check status of the specified table and the complete quality rule check records.

Appendixes

Appendix 1: Calculation formulas of the fluctuation rate and variance

Calculation formula of the fluctuation rate:

Fluctuation rate = (Sample value - Baseline value)/Baseline valueSample value: The sample value for the current day. For example, if you want to check the fluctuation in the number of table rows on an SQL task within a day, the sample value is the number of table rows in partitions on that day.

Baseline value: The comparison value collected from the previous N days.

NoteIf you want to check the

fluctuation in the number of table rows on an SQL task within a day, the baseline value is the number of table rows in partitions on the previous day.If you want to check the

average fluctuation in the number of table rows on an SQL task within seven days, the baseline value is the average number of table rows in the last seven days.

Calculation formula of the variance:

(Current sample value - Average value of previous N days)/Standard deviationNoteYou can calculate the fluctuation variance only for numeric fields such as BIGINT and DOUBLE fields by using the preceding formula.

Appendix 2: Built-in partition filter expressions

$[yyyymmdd-1]

The partitions are daily partitions. The partition data matching the data timestamp needs to be checked.

$[yyyymmdd-1]/hour=$[hh24]

The partitions are level-2 partitions. All the level-2 partitions on the scheduling day need to be checked.

$[yyyymmdd]

The partitions are daily partitions. The partition data on the scheduling day needs to be checked.

$[yyyymmddhh24miss]

Partitions contain the 24-hour time format information, such as 20211216174847. The partition data at a specific point in time on the day that is specified by bizdate needs to be checked.

$[yyyymmddhh24miss-1/24]

Partitions contain the 24-hour time format information, such as 20211216174847. The partition data at 1 hour earlier than a specific point in time on the day that is specified by bizdate needs to be checked.

$[yyyymmdd]000000

The partitions are partitions at 00:00 each day, such as 20211216000000. The partition data at 00:00 on the day that is specified by bizdate needs to be checked.

$[yyyymmdd-7]

The partitions are daily partitions, such as 20211216. The partitions that are seven days earlier than the scheduling day need to be checked.

$[hh24miss-1/24]

The partitions are hourly partitions, such as 180000. The partitions that are 1 hour earlier than the scheduling time need to be checked.

$[hh24miss-30/24/60]

The partitions are hourly partitions, such as 180000. The partitions that are 30 minutes earlier than the scheduling time need to be checked.

$[add_months(yyyymmdd,-1)]

The partitions are daily partitions, such as 20211208. The partitions that are one month earlier than the scheduling day need to be checked.