Errors related to permissions

Error message:

com.aliyun.datahub.exception.NoPermissionException: No permission, authentication failed in ram

The error message indicates that the RAM user does not have the required permissions. For more information about how to grant permissions to a RAM user, see Access control.

Errors related to an ApsaraDB RDS instance in a VPC

Error message:

InvalidInstanceId.NotFound:The instance not in current vpc

Solution:

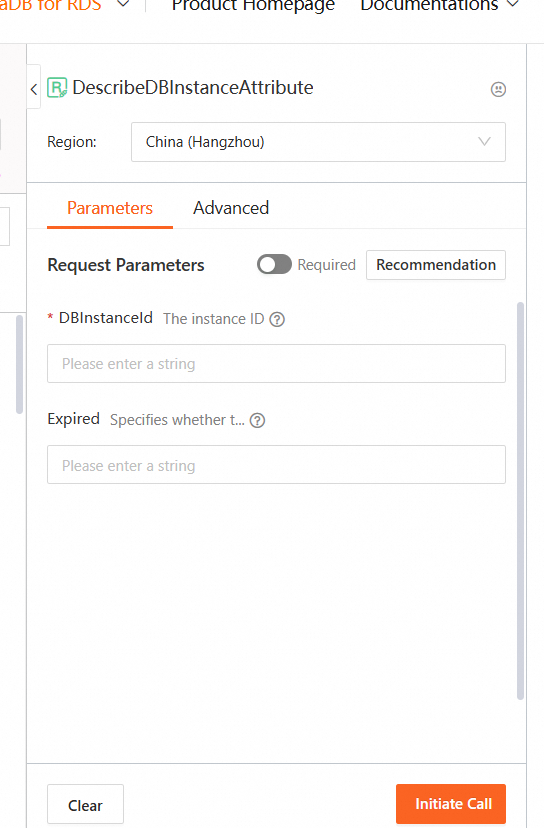

Call the DescribeDBInstanceAttribute operation to query the details of the ApsaraDB RDS instance.

Click Debug. In the pane on the right, select a region and enter the instance ID, as shown in the following figure.

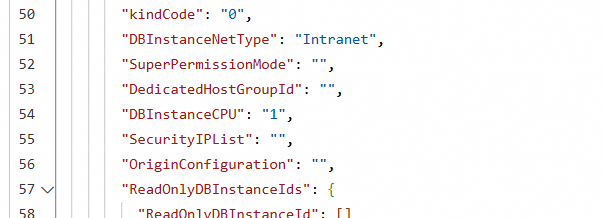

Click Call. Find VpcCloudInstanceId in the returned results.

Go to the panel for synchronizing data from DataHub to ApsaraDB RDS, and enter the VpcCloudInstanceId value that you obtained in the Instance ID field.
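If you call DescribeDBInstanceAttribute from a script instead of the debugging page, you can extract VpcCloudInstanceId from the JSON response. The following Python sketch assumes that the instance attributes are nested under Items.DBInstanceAttribute. Verify this against the response that you actually receive. The function name find_vpc_instance_id is hypothetical.

```python
import json
from typing import Optional


def find_vpc_instance_id(response_text: str) -> Optional[str]:
    """Return VpcCloudInstanceId from a DescribeDBInstanceAttribute JSON response.

    Assumption: attributes are listed under Items -> DBInstanceAttribute.
    Returns None if the field is missing or empty.
    """
    data = json.loads(response_text)
    attributes = data.get("Items", {}).get("DBInstanceAttribute", [])
    for attribute in attributes:
        vpc_id = attribute.get("VpcCloudInstanceId")
        if vpc_id:
            return vpc_id
    return None
```

Enter the returned value in the Instance ID field. A None result means that the response did not contain the field in the assumed location.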

Errors related to JAR package conflicts

Common JAR package conflicts that may occur when you use DataHub SDK for Java

InjectionManagerFactory not found

By default, DataHub SDK for Java depends on Jersey client V2.22.1. If you use a Jersey client later than V2.22.1, you must add the following dependency to your project:

<dependency>
    <groupId>org.glassfish.jersey.inject</groupId>
    <artifactId>jersey-hk2</artifactId>
    <!-- Replace xxx with the version of the Jersey client that you use. -->
    <version>xxx</version>
</dependency>

java.lang.NoSuchFieldError: EXCLUDE_EMPTY

This error occurs when the version of the jersey-common library is earlier than V2.22.1. Use jersey-common V2.22.1 or later.
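A plain string comparison is not enough to check a resolved version against a minimum such as V2.22.1, because "2.9" sorts after "2.22" as text. The following minimal Python sketch compares dotted numeric versions instead. It assumes that qualifiers such as -SNAPSHOT can be ignored, and the helper names are hypothetical. The same check works for other minimum versions, such as V4.5.2 for the HTTP client.

```python
import re
from typing import Tuple


def numeric_version(version: str) -> Tuple[int, ...]:
    """Convert a version such as "2.22.1" to (2, 22, 1).

    Assumption: only the leading dotted numeric part matters; a qualifier
    such as "-SNAPSHOT" is ignored.
    """
    match = re.match(r"\d+(?:\.\d+)*", version)
    if match is None:
        raise ValueError(f"not a numeric version: {version!r}")
    parts = [int(p) for p in match.group(0).split(".")]
    # Drop trailing zeros so that "2.22.1" and "2.22.1.0" compare as equal.
    while len(parts) > 1 and parts[-1] == 0:
        parts.pop()
    return tuple(parts)


def is_at_least(version: str, minimum: str) -> bool:
    """Return True if version >= minimum, comparing numerically part by part."""
    return numeric_version(version) >= numeric_version(minimum)
```

For example, is_at_least("2.21", "2.22.1") is False, so that jersey-common version needs an upgrade.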

Error reading entity from input stream

Cause 1: The version of the HTTP client is earlier than V4.5.2. Upgrade the version of the HTTP client to V4.5.2 or later.

Cause 2: The current version of the SDK does not support some of the data types in use. Upgrade the SDK.

jersey-apache-connector versions later than V2.22.1 contain bugs related to TCP connections.

Use jersey-apache-connector V2.22.1.

java.lang.NoSuchMethodError: okhttp3.HttpUrl.get(Ljava/lang/String;)Lokhttp3/HttpUrl;

Run the mvn dependency:tree command to check whether another dependency pulls in a conflicting version of OkHttp, for example, an earlier version that does not provide HttpUrl.get(String).
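To review the tree for a large project, you can redirect the mvn dependency:tree output to a text file and scan it. The following Python sketch is a minimal example of this approach, and the function name is hypothetical. In the default output, each artifact appears once with the version that Maven resolved. With verbose output (-Dverbose, supported by recent maven-dependency-plugin versions), conflicting versions that Maven omitted also appear. An artifact with more than one version is therefore a conflict candidate.

```python
import re
from collections import defaultdict
from typing import Dict, Set

# A Maven coordinate such as com.squareup.okhttp3:okhttp:jar:3.8.0:compile
COORDINATE = re.compile(r"[\w.\-]+(?::[\w.\-]+){3,5}")


def versions_by_artifact(tree_output: str) -> Dict[str, Set[str]]:
    """Map "groupId:artifactId" to every version seen in mvn dependency:tree output."""
    found: Dict[str, Set[str]] = defaultdict(set)
    for line in tree_output.splitlines():
        match = COORDINATE.search(line)
        if match is None:
            continue  # Not a dependency line, for example a build banner.
        parts = match.group(0).split(":")
        group_id, artifact_id = parts[0], parts[1]
        # 4 parts: groupId:artifactId:type:version (the project itself)
        # 5 parts: groupId:artifactId:type:version:scope
        # 6 parts: groupId:artifactId:type:classifier:version:scope
        version = parts[4] if len(parts) == 6 else parts[3]
        found[f"{group_id}:{artifact_id}"].add(version)
    return dict(found)
```

For example, versions_by_artifact(text).get("com.squareup.okhttp3:okhttp") shows which OkHttp versions appear in the tree.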

javax/ws/rs/core/Response$Status$Family

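Check which JAR files provide the javax.ws.rs package. For example, check whether your project depends on jsr311-api, which provides the JAX-RS 1.x API. If it does, exclude jsr311-api, because Jersey 2.x requires the JAX-RS 2.x API.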
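You can also scan the saved mvn dependency:tree output for this case. The following Python sketch reports the lines that bring in jsr311-api, but only when a JAX-RS 2.x API artifact is also present. The function name and the list of 2.x artifact coordinates are assumptions, so adjust them to your project.

```python
from typing import List

# jsr311-api ships the JAX-RS 1.x API. Jersey 2.x needs the JAX-RS 2.x API,
# published as javax.ws.rs:javax.ws.rs-api or, in its 2.x line (which still
# uses the javax.ws.rs package), jakarta.ws.rs:jakarta.ws.rs-api.
JAX_RS_1 = "javax.ws.rs:jsr311-api:"
JAX_RS_2 = ("javax.ws.rs:javax.ws.rs-api:", "jakarta.ws.rs:jakarta.ws.rs-api:")


def jax_rs_conflicts(tree_output: str) -> List[str]:
    """Return dependency:tree lines that add jsr311-api next to a JAX-RS 2.x API."""
    lines = tree_output.splitlines()
    has_v2 = any(marker in line for line in lines for marker in JAX_RS_2)
    if not has_v2:
        return []
    return [line.strip() for line in lines if JAX_RS_1 in line]
```

Each reported line shows where jsr311-api enters the tree. The dependency one level above it is the one that needs an exclusion.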

Other errors

Parse body failed, Offset: 0

In most cases, this error occurs when data is written. Earlier versions of Apsara Stack DataHub do not support binary data transmission based on Protocol Buffers, but some SDKs enable binary transmission by default. In this case, you must manually disable binary data transmission in the client, as shown for each client below.
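If your code connects to DataHub servers of different versions, you can derive the binary setting from the server version instead of hard-coding it. The following Python sketch assumes that you already know the server version as a string. The 2.12 threshold follows the Python SDK comment in this section, which uses JSON mode for servers of V2.11 and earlier. Both helper names are hypothetical.

```python
from typing import Tuple


def major_minor(version: str) -> Tuple[int, int]:
    """Parse the major and minor parts of a version such as "2.12.3"."""
    parts = version.split(".")
    if len(parts) < 2:
        raise ValueError(f"expected at least major.minor, got {version!r}")
    return int(parts[0]), int(parts[1])


def should_enable_binary(server_version: str) -> bool:
    """Enable binary (Protocol Buffers) transmission only for servers at V2.12 or later."""
    return major_minor(server_version) >= (2, 12)
```

With the Python SDK, pass the result as enable_pb, for example DataHub(access_id, access_key, endpoint, enable_pb=should_enable_binary(server_version)).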

Java SDK

datahubClient = DatahubClientBuilder.newBuilder()
        .setDatahubConfig(
                new DatahubConfig(endpoint,
                        new AliyunAccount(accessId, accessKey),
                        // Specify whether to enable binary data transmission. Only DataHub
                        // servers of V2.12 and later support it, so pass false for earlier servers.
                        false))
        .build();

Python SDK

from datahub import DataHub

# JSON mode: for DataHub server V2.11 and earlier
dh = DataHub(access_id, access_key, endpoint, enable_pb=False)

Go SDK

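account := datahub.NewAliyunAccount(accessId, accessKey)
config := datahub.NewDefaultConfig()
// Disable binary data transmission for DataHub servers earlier than V2.12.
config.EnableBinary = false
dh := datahub.NewClientWithConfig(endpoint, config, account)

Logstash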

Set the value of enable_pb to false.

Request body size exceeded

The error message indicates that the size of the request body exceeds the upper limit. For more information, see Limits.
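One way to stay under the limit is to split the records across several requests based on their serialized size. The following Python sketch groups records that are already serialized. max_bytes is an assumption: set it below the limit documented in Limits and leave headroom for request overhead. The function name is hypothetical.

```python
from typing import Iterable, Iterator, List


def batches_by_size(payloads: Iterable[bytes], max_bytes: int) -> Iterator[List[bytes]]:
    """Group serialized records so that each batch totals at most max_bytes."""
    batch: List[bytes] = []
    batch_size = 0
    for payload in payloads:
        if len(payload) > max_bytes:
            # A single oversized record cannot be fixed by batching.
            raise ValueError(f"a single record of {len(payload)} bytes exceeds max_bytes")
        if batch and batch_size + len(payload) > max_bytes:
            yield batch  # Close the current batch before it overflows.
            batch, batch_size = [], 0
        batch.append(payload)
        batch_size += len(payload)
    if batch:
        yield batch
```

Each yielded batch is then sent in its own write request.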

Record field size not match.

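The error message indicates that the schema of the records that you write does not match the schema of the topic, for example, because the number of fields differs. We recommend that you call the getTopic method to obtain the topic schema and build your records based on it.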
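Once you have the field names from the topic schema, you can build every record from them so that the field count always matches. The following Python sketch assumes that the field names are listed in schema order and that fields missing from your data are nullable. The function name is hypothetical.

```python
from typing import Any, Dict, List, Sequence


def to_ordered_values(field_names: Sequence[str], row: Dict[str, Any]) -> List[Any]:
    """Return one value per schema field, in schema order.

    Keys that are not in the schema raise ValueError. Missing fields become
    None, which assumes that those fields are nullable.
    """
    unknown = sorted(set(row) - set(field_names))
    if unknown:
        raise ValueError(f"fields not in topic schema: {unknown}")
    return [row.get(name) for name in field_names]
```

The returned list always has exactly as many values as the topic has fields.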

The limit of query rate is exceeded.

To ensure efficient use of resources, DataHub limits the number of queries per second (QPS) that it processes. This error occurs if you read or write data more frequently than the limit allows. We recommend that you read and write data in batches. For example, you can write the accumulated data in one batch every minute and read 1,000 records in each read request.
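The following Python sketch combines batching with retries. It sends records in fixed-size batches and retries a throttled batch with exponential backoff. send is a placeholder for your SDK write call. RateLimitError is a hypothetical stand-in for the exception that your SDK raises for this error. The default batch size of 1,000 is only illustrative.

```python
import time
from typing import Any, Callable, Sequence


class RateLimitError(Exception):
    """Hypothetical stand-in for the SDK's 'limit of query rate is exceeded' error."""


def write_with_backoff(
    send: Callable[[Sequence[Any]], None],
    records: Sequence[Any],
    batch_size: int = 1000,  # Illustrative value, not a documented limit.
    max_retries: int = 5,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Send records in batches and return the number of batches sent."""
    batches = 0
    for start in range(0, len(records), batch_size):
        batch = records[start:start + batch_size]
        for attempt in range(max_retries + 1):
            try:
                send(batch)
                break
            except RateLimitError:
                if attempt == max_retries:
                    raise  # Give up after the last retry.
                # Wait 0.5 s, 1 s, 2 s, ... before retrying the same batch.
                sleep(base_delay * (2 ** attempt))
        batches += 1
    return batches
```

The sleep parameter is injectable so that the retry schedule can be tested without real delays.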

Num of topics exceed limit

In the latest version of DataHub, a project can contain a maximum of 20 topics.

SeekOutOfRange

The specified offset is invalid or has expired.
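When a stored offset has expired, the consumer has to decide where to resume. The following Python sketch shows one possible policy under stated assumptions. oldest and latest are the earliest and newest sequence numbers still retained in the shard, obtained through whatever means your SDK offers. An expired request resumes from the oldest retained record and accepts that the records in between are gone. The function is hypothetical.

```python
def choose_start_sequence(requested: int, oldest: int, latest: int) -> int:
    """Clamp a requested sequence number into the retained range [oldest, latest]."""
    if oldest > latest:
        raise ValueError("oldest must not exceed latest")
    if requested < oldest:
        # The requested data has expired; resume from the oldest retained record.
        return oldest
    if requested > latest:
        # The requested position does not exist yet; use the newest record.
        return latest
    return requested
```

Whether skipping expired data is acceptable depends on your application. Log the gap if you need to account for it.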

Offset session has changed

A subscription cannot be consumed by multiple consumers at the same time. Check whether your program uses multiple consumers to consume the same subscription.
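Within a single process, you can catch this mistake early by letting each consumer register the subscription it uses. The following Python sketch is a hypothetical helper, not part of any DataHub SDK. It cannot detect consumers that run in other processes or on other machines.

```python
import threading
from typing import Dict


class SubscriptionRegistry:
    """Process-local guard that lets only one consumer own a subscription at a time."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._owners: Dict[str, str] = {}

    def acquire(self, subscription_id: str, consumer_name: str) -> None:
        """Claim a subscription, or raise RuntimeError if another consumer owns it."""
        with self._lock:
            owner = self._owners.get(subscription_id)
            if owner is not None and owner != consumer_name:
                raise RuntimeError(
                    f"subscription {subscription_id} is already consumed by {owner}"
                )
            self._owners[subscription_id] = consumer_name

    def release(self, subscription_id: str, consumer_name: str) -> None:
        """Give up a subscription if this consumer currently owns it."""
        with self._lock:
            if self._owners.get(subscription_id) == consumer_name:
                del self._owners[subscription_id]
```

Call acquire before a consumer starts reading and release when it stops.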

Can I synchronize data of the DECIMAL type to MaxCompute?

Yes. MaxCompute supports DECIMAL data for which no precision is specified. By default, such a DECIMAL value can contain up to 18 digits on each side of the decimal point.
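To check values against the default limit of 18 digits on each side of the decimal point before synchronization, you can inspect their digits with the Python decimal module. The following is a minimal sketch with a hypothetical function name. It counts trailing zeros after the decimal point as fractional digits, which is a conservative assumption.

```python
from decimal import Decimal


def fits_default_decimal(value: Decimal, max_digits_each_side: int = 18) -> bool:
    """Return True if value has at most 18 integer digits and 18 fractional digits."""
    if not value.is_finite():
        return False  # NaN and infinity are not valid DECIMAL values.
    _sign, digits, exponent = value.as_tuple()
    # A negative exponent counts the digits to the right of the decimal point.
    fractional = max(0, -exponent)
    integer = max(0, len(digits) + exponent)
    return integer <= max_digits_each_side and fractional <= max_digits_each_side
```

For example, fits_default_decimal(Decimal("123.45")) is True.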

What does the addAttribute method do?

You can use the addAttribute() method to add custom attributes to a record based on your business requirements. Attributes are optional.

How do I delete data from a topic?

DataHub does not allow you to delete data from a topic. However, you can reset offsets to invalidate data.

The data within a shard is stored in a file located at the specified Object Storage Service (OSS) path. The name of the file is randomly generated. If the file size exceeds 5 GB, another file is created to store the data from the shard. Can I modify the file size?

No, you cannot modify the file size.

What can I do if my AnalyticDB for MySQL instance cannot access a public endpoint?

You must apply for an internal endpoint of AnalyticDB for MySQL. Connect to your AnalyticDB for MySQL database, execute the alter database set intranet_vip = true statement, and then execute the select internal_domain, internal_port from information_schema.schemata statement to query the internal endpoint.